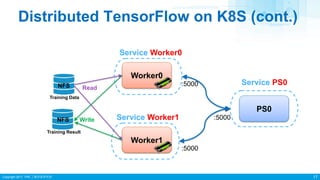

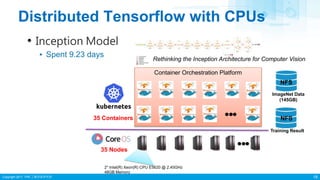

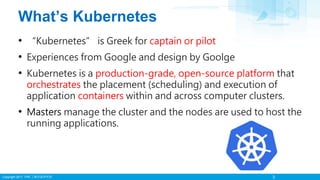

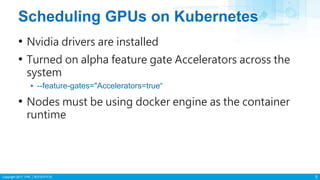

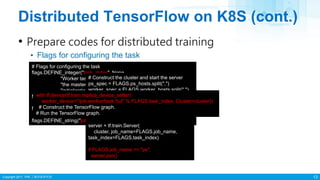

This document discusses running distributed TensorFlow on Kubernetes. It introduces Kubernetes as a container orchestration platform that schedules containers across a cluster of machines. It then covers scheduling GPUs on Kubernetes nodes and introduces distributed TensorFlow for model replication and training. The remainder covers building Docker images for distributed TensorFlow jobs, specifying job configurations in Kubernetes YAML templates, and running distributed TensorFlow workflows on Kubernetes with workers, parameter servers, and shared storage.
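The last part of that outline (YAML templates, workers, parameter servers, GPUs, shared storage) can be sketched programmatically. Below is a minimal Python sketch that emits the equivalent Kubernetes manifests as JSON, which `kubectl apply -f` also accepts. It assumes one Service plus one Job per TensorFlow task, port 2222, the `nvidia.com/gpu` resource name exposed by the NVIDIA device plugin (2017-era clusters used an older alpha resource name), and an existing PersistentVolumeClaim for shared data. The `tf-<job>-<index>` naming scheme, the claim name, and the `/data` mount path are hypothetical. The `--job_name`, `--task_index`, `--ps_hosts`, and `--worker_hosts` flags follow the TF1-era `mnist_replica.py` example; check them against the script actually baked into the image.

```python
import json
from typing import Dict, List, Optional

PORT = 2222  # assumed gRPC port for every TensorFlow task


def task_hosts(job: str, count: int, port: int = PORT) -> List[str]:
    # One DNS name per task, e.g. "tf-worker-0:2222", resolved via a per-task Service.
    return [f"tf-{job}-{i}:{port}" for i in range(count)]


def service_manifest(job: str, index: int, port: int = PORT) -> Dict:
    name = f"tf-{job}-{index}"
    return {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {"name": name},
        "spec": {"selector": {"app": name}, "ports": [{"port": port, "targetPort": port}]},
    }


def job_manifest(job: str, index: int, ps_hosts: List[str], worker_hosts: List[str],
                 image: str, gpus: int = 0, data_pvc: Optional[str] = None,
                 port: int = PORT) -> Dict:
    name = f"tf-{job}-{index}"
    container: Dict = {
        "name": "tensorflow",
        "image": image,
        # "args" is appended to the image ENTRYPOINT, i.e. python /mnist_replica.py ...
        "args": [
            f"--job_name={job}",
            f"--task_index={index}",
            "--ps_hosts=" + ",".join(ps_hosts),
            "--worker_hosts=" + ",".join(worker_hosts),
        ],
        "ports": [{"containerPort": port}],
    }
    pod_spec: Dict = {"restartPolicy": "Never", "containers": [container]}
    if gpus:
        # Extended resource exposed by the NVIDIA device plugin.
        container["resources"] = {"limits": {"nvidia.com/gpu": gpus}}
    if data_pvc:
        # Shared storage: every task mounts the same PersistentVolumeClaim at /data.
        container["volumeMounts"] = [{"name": "shared-data", "mountPath": "/data"}]
        pod_spec["volumes"] = [
            {"name": "shared-data", "persistentVolumeClaim": {"claimName": data_pvc}}
        ]
    return {
        "apiVersion": "batch/v1",
        "kind": "Job",
        "metadata": {"name": name},
        "spec": {"template": {"metadata": {"labels": {"app": name}}, "spec": pod_spec}},
    }


def cluster_manifests(num_ps: int, num_workers: int, image: str,
                      gpus_per_worker: int = 0, data_pvc: Optional[str] = None) -> Dict:
    ps_hosts = task_hosts("ps", num_ps)
    worker_hosts = task_hosts("worker", num_workers)
    items: List[Dict] = []
    # Parameter servers get no GPU; each worker gets gpus_per_worker.
    for job, count, gpus in (("ps", num_ps, 0), ("worker", num_workers, gpus_per_worker)):
        for i in range(count):
            items.append(service_manifest(job, i))
            items.append(job_manifest(job, i, ps_hosts, worker_hosts, image, gpus, data_pvc))
    # A v1 List lets "kubectl apply -f" create everything from one file.
    return {"apiVersion": "v1", "kind": "List", "items": items}


if __name__ == "__main__":
    print(json.dumps(cluster_manifests(1, 2, "macchiang/mnist:v7", gpus_per_worker=1), indent=2))
```

If the script is saved under a hypothetical name such as `gen_manifests.py`, `python gen_manifests.py > tf-cluster.json && kubectl apply -f tf-cluster.json` would create one Service and one Job per task. The per-task Service is what gives each pod the stable DNS name that the comma-separated host lists rely on.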

![Copyright 2017 ITRI 工業技術研究院

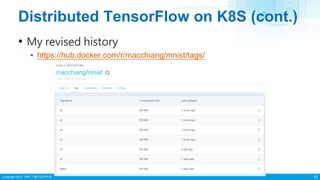

Distributed TensorFlow on K8S (cont.)

14

• Build docker image

▪ Prepare Dockerfile

▪ Build docker image

docker build -t <image_name>:v1 -f Dockerfile .

docker build -t macchiang/mnist:v7 -f Dockerfile .

docker push <image_name>:v1 Push image to docker hub

docker push macchiang/mnist:v7

FROM tensorflow/tensorflow:latest-gpu

COPY mnist_replicatensorflow/tensorflow.py /

ENTRYPOINT ["python", "/mnist_replica.py"]](https://image.slidesharecdn.com/distributedtensorflowonkubernetes-171024083707/85/Distributed-tensorflow-on-kubernetes-14-320.jpg)
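The build and push steps can be scripted so that both commands use exactly the same image reference. This is a minimal Python sketch, assuming the Docker CLI is on `PATH` and `docker login` has already been run for the target Docker Hub account. The helper names `docker_commands` and `build_and_push` are hypothetical, and the name and tag checks are a simplified approximation of Docker's rules, not a full reference validator.

```python
import re
import shlex
import subprocess
from typing import List

# Simplified checks (assumption): repository names must be lowercase, and tags are
# 1-128 chars of letters, digits, "_", "." and "-", not starting with "." or "-".
_TAG_RE = re.compile(r"[A-Za-z0-9_][A-Za-z0-9_.-]{0,127}")


def docker_commands(repo: str, tag: str, dockerfile: str = "Dockerfile",
                    context: str = ".") -> List[List[str]]:
    if repo != repo.lower():
        raise ValueError(f"repository name must be lowercase: {repo!r}")
    if not _TAG_RE.fullmatch(tag):
        raise ValueError(f"invalid tag: {tag!r}")
    image = f"{repo}:{tag}"
    # The same image string is used for build and push.
    return [
        ["docker", "build", "-t", image, "-f", dockerfile, context],
        ["docker", "push", image],
    ]


def build_and_push(repo: str, tag: str, dry_run: bool = True) -> List[List[str]]:
    cmds = docker_commands(repo, tag)
    for cmd in cmds:
        print("$", shlex.join(cmd))  # shlex.join needs Python 3.8+
        if not dry_run:
            # check=True raises CalledProcessError, so a failed build skips the push.
            subprocess.run(cmd, check=True)
    return cmds


if __name__ == "__main__":
    build_and_push("macchiang/mnist", "v7")  # dry run: only prints the commands
```

Calling `build_and_push("macchiang/mnist", "v7", dry_run=False)` would run the two commands shown on the slide in order. Deriving both commands from one `image` string avoids building one tag and accidentally pushing another.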