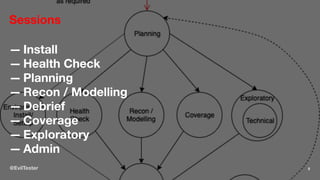

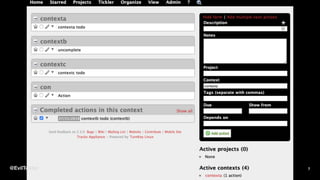

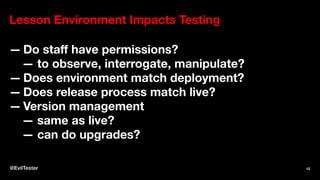

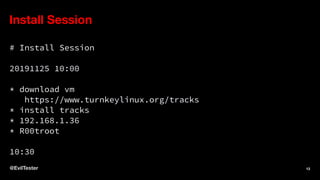

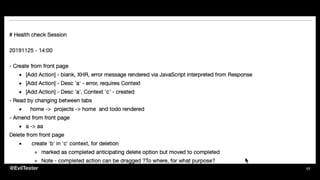

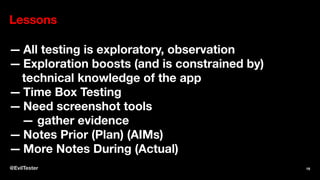

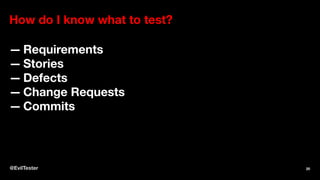

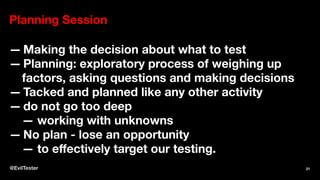

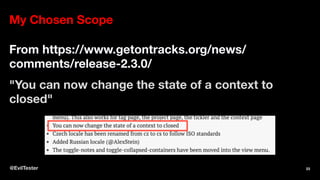

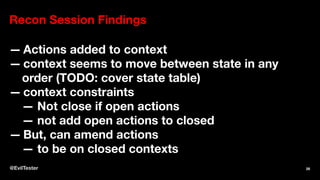

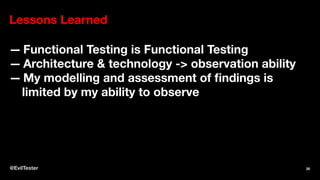

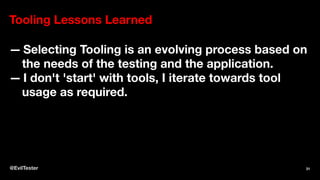

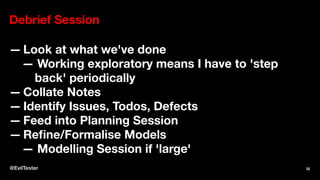

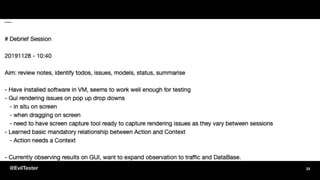

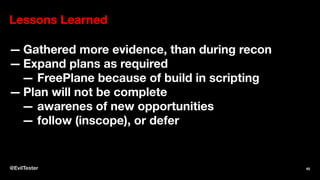

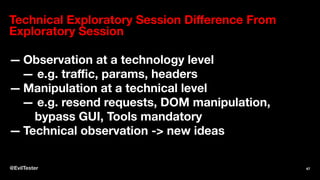

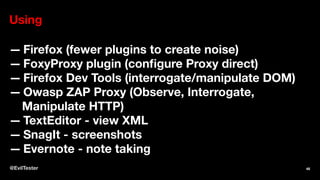

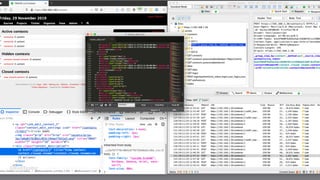

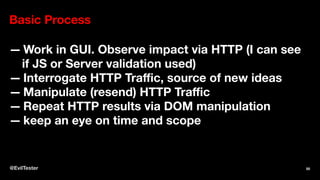

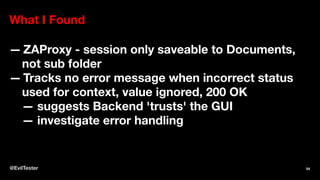

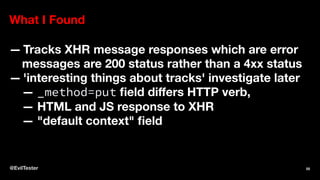

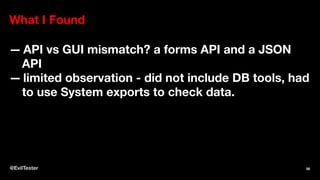

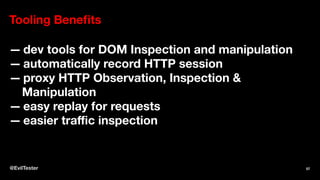

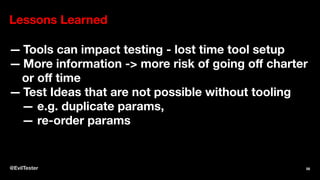

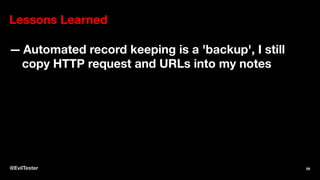

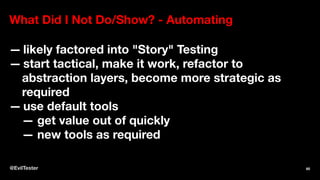

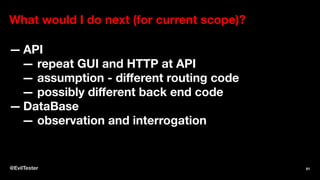

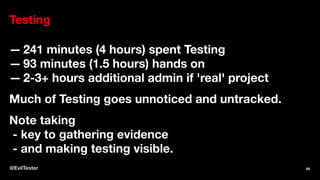

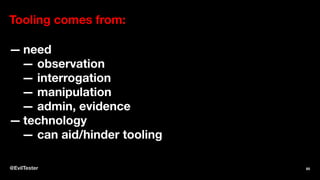

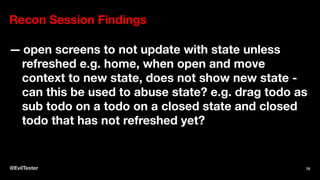

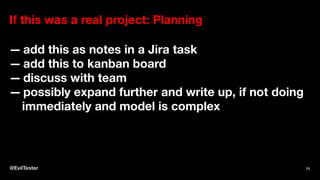

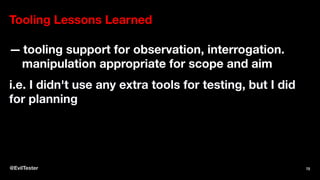

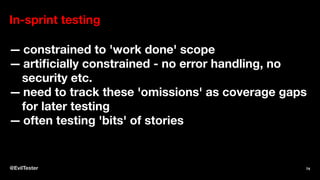

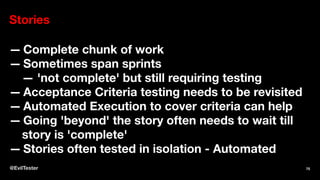

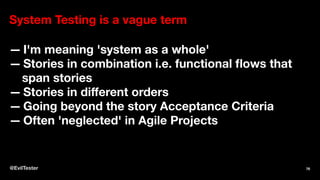

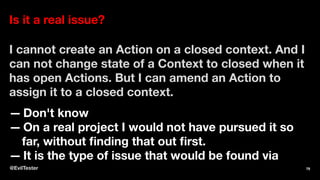

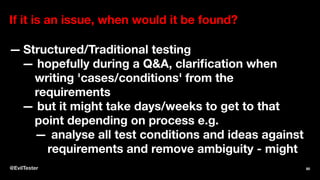

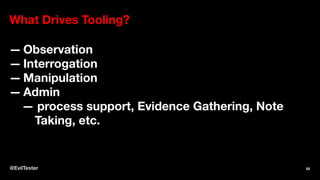

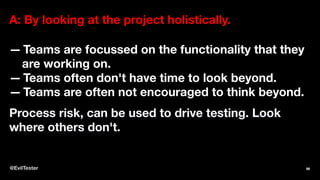

Alan Richardson's presentation at DevFest 2019 covered practical exploratory testing of an open-source web application, emphasizing the thought processes, tools, and strategies used when testing a real application. He highlighted the importance of planning, a thorough understanding of the technology, and effective documentation for managing risk and driving future testing. Key lessons from his 'micro' case study include the value of exploratory techniques, the need for adaptable tooling, and the need for a holistic approach to understanding system behavior.