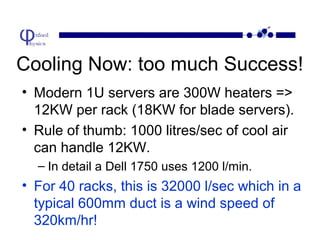

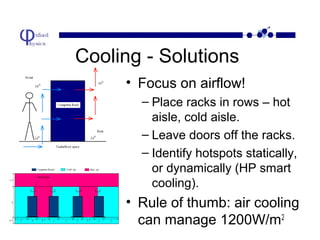

This document summarizes the requirements for a high-density computer room to house rack-mounted servers at Oxford University. It explains why specialized computer rooms are needed: historically for security, convenience and equipment size, and today because densely packed servers have specialized cooling, humidity and power requirements. It outlines the cooling challenges posed by increased server power consumption and proposes solutions such as optimized airflow and water cooling. Finally, it estimates the infrastructure costs, including £400k for a 40-rack room, which would represent 25 to 50% of total project costs. This highlights the importance of efficient computer facilities for high-performance computing.
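The cost figures in the summary can be sanity-checked with simple arithmetic. The sketch below is illustrative only. It assumes that the £400k room cost is the entire infrastructure cost and that the 25 to 50% share applies to that figure. Because the summary says the infrastructure costs *include* the £400k, the real totals may be higher, so the computed project totals are best read as lower bounds. The function names are hypothetical.

```python
def per_rack_cost(room_cost_gbp: float, racks: int) -> float:
    """Average infrastructure cost per rack (hypothetical helper)."""
    return room_cost_gbp / racks


def implied_total_range(infra_cost_gbp: float,
                        low_share: float,
                        high_share: float) -> tuple[float, float]:
    """Implied total project cost if infrastructure is a given share of it.

    Assumption: infra_cost_gbp is the whole infrastructure cost.
    Since total = infra / share, the larger share gives the smaller total.
    Returns (smallest_total, largest_total).
    """
    return infra_cost_gbp / high_share, infra_cost_gbp / low_share


if __name__ == "__main__":
    print(per_rack_cost(400_000, 40))                   # 10000.0
    print(implied_total_range(400_000, 0.25, 0.50))     # (800000.0, 1600000.0)
```

Under these assumptions, the 40-rack room works out to about £10k of infrastructure per rack. A 25 to 50% share would then imply a total project cost of roughly £0.8M to £1.6M.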