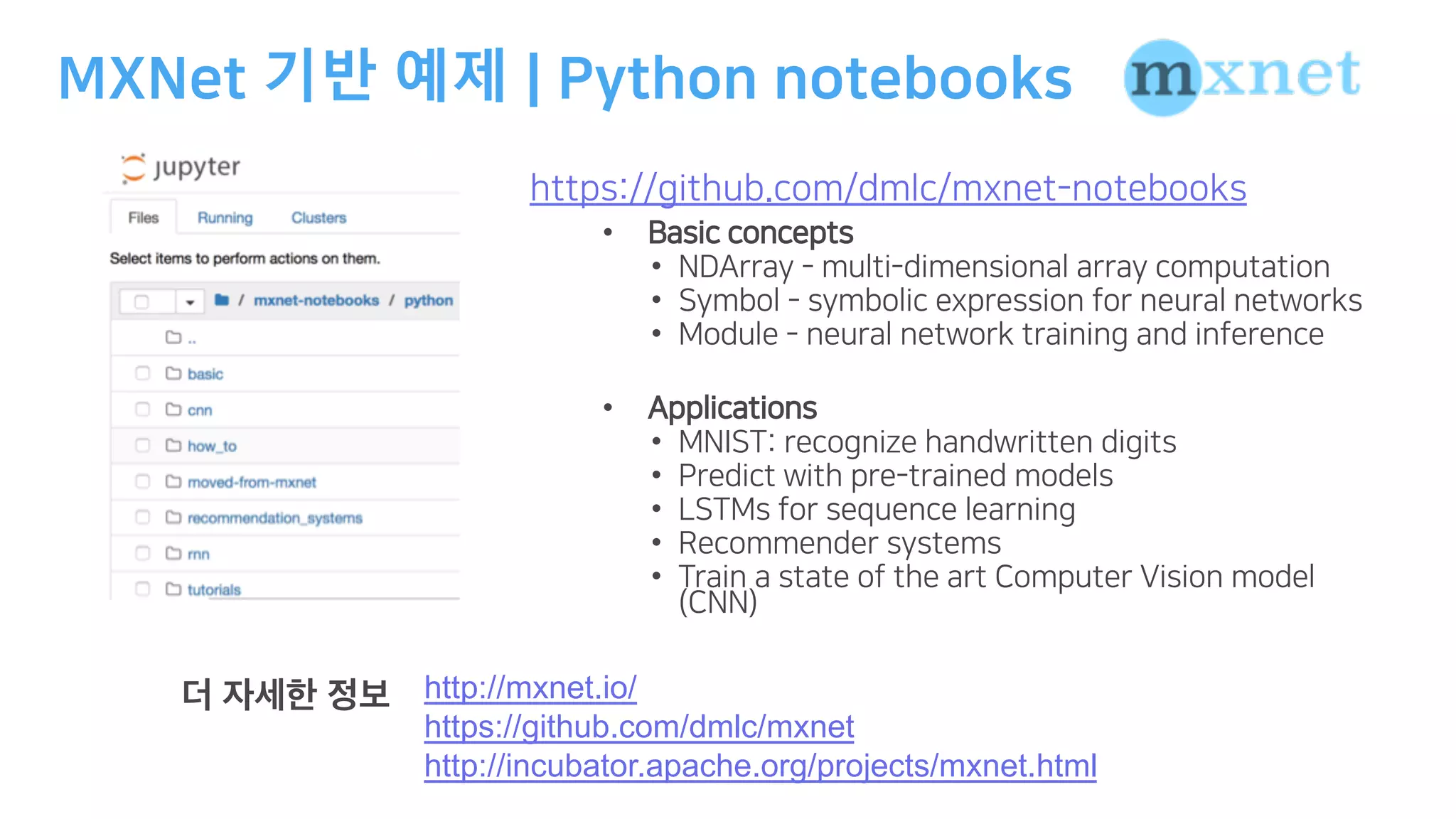

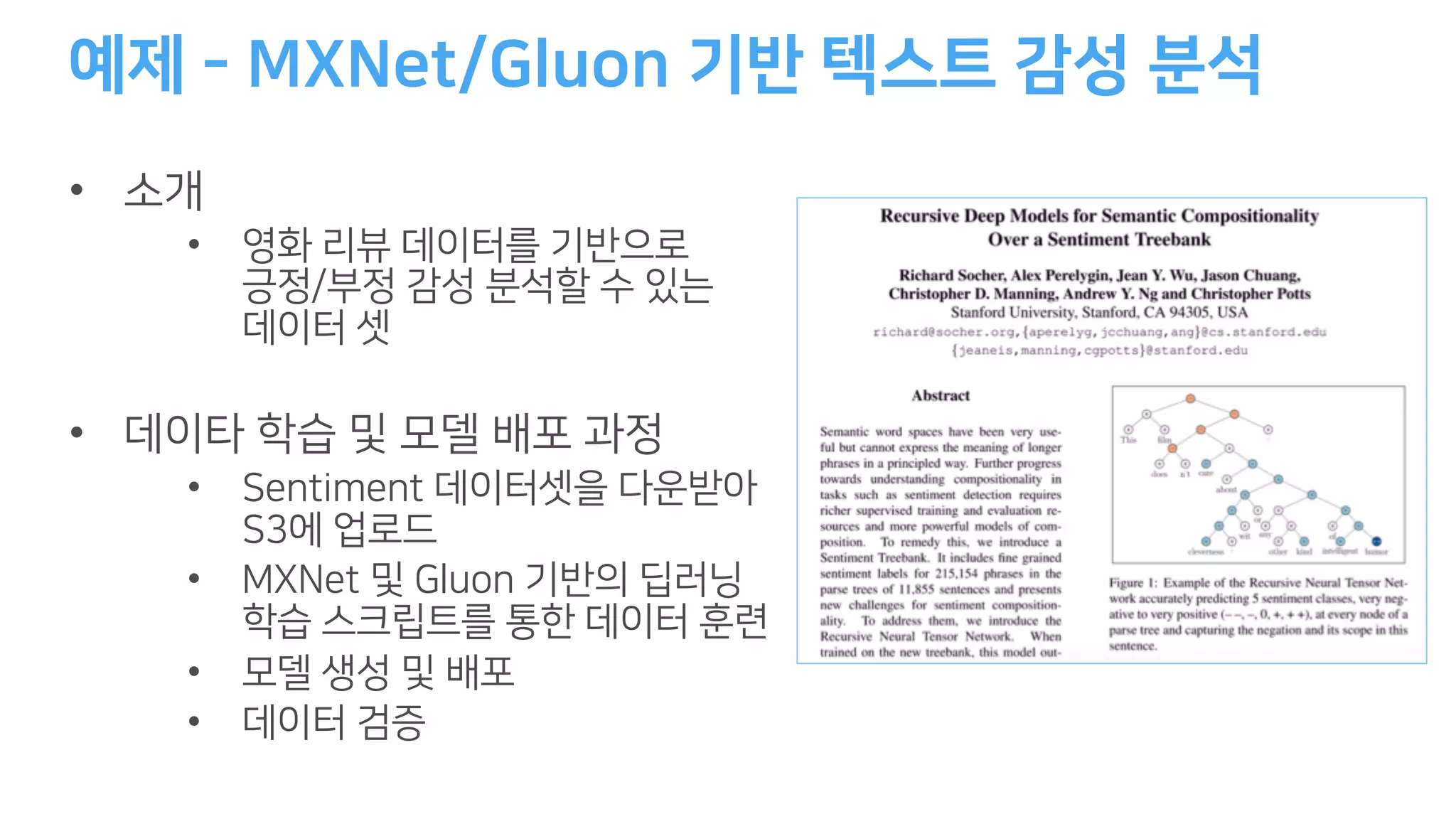

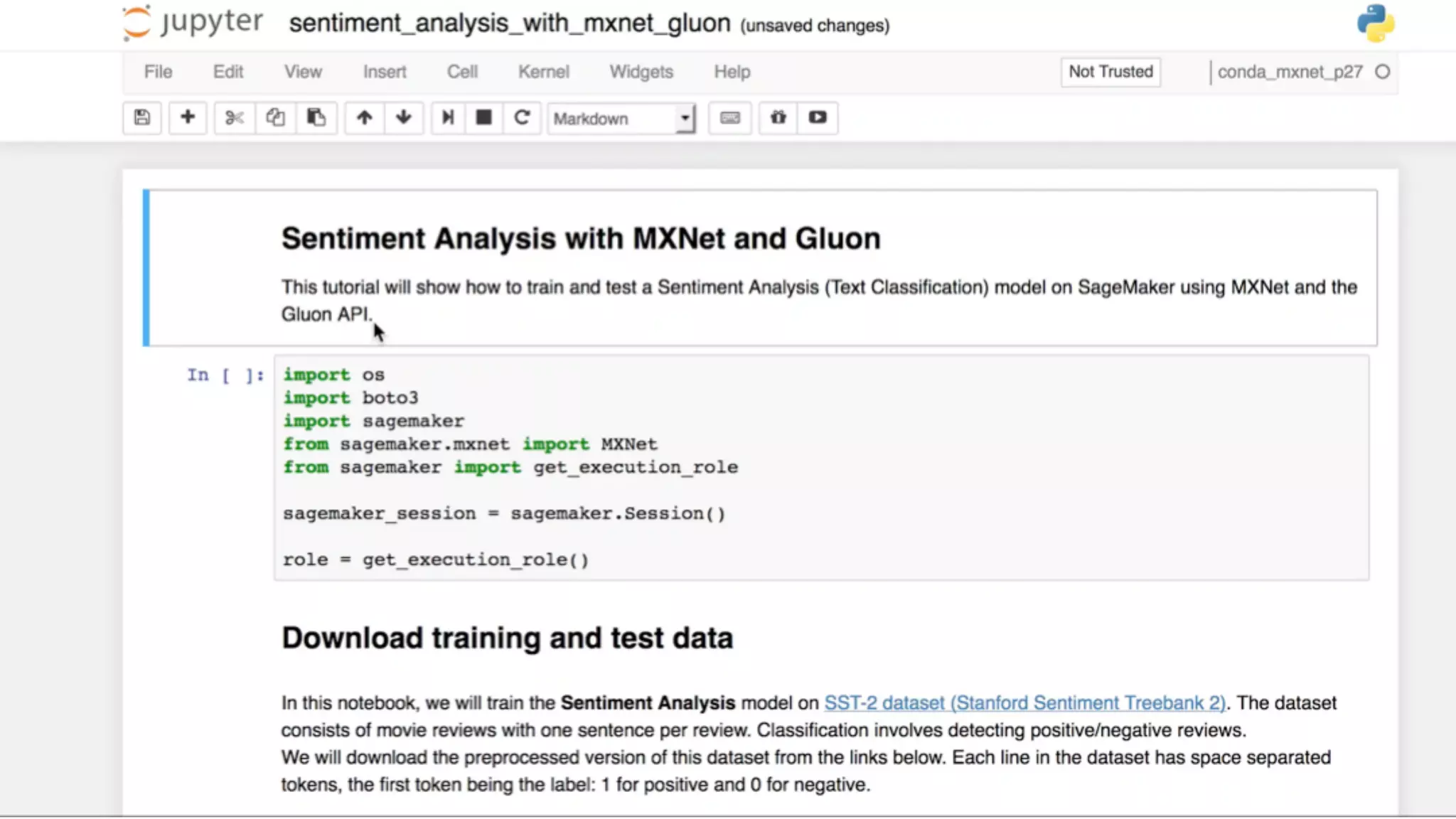

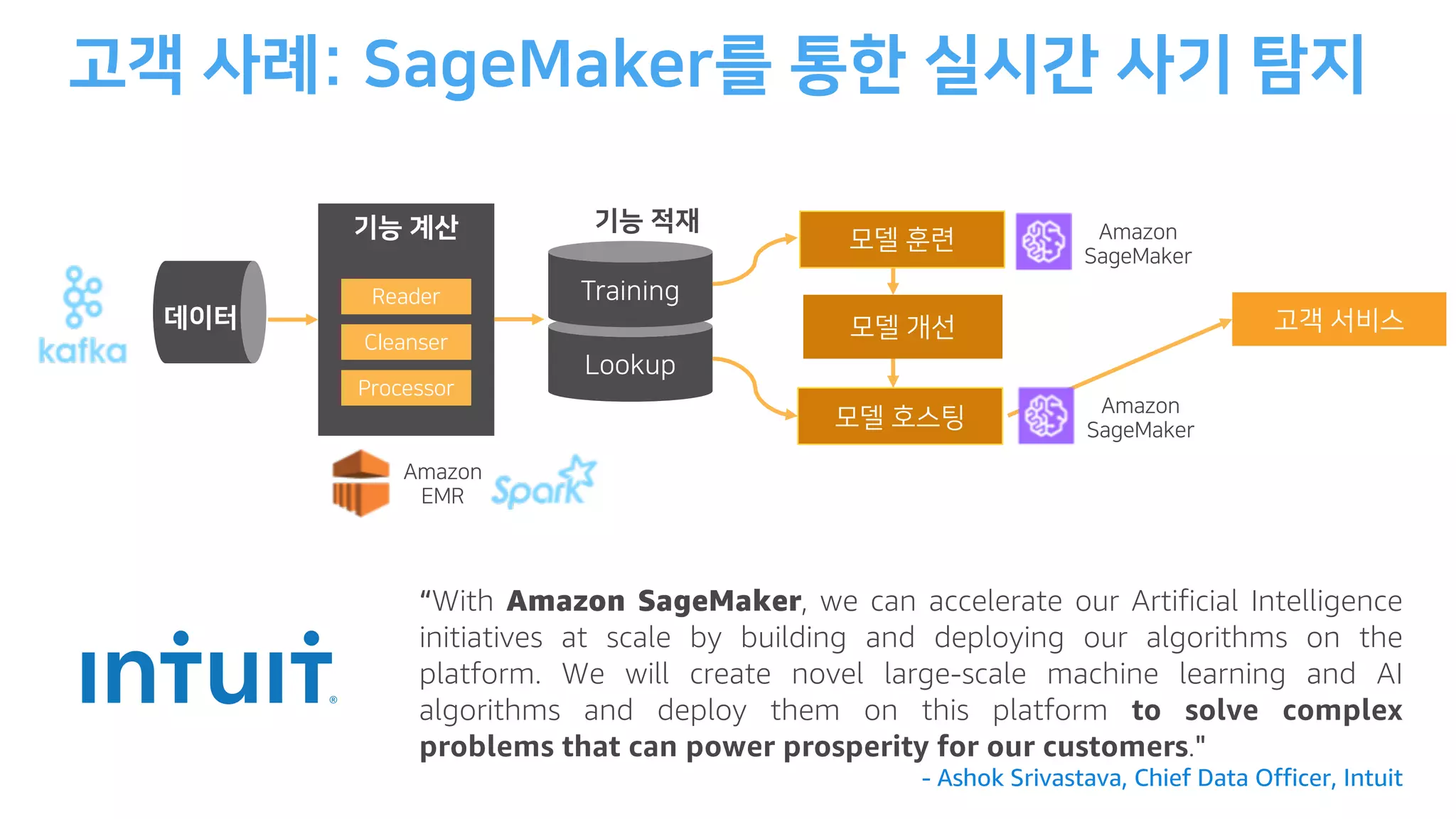

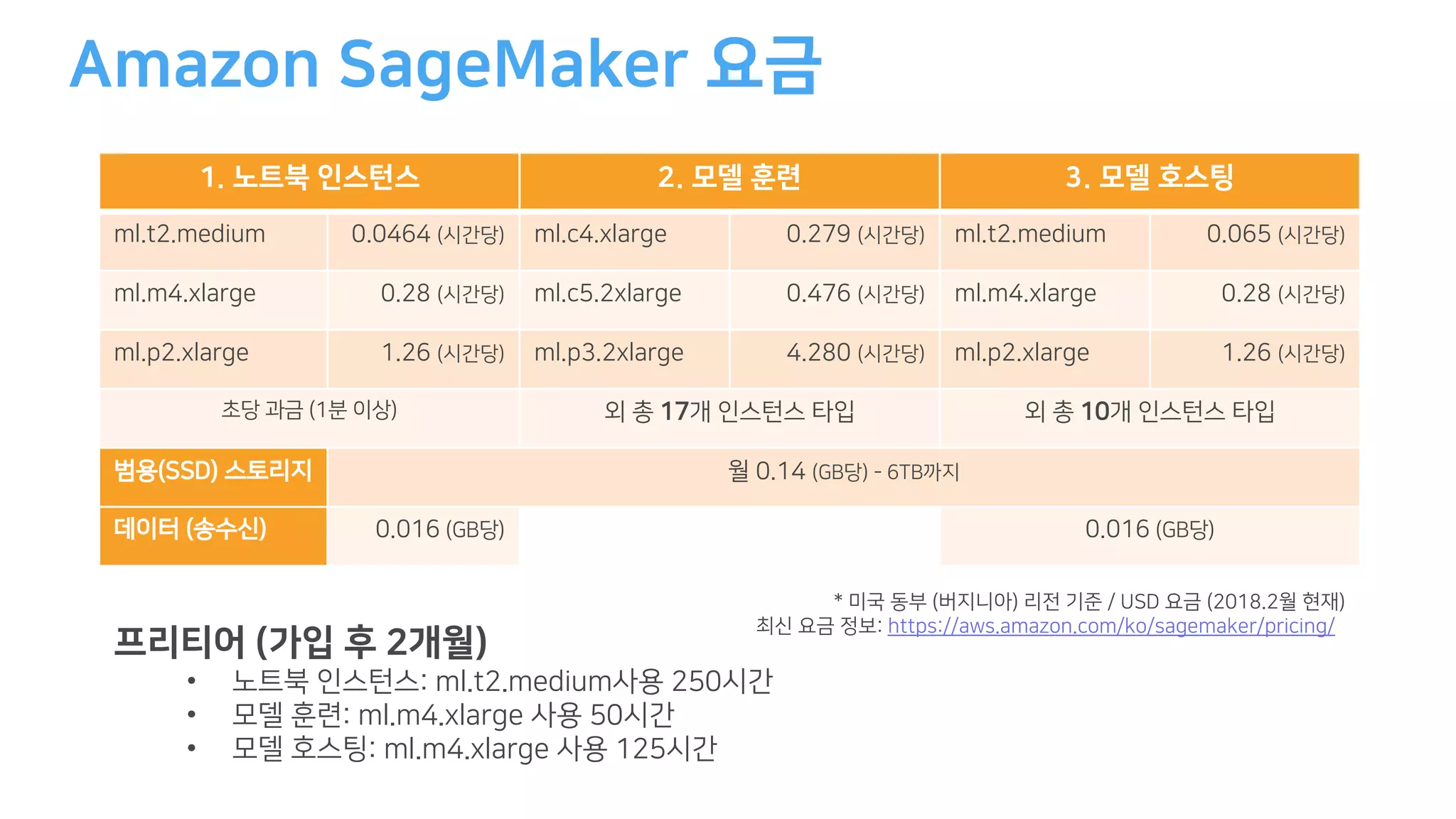

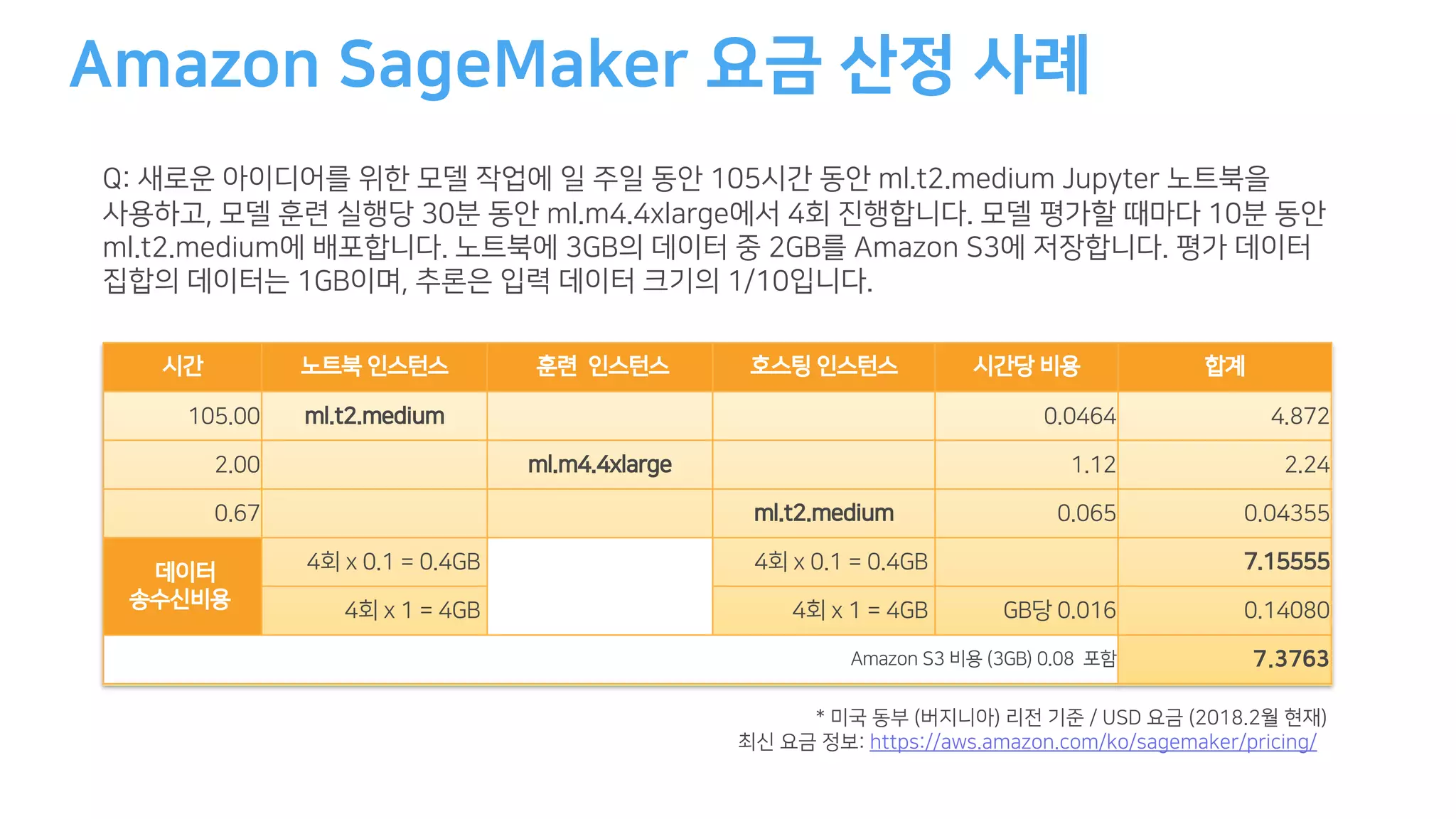

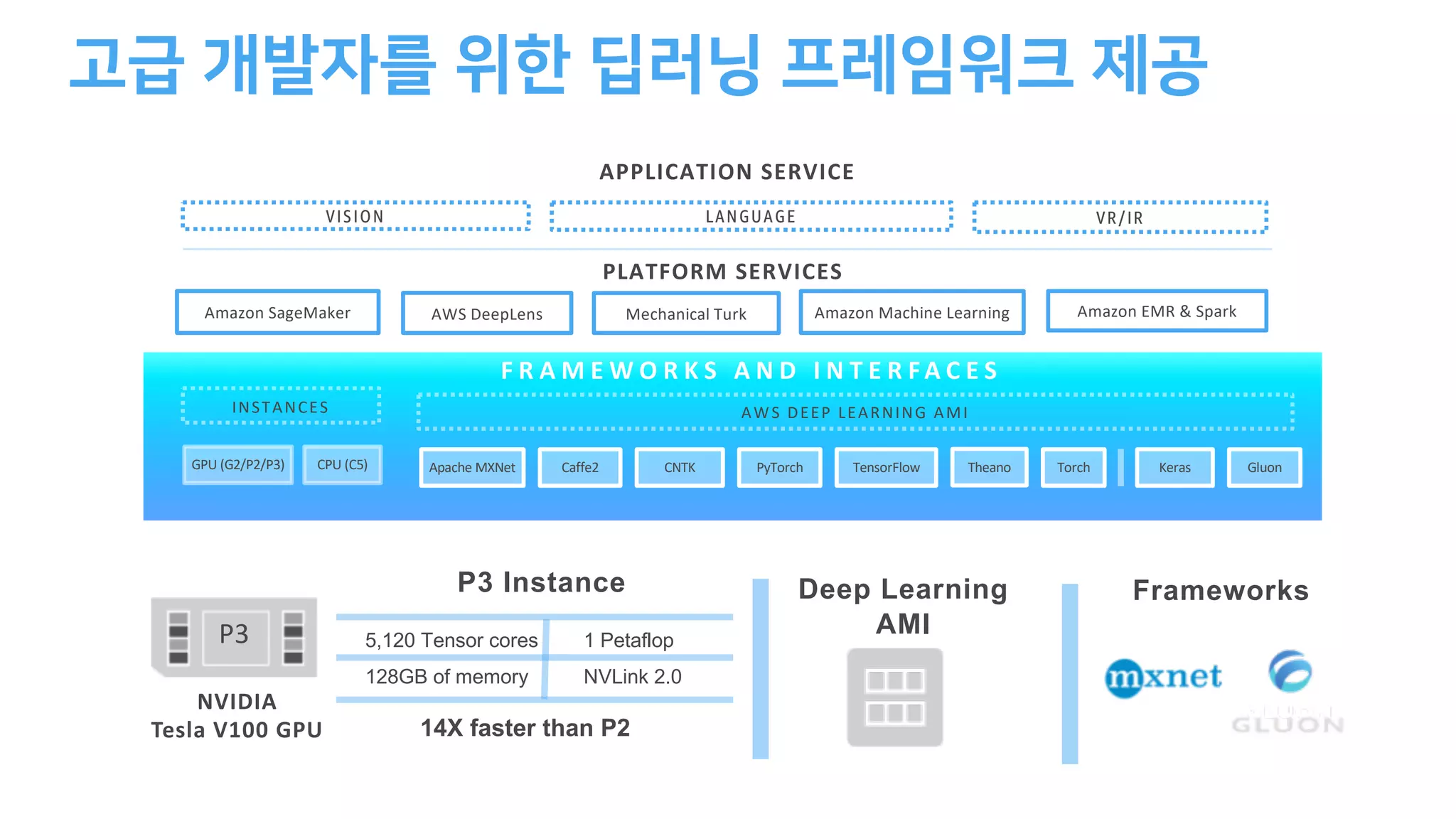

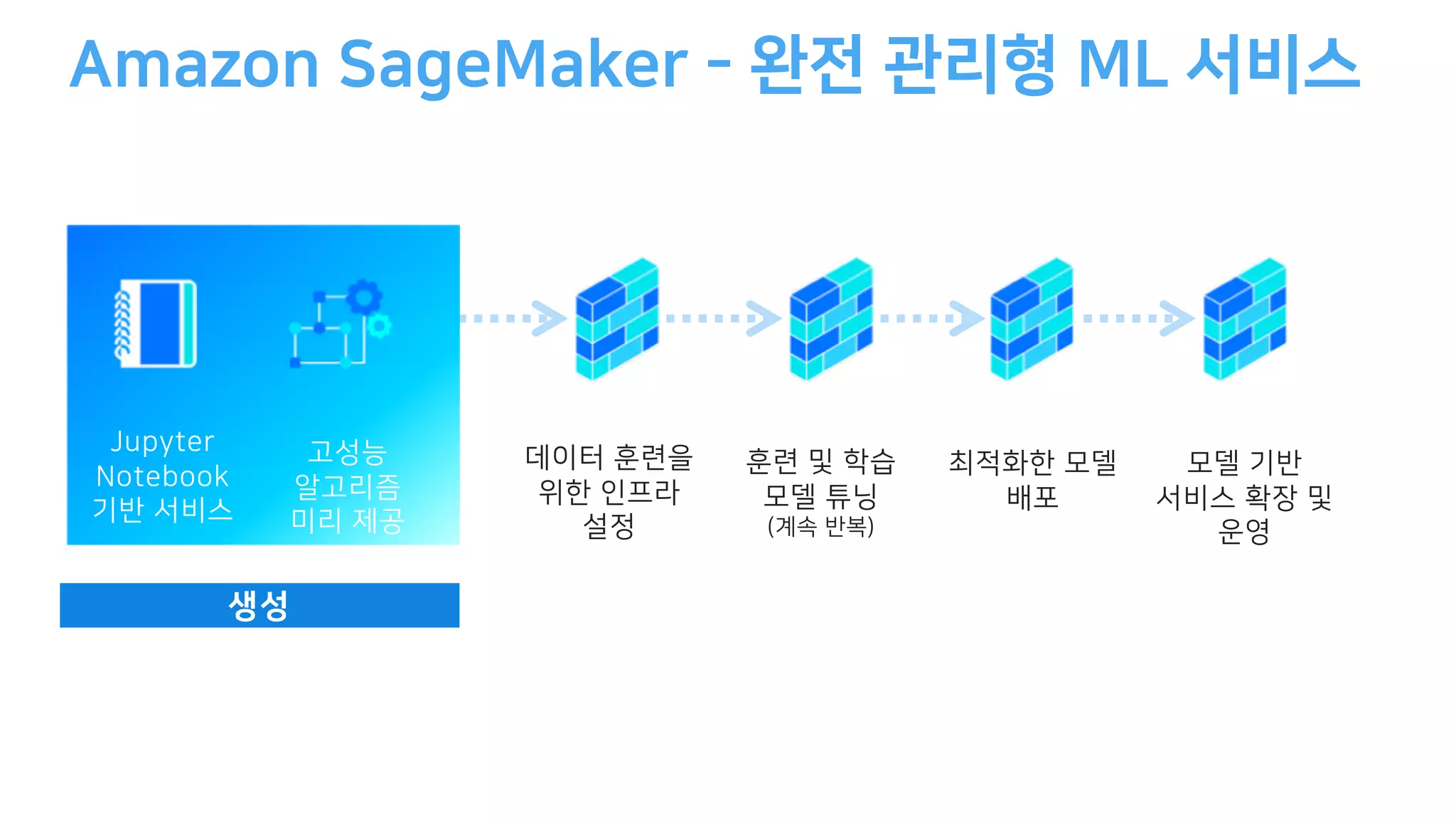

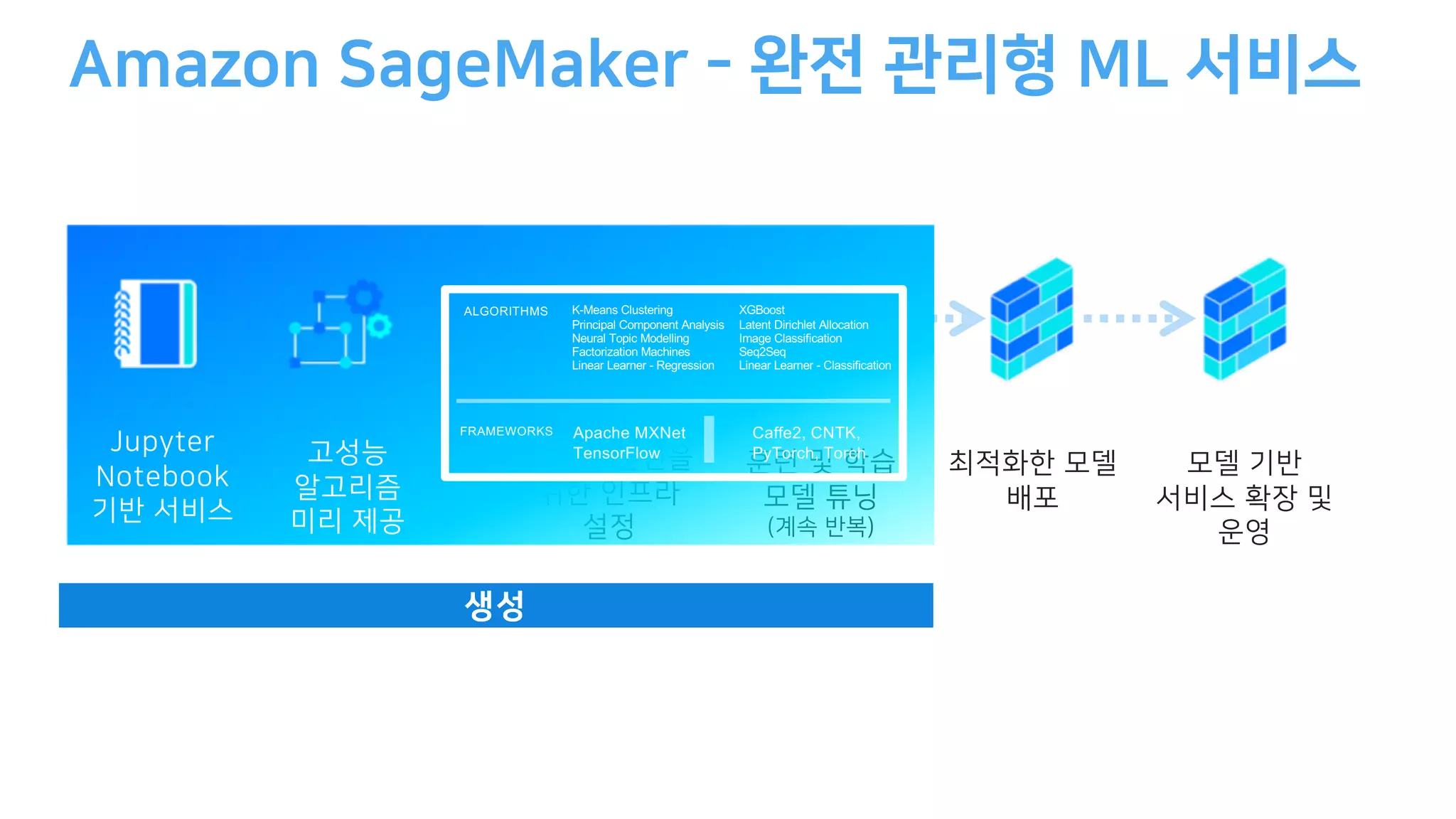

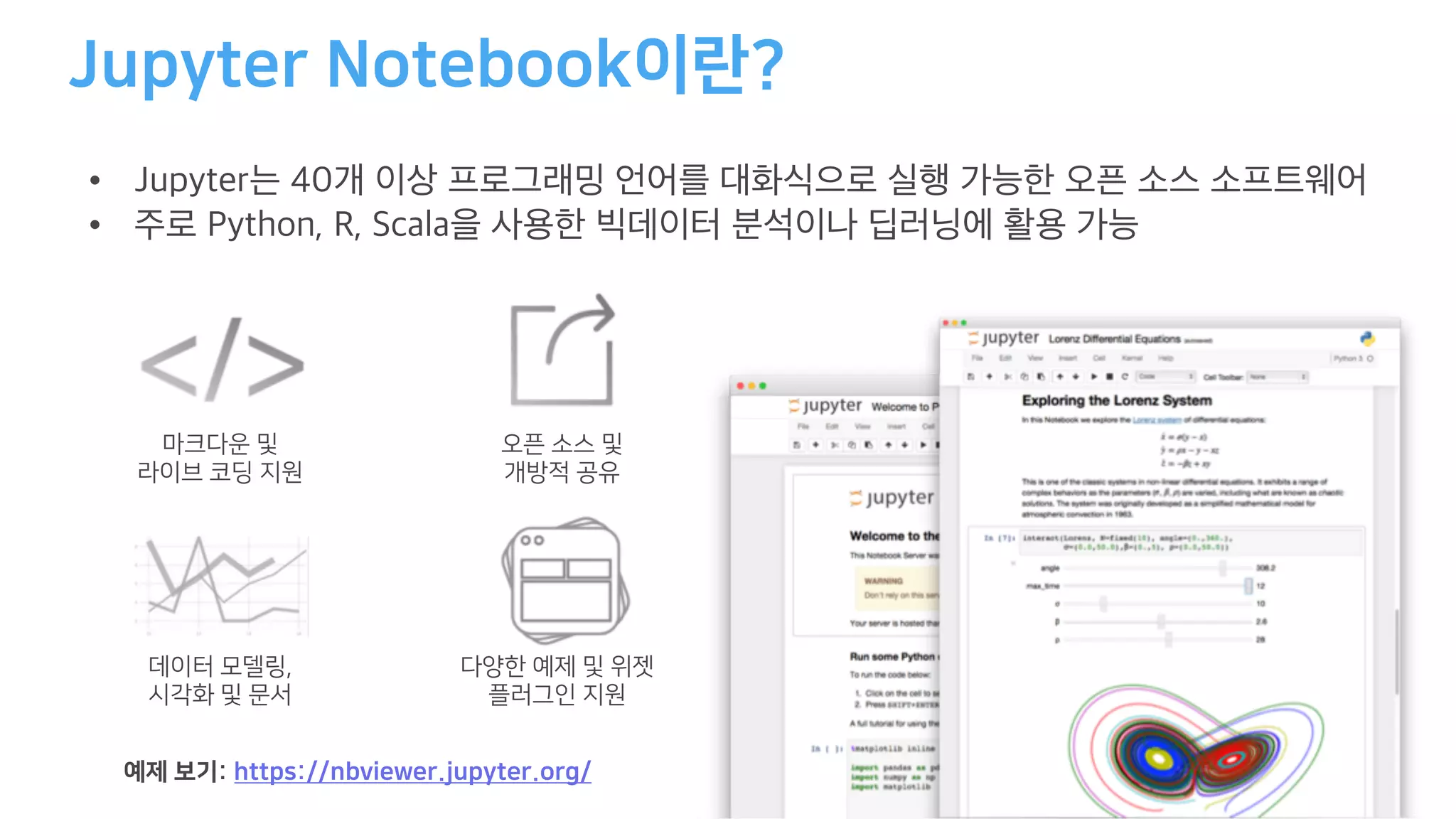

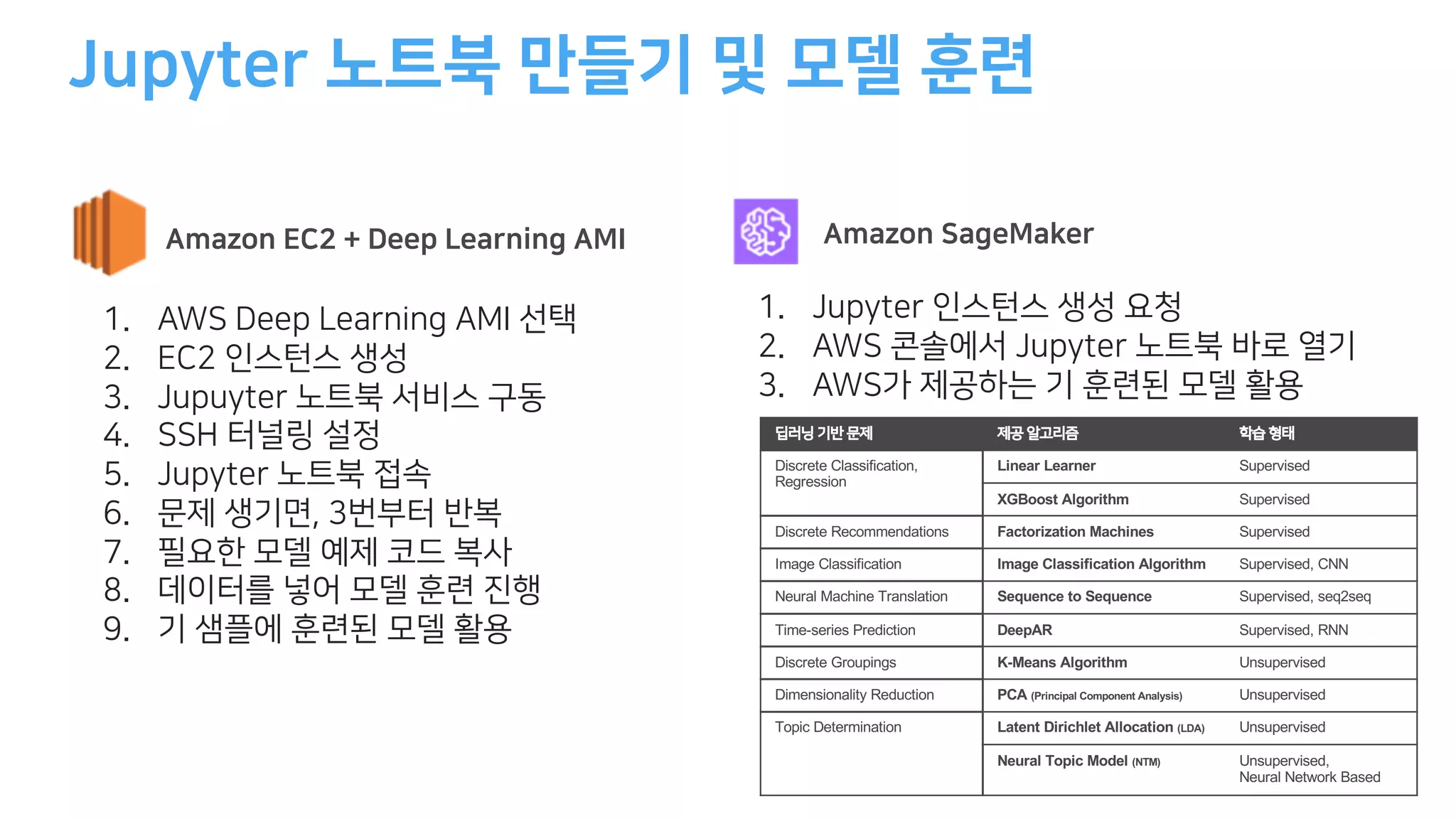

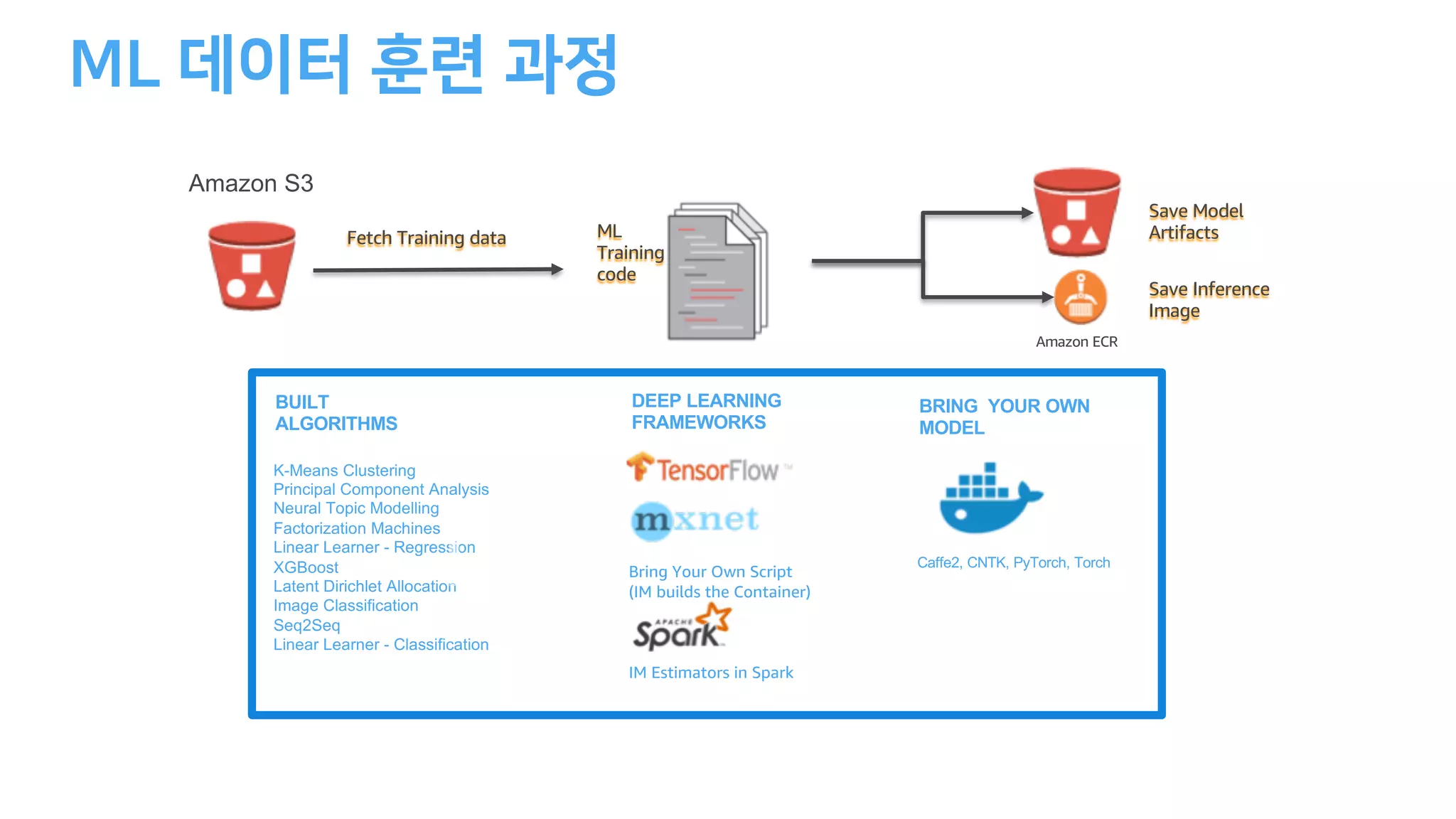

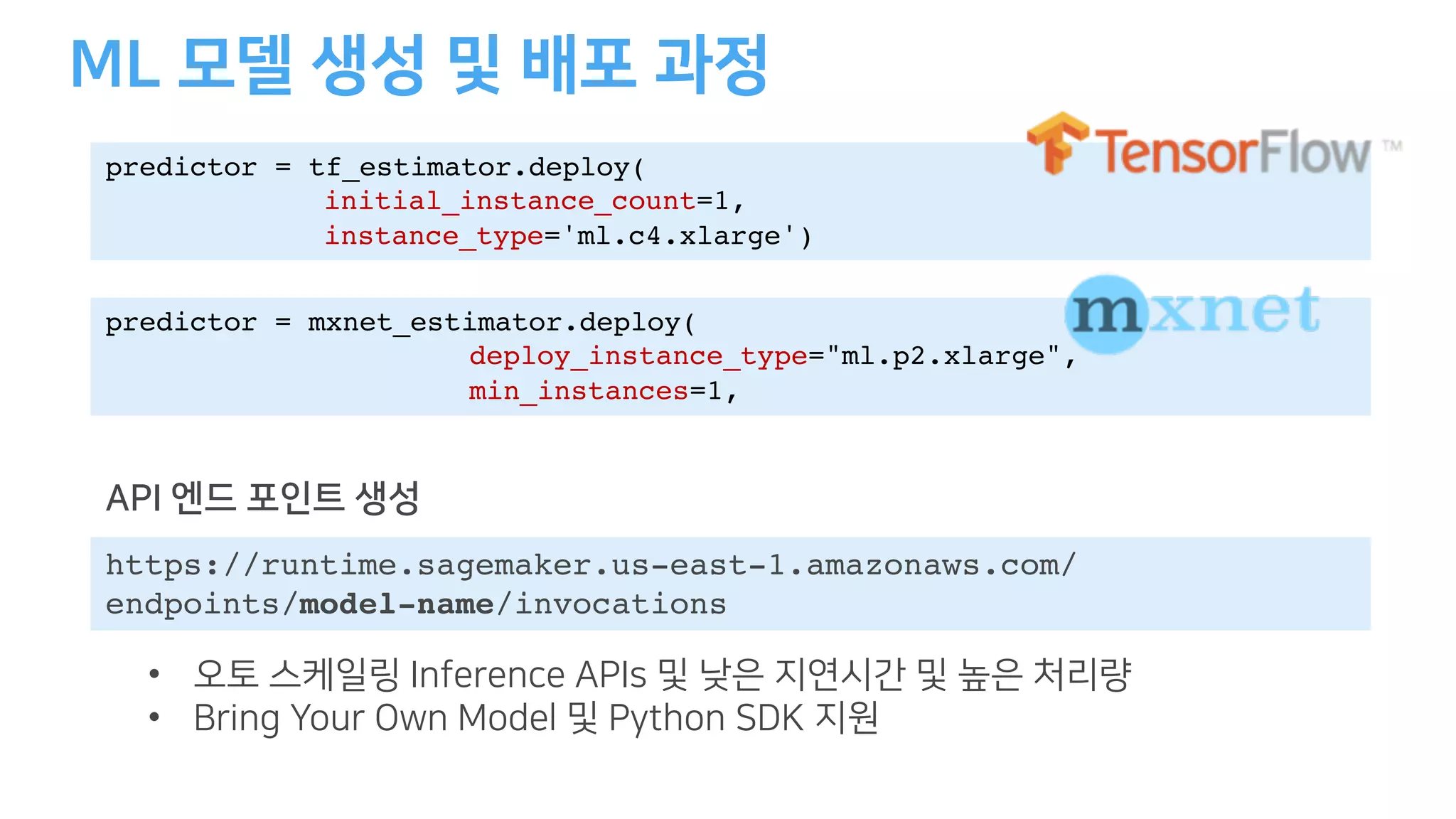

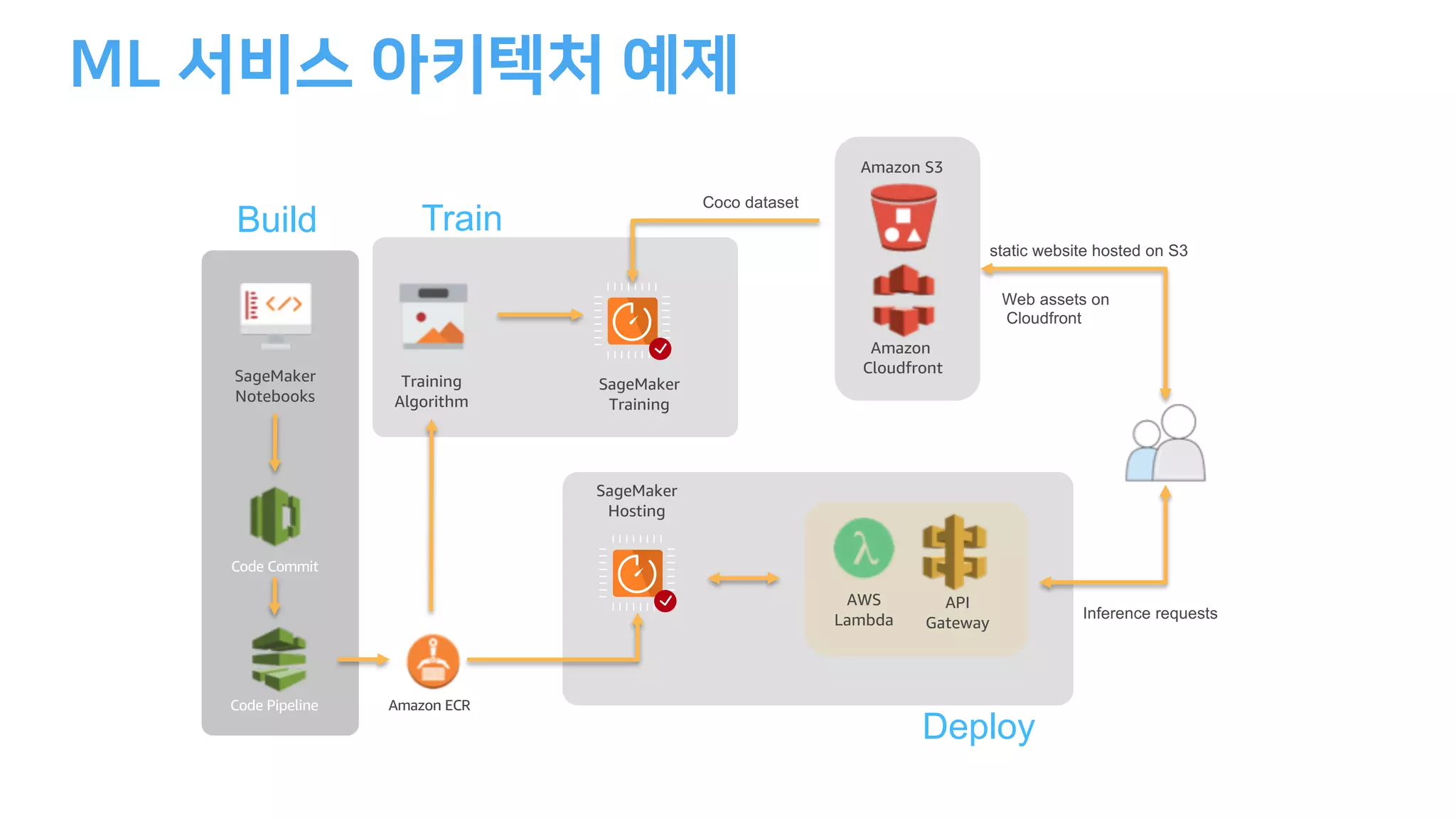

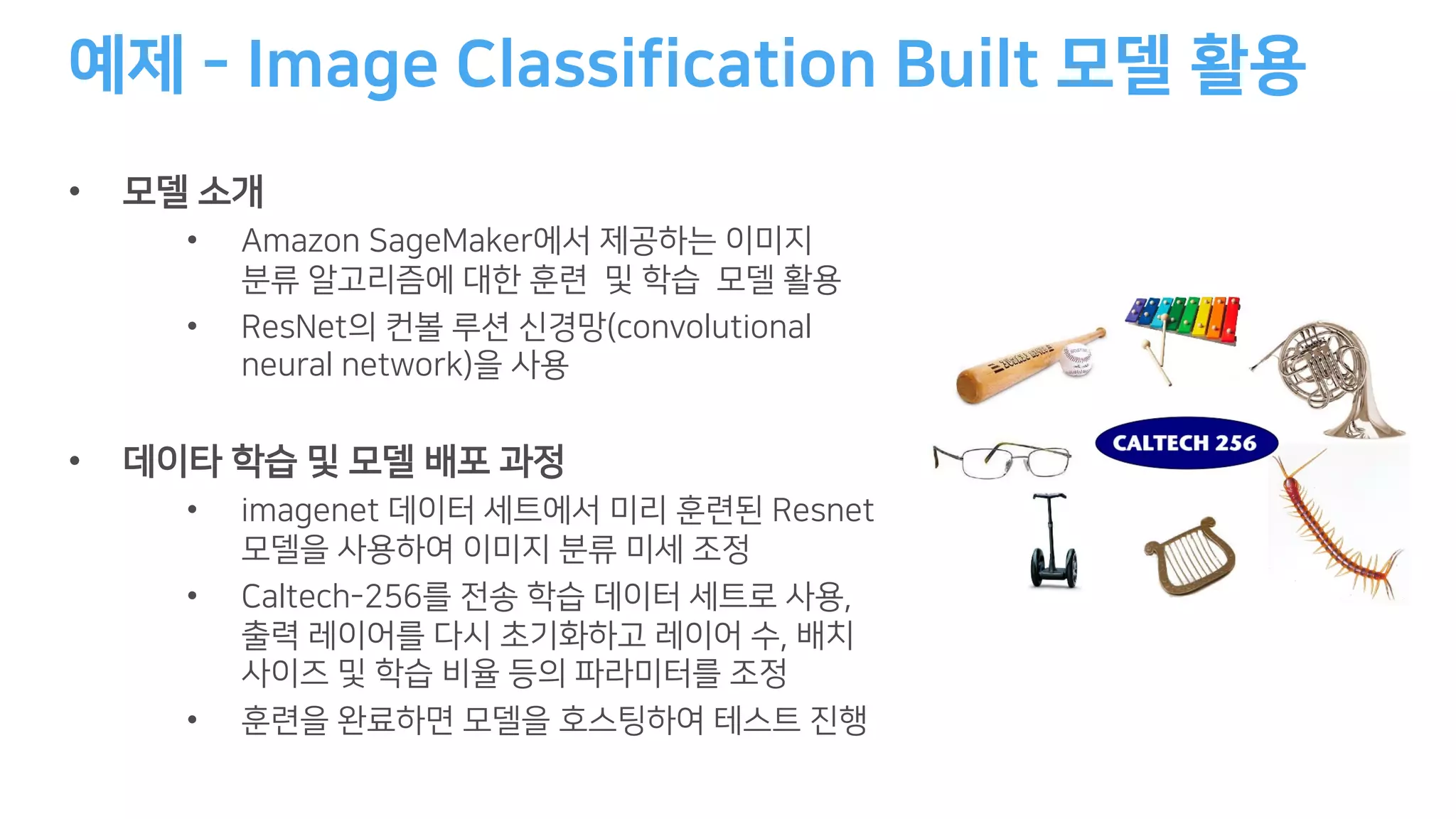

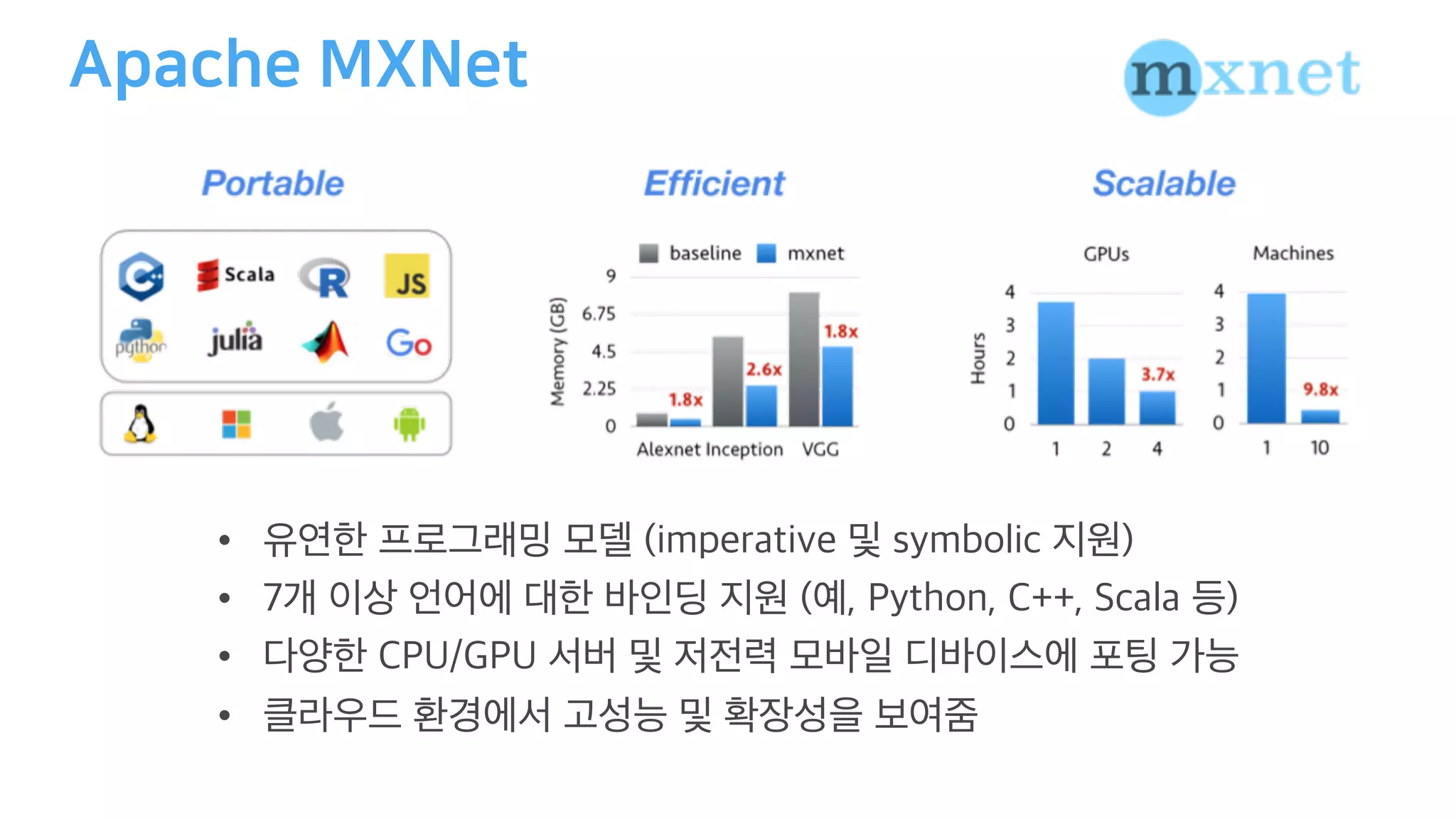

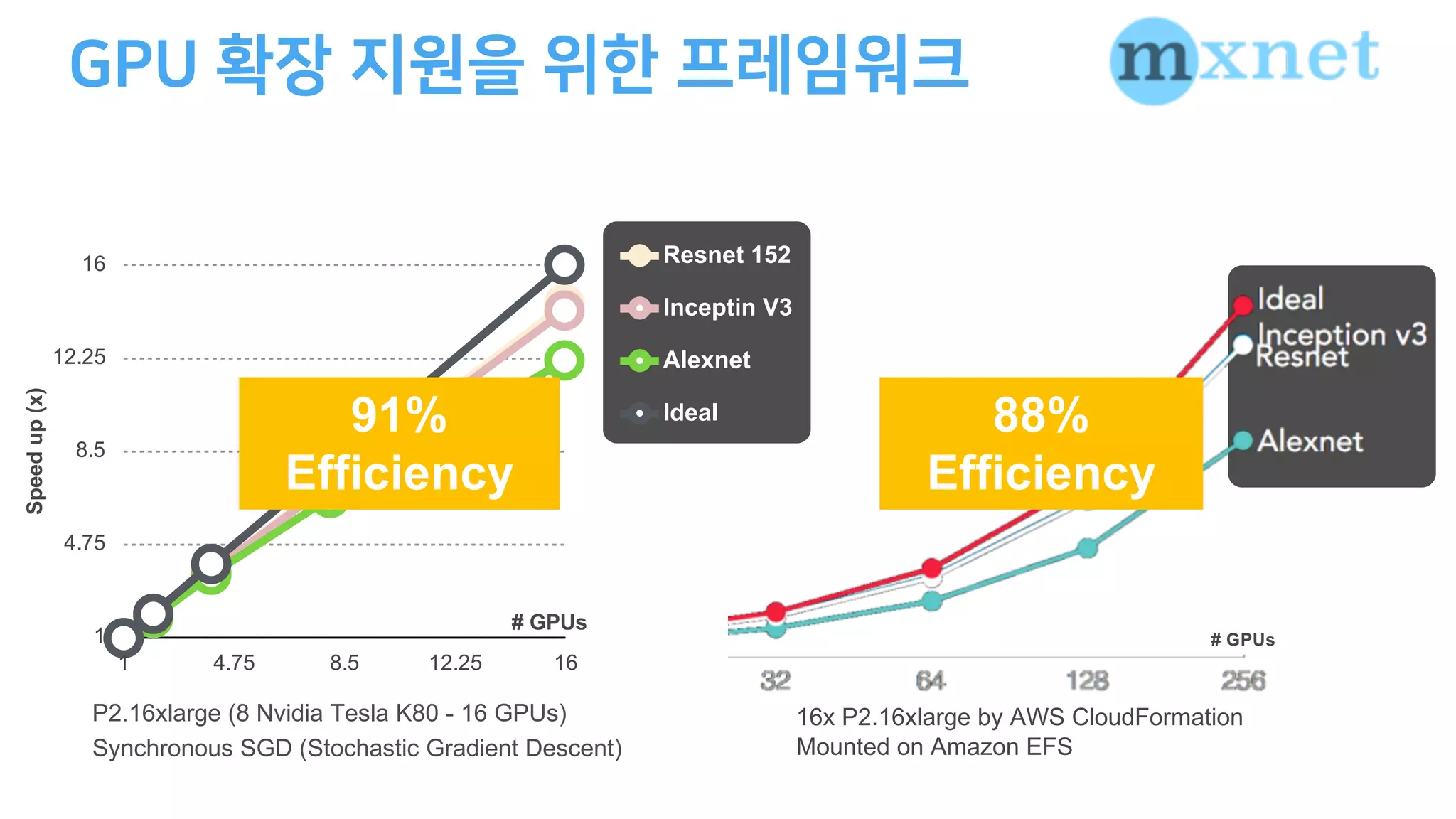

The document gives an overview of Amazon Web Services (AWS) for deep learning, covering the supported machine learning frameworks and the available instance types and their capabilities. It highlights Amazon SageMaker for building, training, and deploying machine learning models, with code examples and the advantages of using AWS for AI initiatives. It also presents collaborations with, and statements from, industry leaders on using AWS for scalable AI and machine learning.

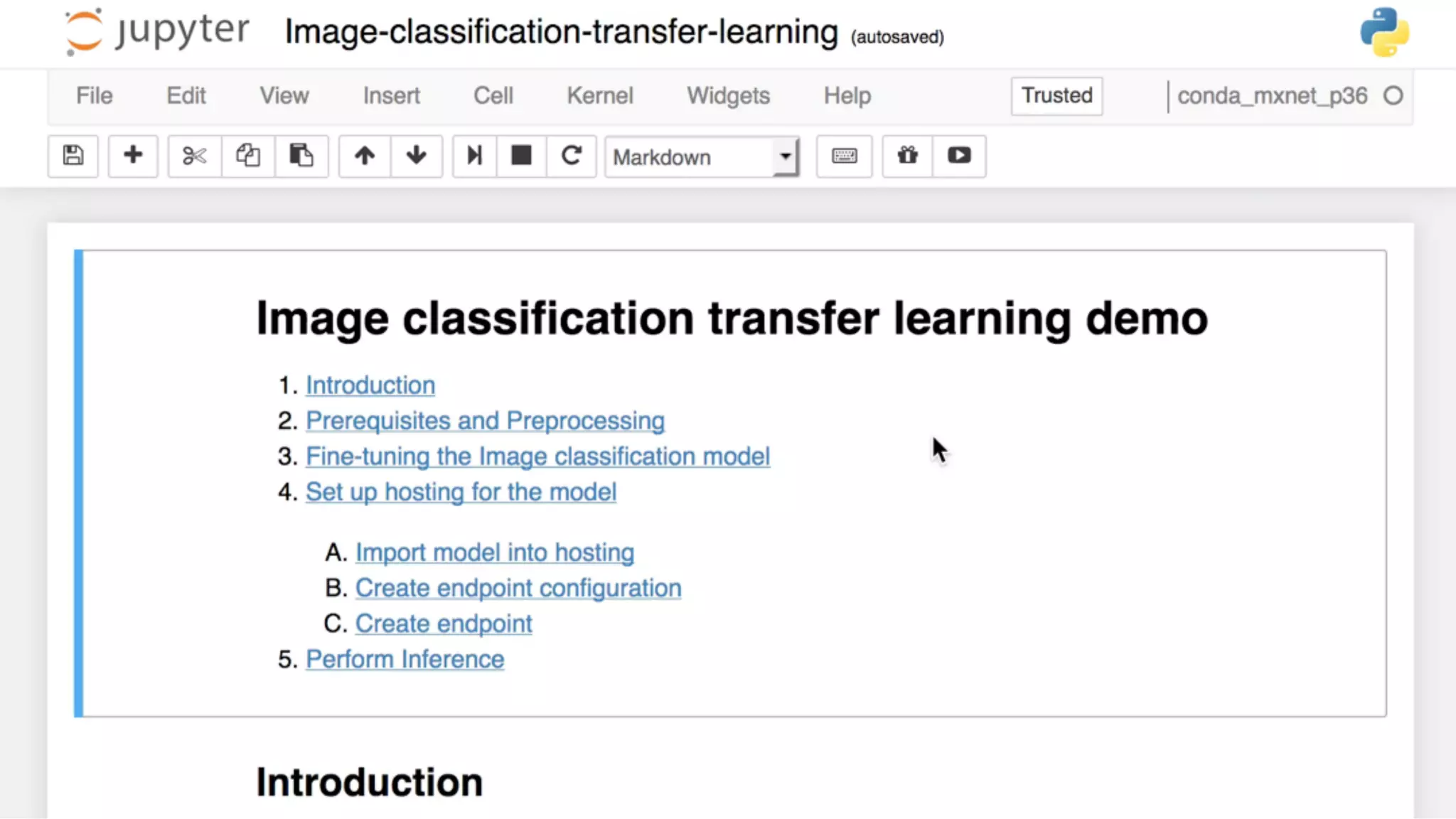

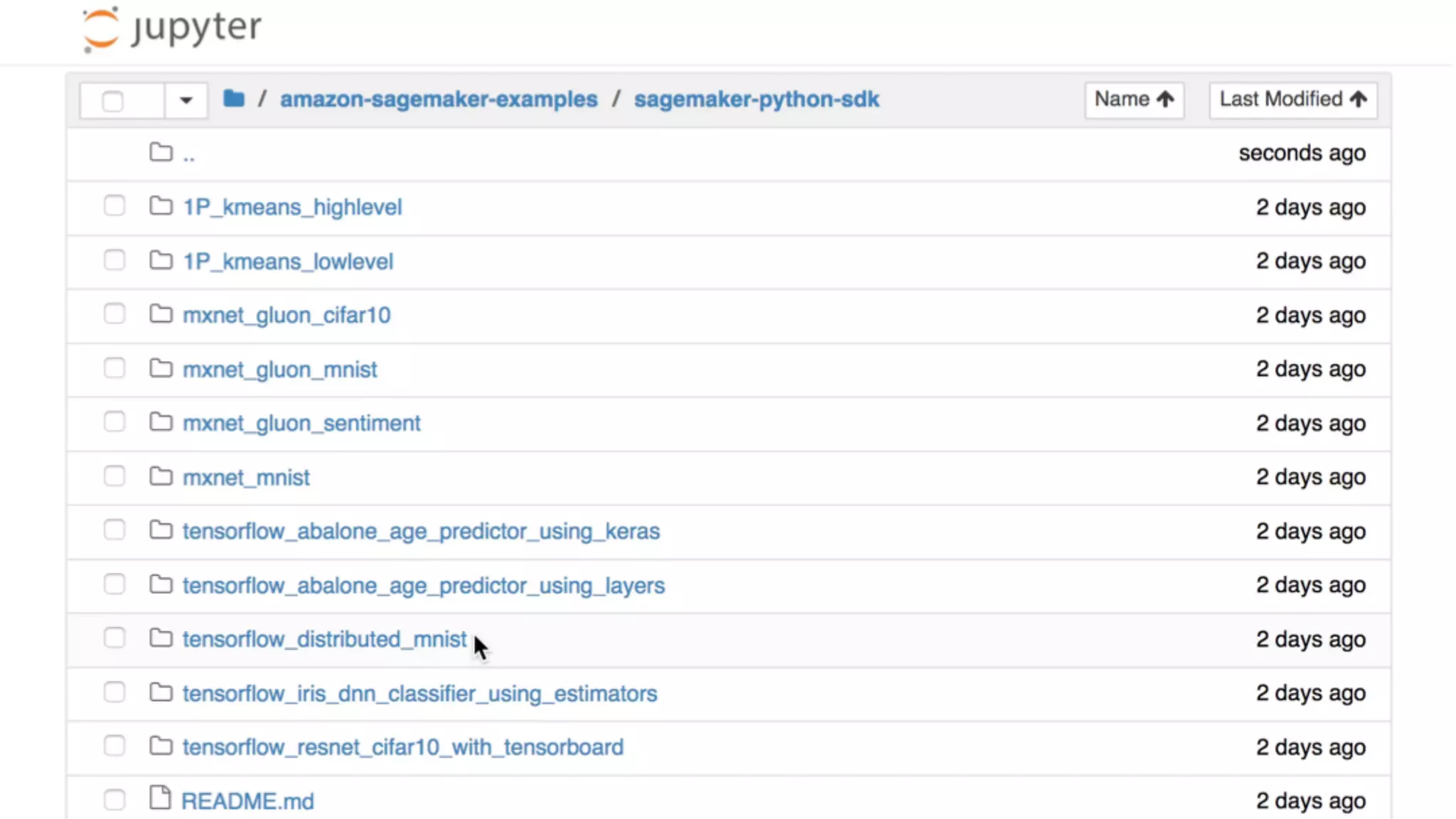

![sagemaker = boto3.client(service_name='sagemaker')

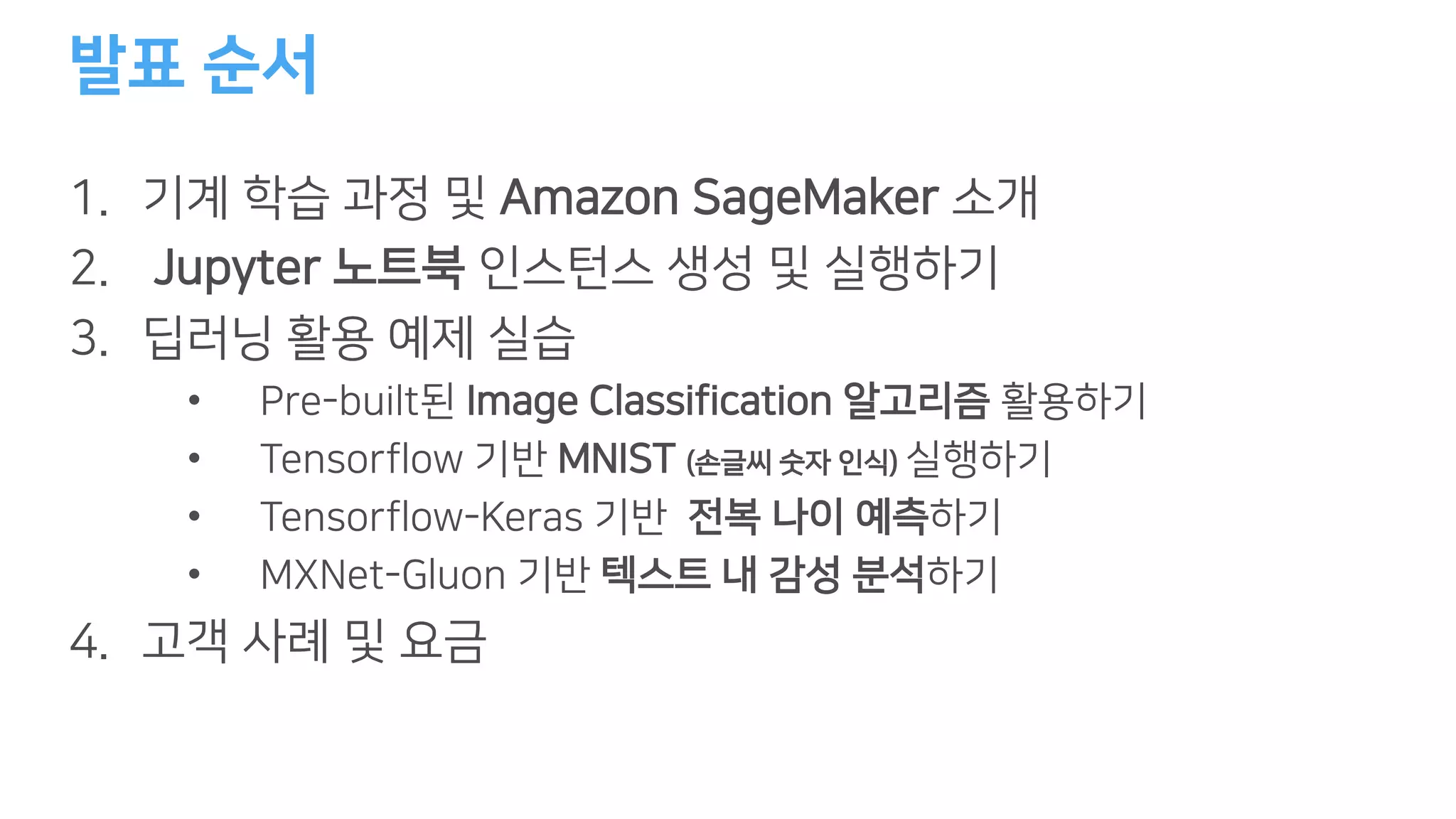

sagemaker.create_training_job(**training_params)

create_model_response = sage.create_model(

ModelName = model_name,

ExecutionRoleArn = role,

PrimaryContainer = primary_container)

endpoint_config_response = sage.create_endpoint_config(

EndpointConfigName = endpoint_config_name,

ProductionVariants=[{

'InstanceType':'ml.m4.xlarge',

'InitialInstanceCount':1,

'ModelName':model_name,

'VariantName':'AllTraffic'}])

endpoint_response = sagemaker.create_endpoint(

'EndpointName': endpoint_name,

'EndpointConfigName': endpoint_config_name

2

.

1 3

.](https://image.slidesharecdn.com/amazonsagemakerjupyternotebookchanny-180222194723/75/Amazon-SageMaker-Jupyter-Notebook-AWS-21-2048.jpg)
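The SageMaker code passes `training_params` and `primary_container` without showing how they are built. The following is a minimal sketch of one way to assemble them. The helper names, S3 paths, image URI, role ARN, and the default instance type, volume size, and runtime limit are hypothetical placeholders. Only the request field names follow the CreateTrainingJob and CreateModel APIs. The model artifact path assumes SageMaker's usual layout of `<S3OutputPath>/<TrainingJobName>/output/model.tar.gz`.

```python
# Hypothetical helpers: all values below are placeholders, not from the slides.

def build_training_params(job_name, image_uri, role_arn, s3_train_uri,
                          s3_output_path, instance_type="ml.m4.xlarge",
                          hyperparameters=None):
    """Build a request dict for sagemaker.create_training_job(**params)."""
    return {
        "TrainingJobName": job_name,
        "AlgorithmSpecification": {
            "TrainingImage": image_uri,
            "TrainingInputMode": "File",
        },
        "RoleArn": role_arn,
        "InputDataConfig": [{
            "ChannelName": "train",
            "DataSource": {"S3DataSource": {
                "S3DataType": "S3Prefix",
                "S3Uri": s3_train_uri,
                "S3DataDistributionType": "FullyReplicated",
            }},
        }],
        "OutputDataConfig": {"S3OutputPath": s3_output_path},
        "ResourceConfig": {
            "InstanceCount": 1,
            "InstanceType": instance_type,
            "VolumeSizeInGB": 50,  # placeholder size
        },
        "StoppingCondition": {"MaxRuntimeInSeconds": 3600},  # placeholder limit
        # SageMaker expects hyperparameter values as strings
        "HyperParameters": {k: str(v) for k, v in (hyperparameters or {}).items()},
    }


def build_primary_container(image_uri, training_params):
    """Point the hosted model at the artifact the training job writes to S3."""
    output = training_params["OutputDataConfig"]["S3OutputPath"].rstrip("/")
    job = training_params["TrainingJobName"]
    return {"Image": image_uri, "ModelDataUrl": f"{output}/{job}/output/model.tar.gz"}


# Usage with placeholder values
training_params = build_training_params(
    "my-job", "<training-image-uri>", "<execution-role-arn>",
    "s3://my-bucket/train", "s3://my-bucket/output", hyperparameters={"epochs": 20})
primary_container = build_primary_container("<inference-image-uri>", training_params)
# primary_container["ModelDataUrl"] -> 's3://my-bucket/output/my-job/output/model.tar.gz'
```

Keeping both dicts in plain functions makes the request easy to inspect before any AWS call is made. Deriving `ModelDataUrl` from the same `training_params` also keeps the hosted model tied to the job that produced it.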

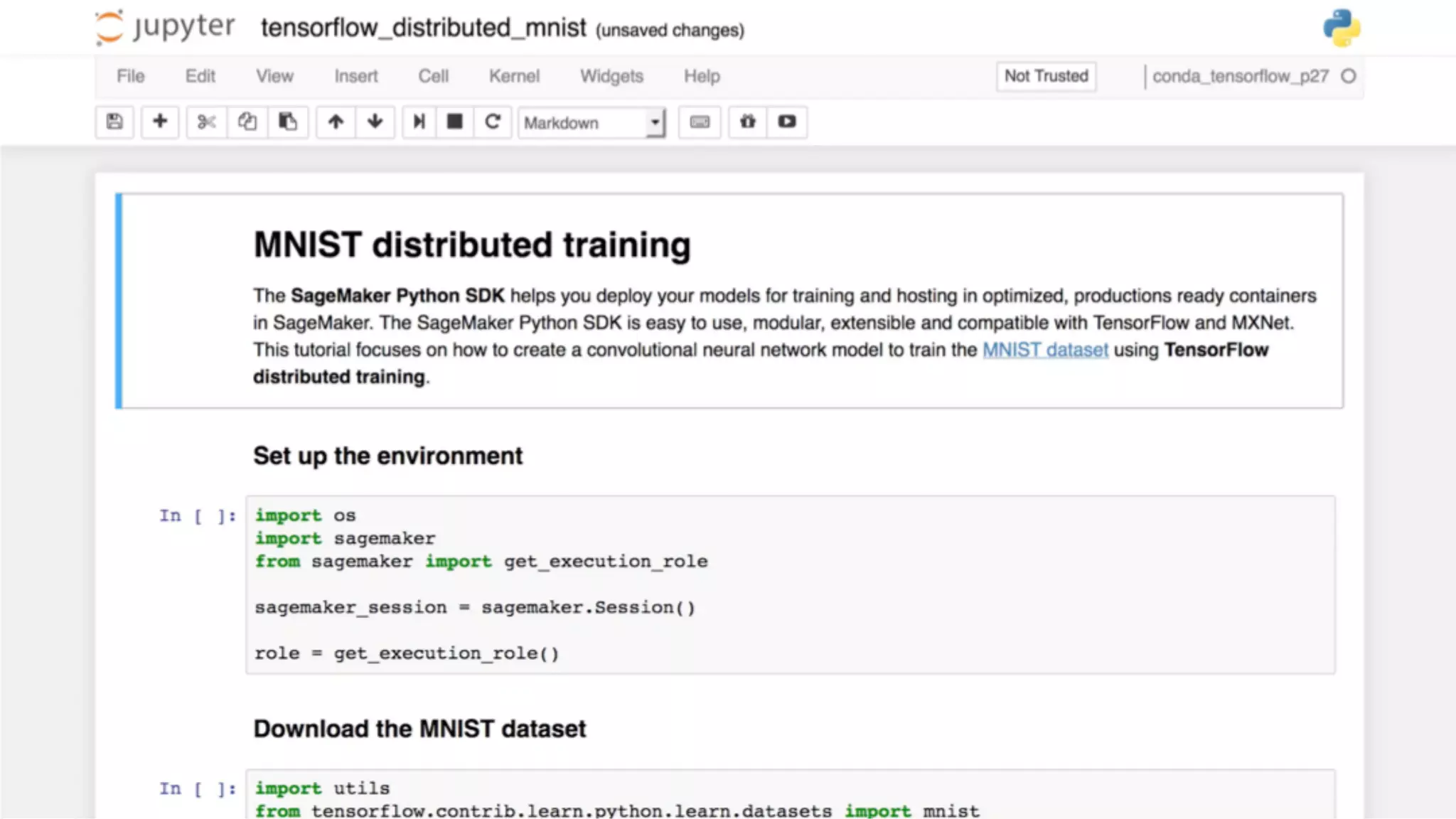

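![Training an MXNet model on four GPUs](https://image.slidesharecdn.com/amazonsagemakerjupyternotebookchanny-180222194723/75/Amazon-SageMaker-Jupyter-Notebook-AWS-29-2048.jpg)

```python
import mxnet as mx

## train data
# softmax (the network symbol), train/val (data iterators) and batch_size
# are assumed to be defined in earlier notebook cells.
num_gpus = 4
gpus = [mx.gpu(i) for i in range(num_gpus)]

# Data-parallel training across all four GPUs. FeedForward is MXNet's legacy
# API (deprecated in favor of mx.mod.Module and Gluon).
model = mx.model.FeedForward(
    ctx=gpus,
    symbol=softmax,
    num_epoch=20,  # older MXNet releases called this num_round
    learning_rate=0.01,
    momentum=0.9,
    wd=0.00001)

# Log training throughput (samples/sec) periodically during each epoch
model.fit(X=train, eval_data=val,
          batch_end_callback=mx.callback.Speedometer(batch_size=batch_size))
```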
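The `FeedForward` call above trains with data parallelism across four GPUs, using momentum SGD with weight decay. The NumPy sketch below illustrates those settings conceptually. It assumes a toy linear-regression model in place of the `softmax` network. Each simulated "device" receives an equal slice of the batch, the per-device gradients are averaged, and one momentum step with L2 weight decay is applied. This is an illustration of the technique, not MXNet's internal implementation, which aggregates gradients through its KVStore. The function names and toy data are hypothetical.

```python
import numpy as np

# Hyperparameters taken from the FeedForward call; NUM_DEVICES stands in for the 4 GPUs.
LEARNING_RATE = 0.01
MOMENTUM = 0.9
WD = 0.00001
NUM_DEVICES = 4


def mse_grad(w, X, y):
    """Gradient of 0.5 * mean((X @ w - y) ** 2) with respect to w."""
    return X.T @ (X @ w - y) / len(y)


def data_parallel_grad(w, X, y, num_devices=NUM_DEVICES):
    """Split the batch across devices, compute each shard's gradient, then average.

    With equal-sized shards this equals the full-batch gradient.
    """
    X_shards = np.array_split(X, num_devices)
    y_shards = np.array_split(y, num_devices)
    grads = [mse_grad(w, Xs, ys) for Xs, ys in zip(X_shards, y_shards)]
    return np.mean(grads, axis=0)


def sgd_momentum_step(w, state, grad, lr=LEARNING_RATE, momentum=MOMENTUM, wd=WD):
    """One momentum-SGD step with L2 weight decay folded into the gradient."""
    state = momentum * state + lr * (grad + wd * w)
    return w - state, state


# Toy problem: recover true_w from noiseless linear data (batch of 32 -> 8 rows per device).
rng = np.random.default_rng(0)
X = rng.normal(size=(32, 3))
true_w = np.array([1.0, -2.0, 0.5])
y = X @ true_w

w, state = np.zeros(3), np.zeros(3)
for _ in range(500):
    w, state = sgd_momentum_step(w, state, data_parallel_grad(w, X, y))
```

Averaging the shard gradients matches the full-batch gradient only when the shards are the same size. A batch size divisible by the number of GPUs keeps the multi-GPU run numerically equivalent to single-device training on the same batch.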