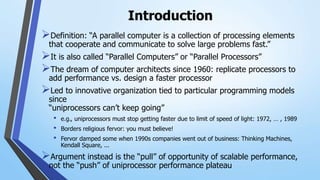

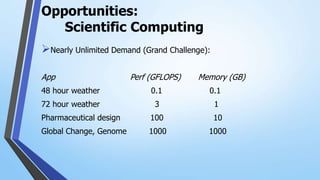

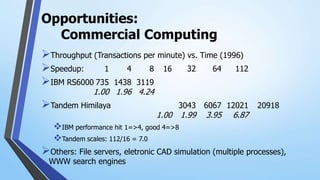

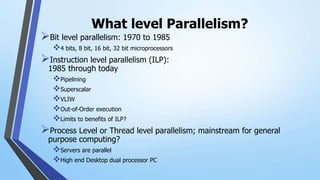

This document provides an introduction to dedicated fully parallel architectures. It defines parallel computers as collections of processing elements that cooperate and communicate to solve large problems quickly. The document discusses how computer architects have sought to replicate processors since the 1960s to add performance rather than design faster single processors. It outlines opportunities for parallelism in scientific and commercial computing. Finally, it examines fundamental issues in parallel architectures and different models of parallel processing including single instruction single/multiple data stream and multiple instruction single/multiple data stream architectures.