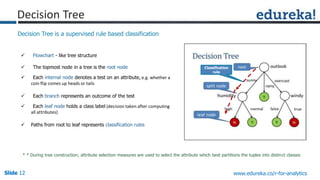

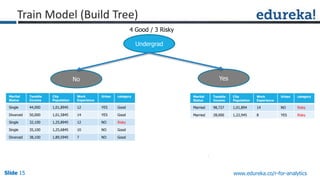

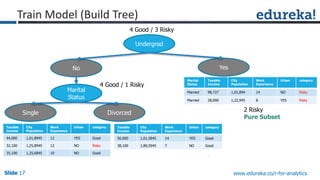

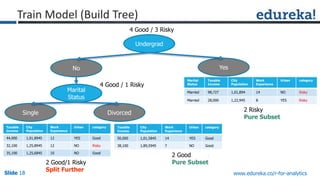

The document covers decision trees and their use in classification problems, in three parts:

1) The agenda includes an overview of decision trees, how they work, and a demonstration on a banking customer classification problem.

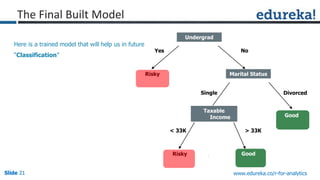

2) Decision trees can be used to classify banking customers as "Risky" or "Good" based on attributes like income, marital status, etc.

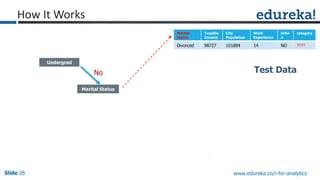

3) The document demonstrates how a decision tree is built on sample customer data and how a new customer can then be classified.
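
The build-then-classify flow described above can be illustrated with a minimal sketch. The customer records below are hypothetical, as are the attribute values (`high`/`low`, `married`/`single`) and the helper names `build_tree` and `classify`. The sketch also assumes an ID3-style split on information gain, which the source does not confirm as the criterion used in the demonstration.

```python
from collections import Counter
from math import log2

# Hypothetical training data; not taken from the original demonstration.
CUSTOMERS = [
    {"income": "high", "marital_status": "married", "label": "Good"},
    {"income": "high", "marital_status": "single", "label": "Good"},
    {"income": "high", "marital_status": "single", "label": "Good"},
    {"income": "low", "marital_status": "married", "label": "Good"},
    {"income": "low", "marital_status": "single", "label": "Risky"},
    {"income": "low", "marital_status": "single", "label": "Risky"},
]


def entropy(rows):
    """Shannon entropy of the class labels in rows."""
    counts = Counter(row["label"] for row in rows)
    total = len(rows)
    return -sum((c / total) * log2(c / total) for c in counts.values())


def information_gain(rows, attribute):
    """Entropy reduction obtained by splitting rows on attribute."""
    remainder = 0.0
    for value in {row[attribute] for row in rows}:
        subset = [row for row in rows if row[attribute] == value]
        remainder += len(subset) / len(rows) * entropy(subset)
    return entropy(rows) - remainder


def build_tree(rows, attributes):
    """Recursively build a tree; leaves are label strings, nodes are dicts."""
    labels = [row["label"] for row in rows]
    majority = Counter(labels).most_common(1)[0][0]
    # Stop when the node is pure or no attributes remain to split on.
    if len(set(labels)) == 1 or not attributes:
        return majority
    best = max(attributes, key=lambda a: information_gain(rows, a))
    remaining = [a for a in attributes if a != best]
    branches = {}
    for value in {row[best] for row in rows}:
        subset = [row for row in rows if row[best] == value]
        branches[value] = build_tree(subset, remaining)
    return {"attribute": best, "branches": branches, "default": majority}


def classify(tree, customer):
    """Walk from the root to a leaf; unseen values fall back to the majority label."""
    while isinstance(tree, dict):
        value = customer[tree["attribute"]]
        tree = tree["branches"].get(value, tree["default"])
    return tree


TREE = build_tree(CUSTOMERS, ["income", "marital_status"])
new_customer = {"income": "low", "marital_status": "single"}
print(classify(TREE, new_customer))
```

On this toy data the root split falls on `income`, because it yields the larger information gain, and the low-income branch is then split on `marital_status`. A real dataset would typically call for a library implementation and for handling numeric attributes, which this sketch leaves out.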