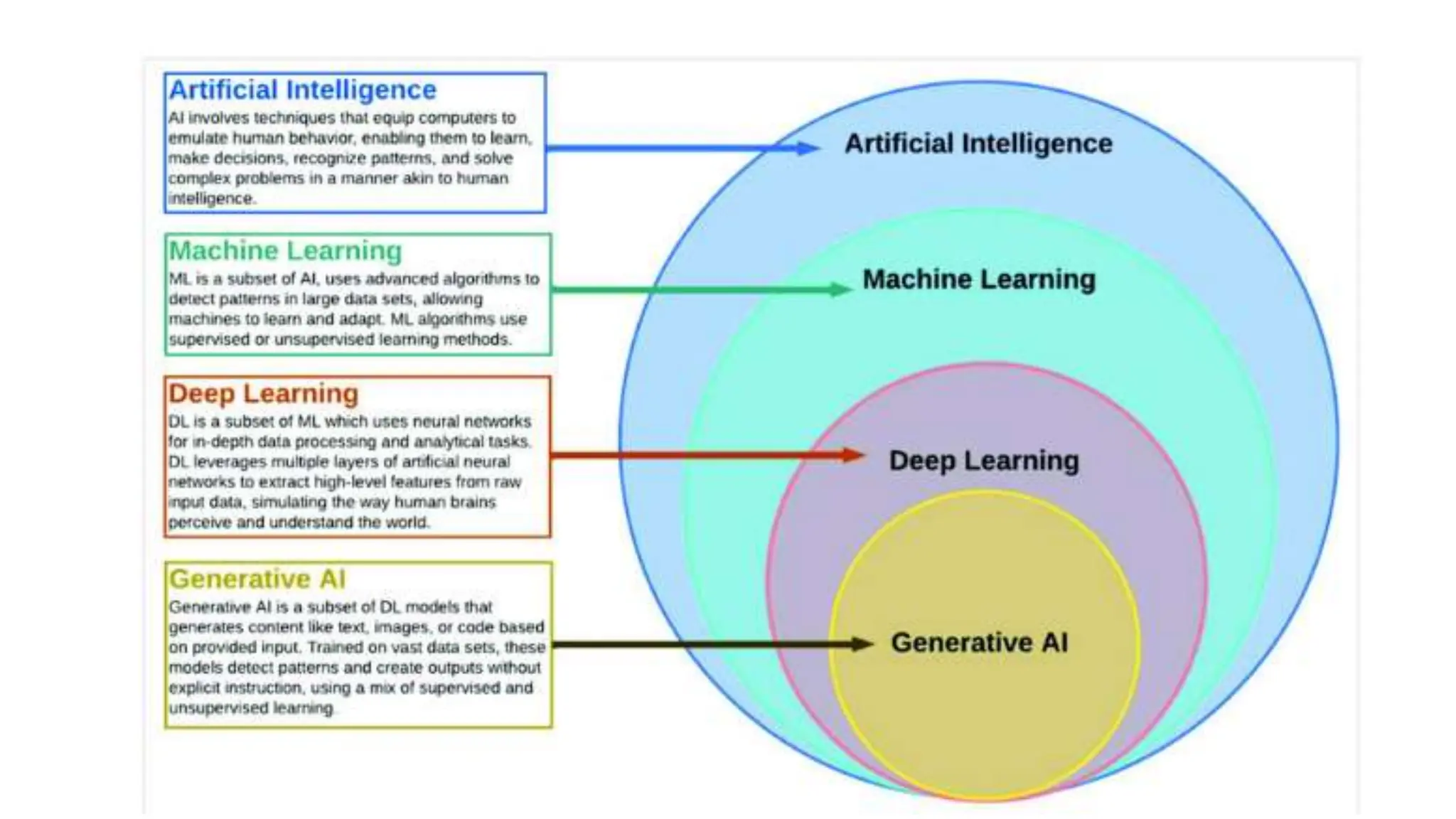

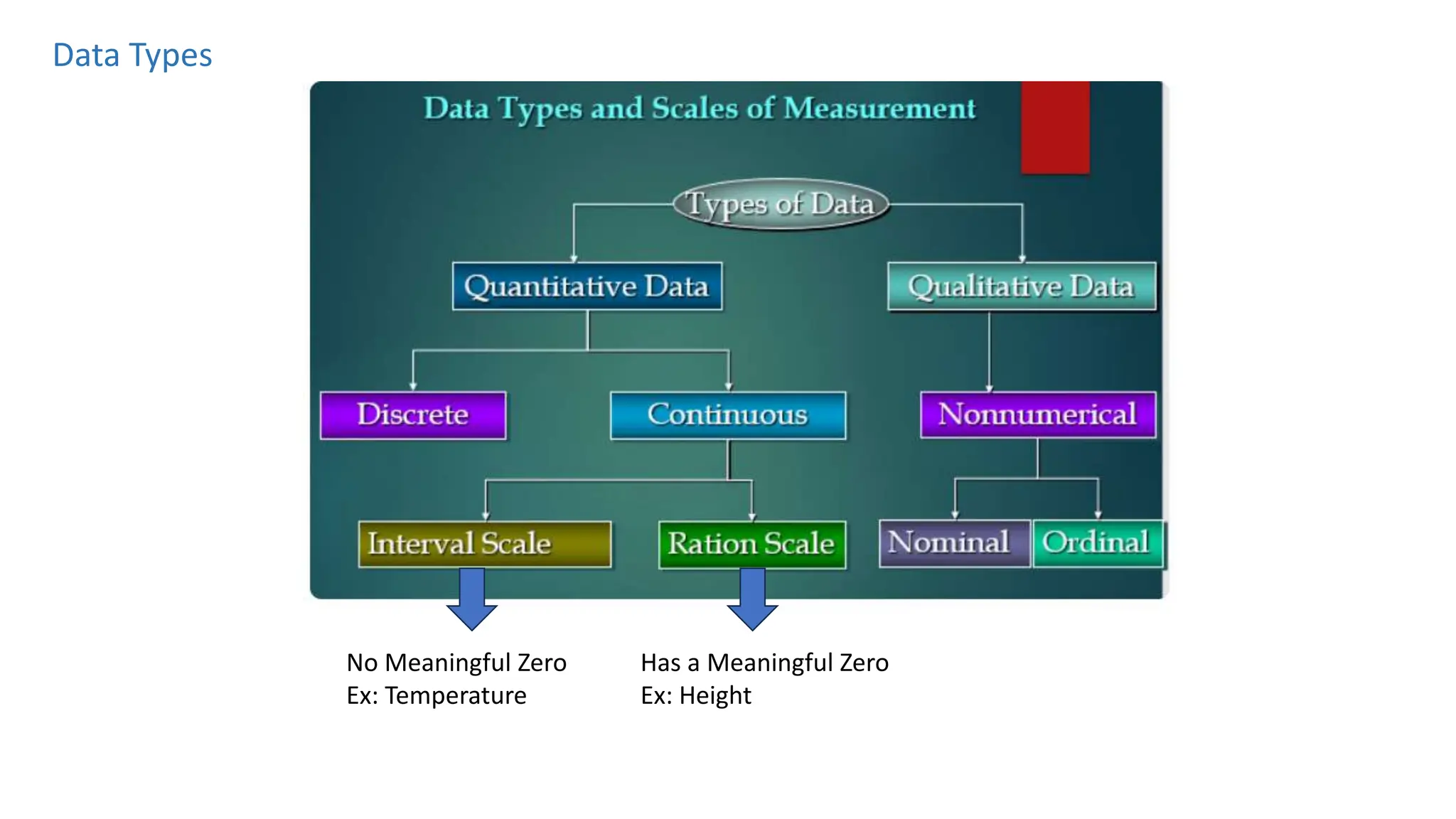

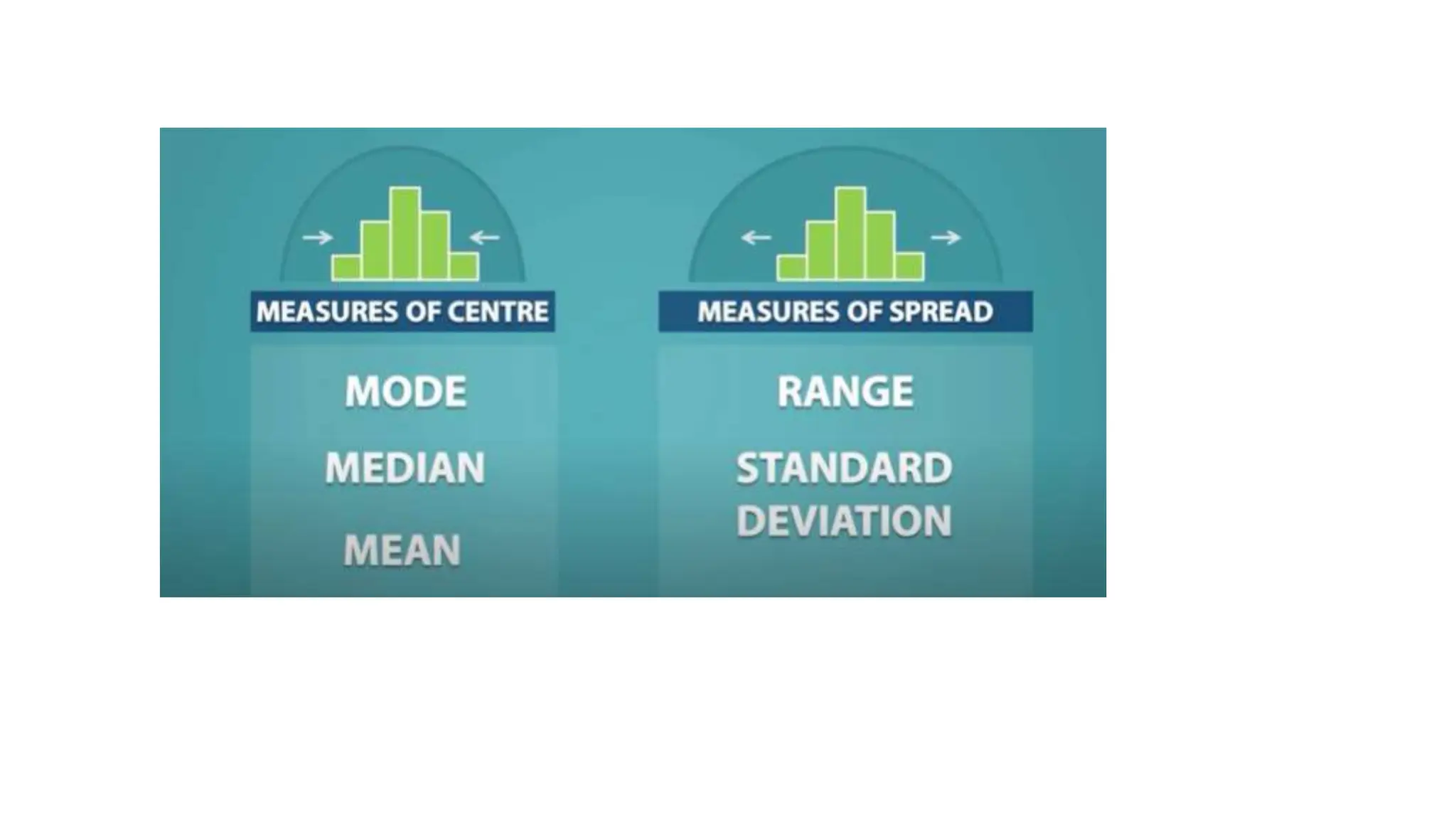

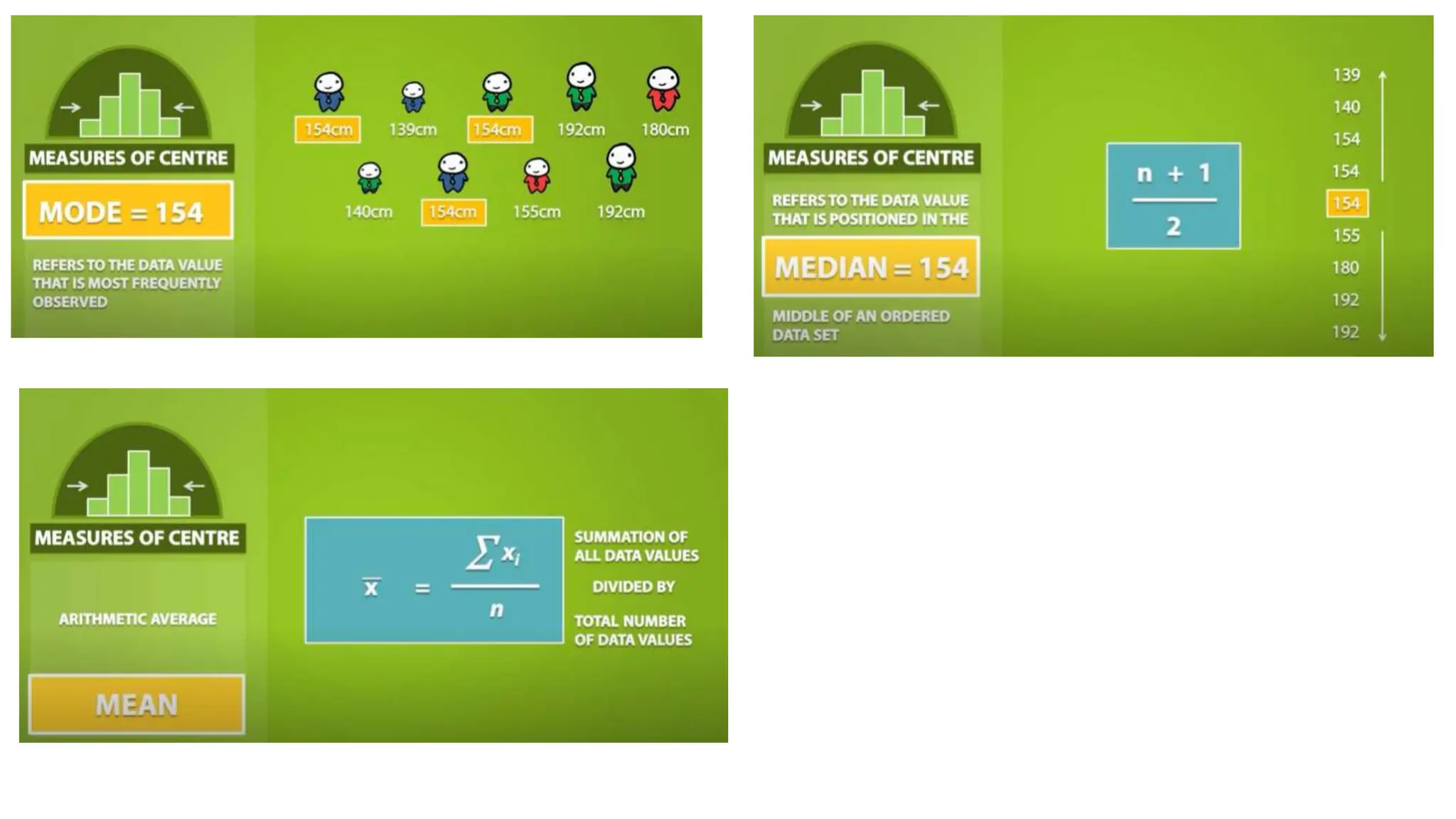

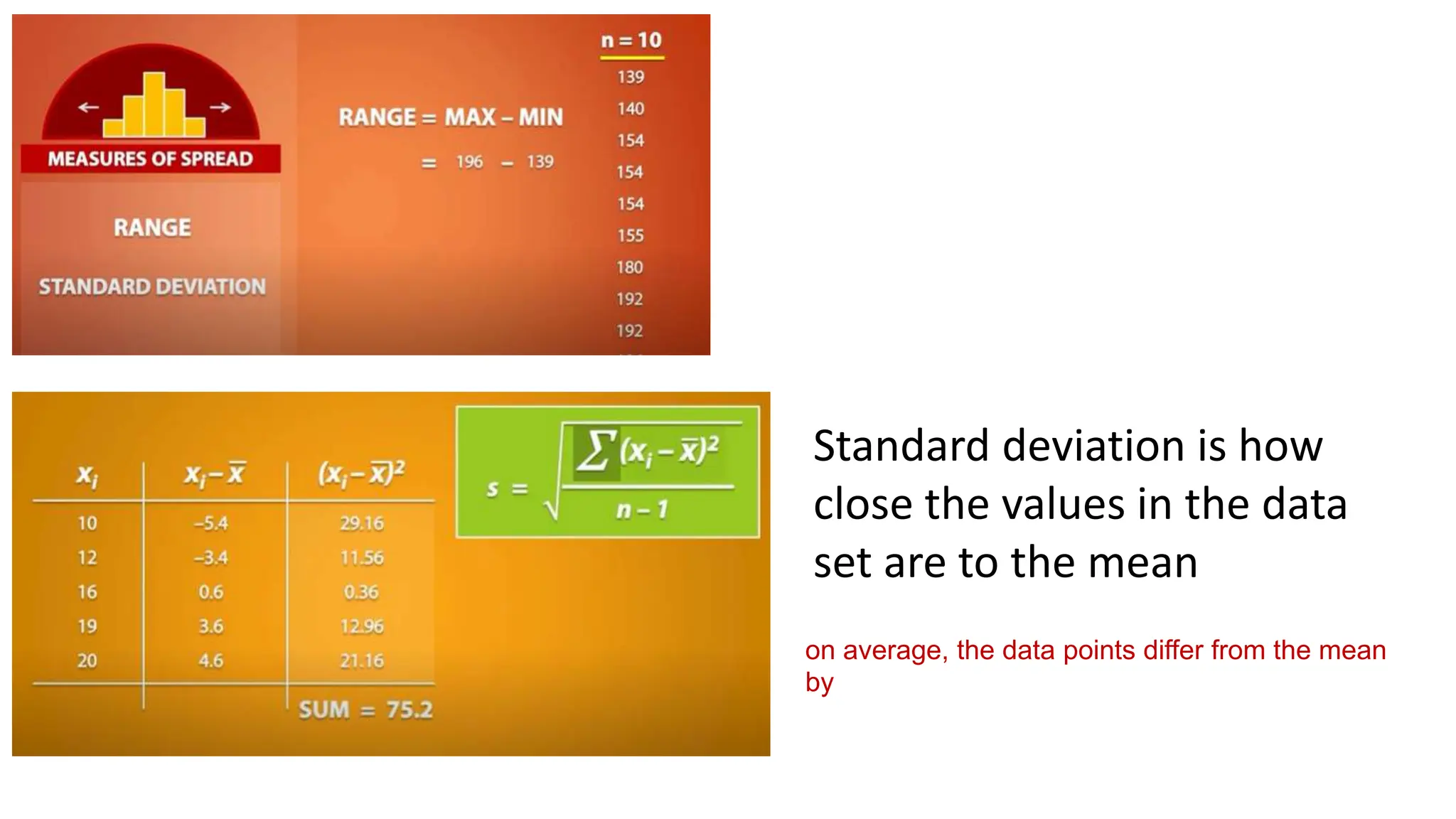

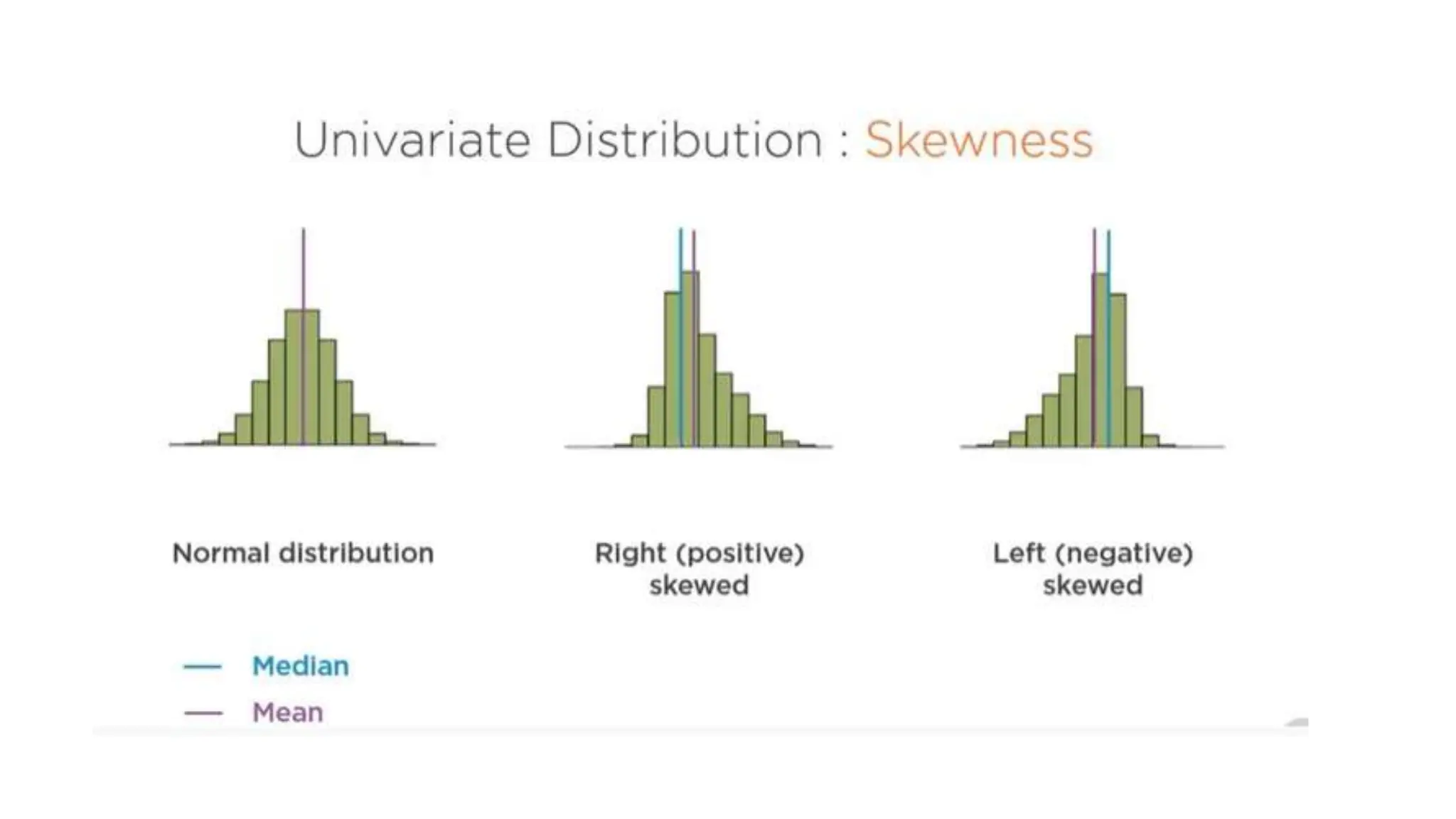

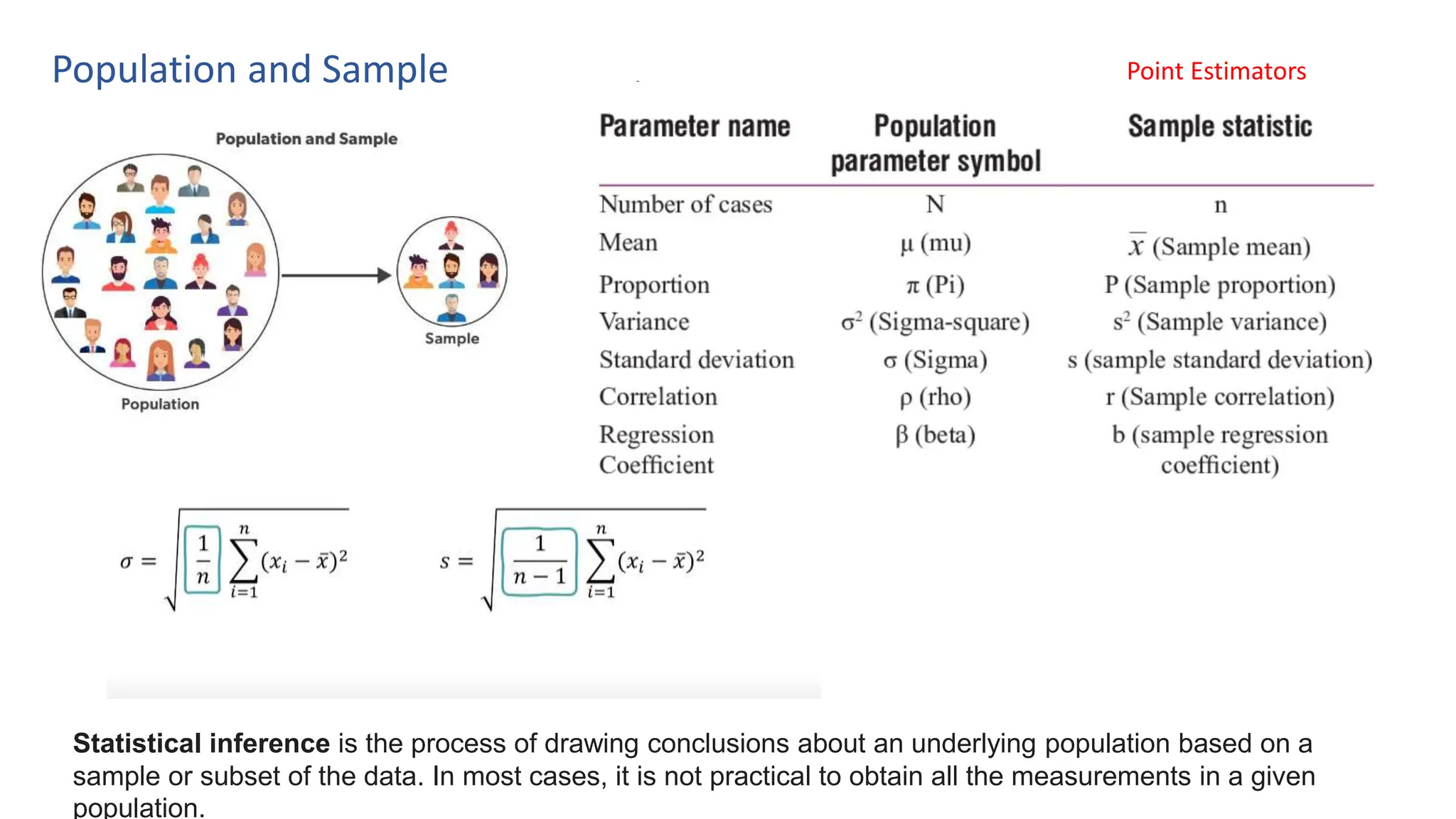

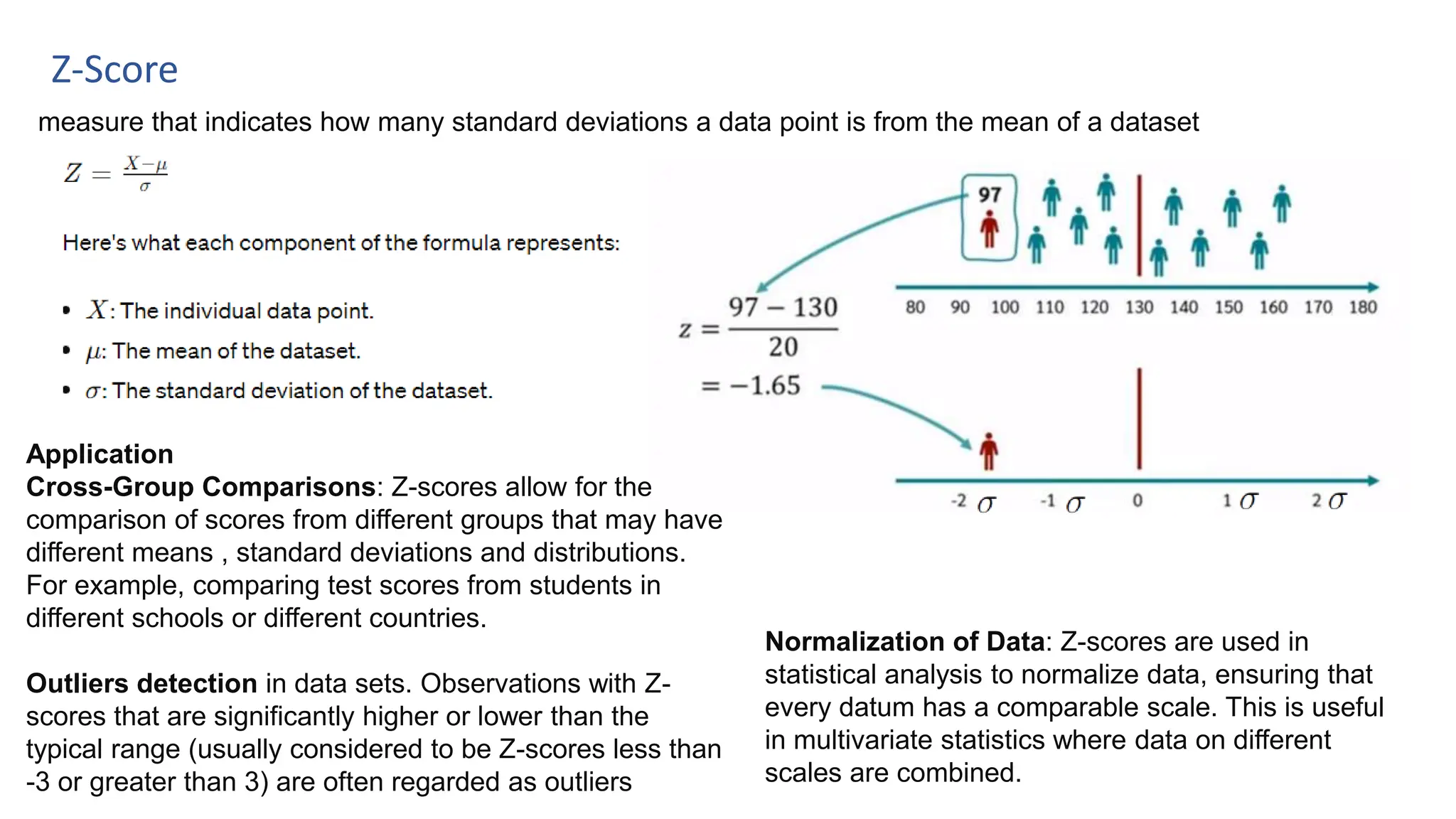

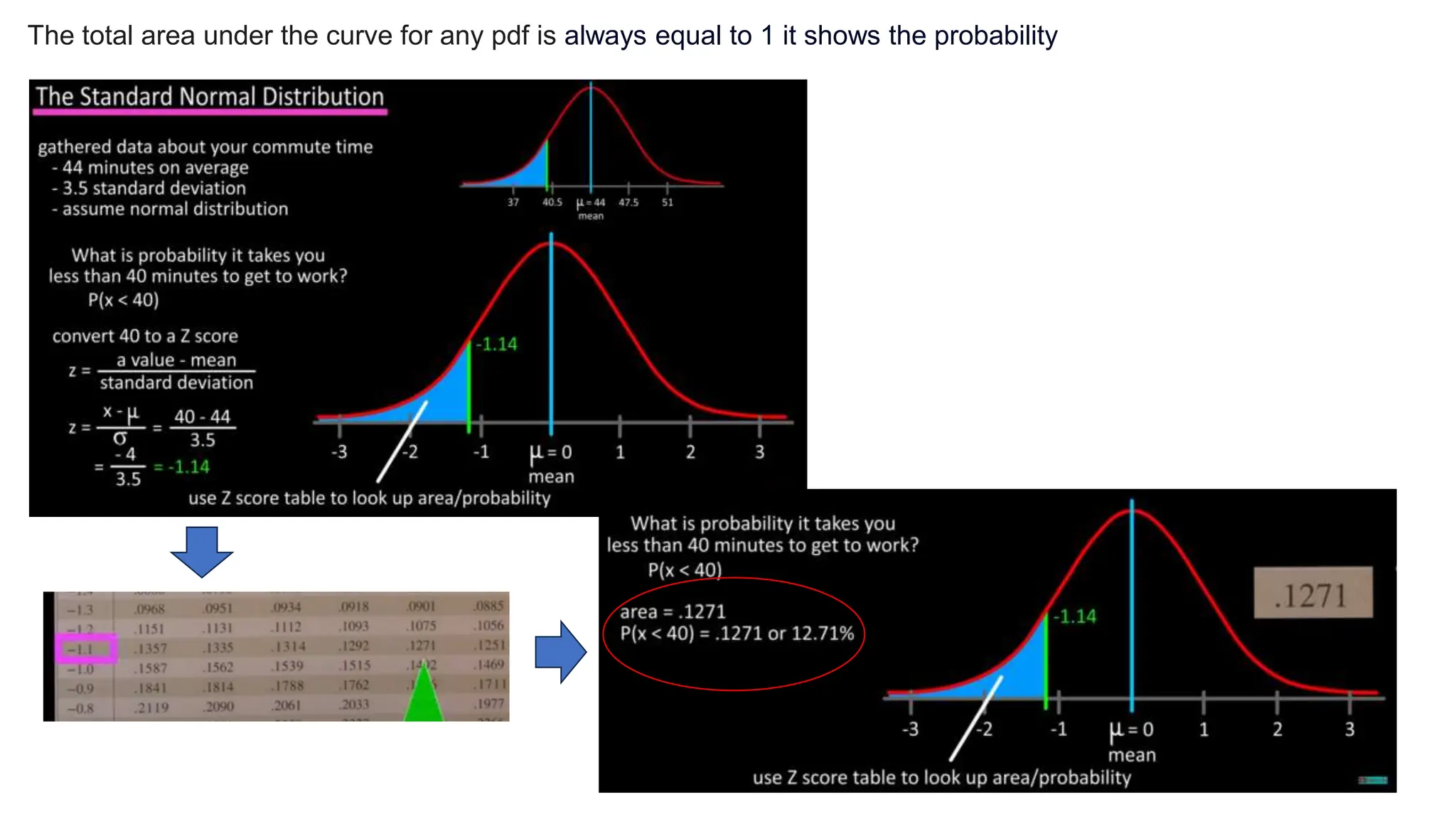

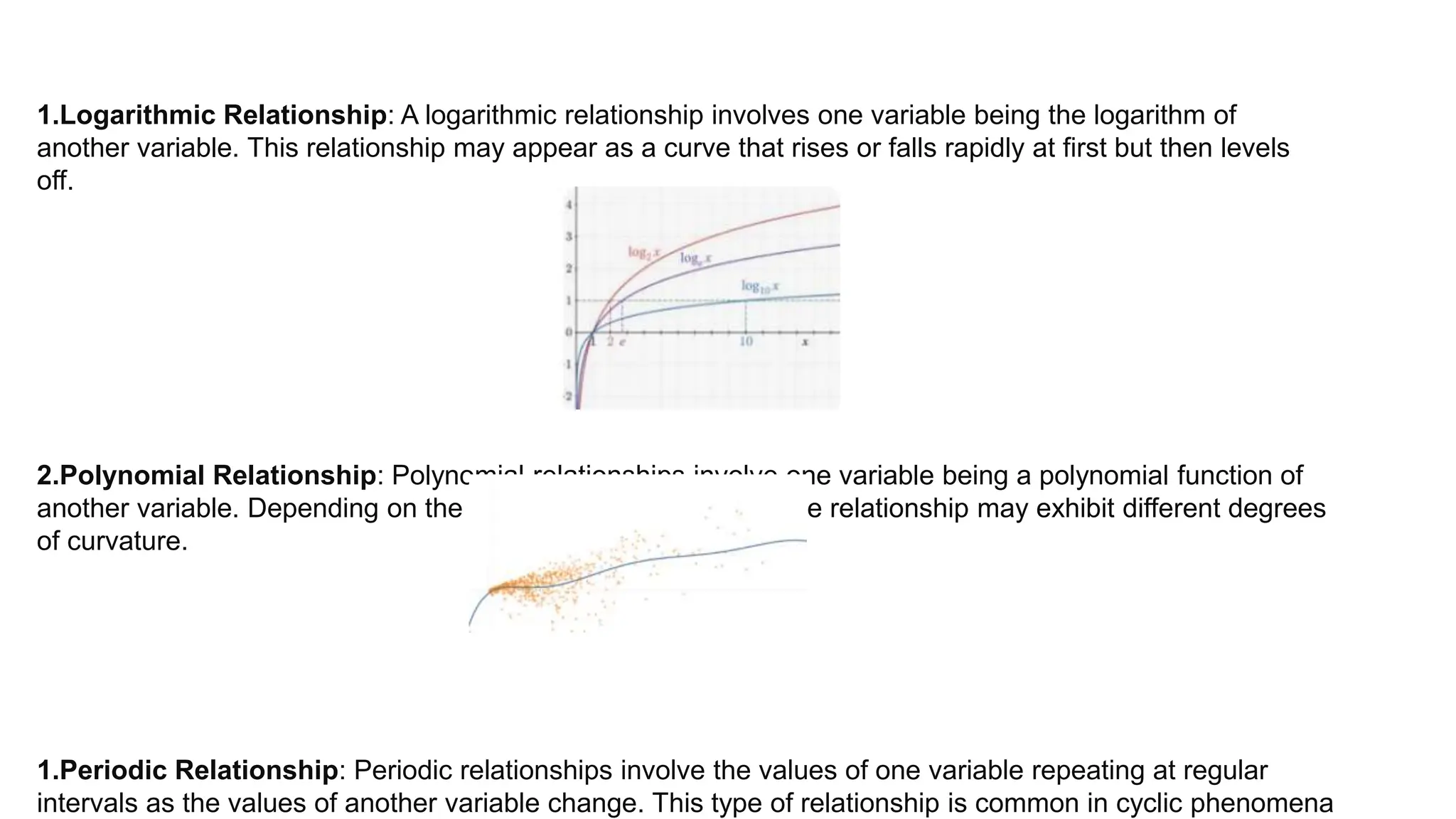

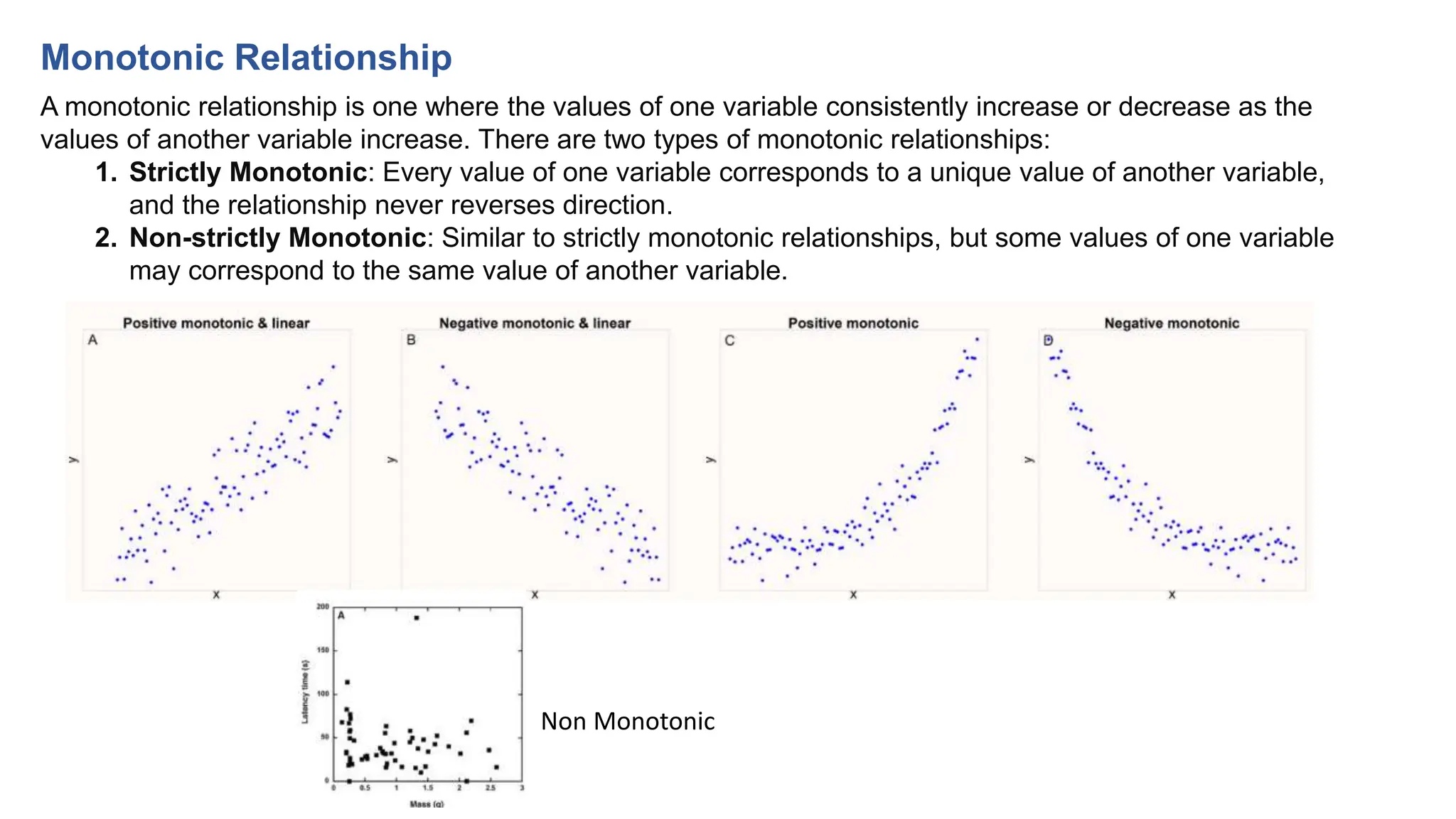

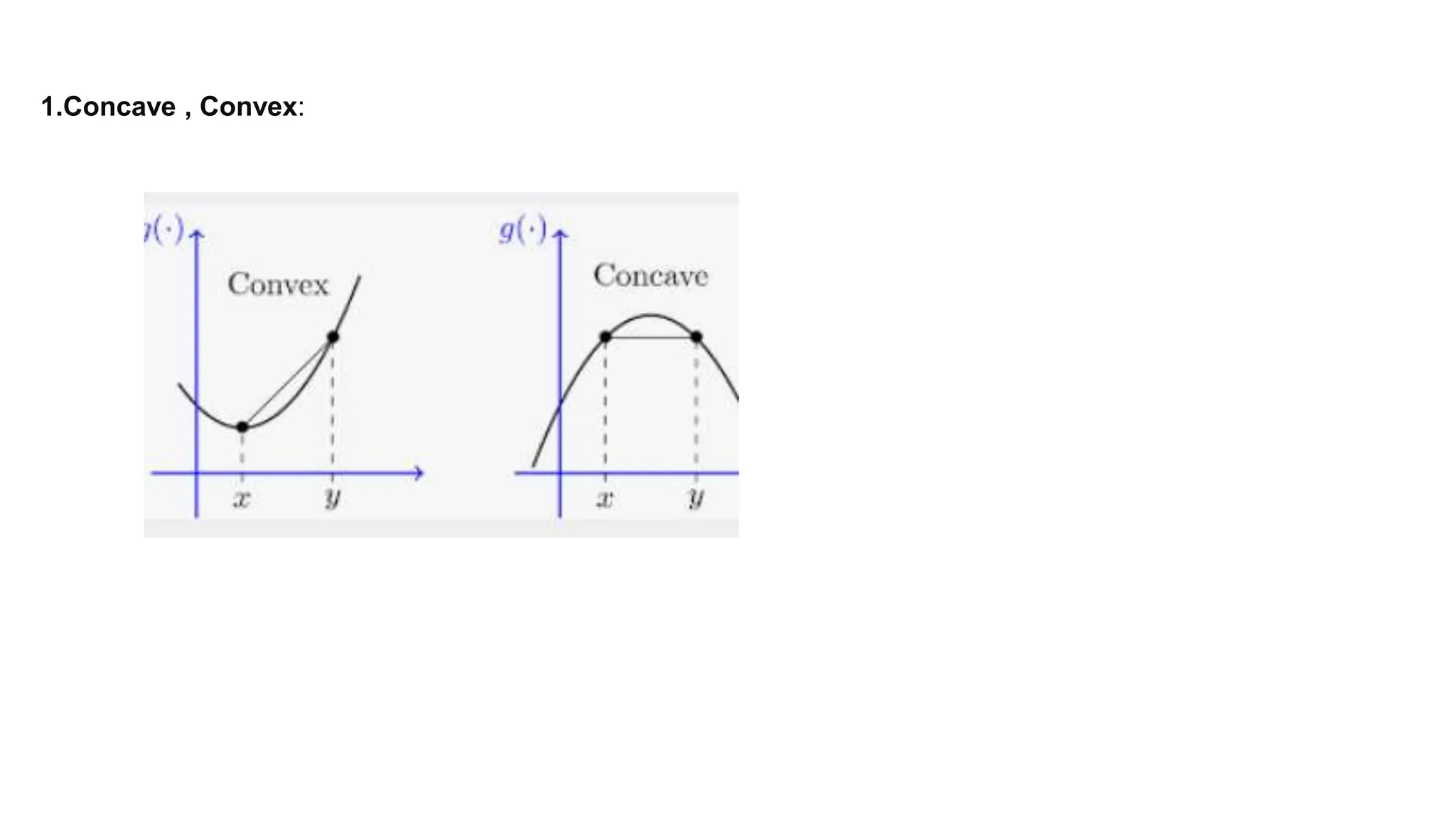

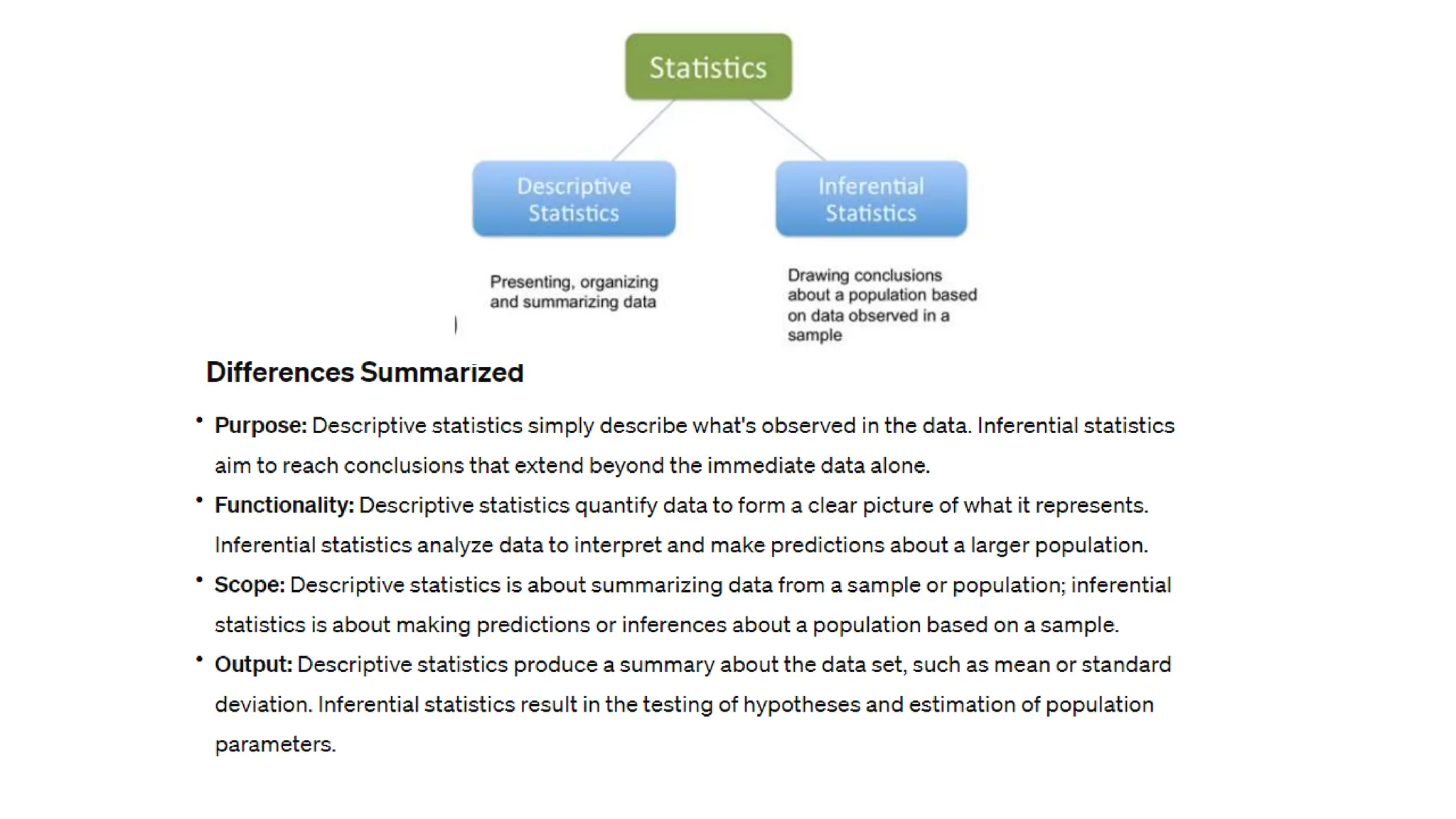

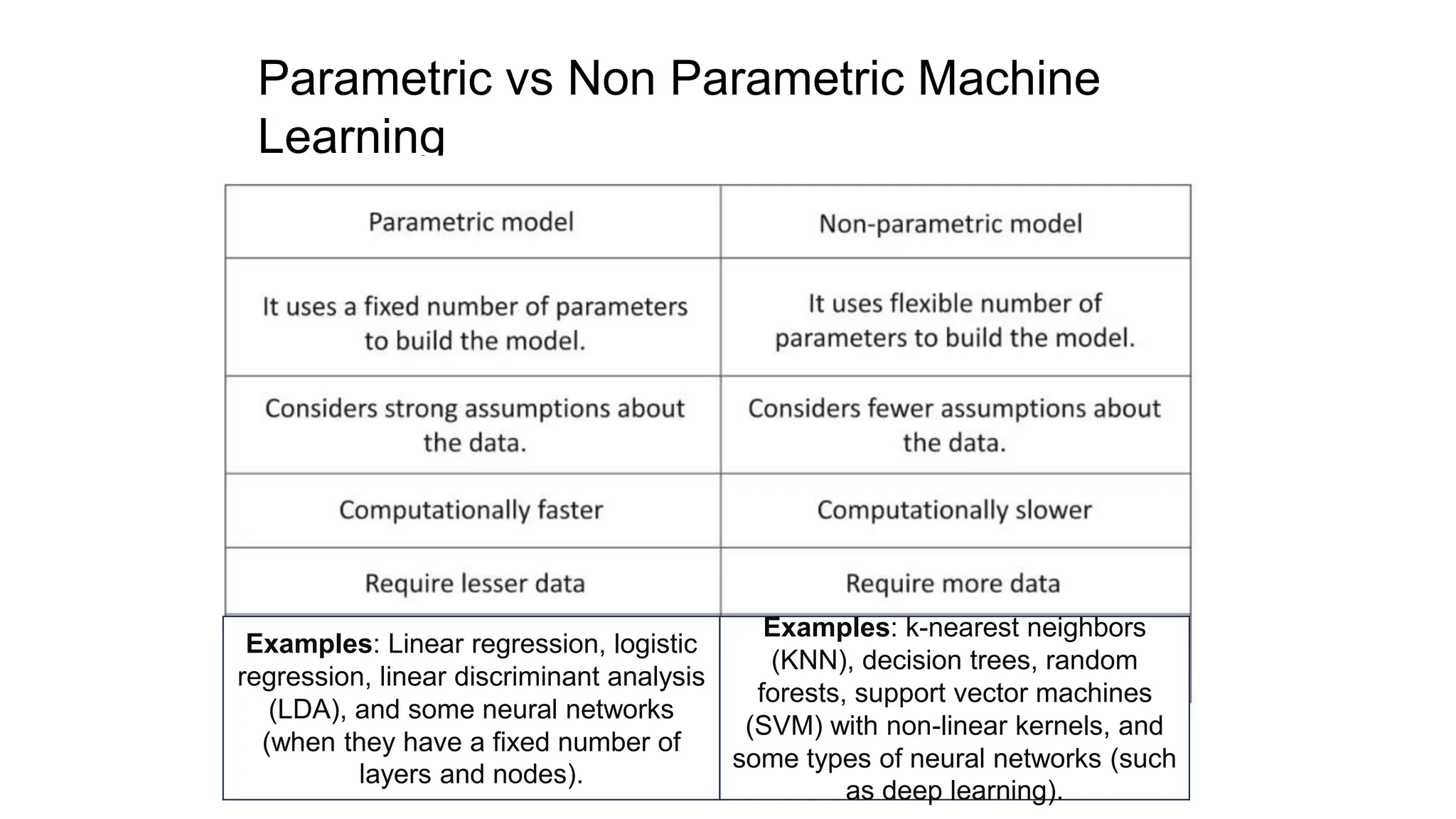

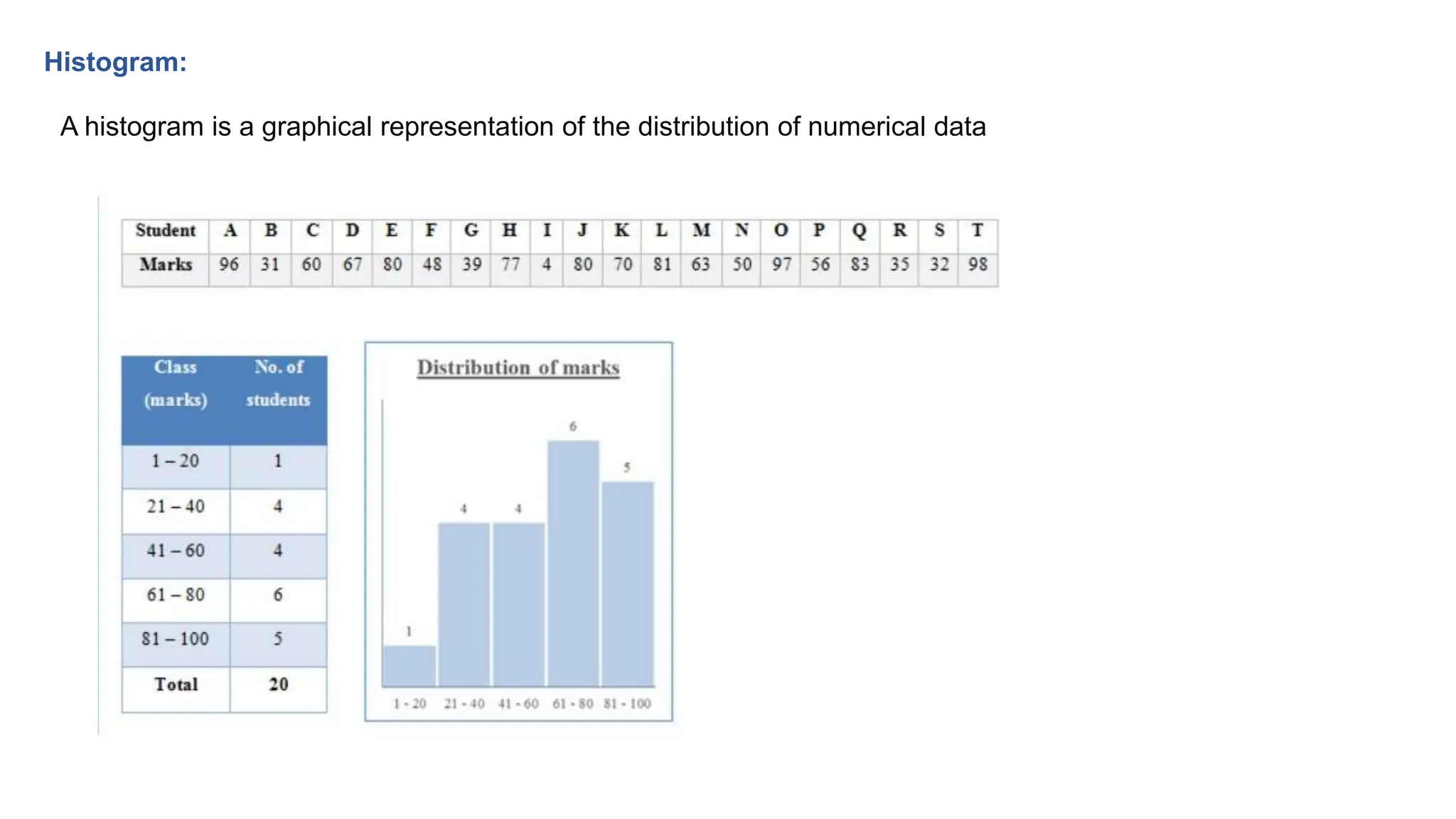

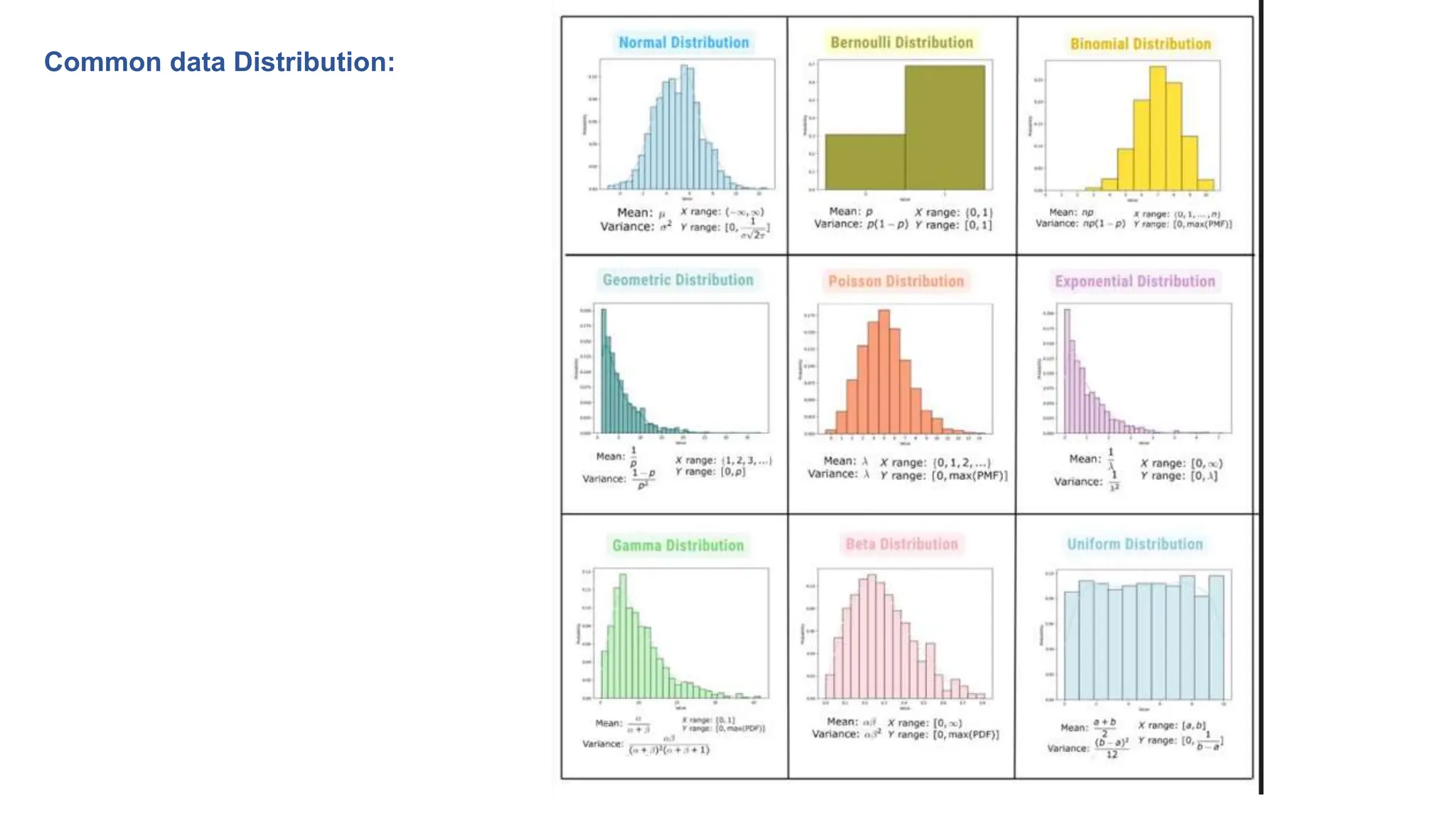

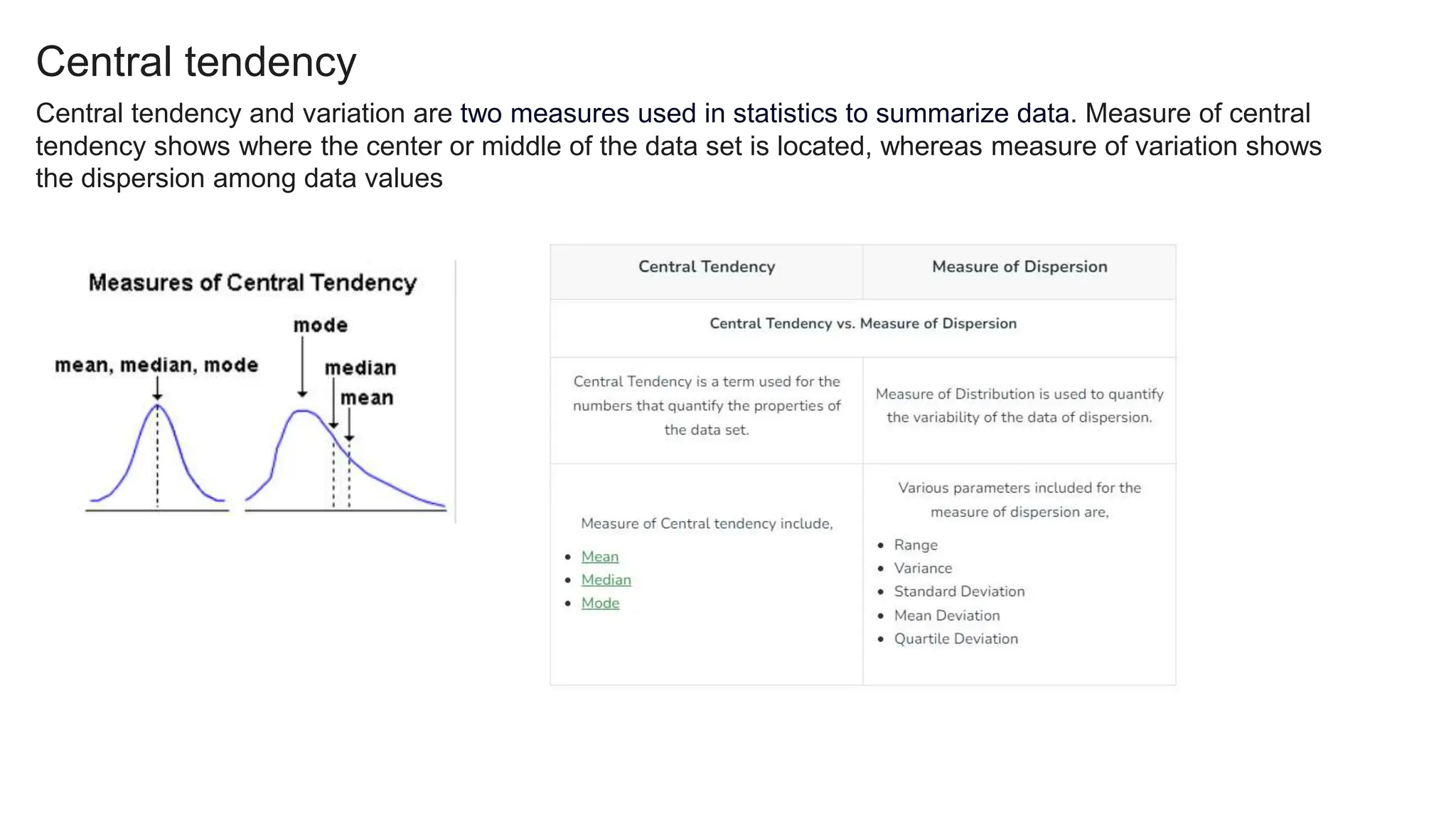

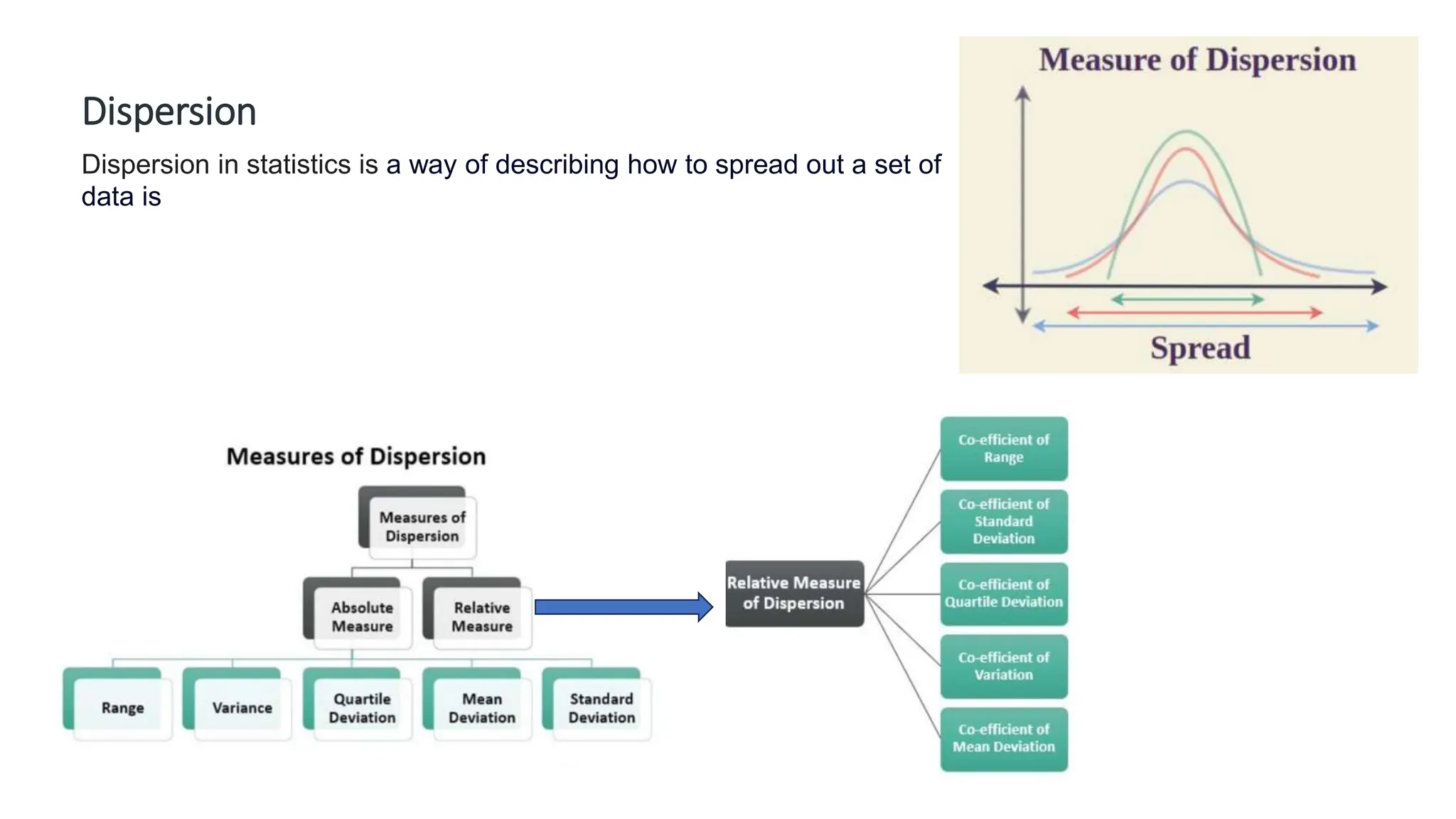

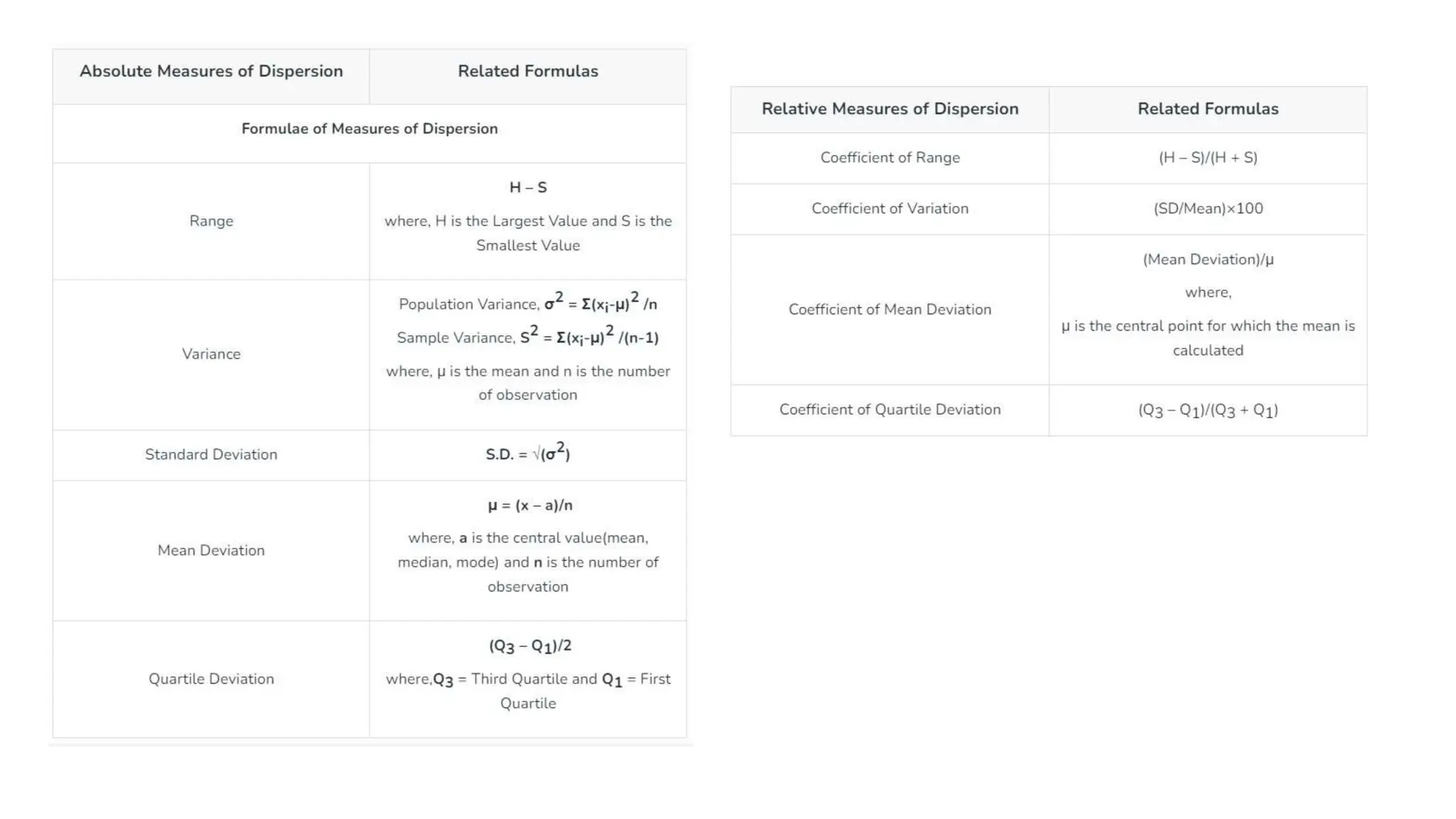

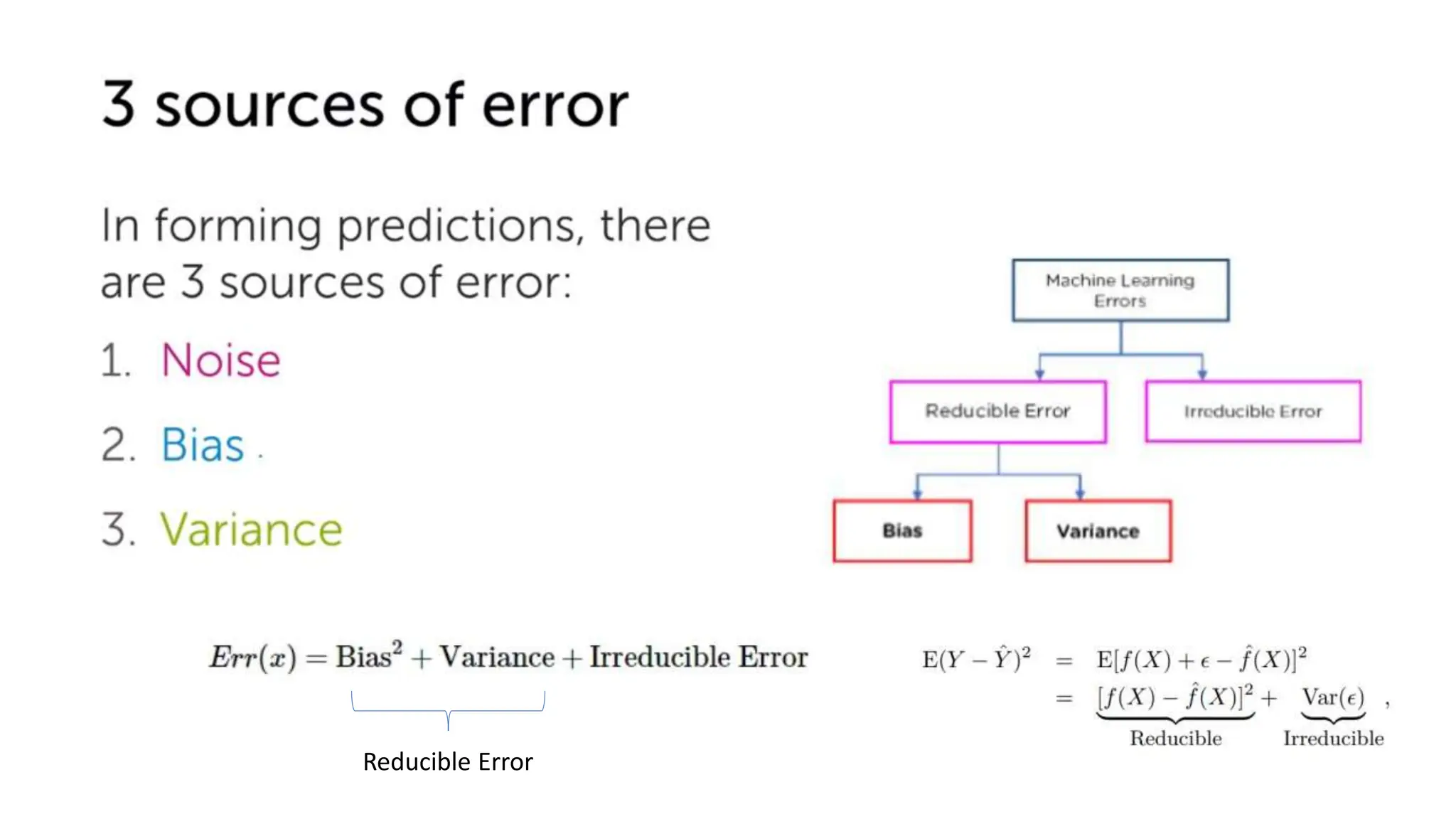

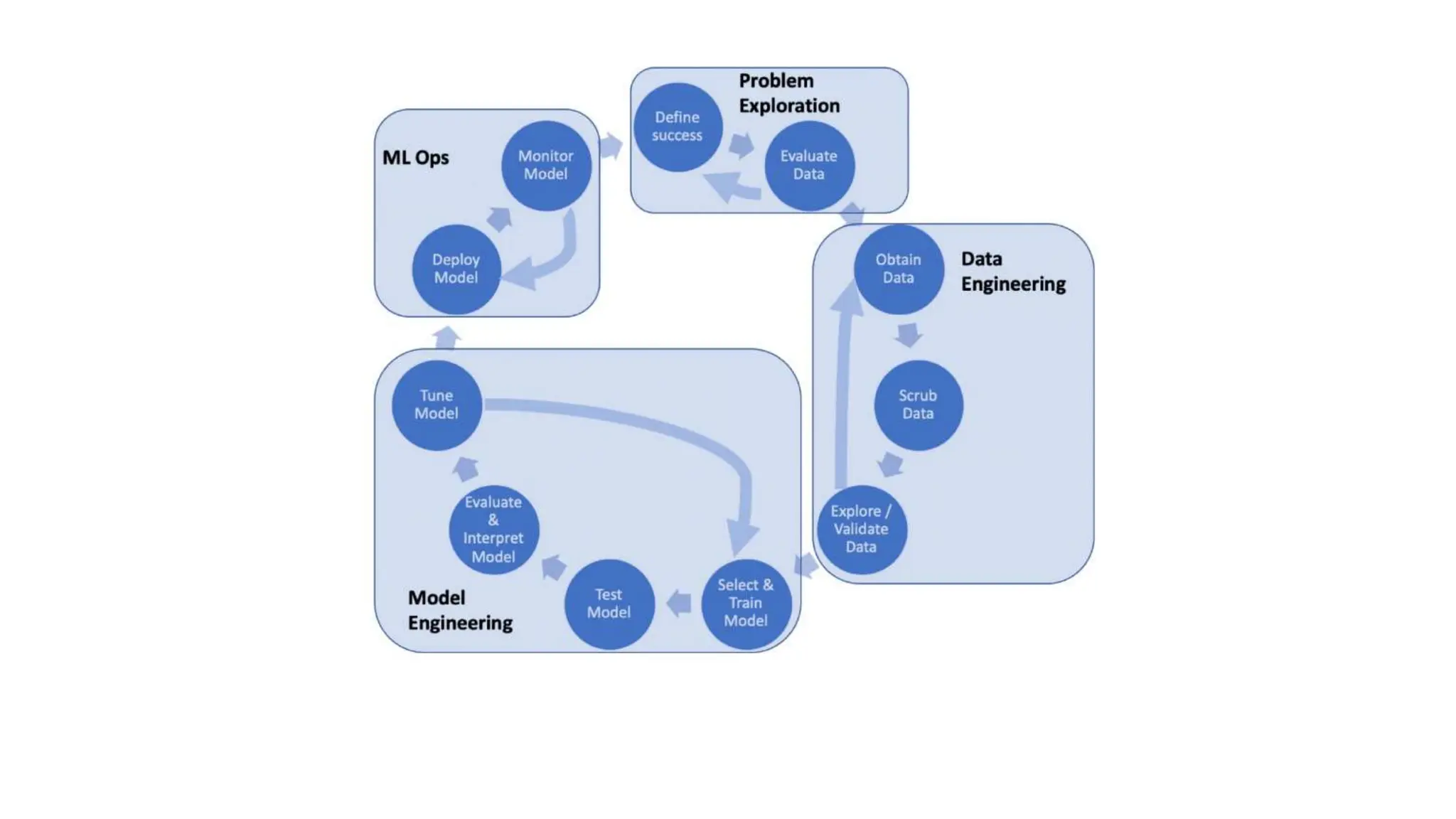

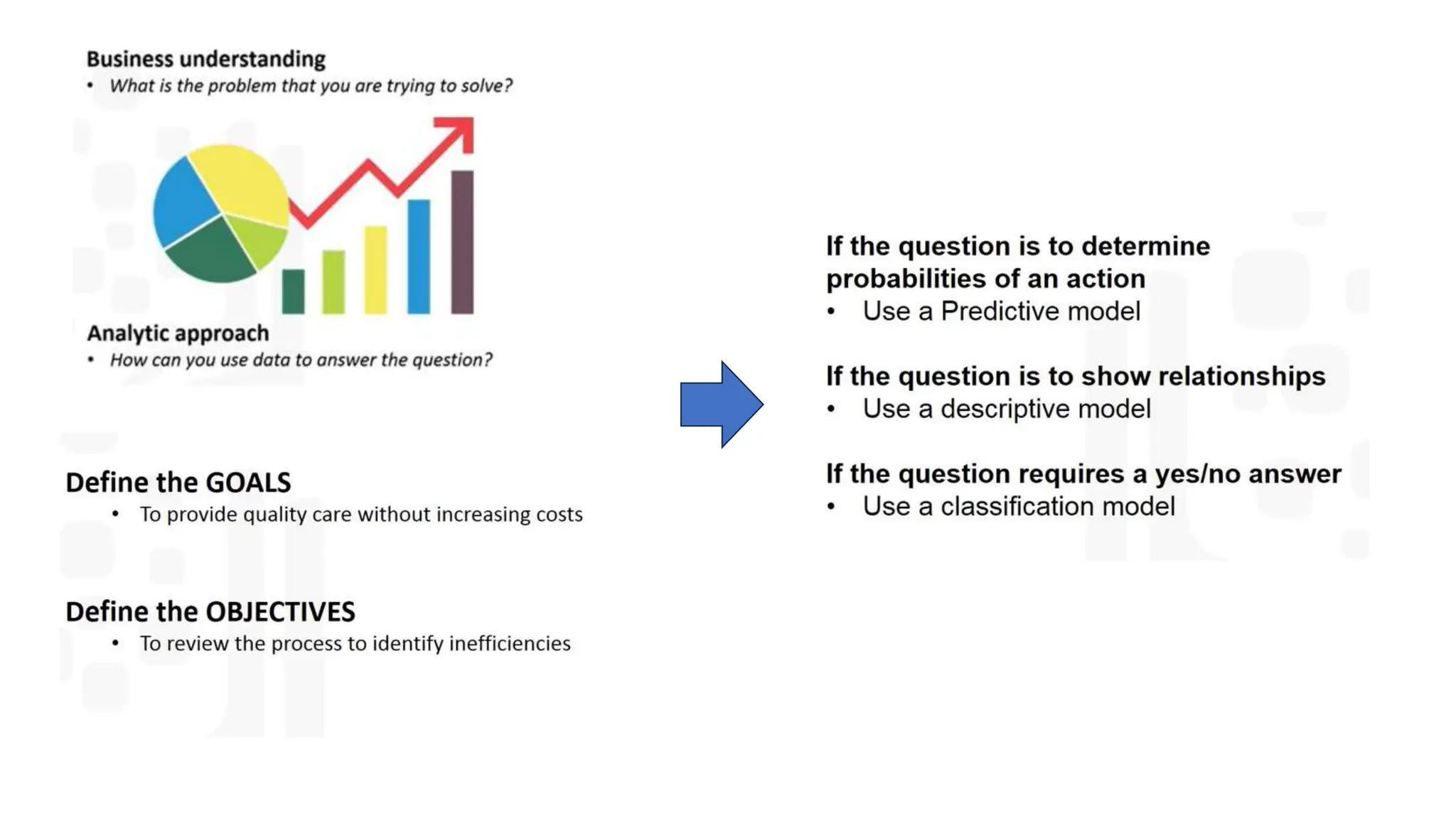

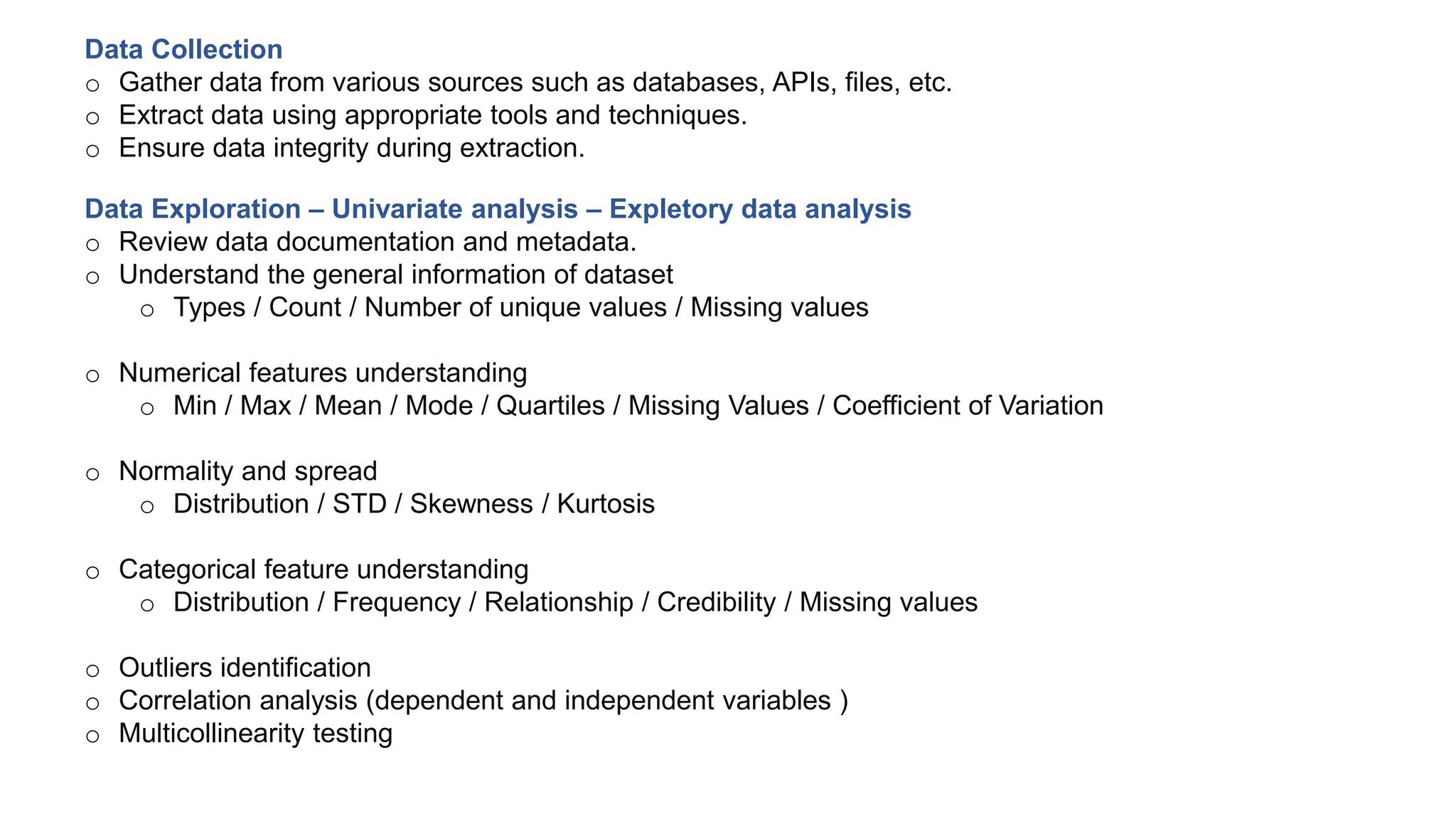

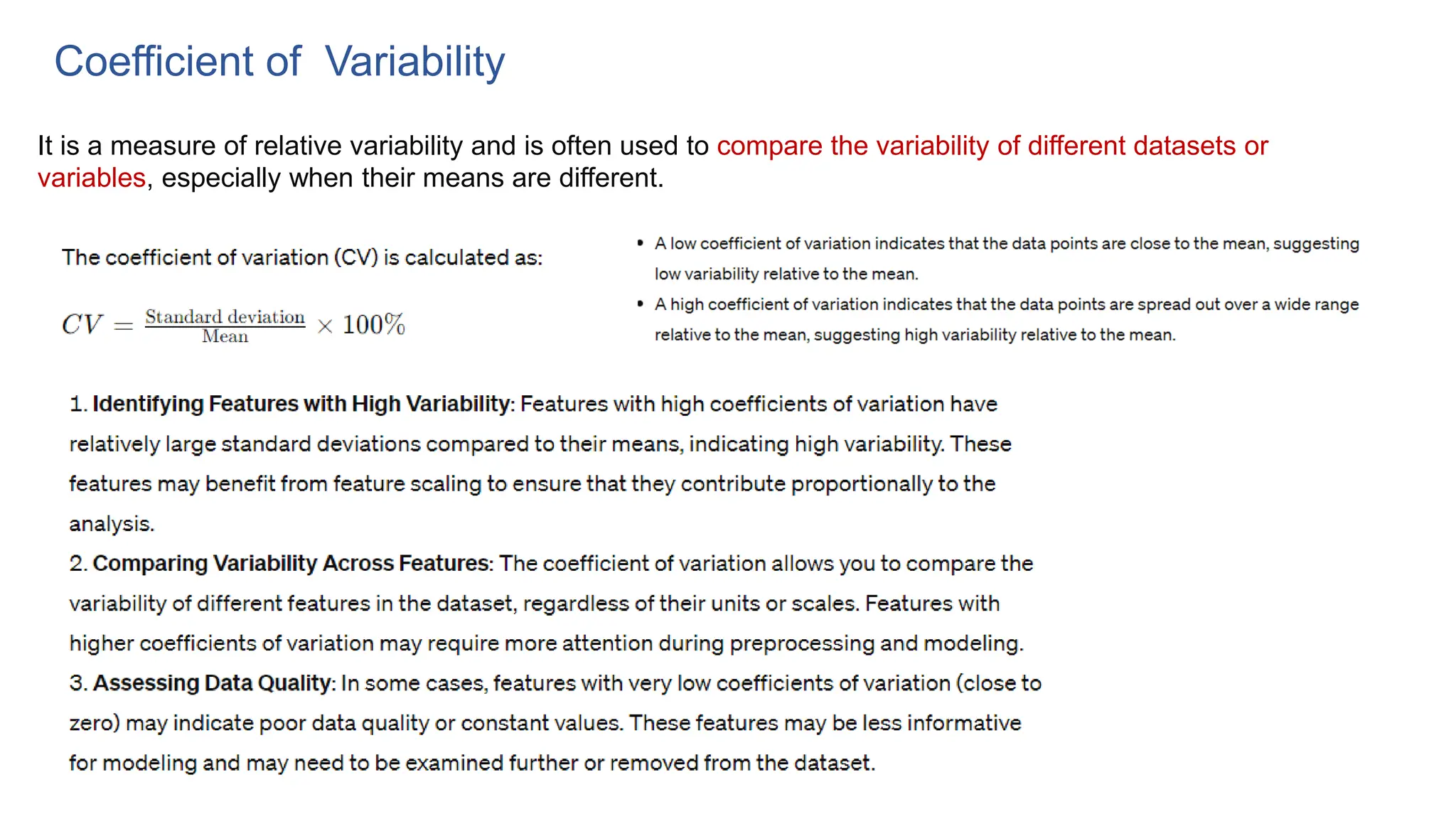

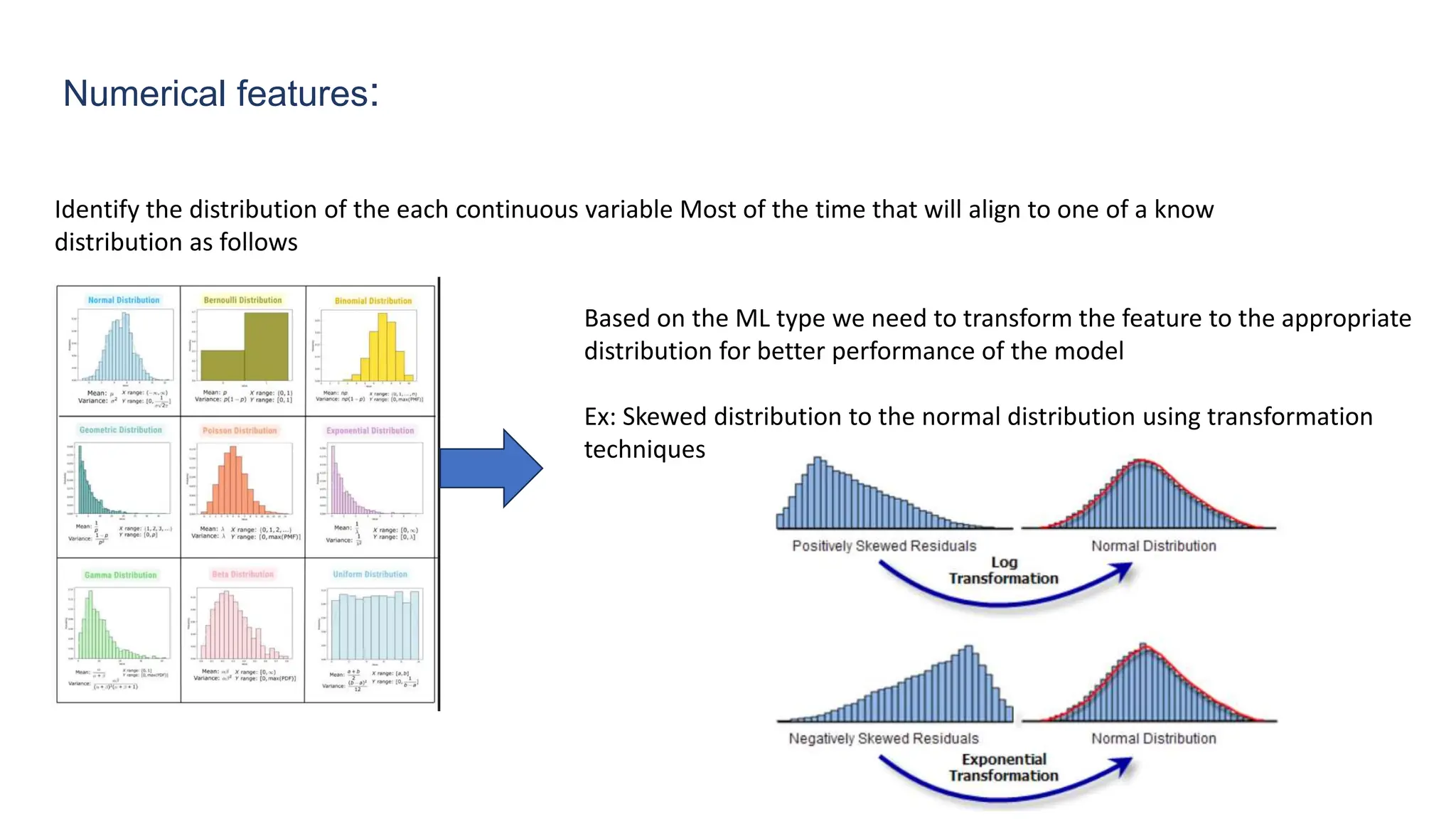

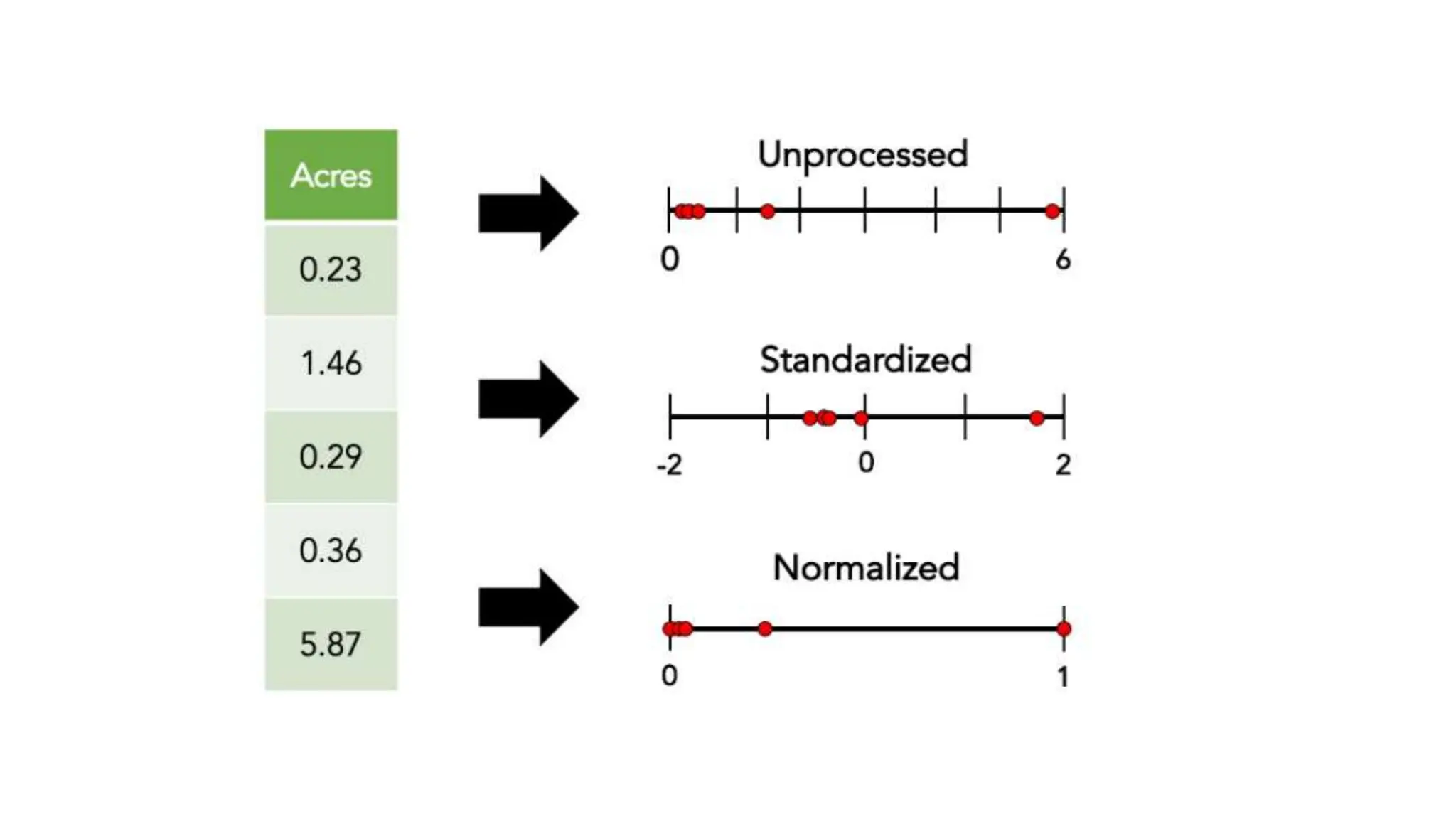

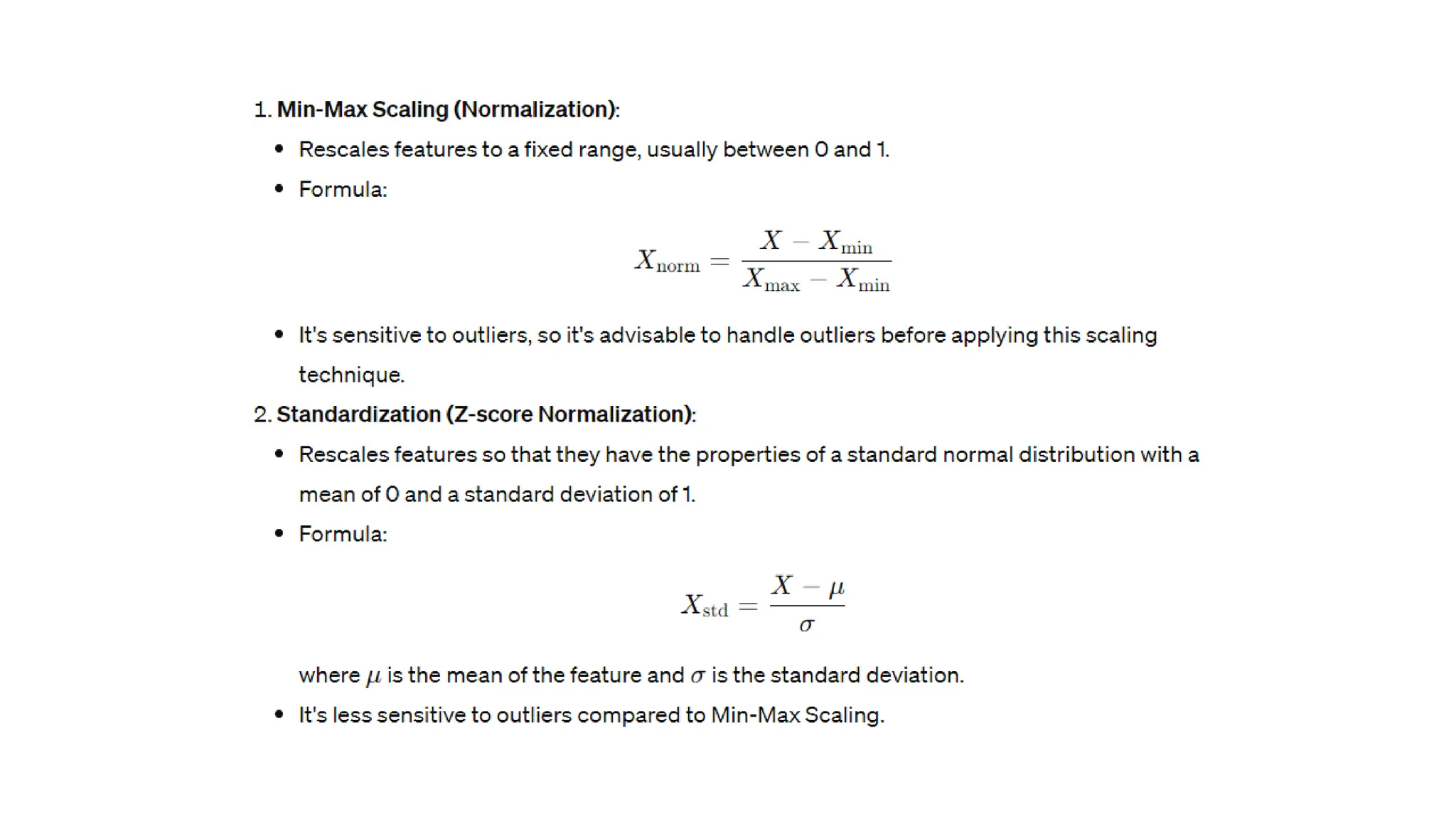

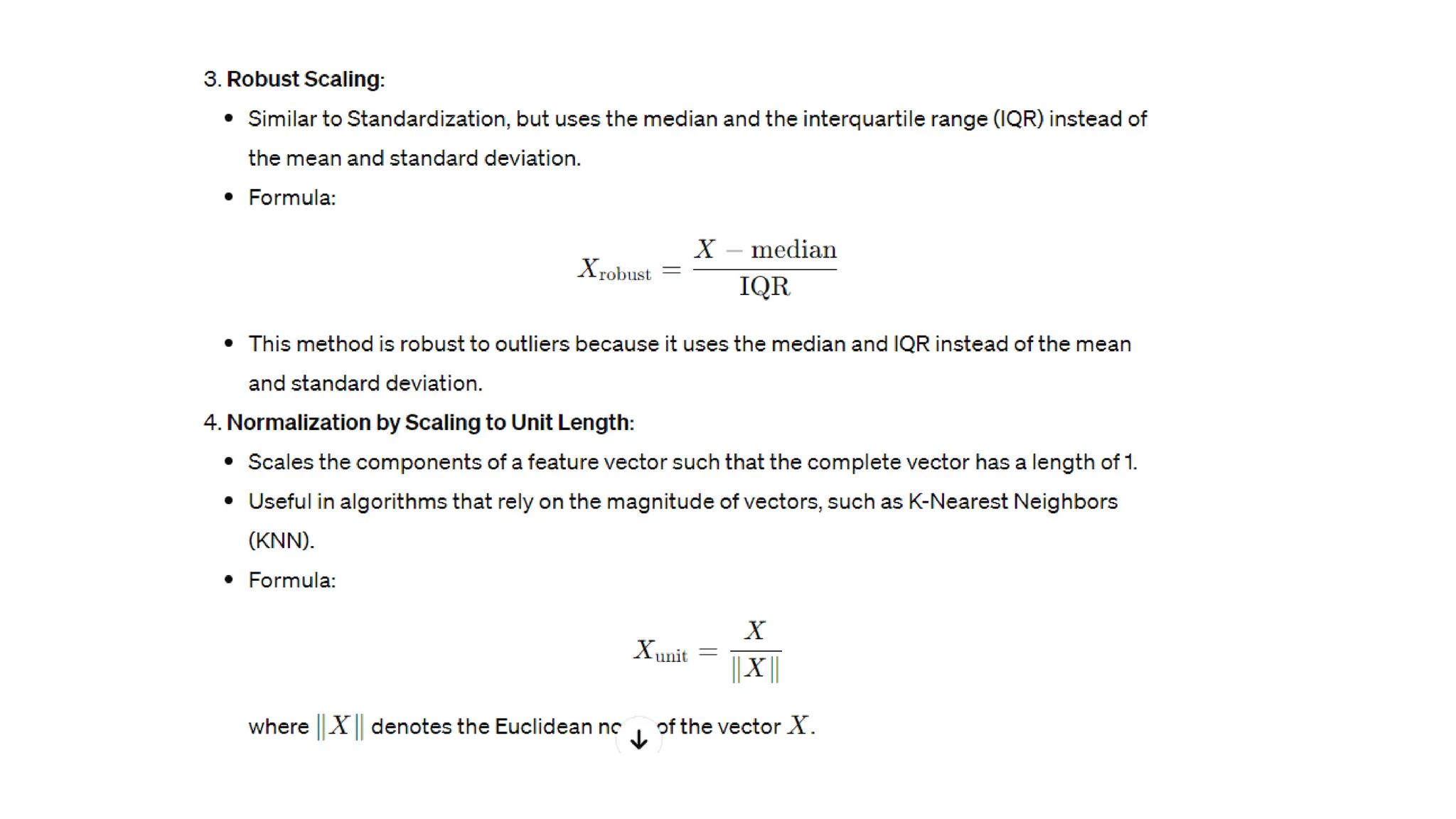

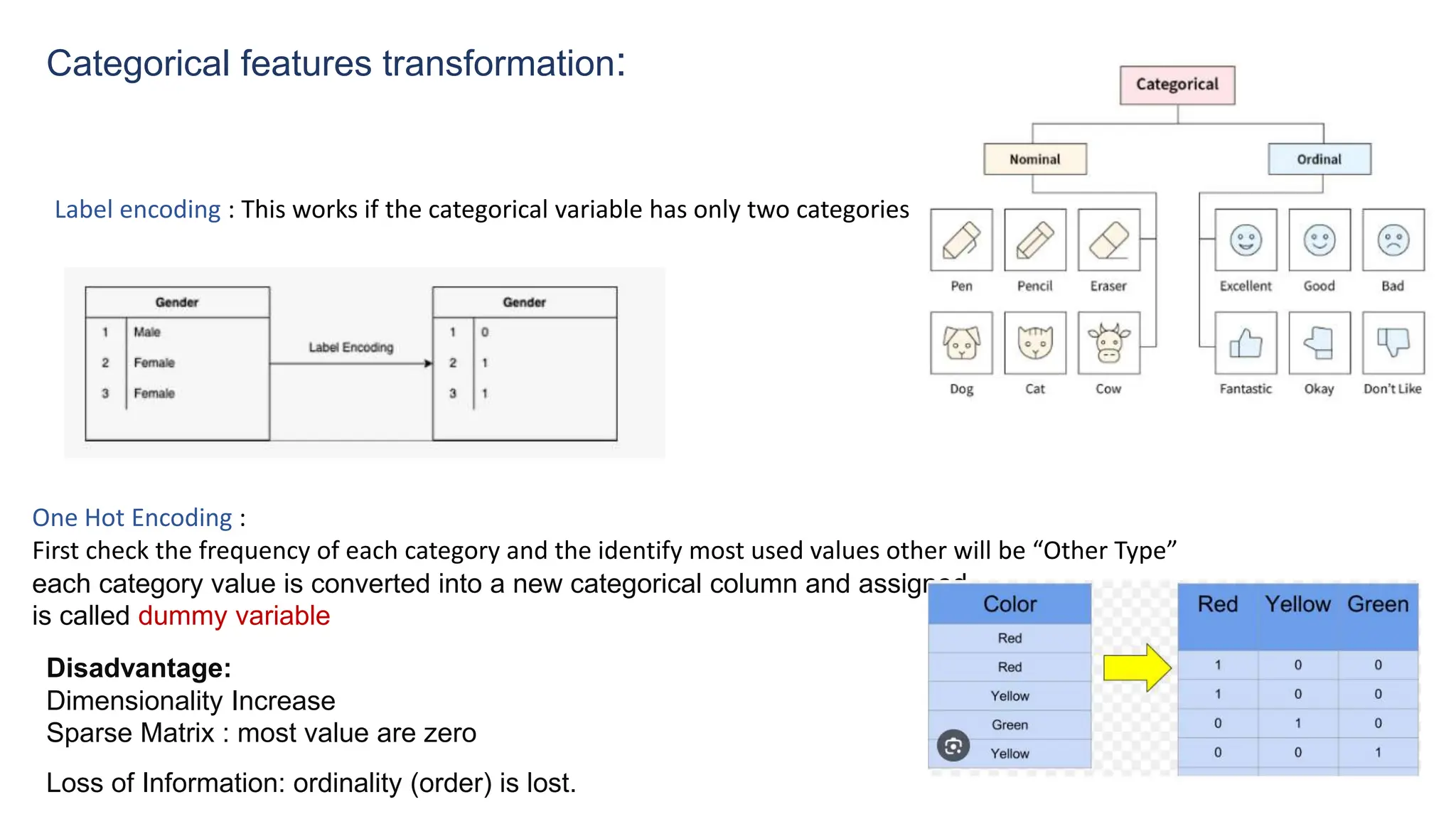

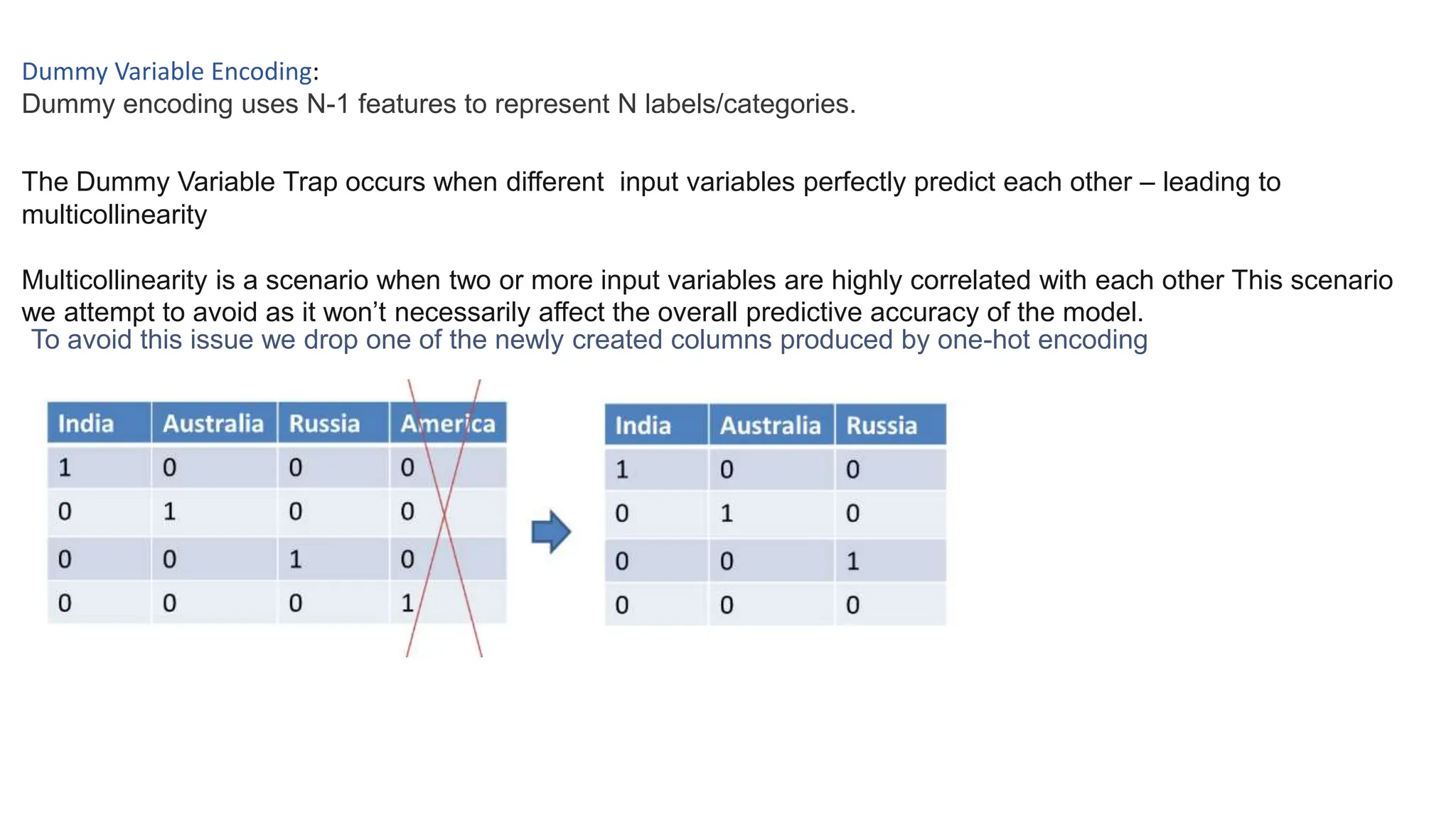

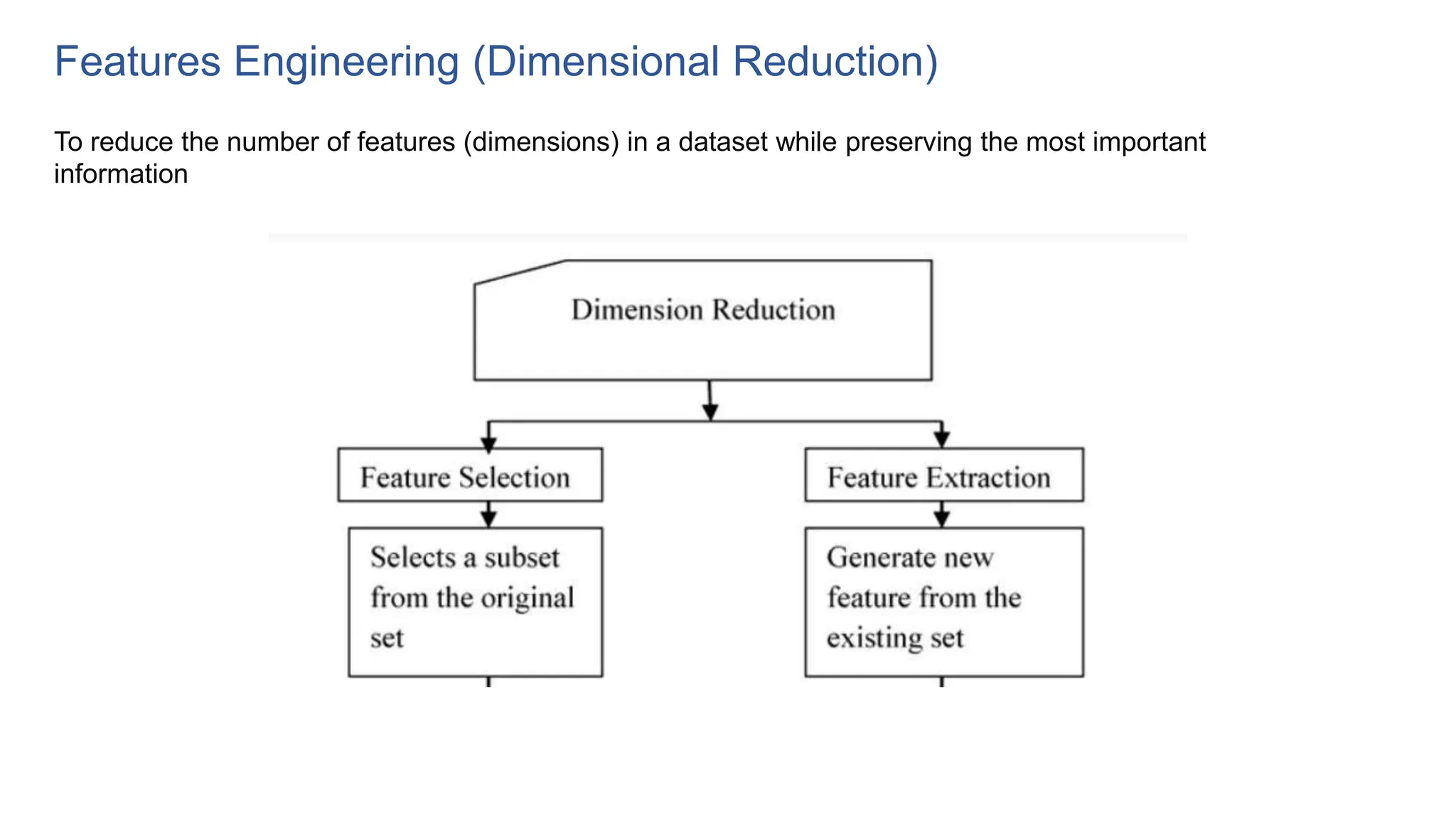

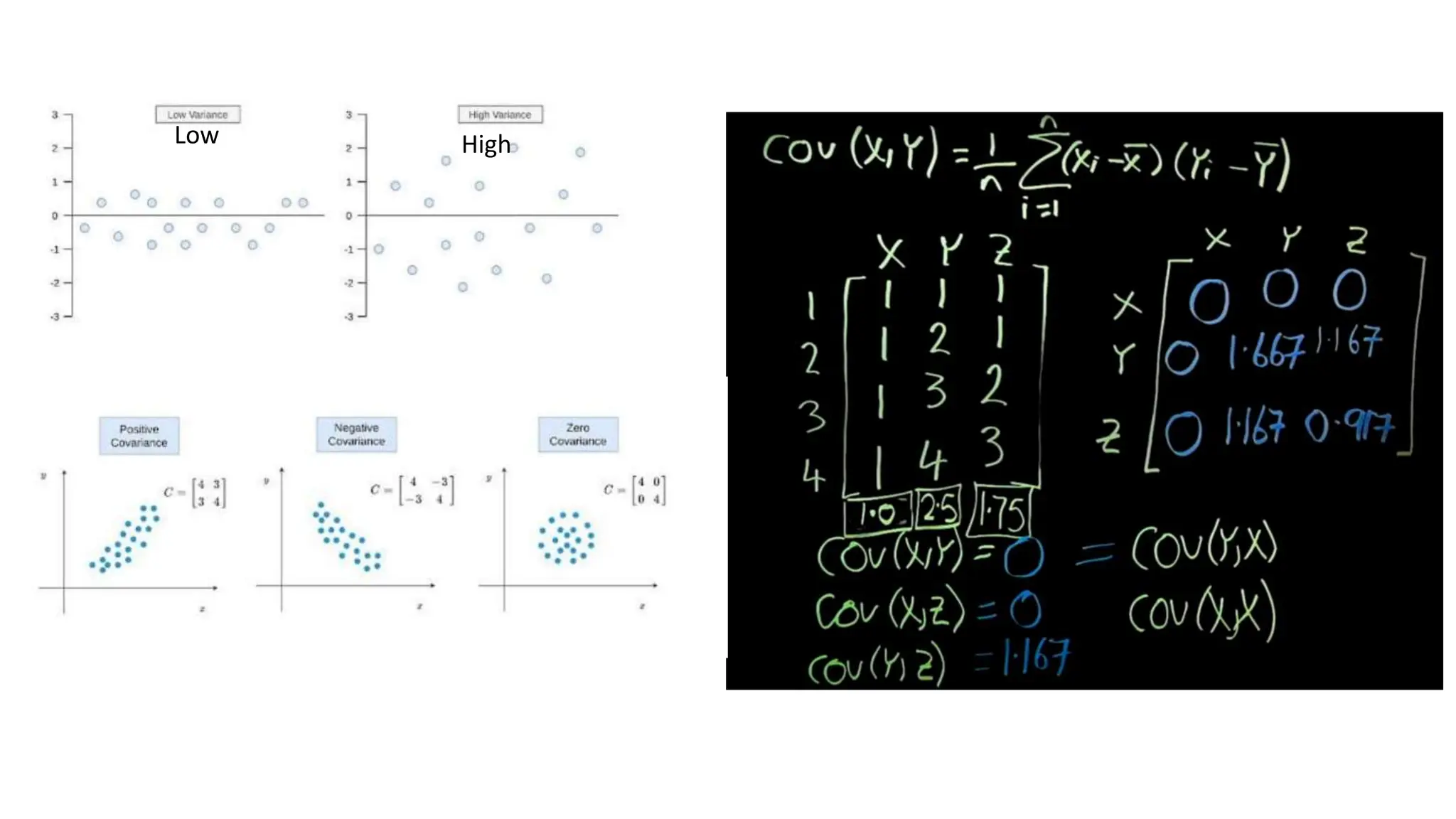

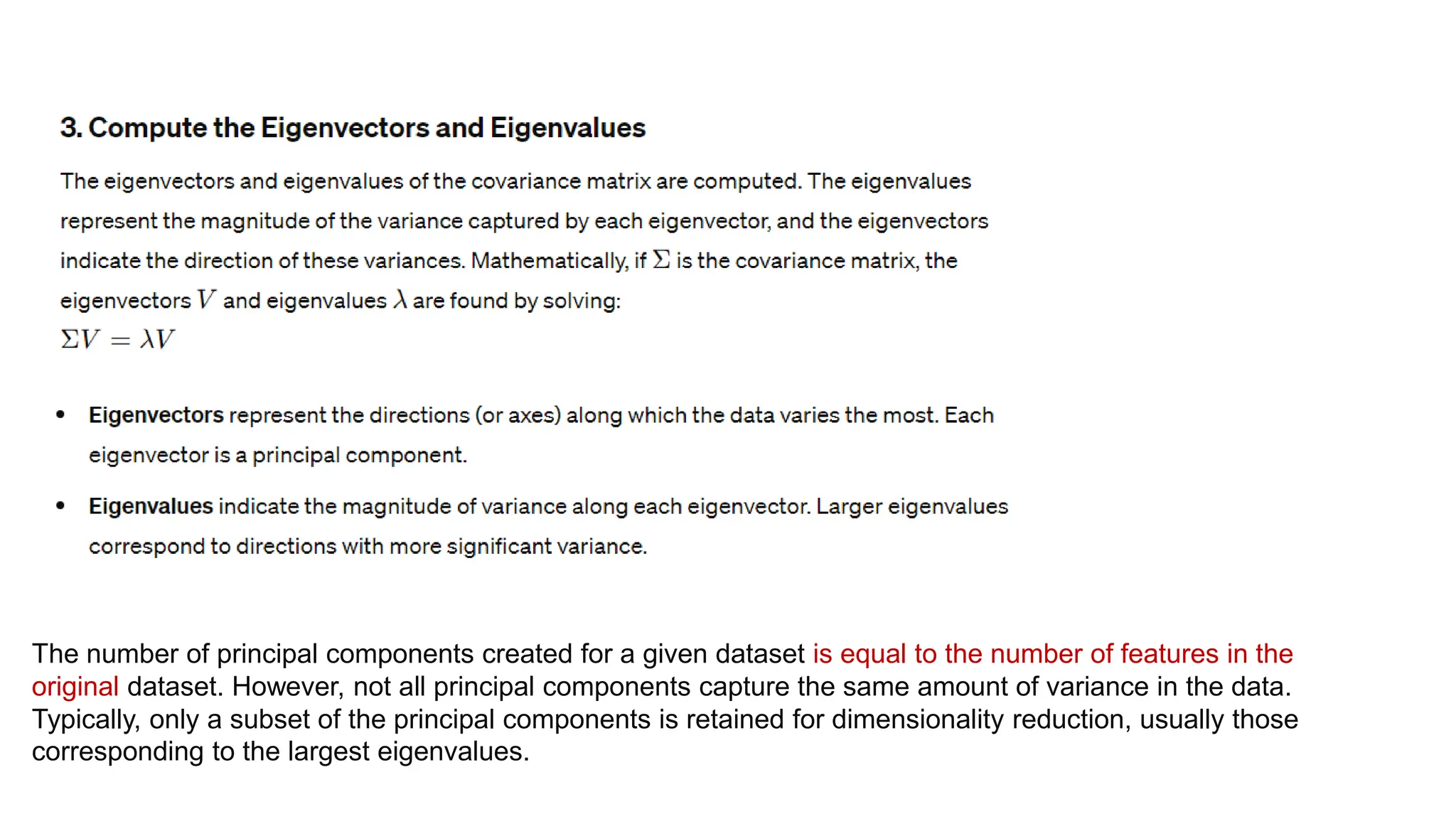

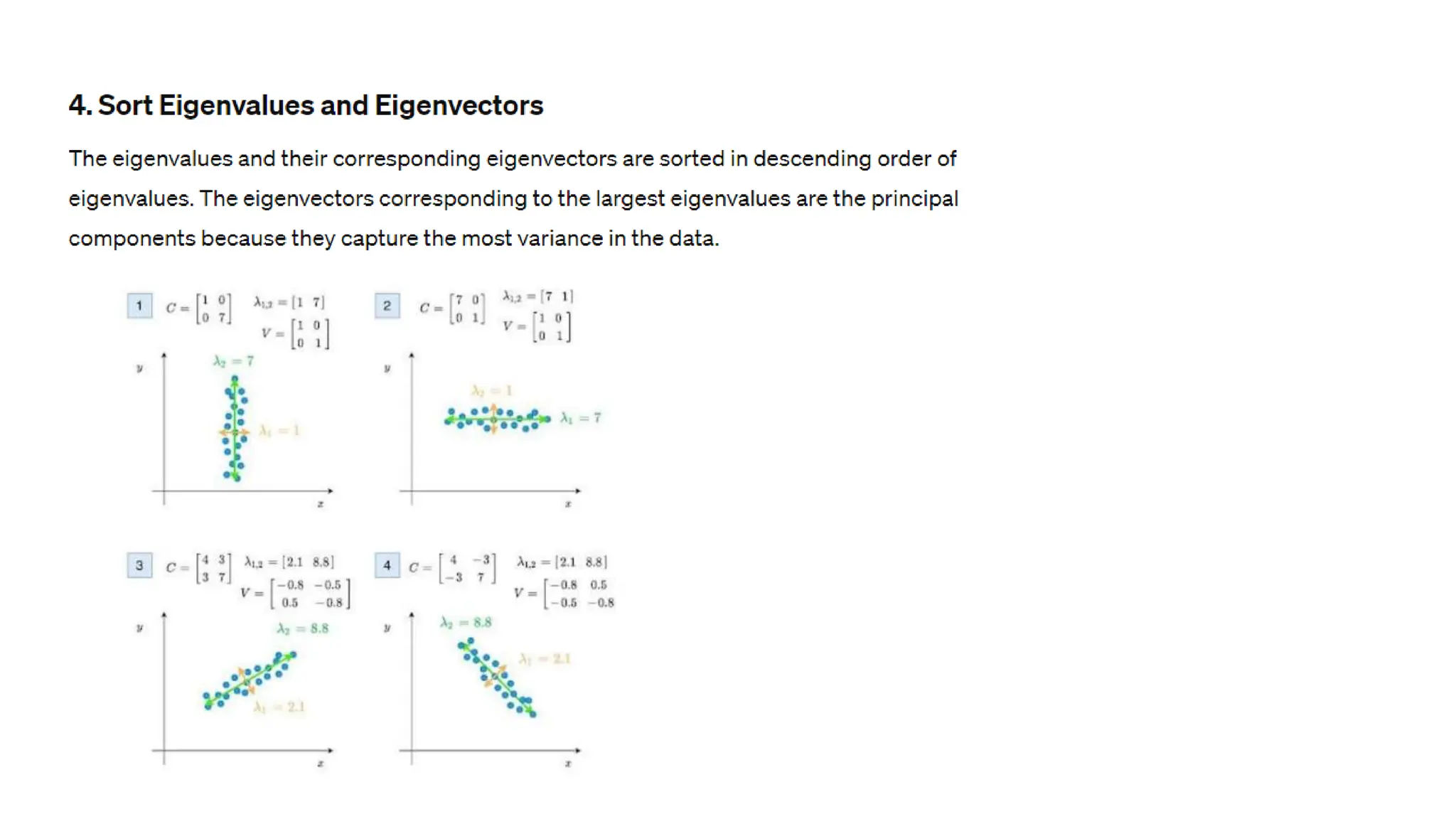

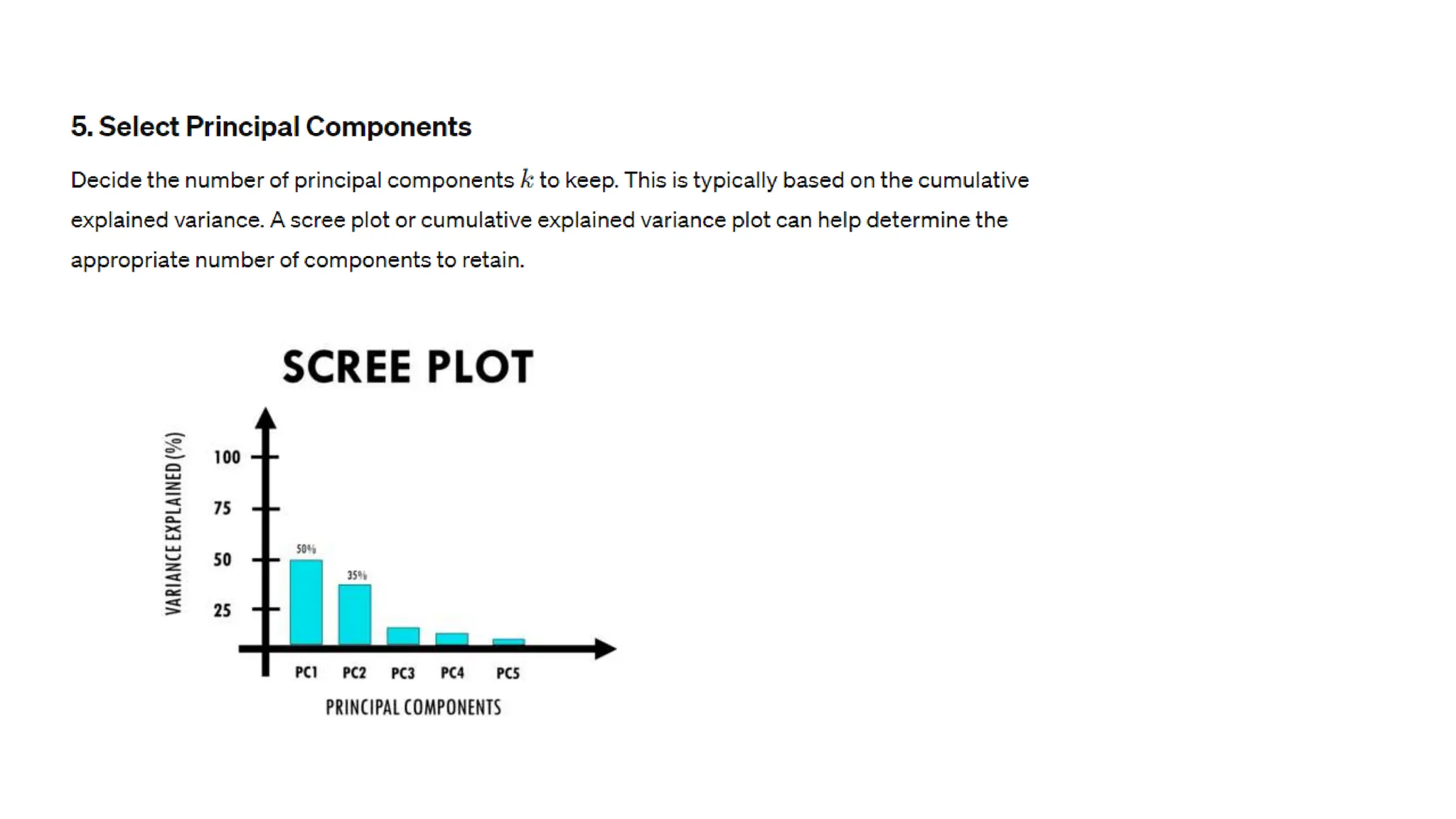

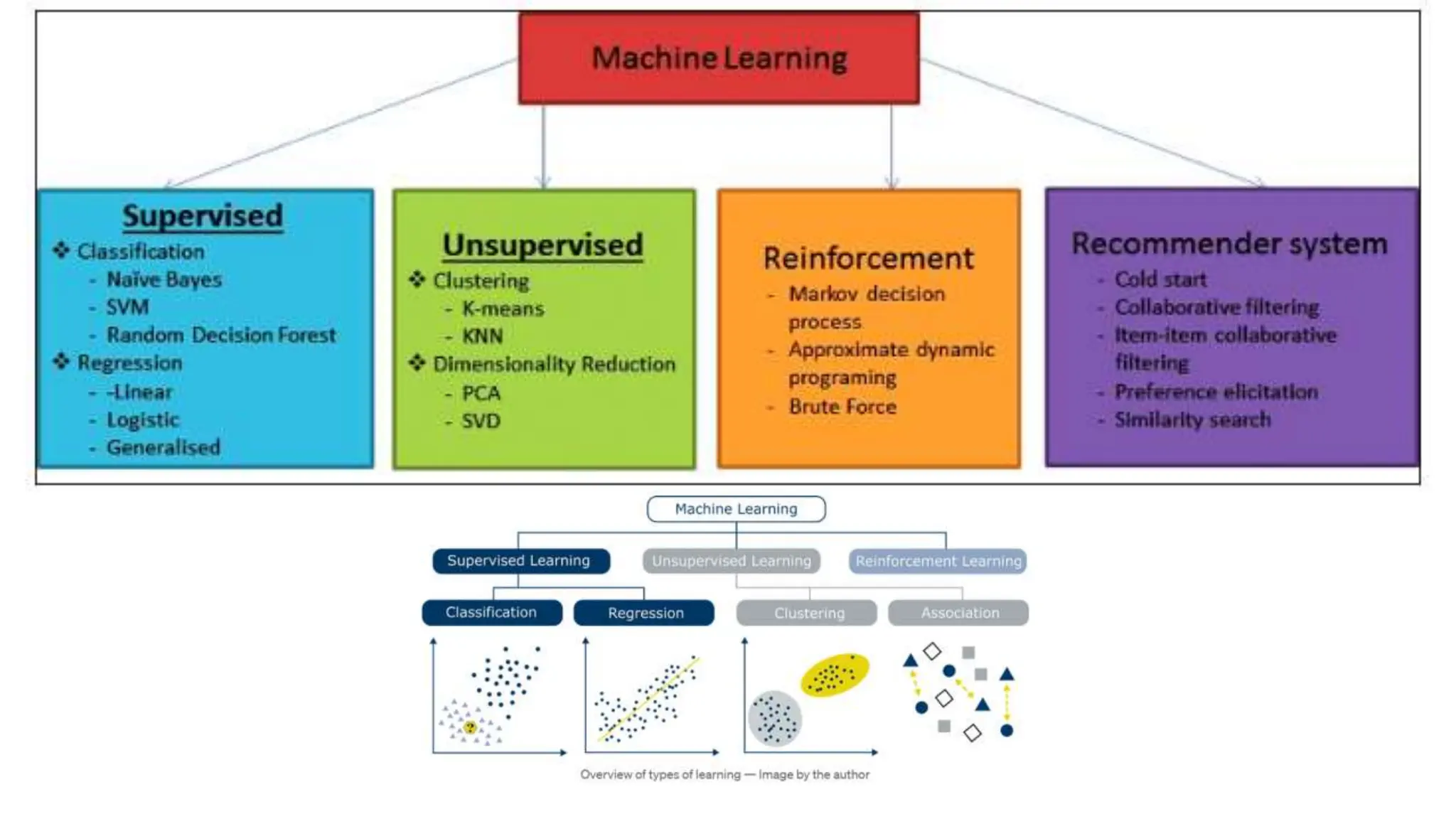

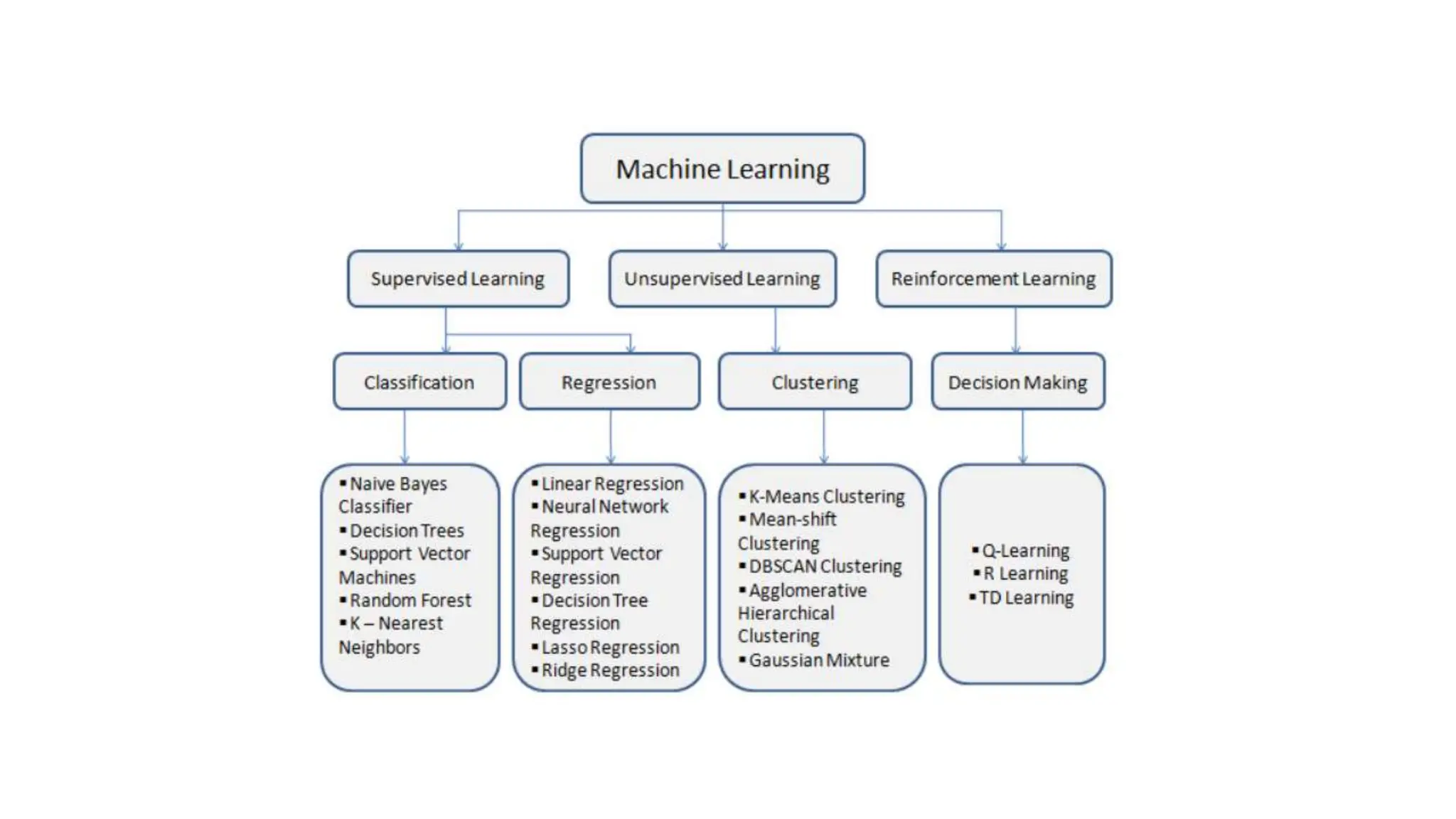

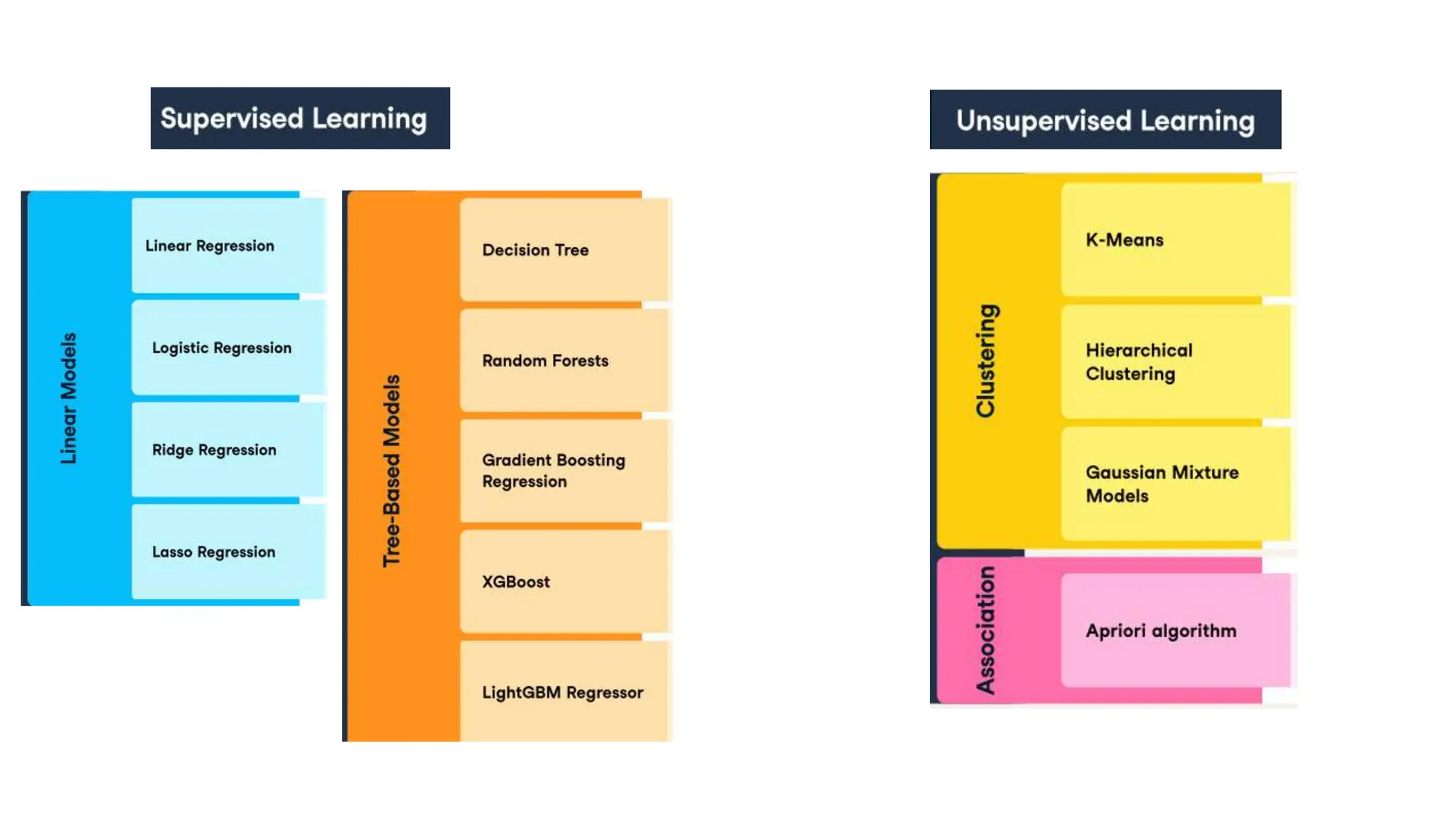

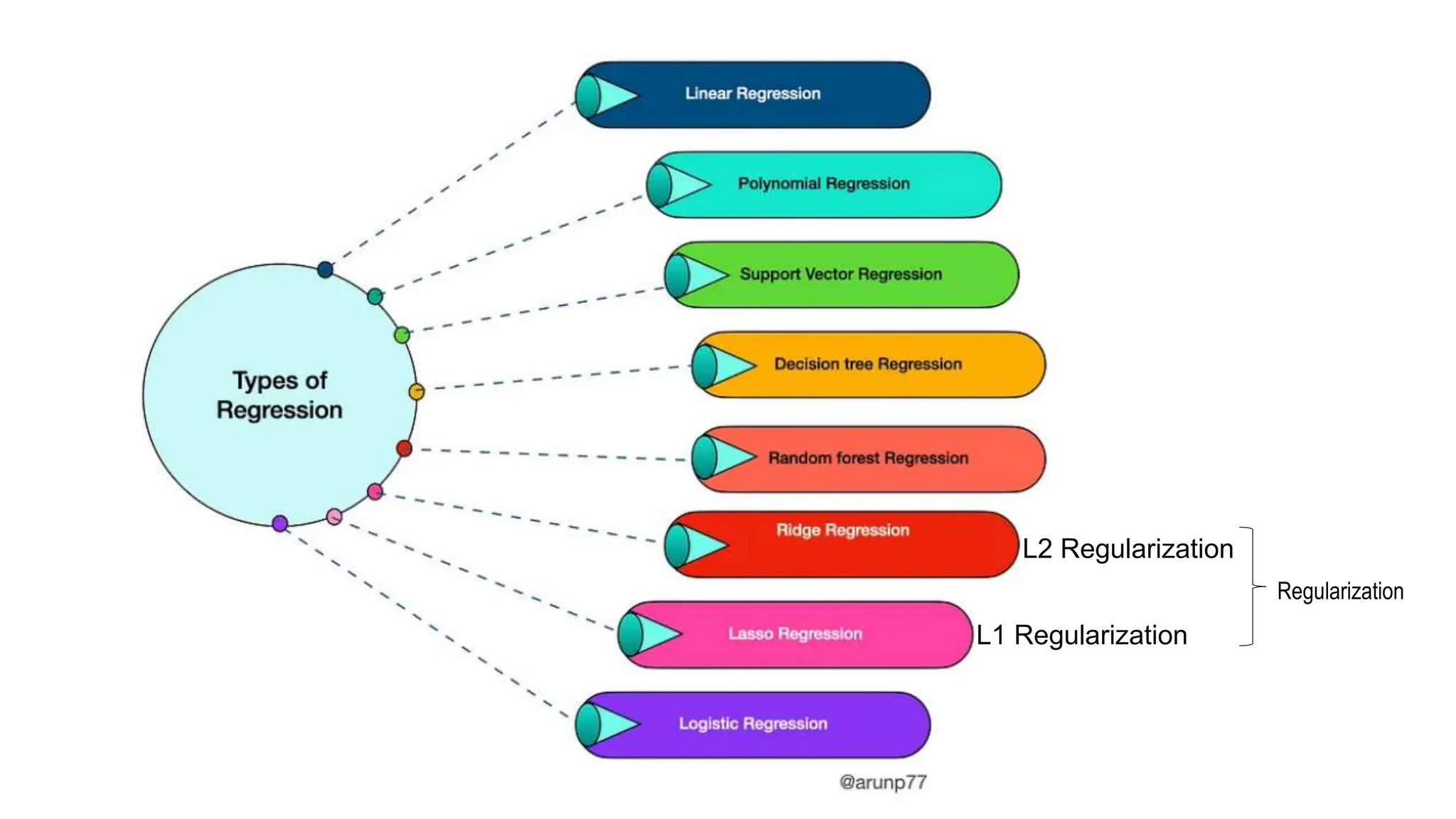

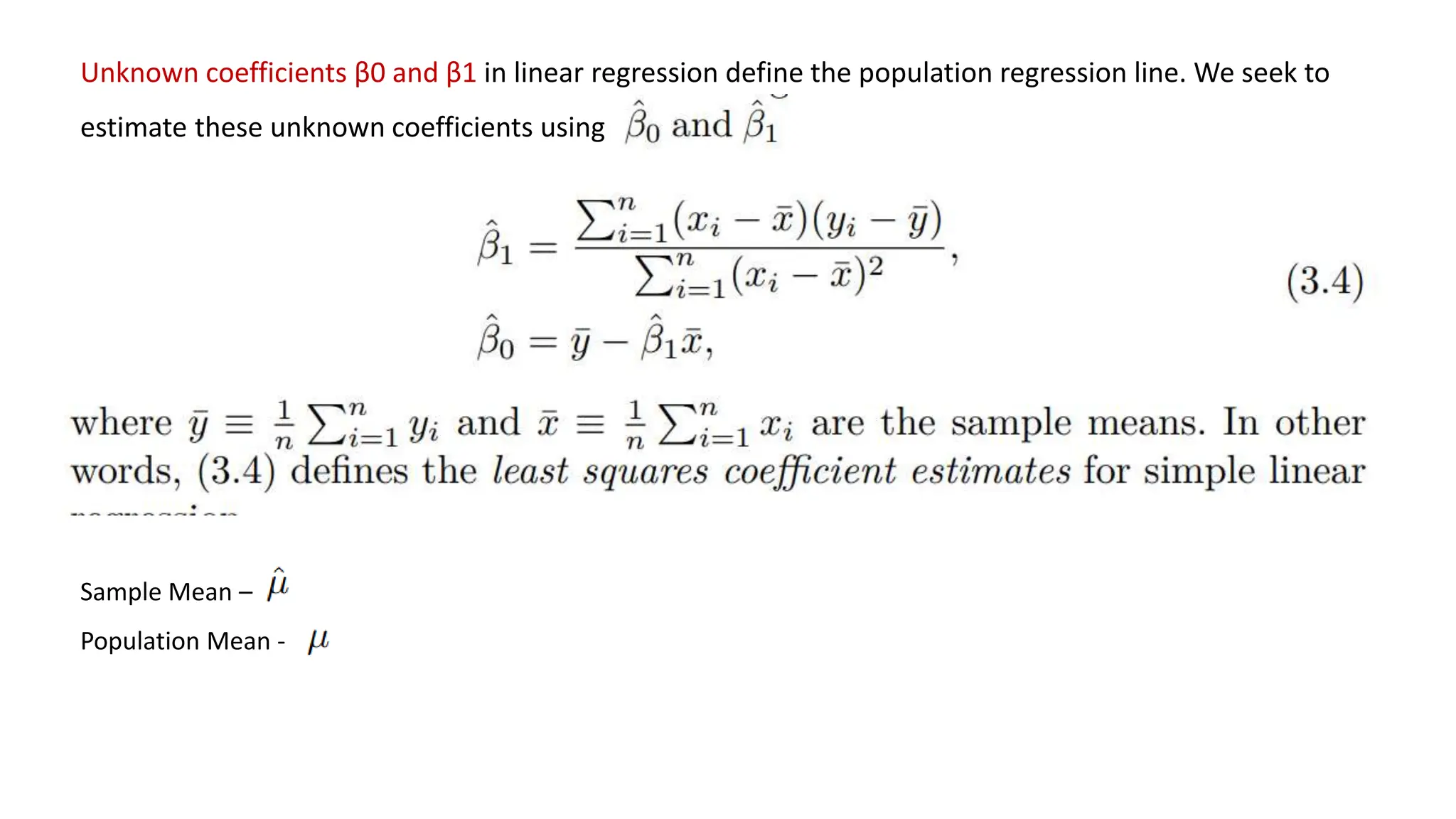

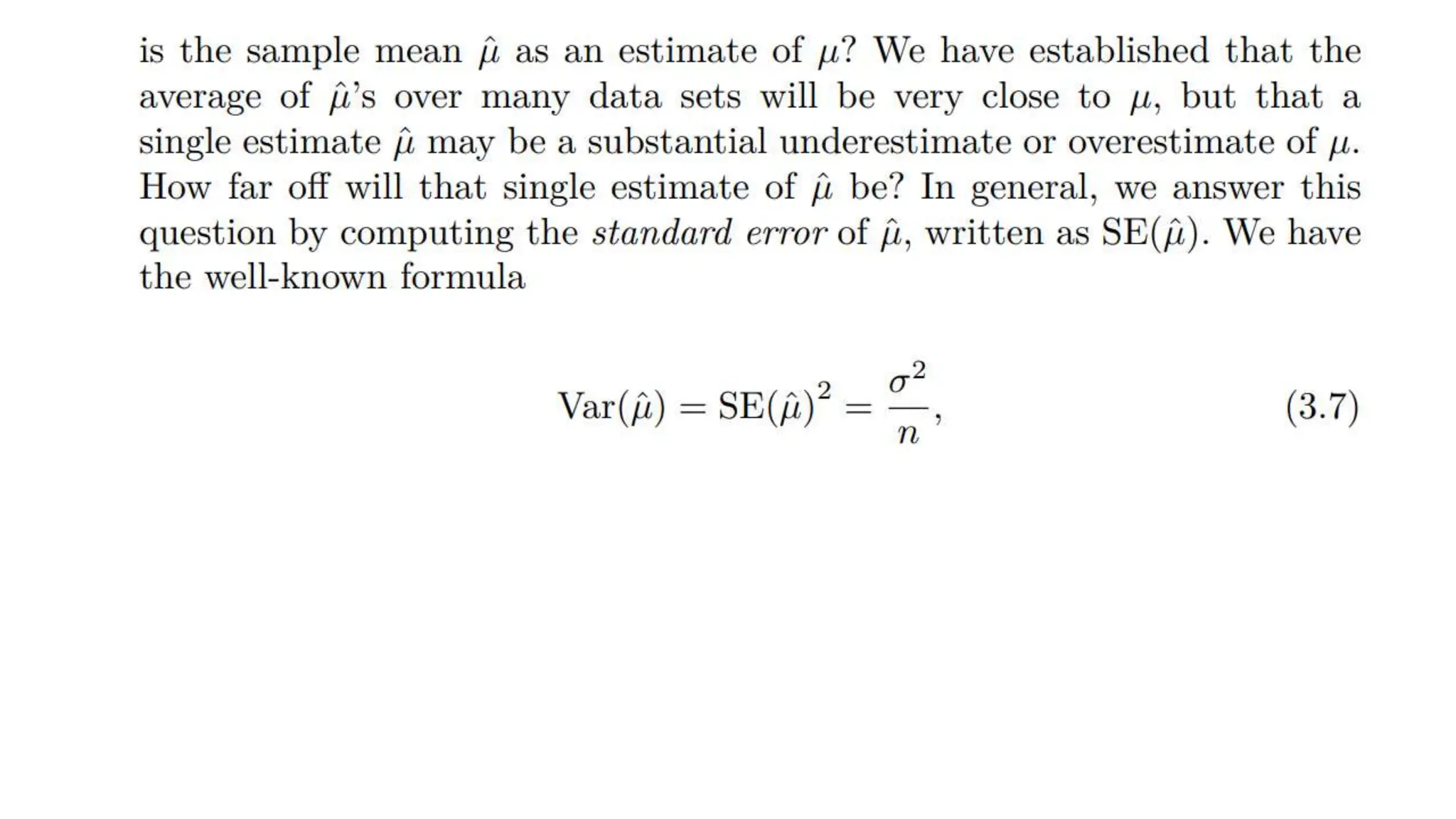

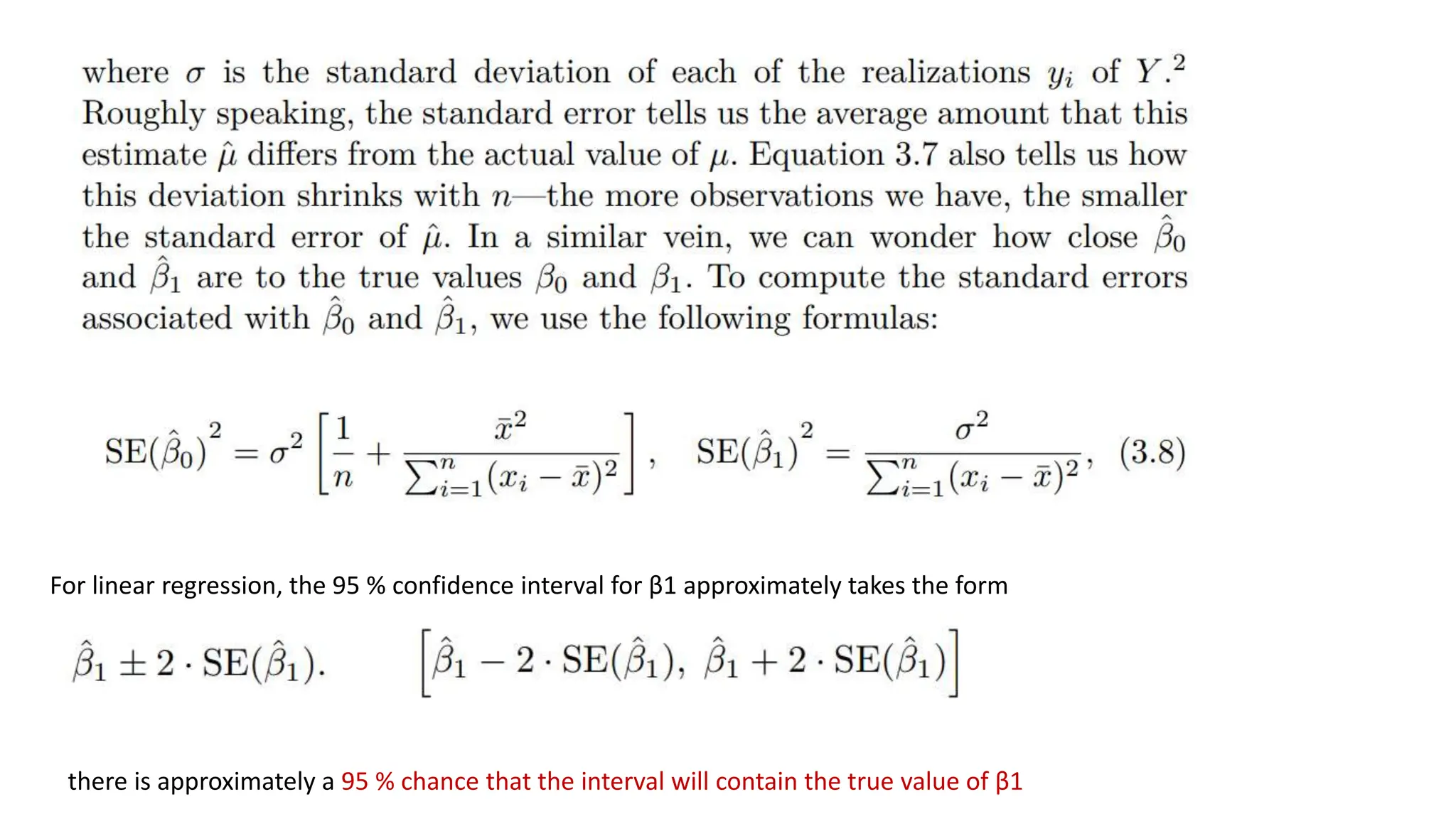

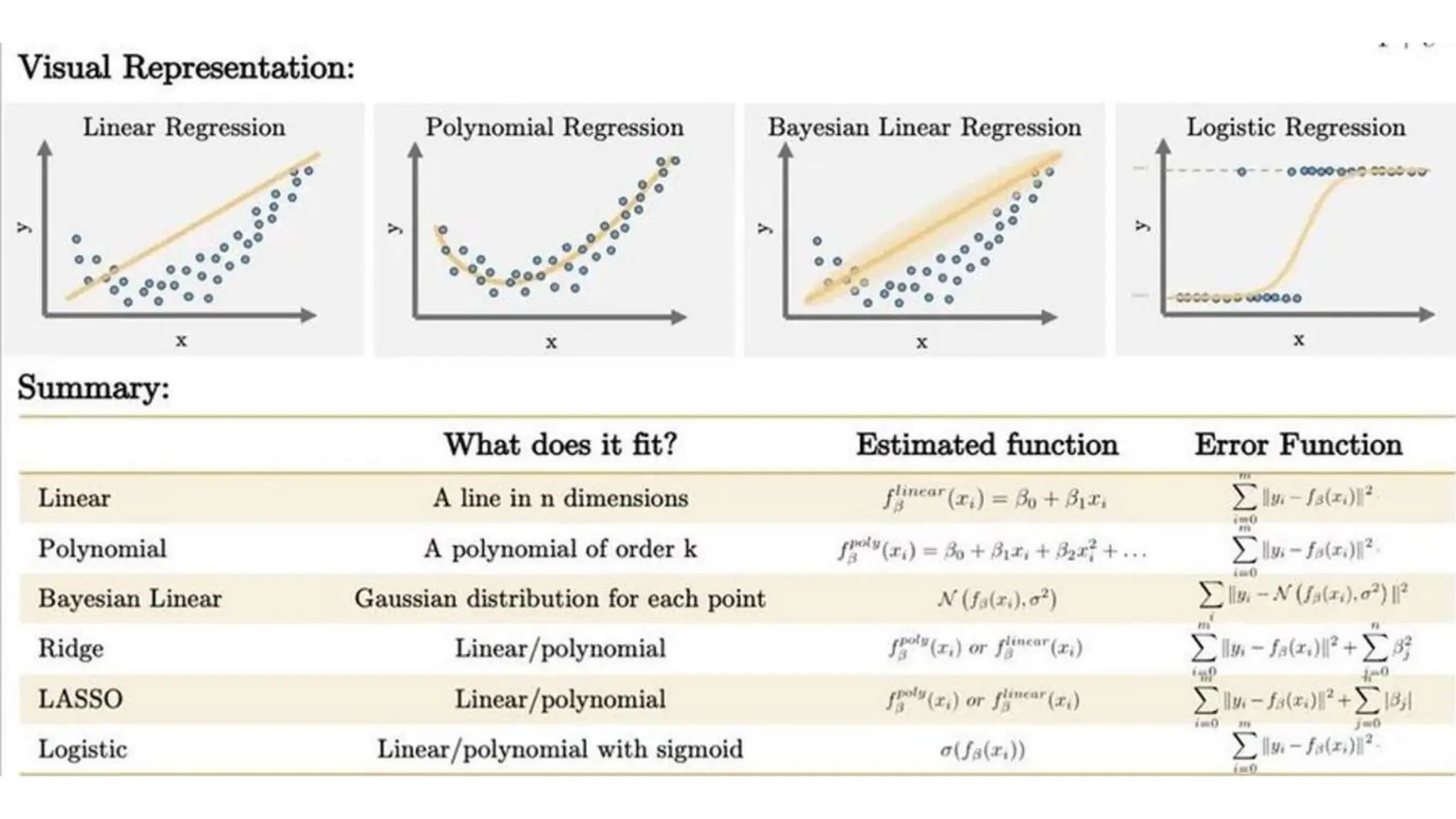

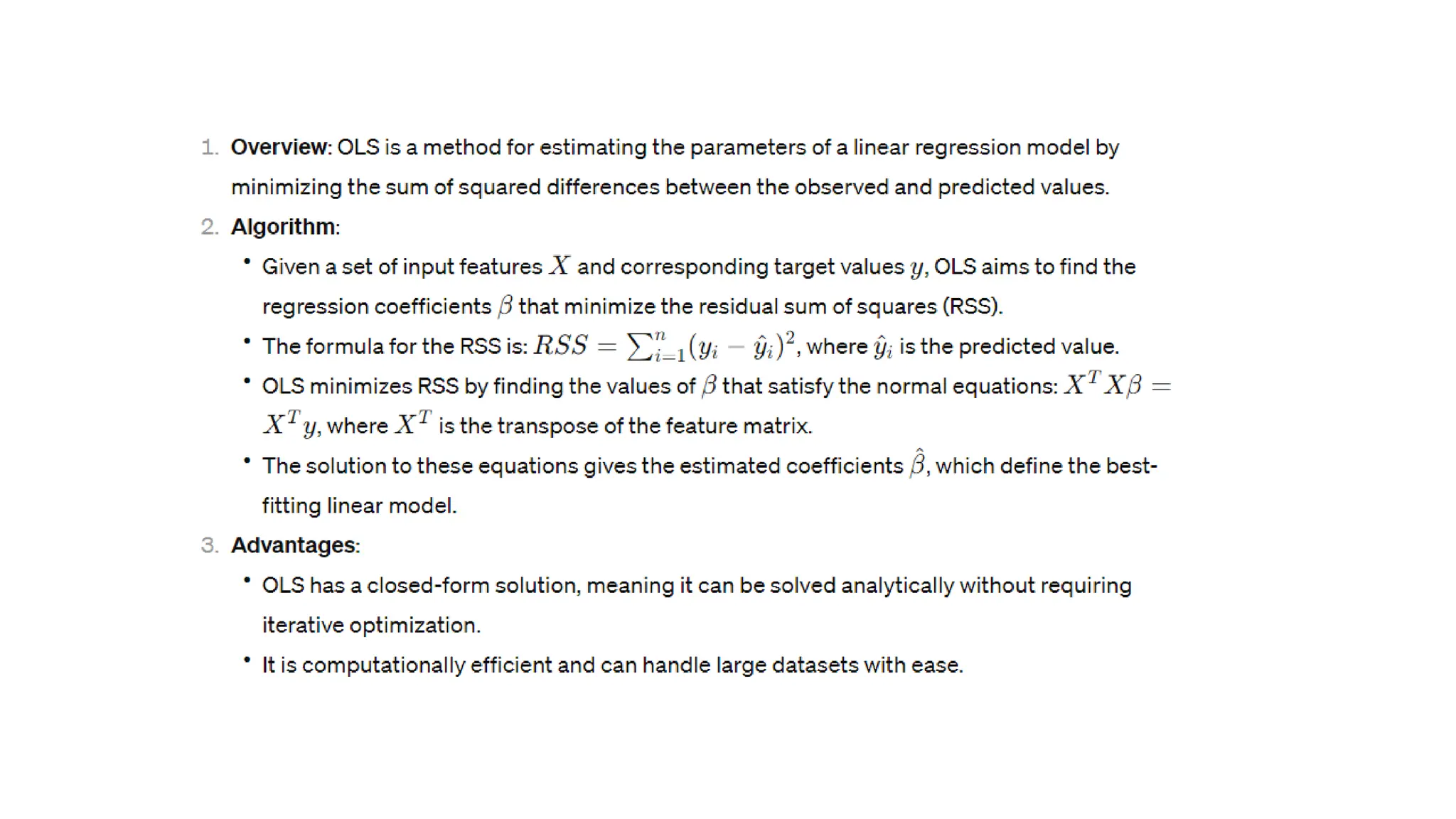

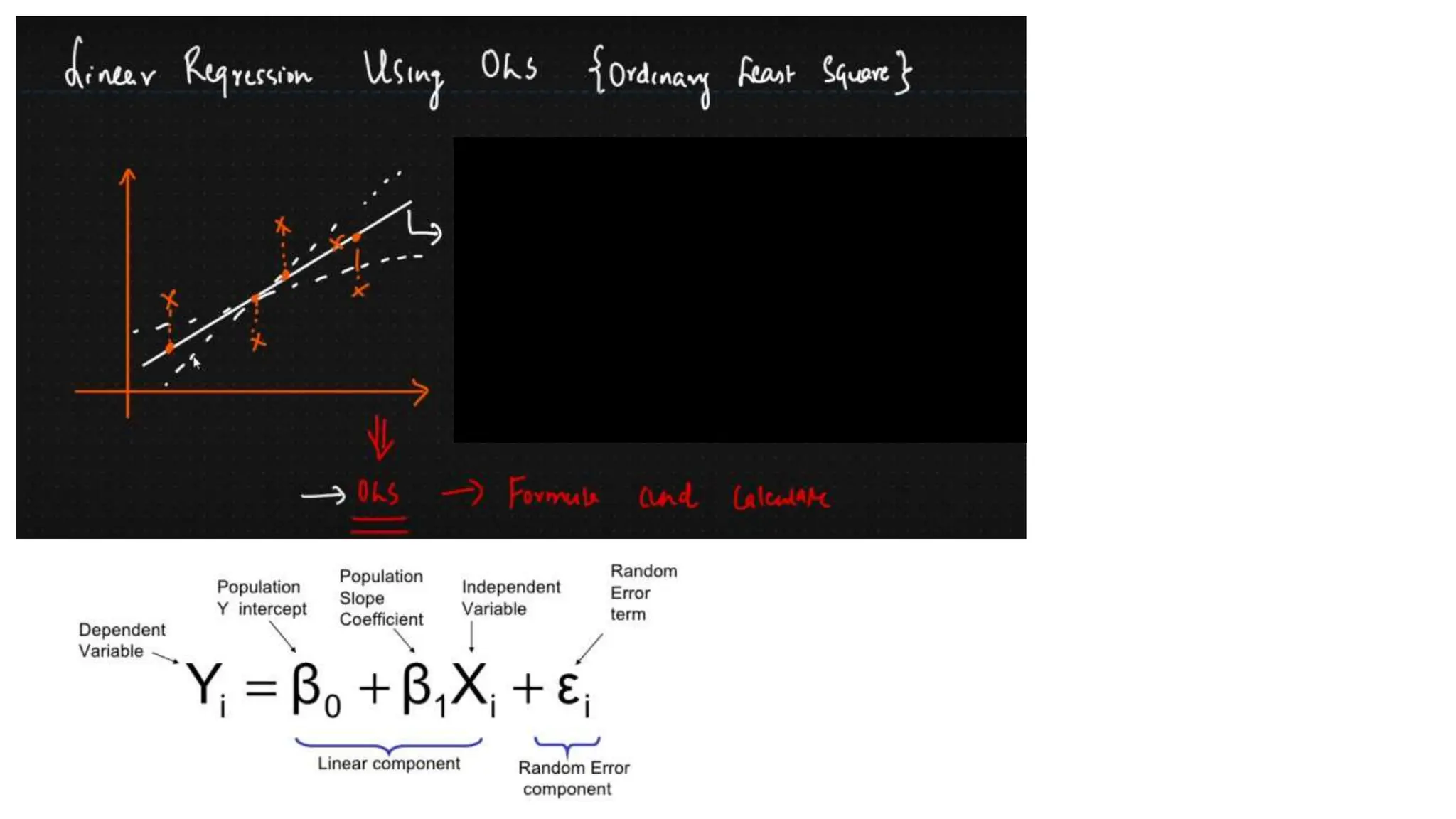

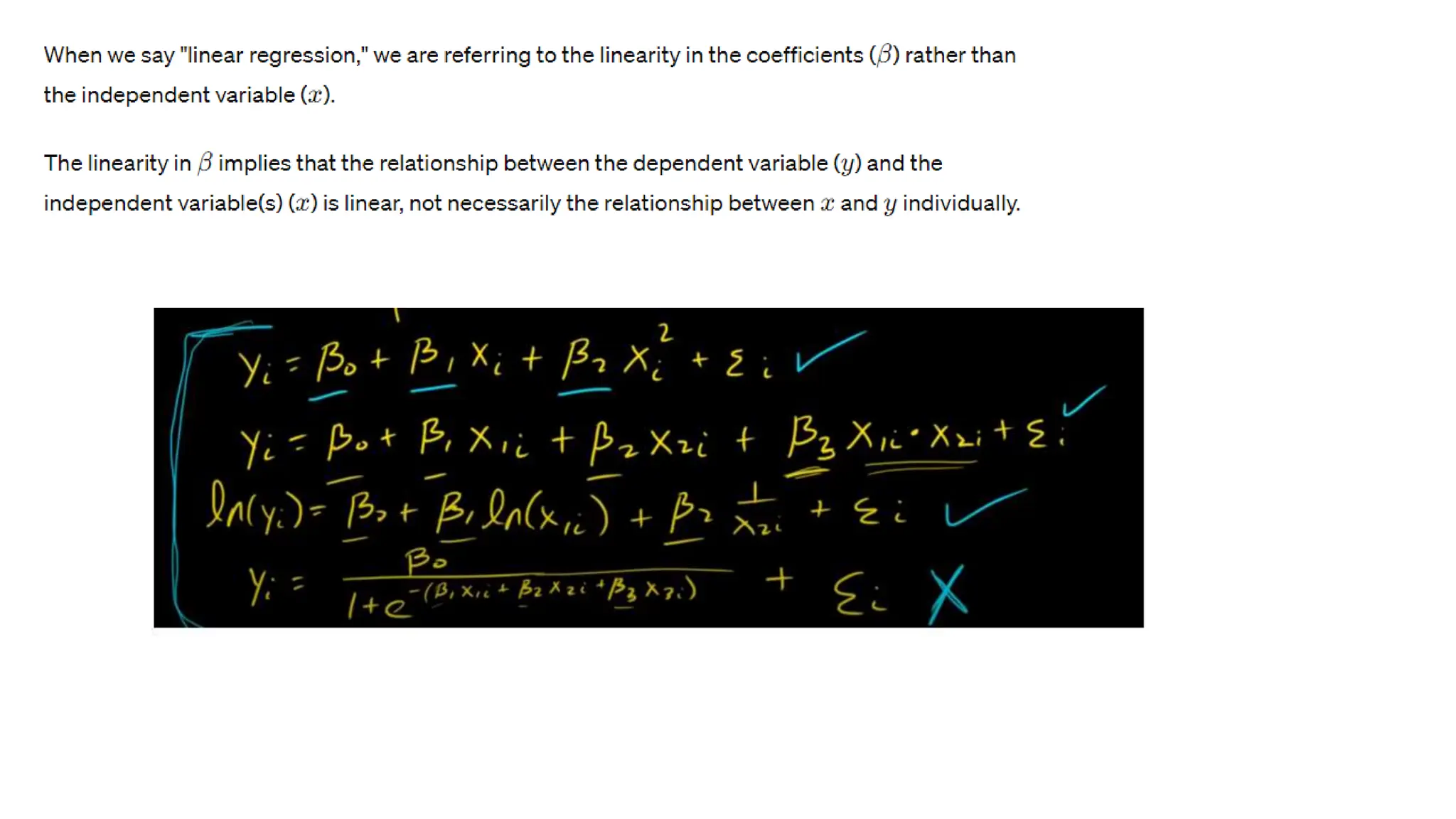

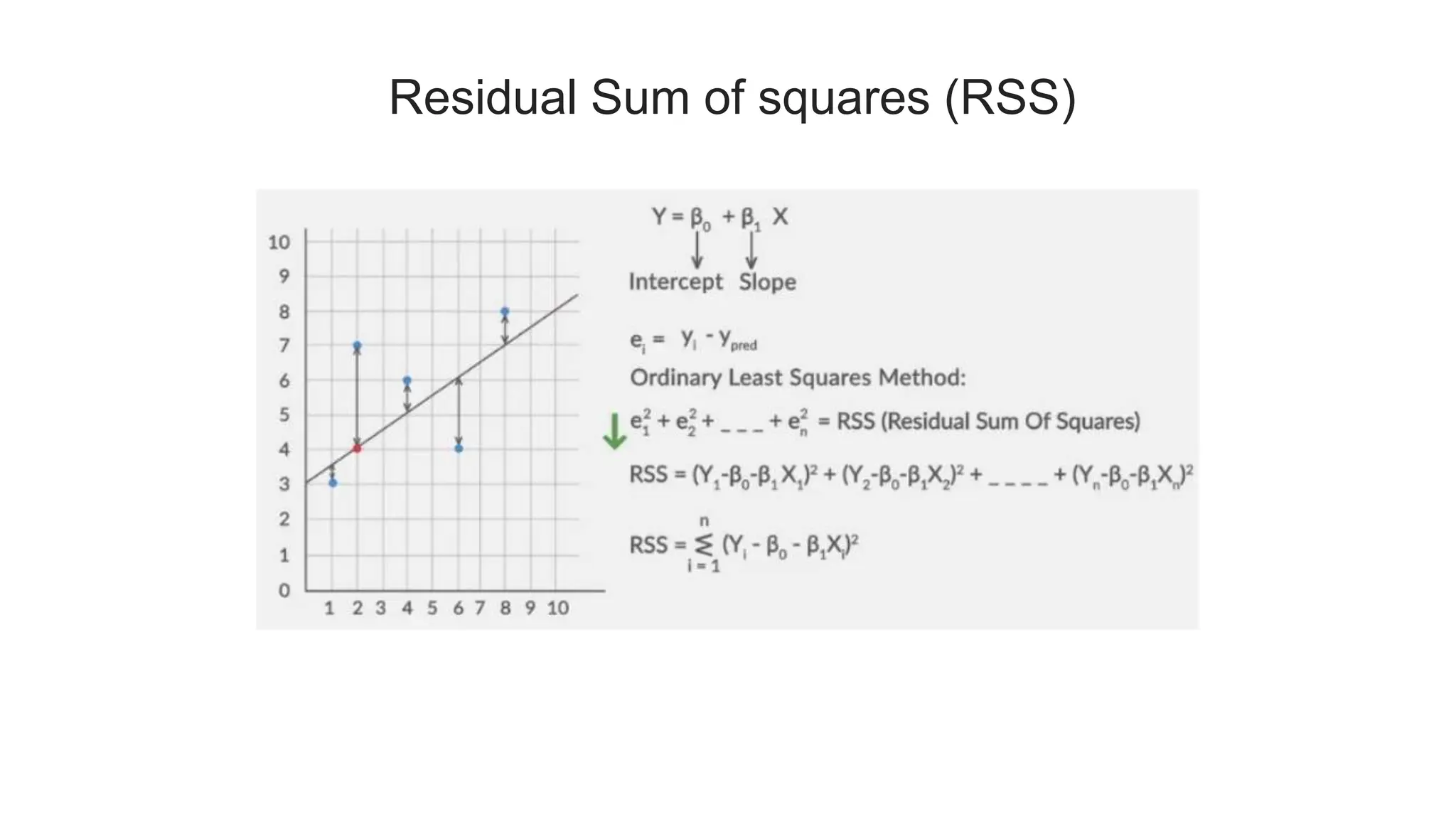

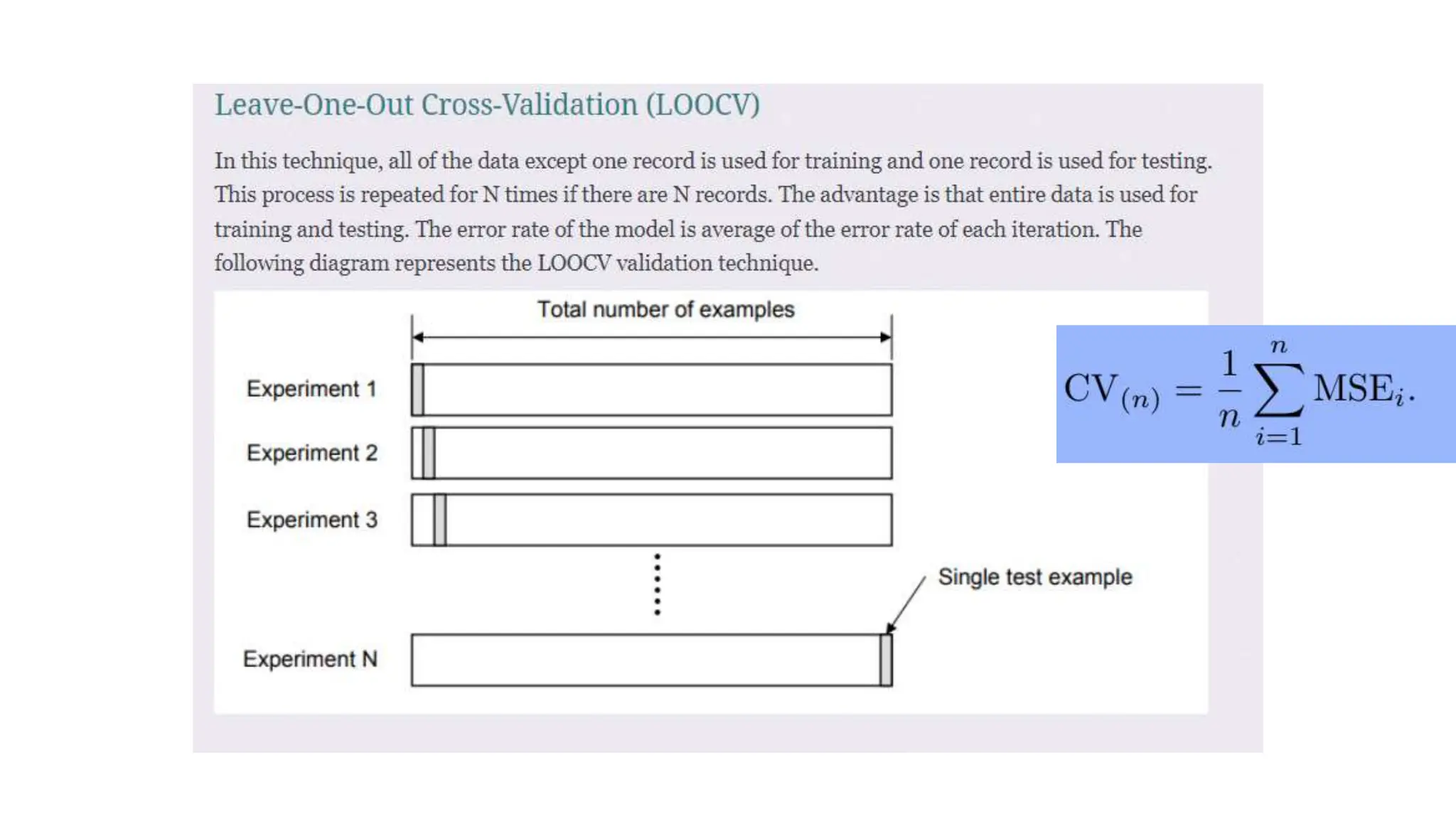

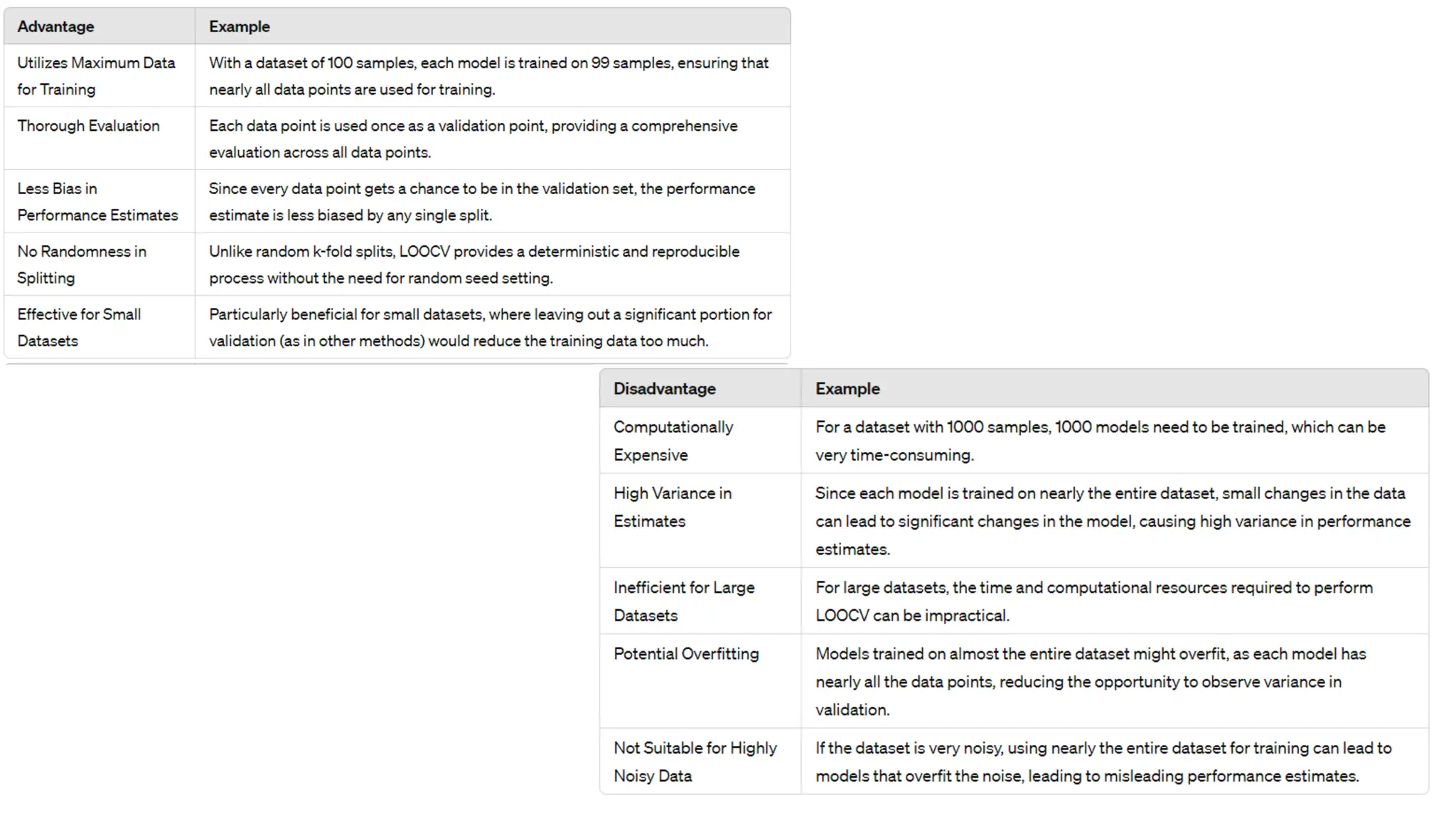

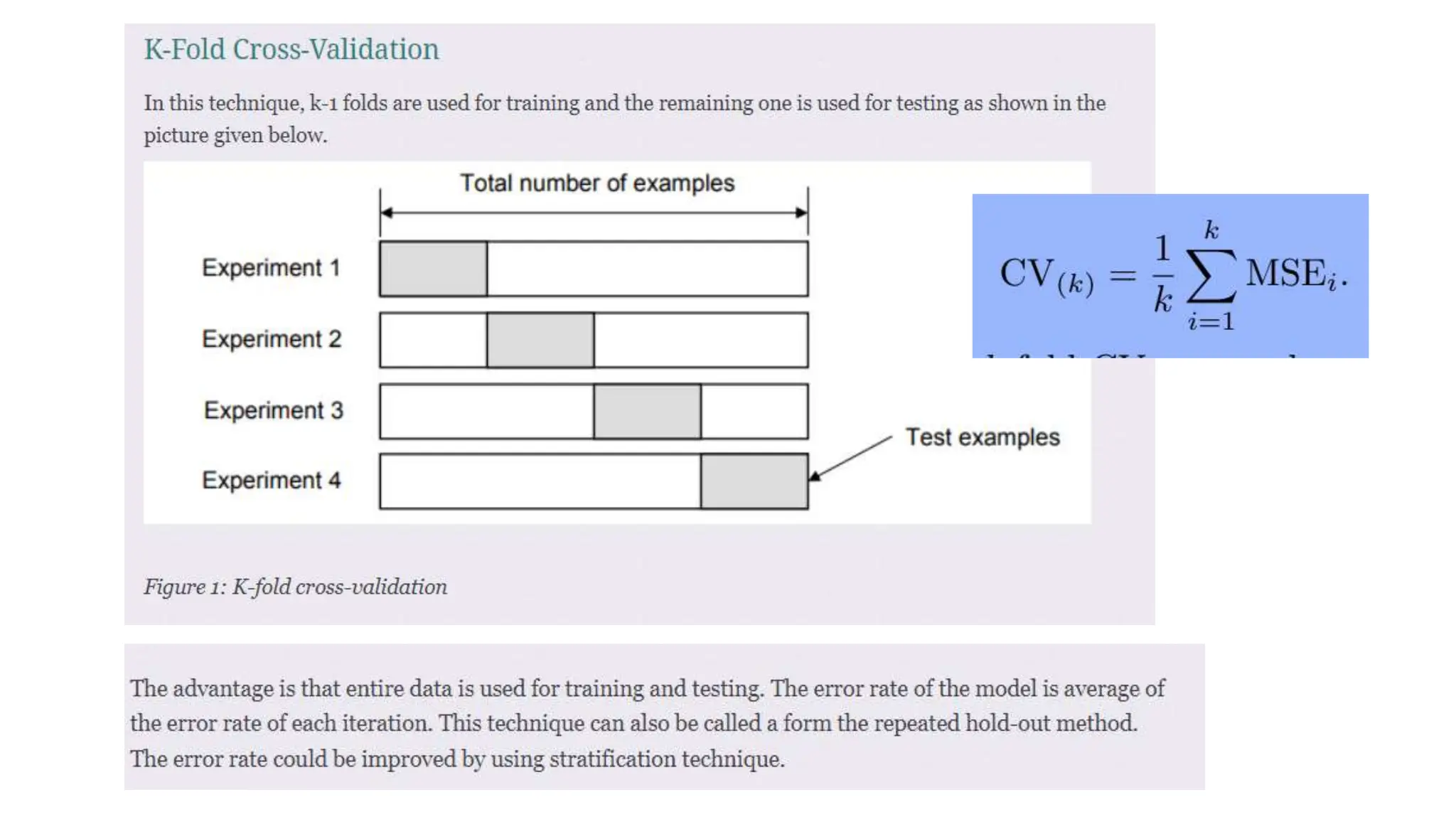

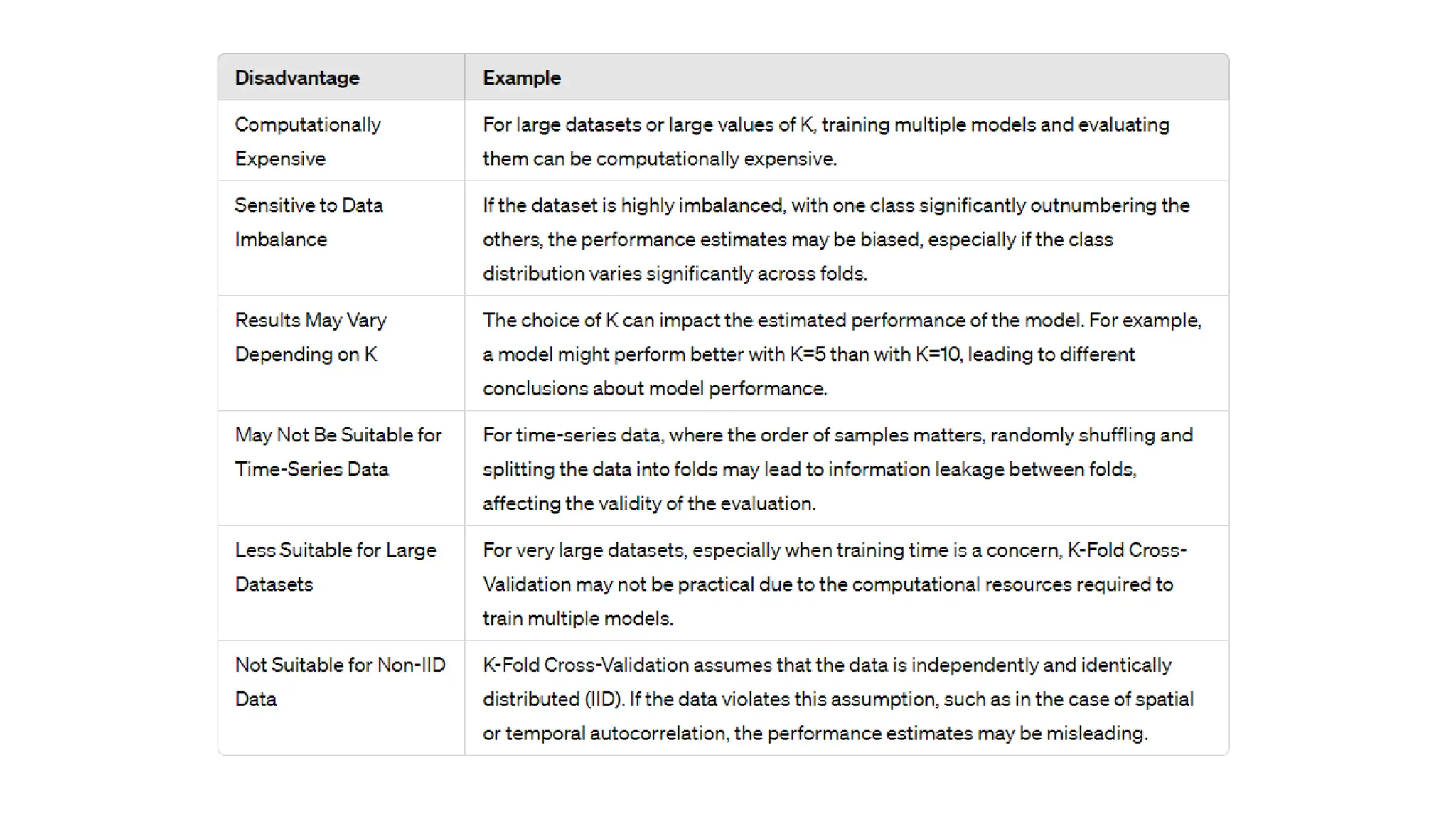

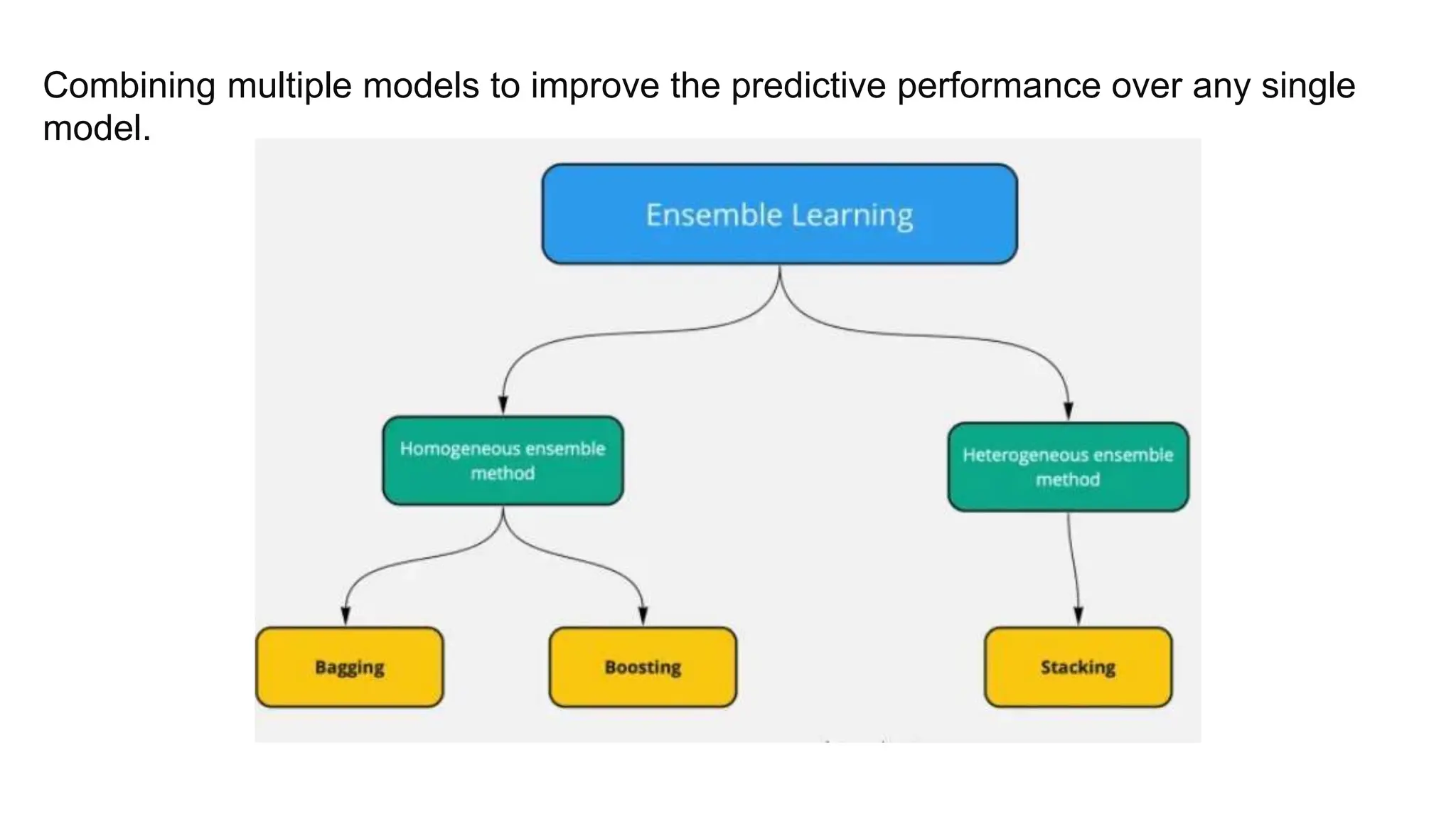

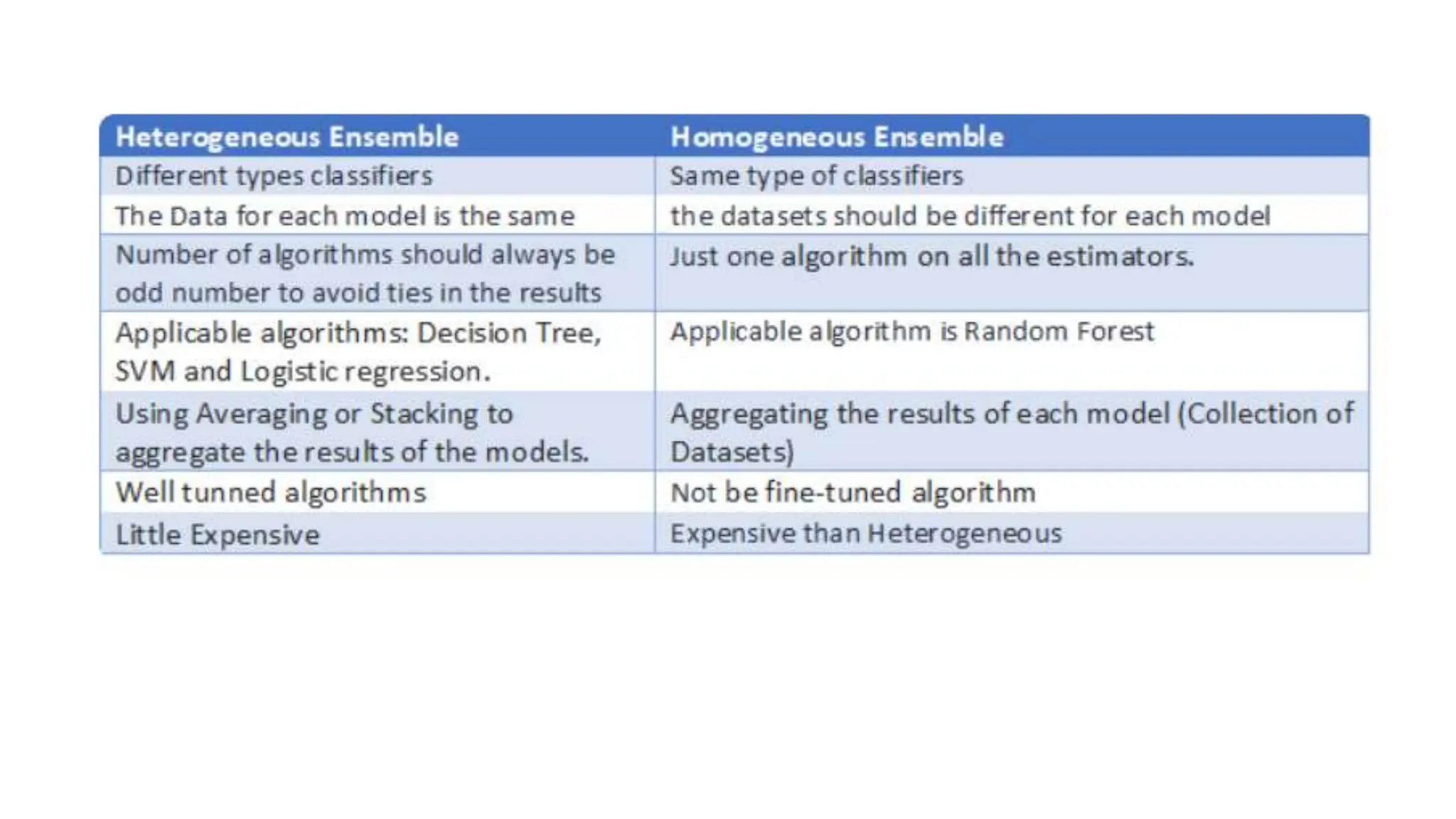

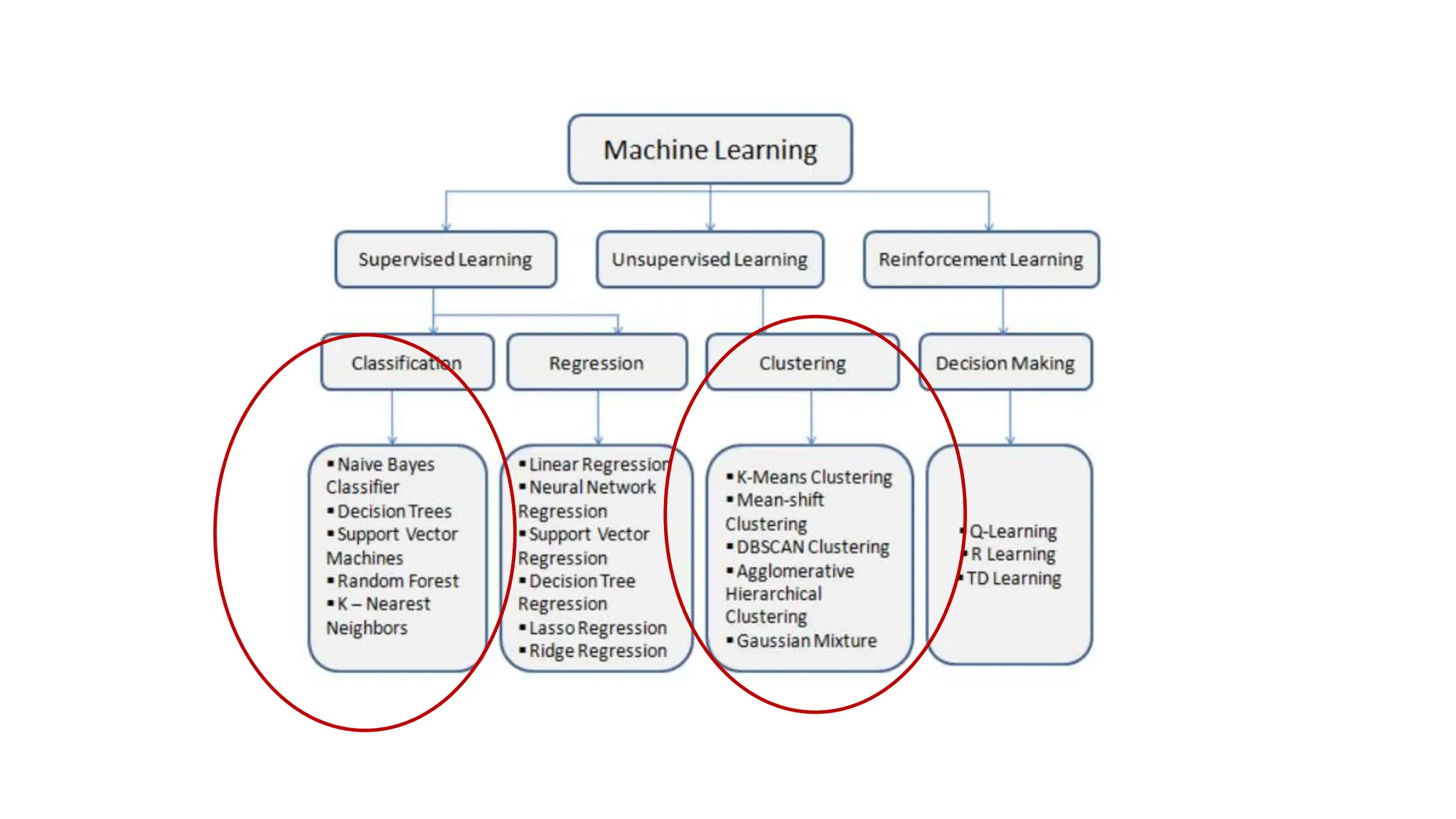

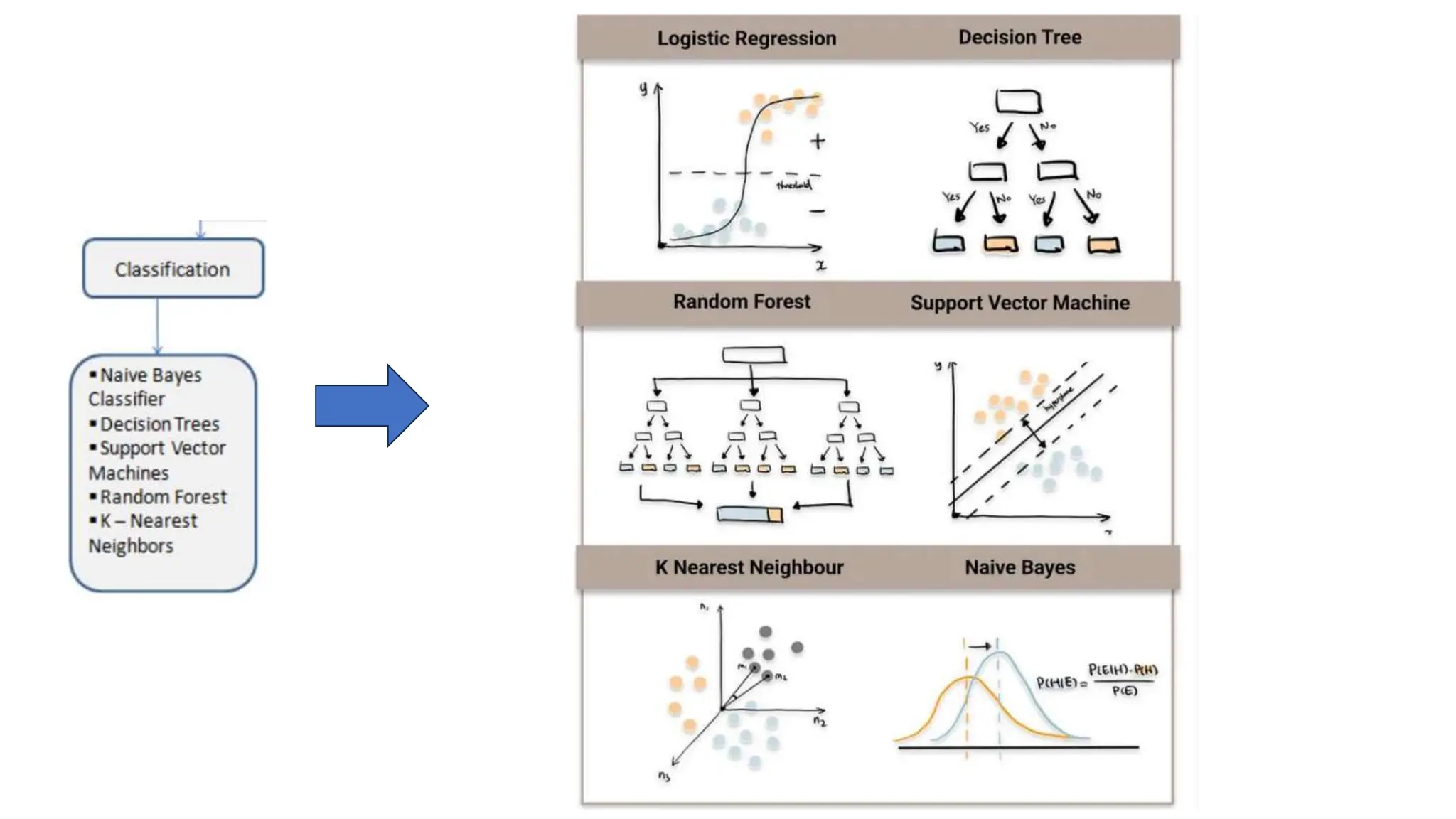

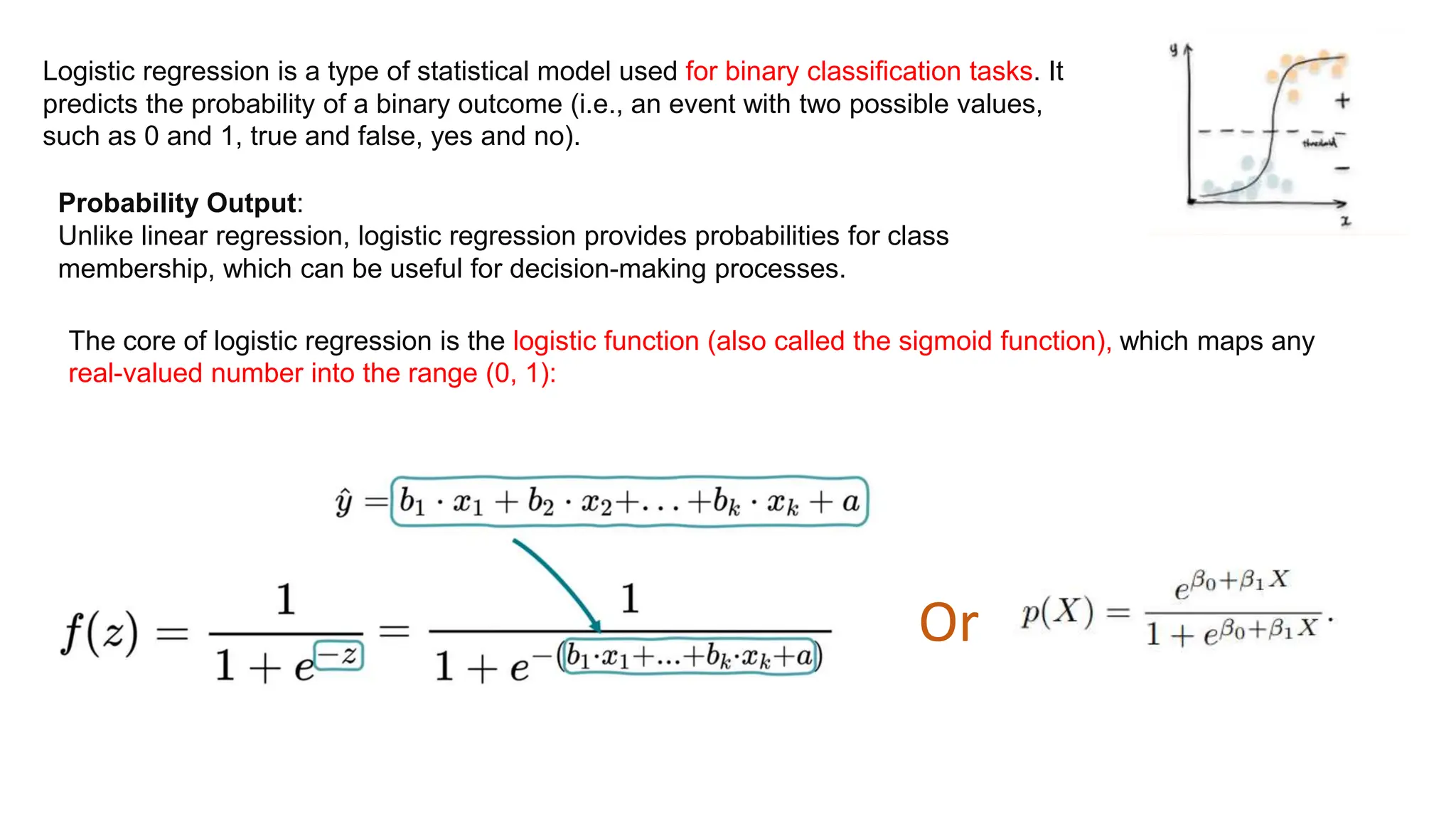

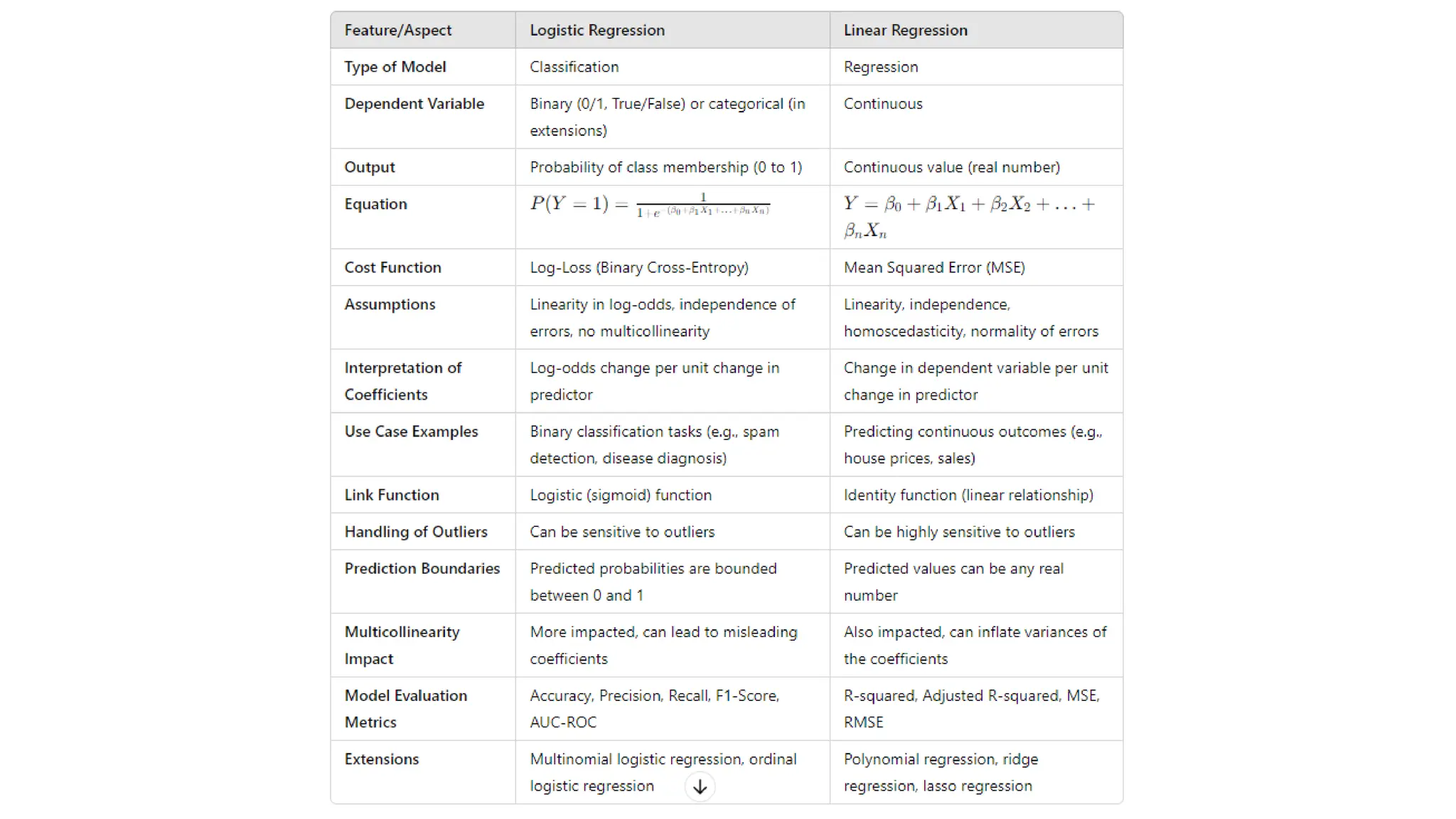

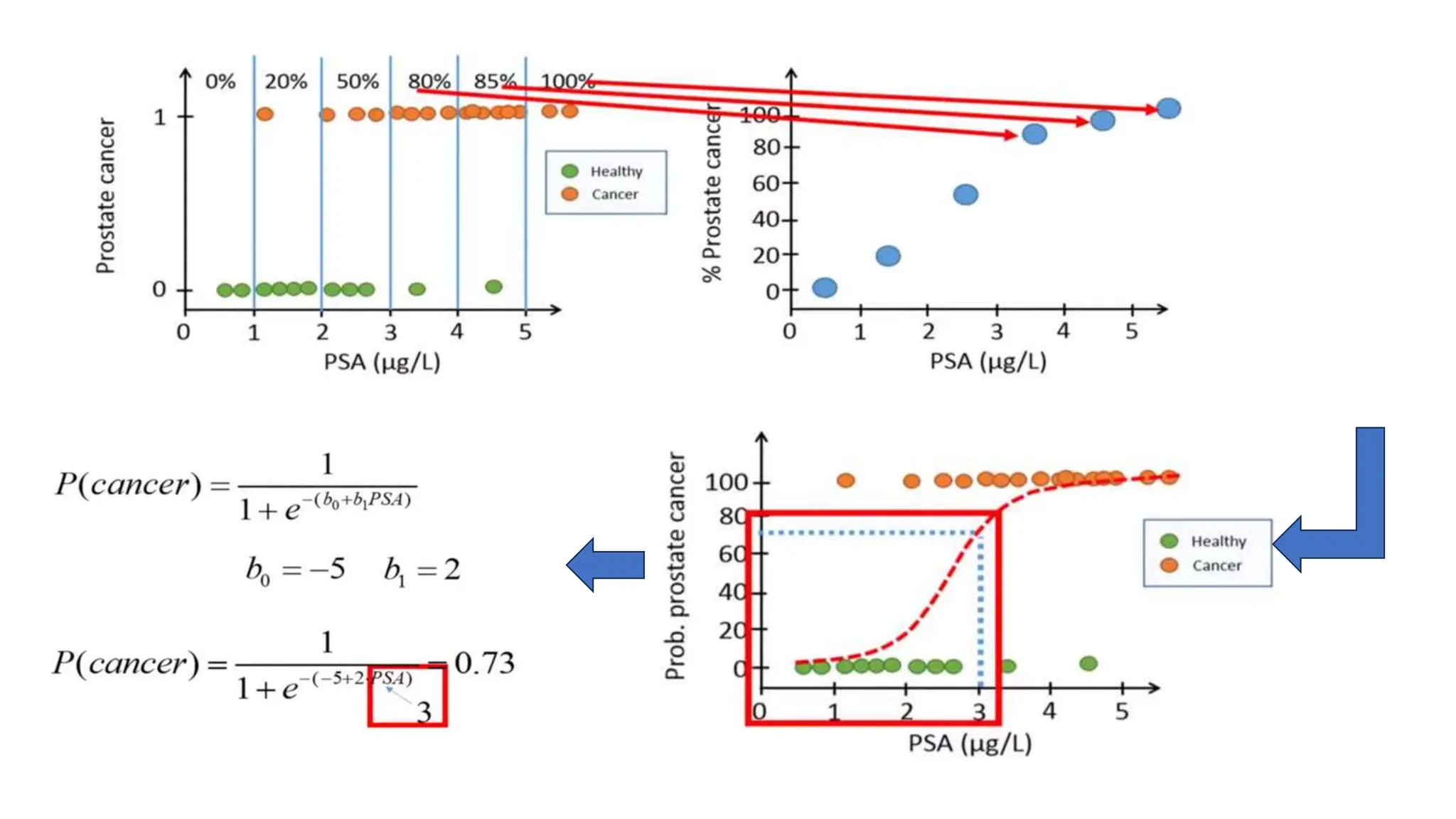

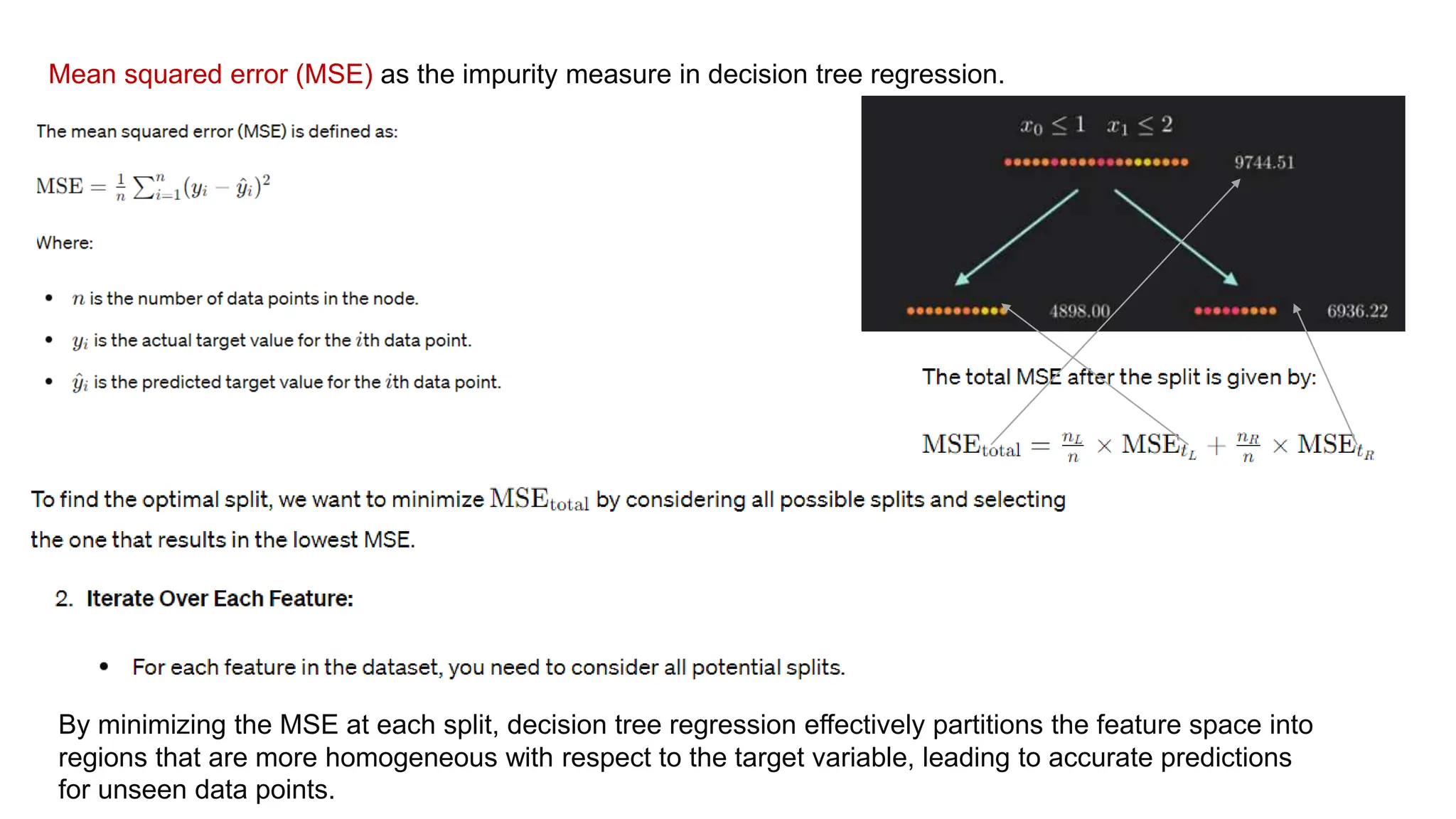

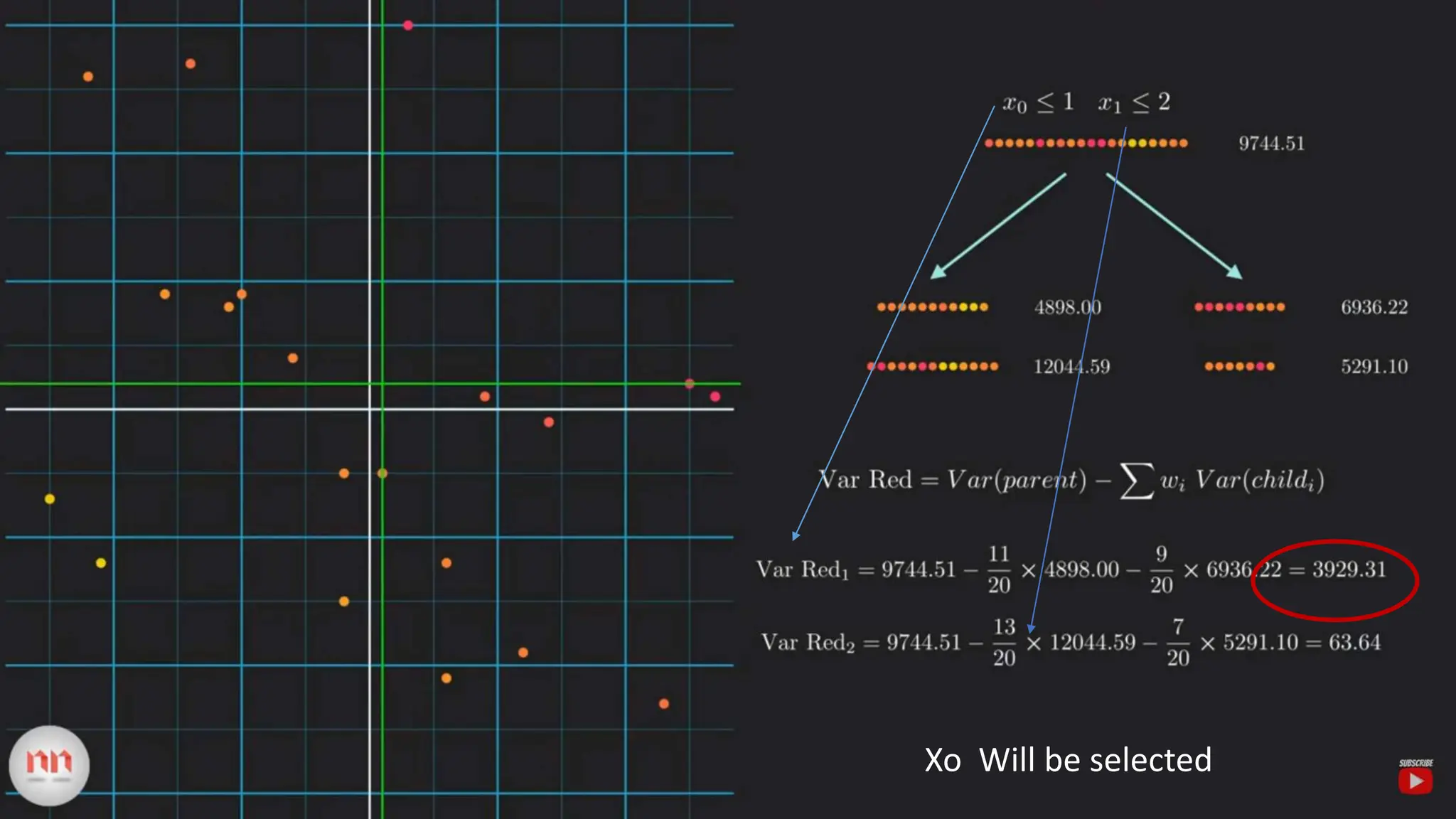

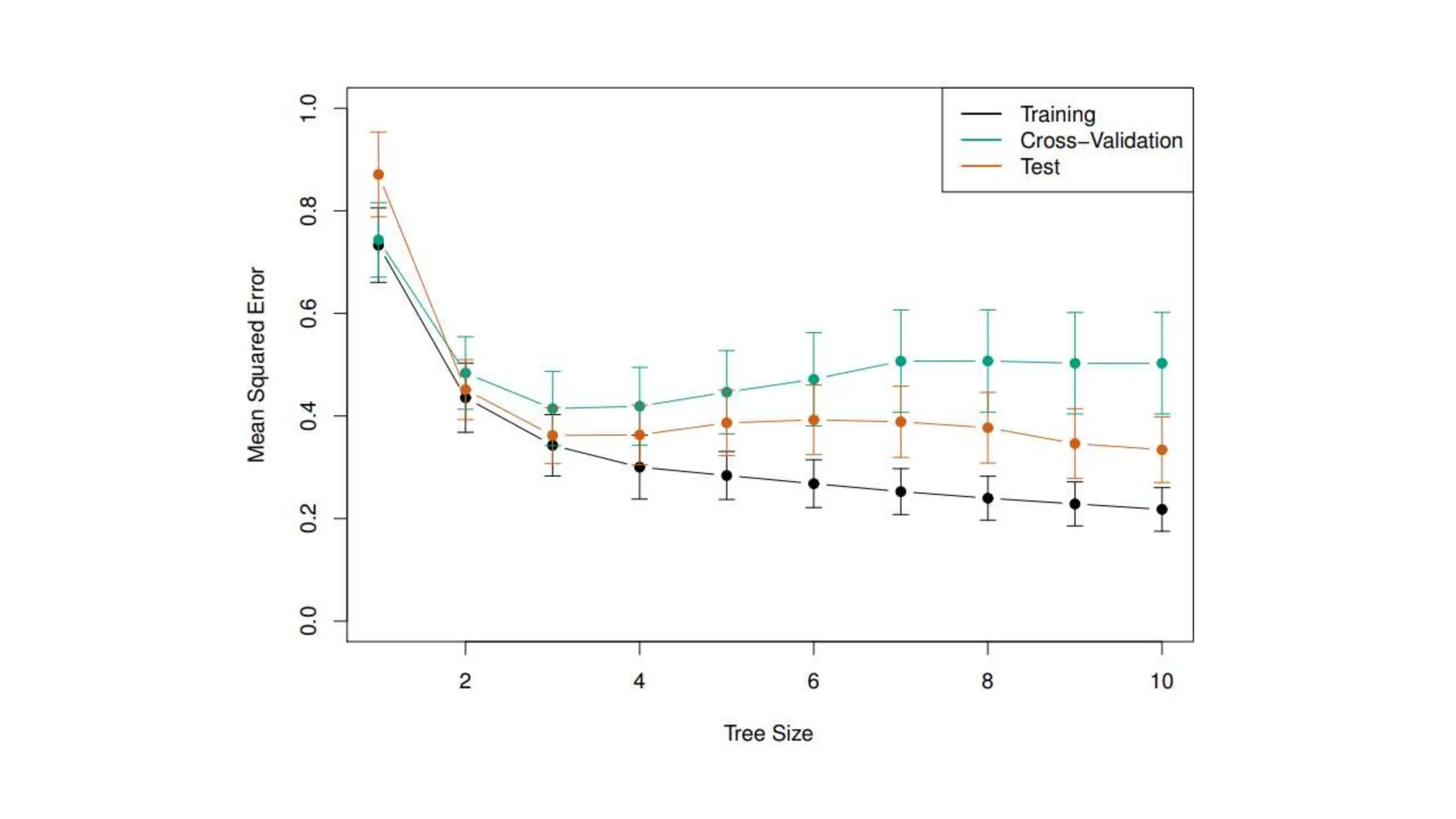

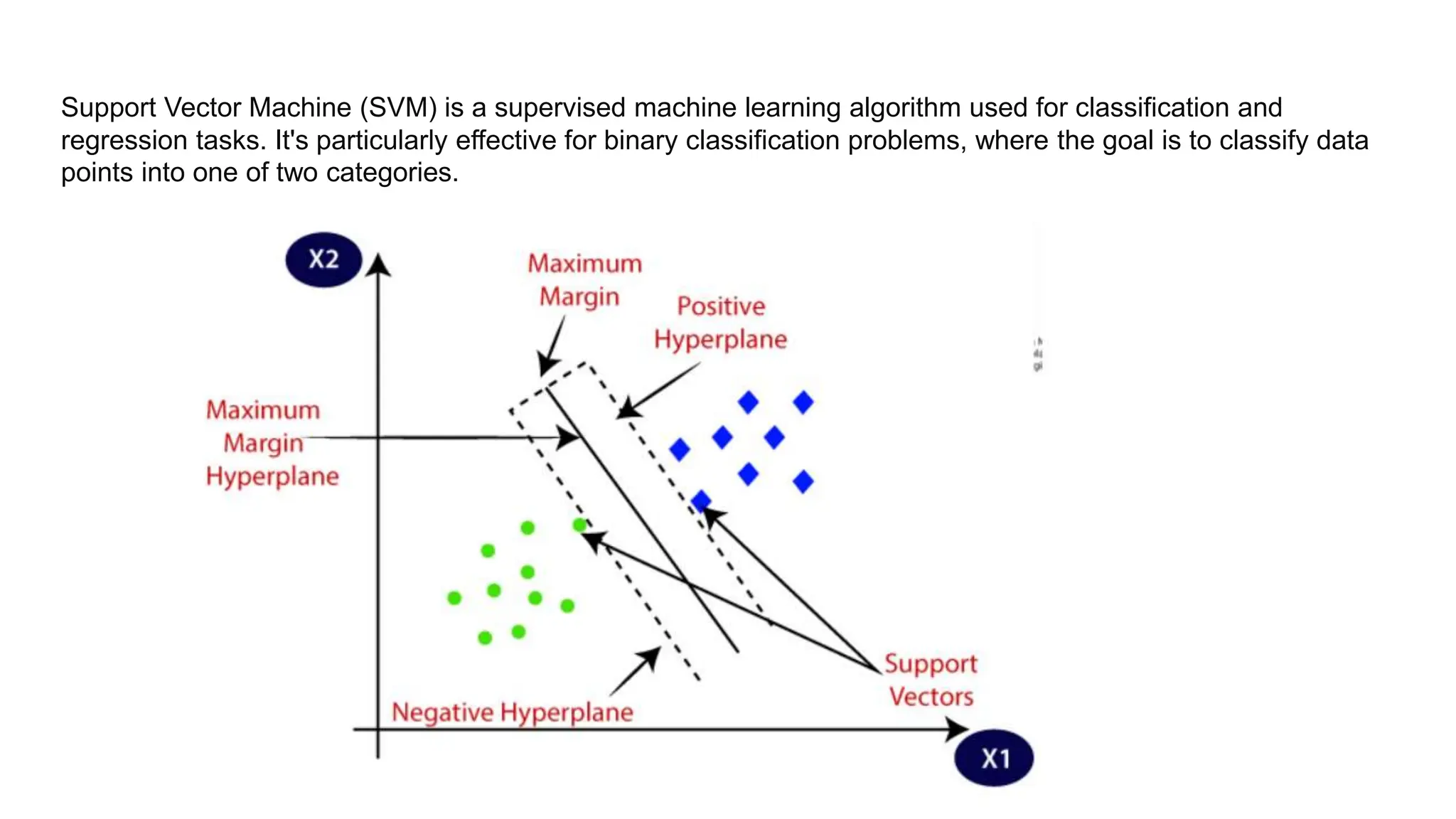

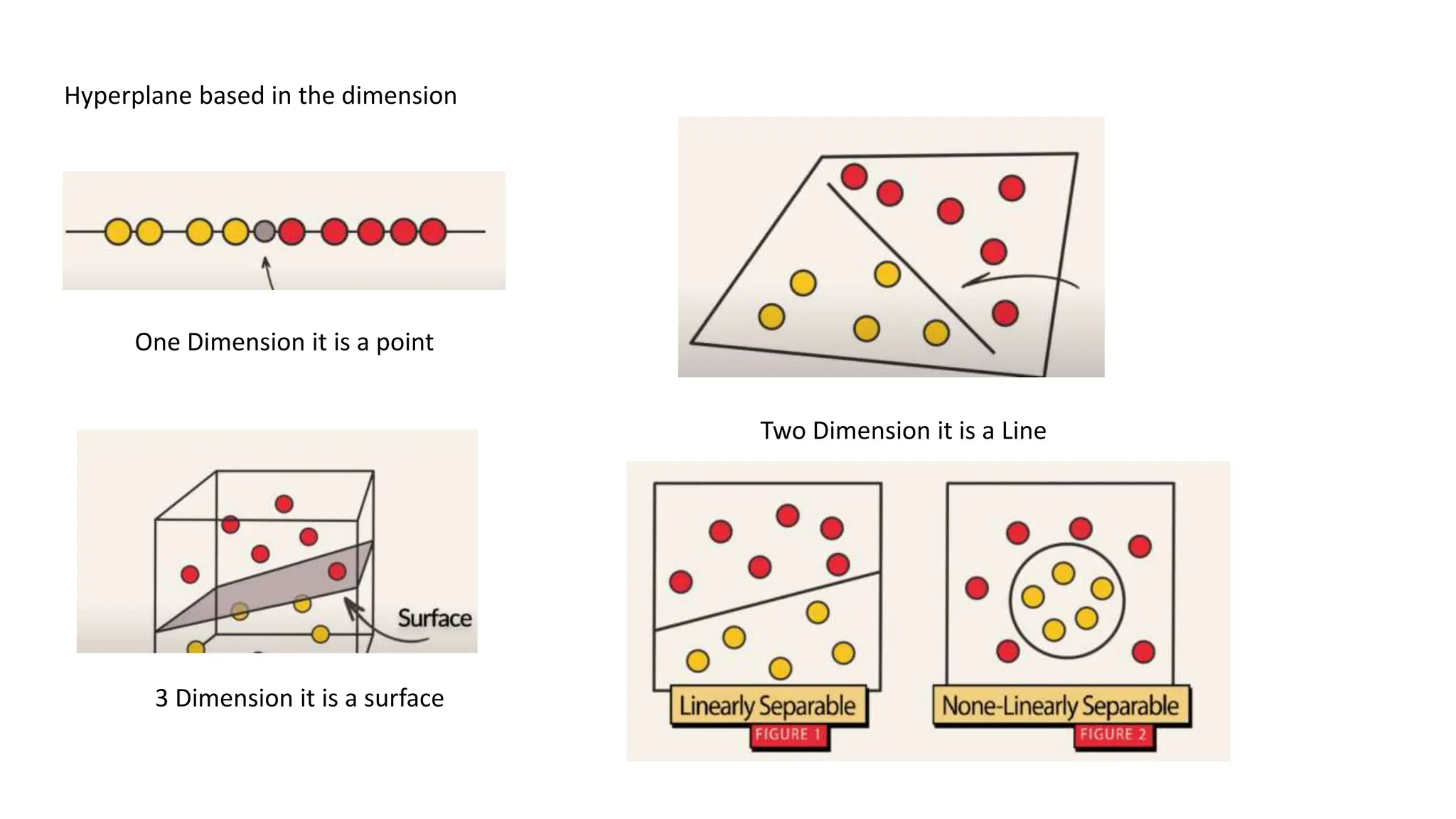

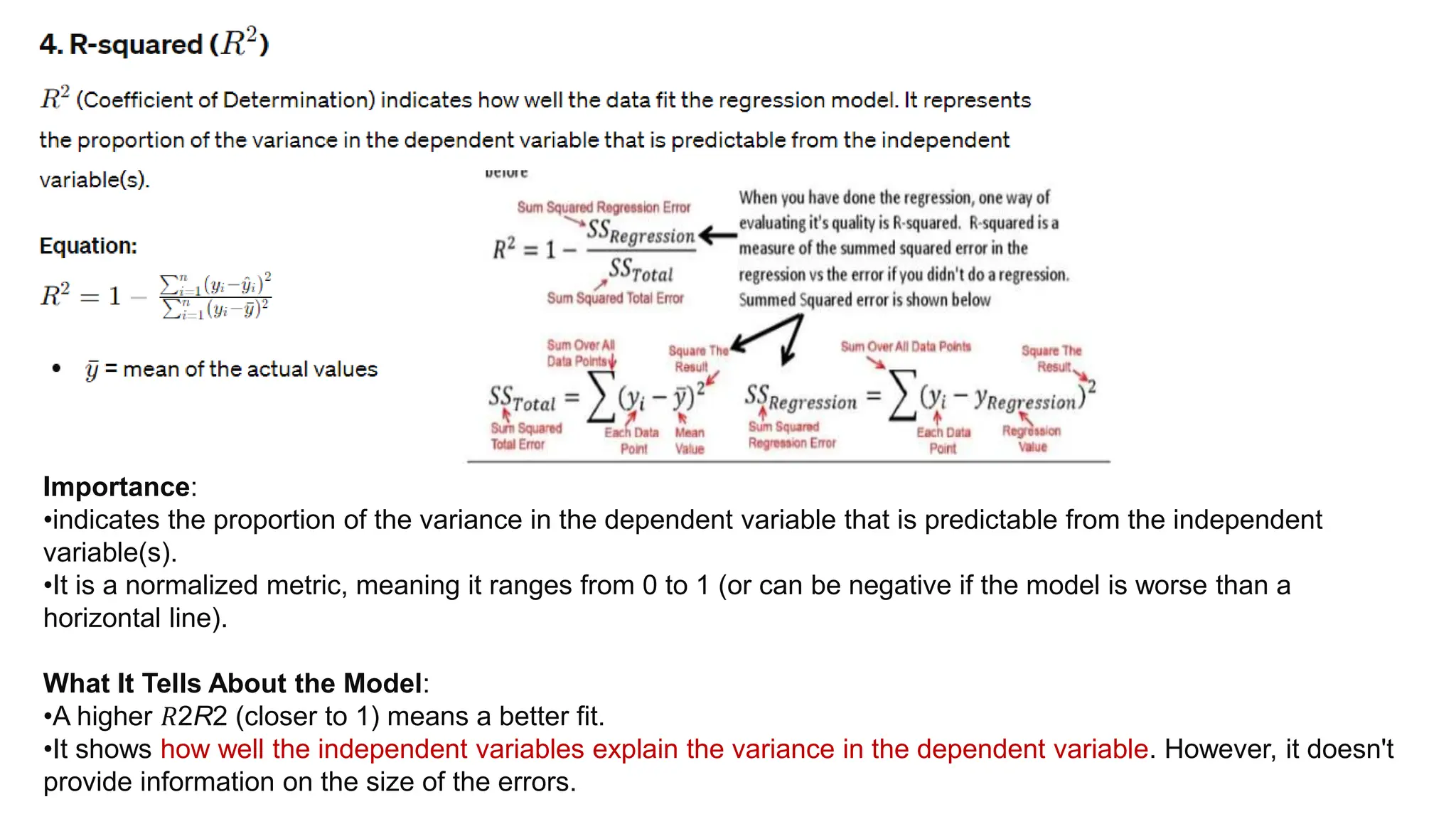

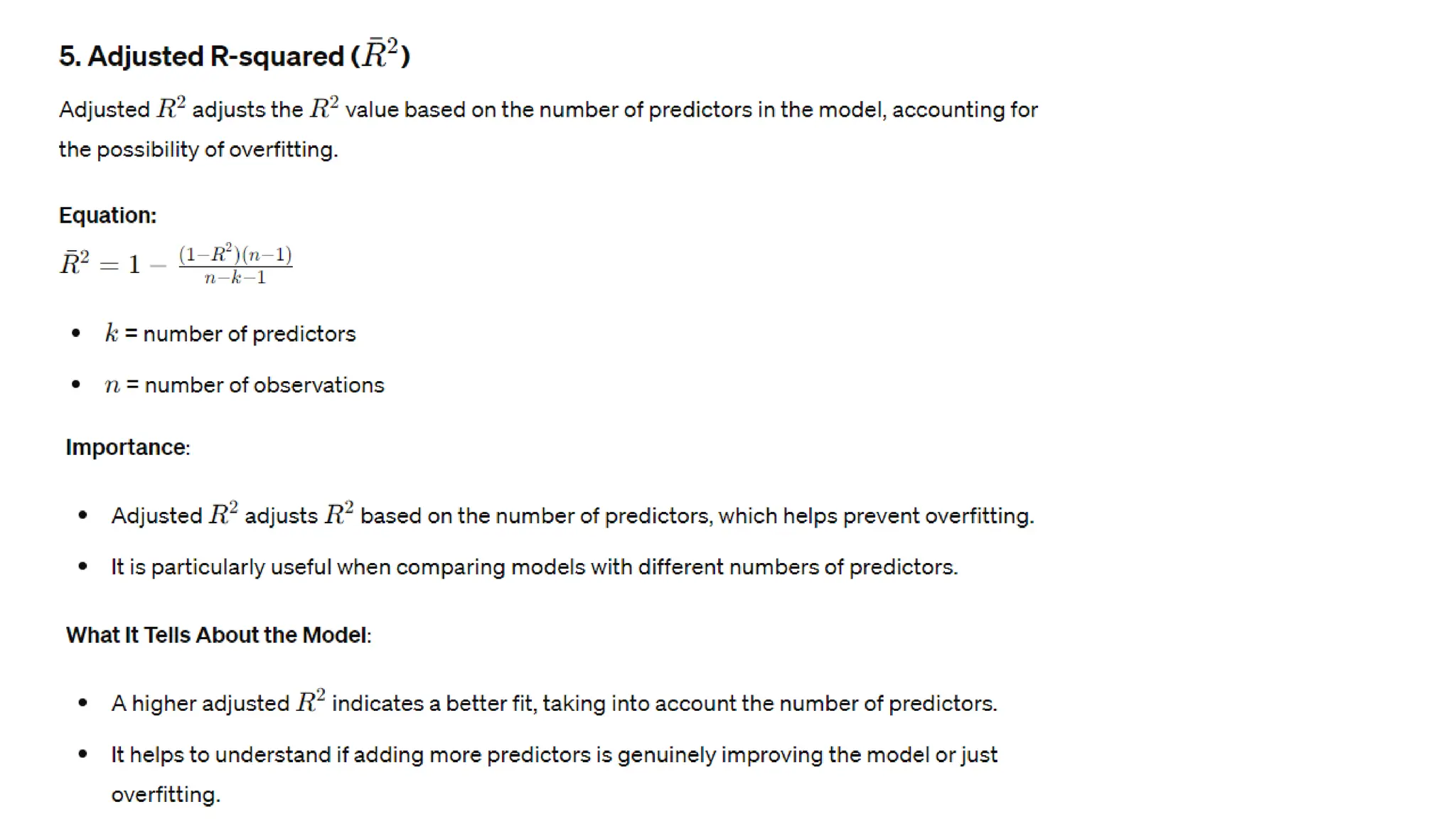

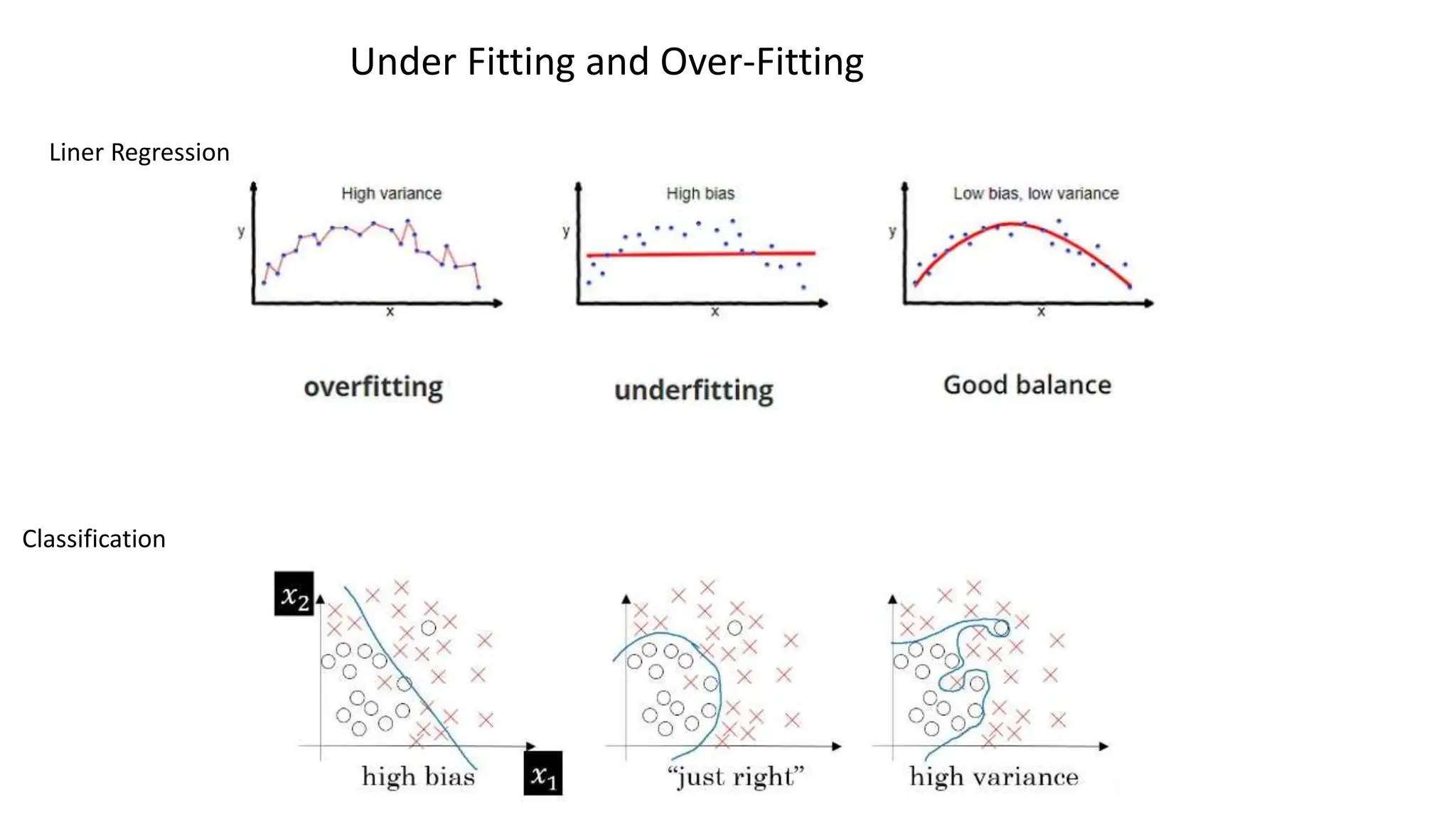

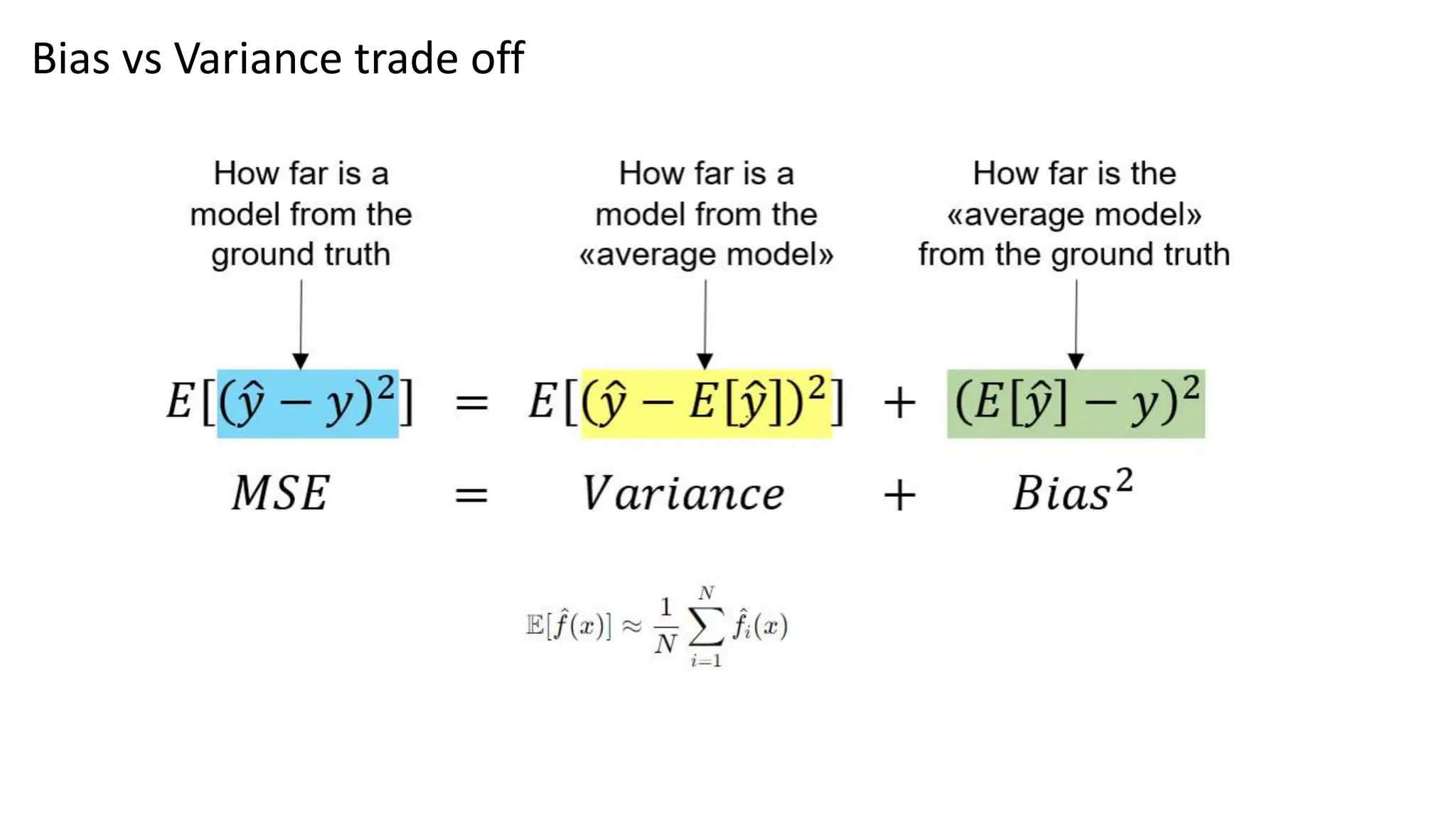

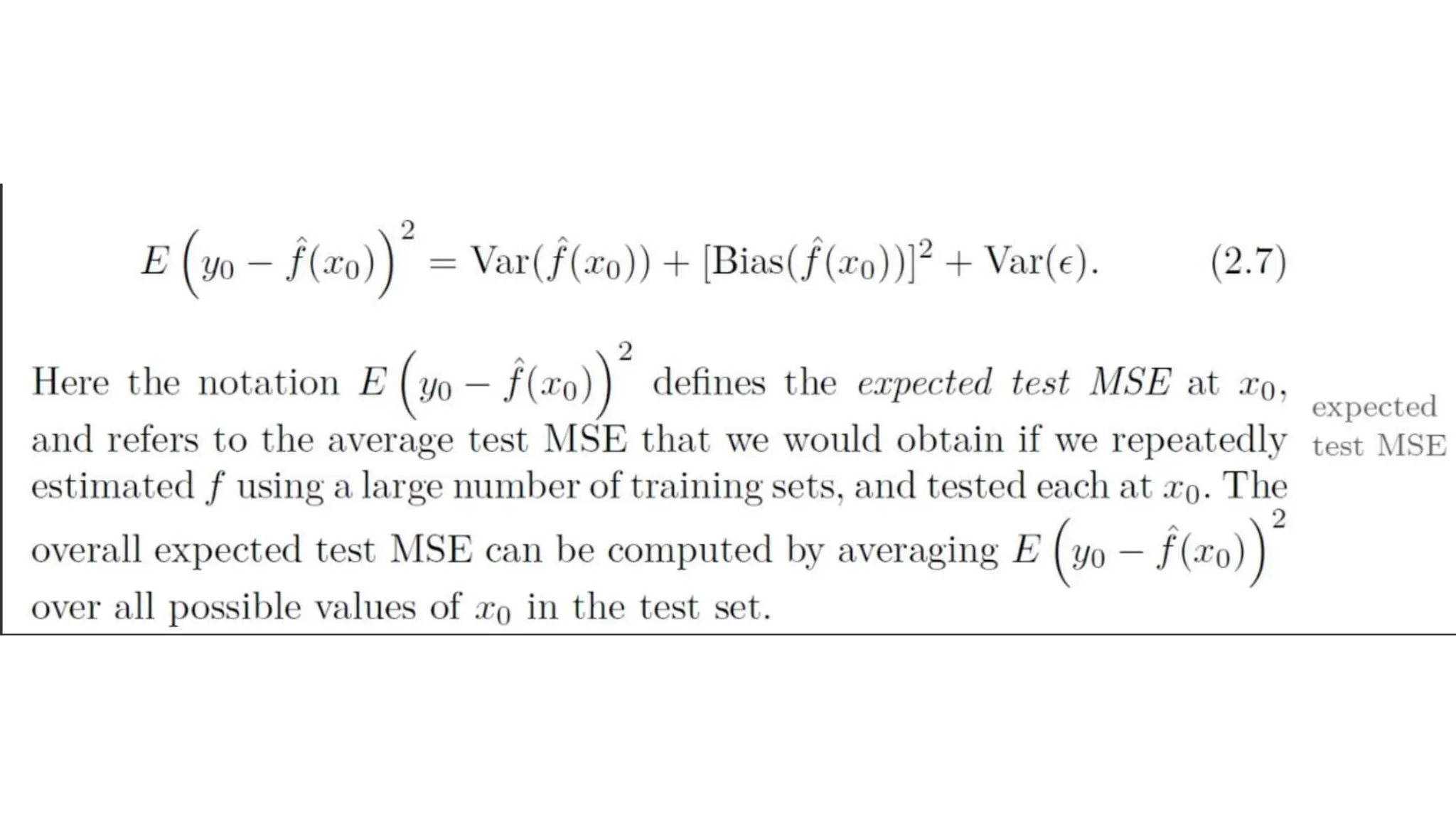

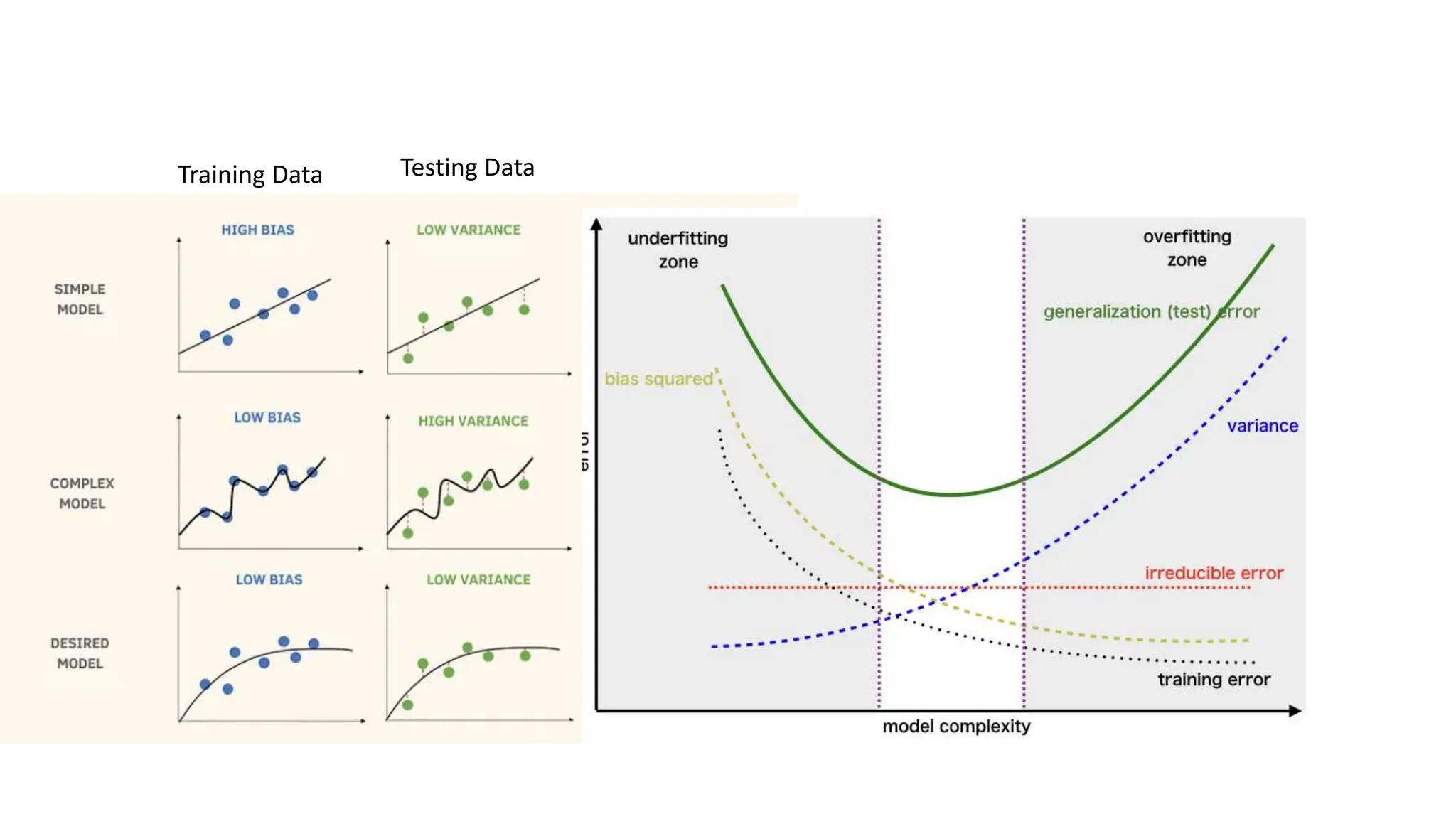

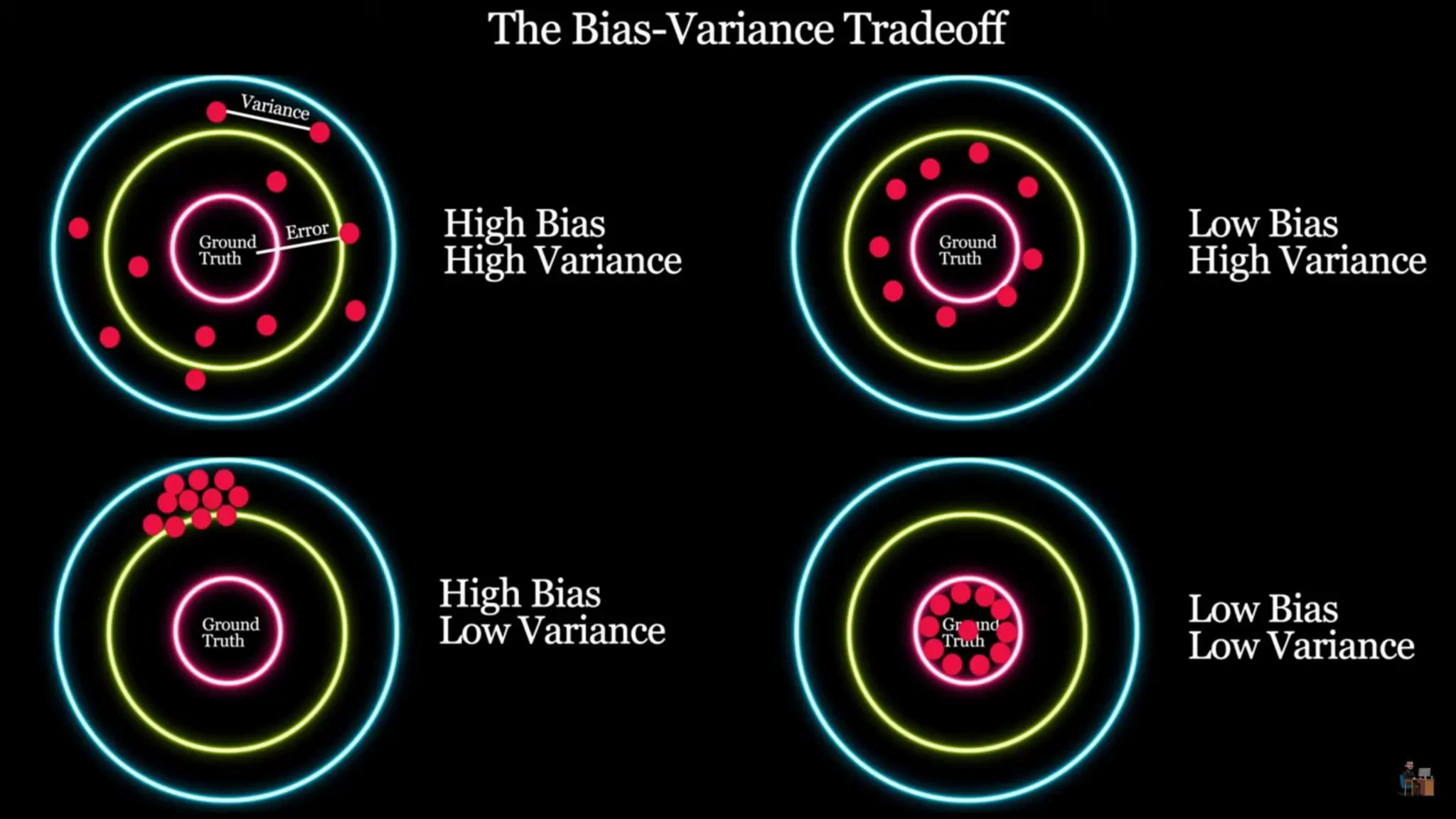

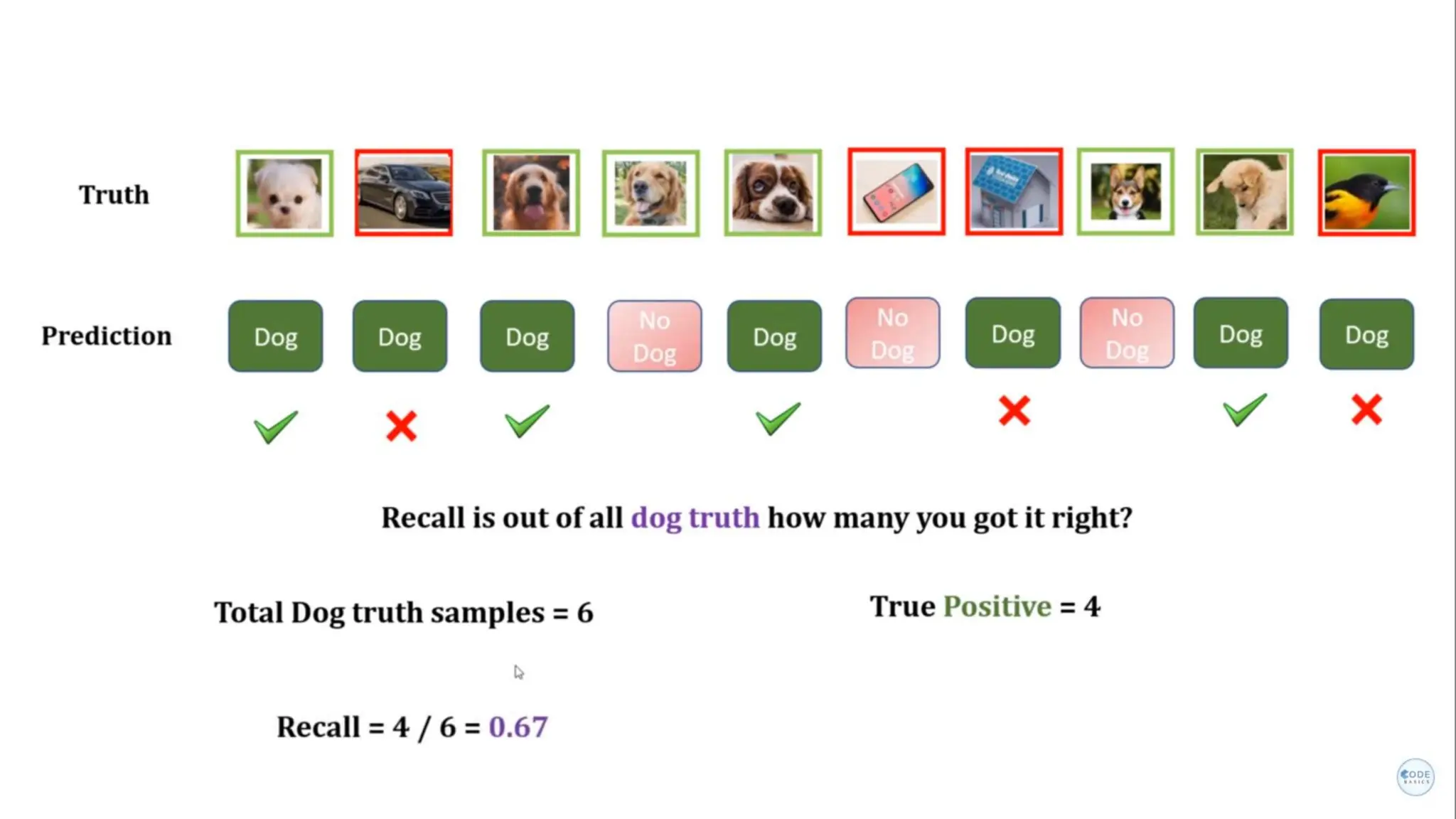

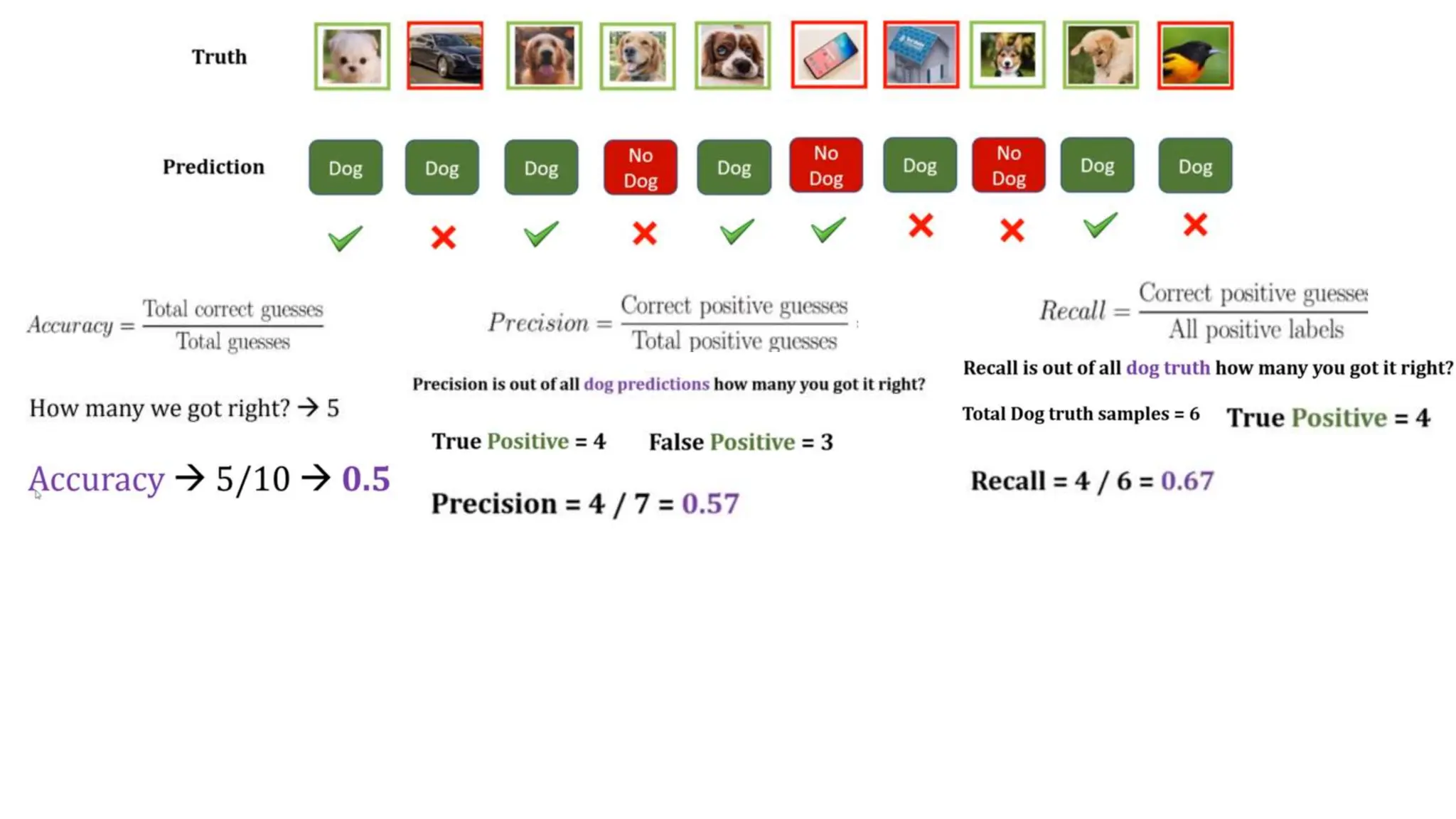

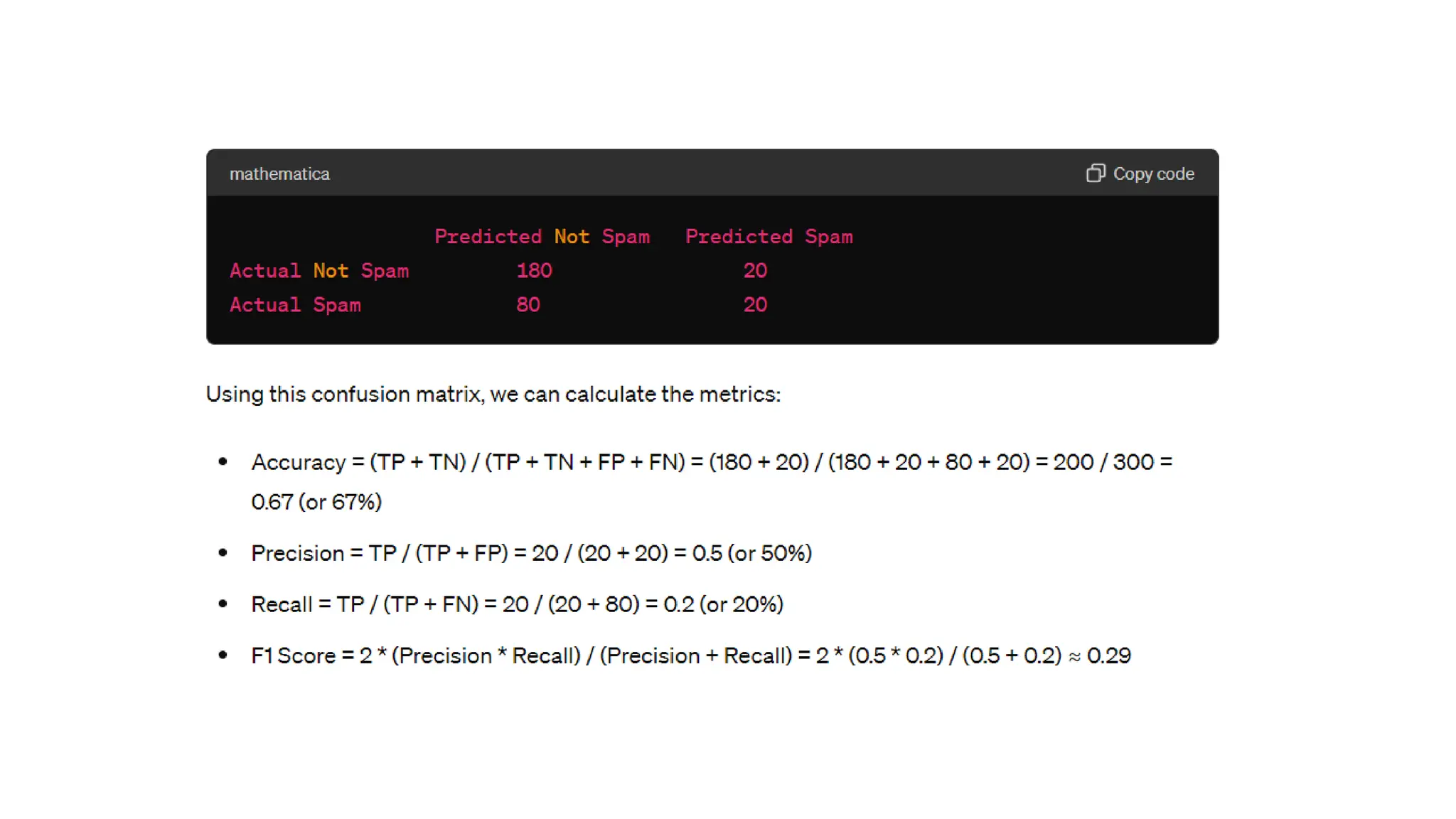

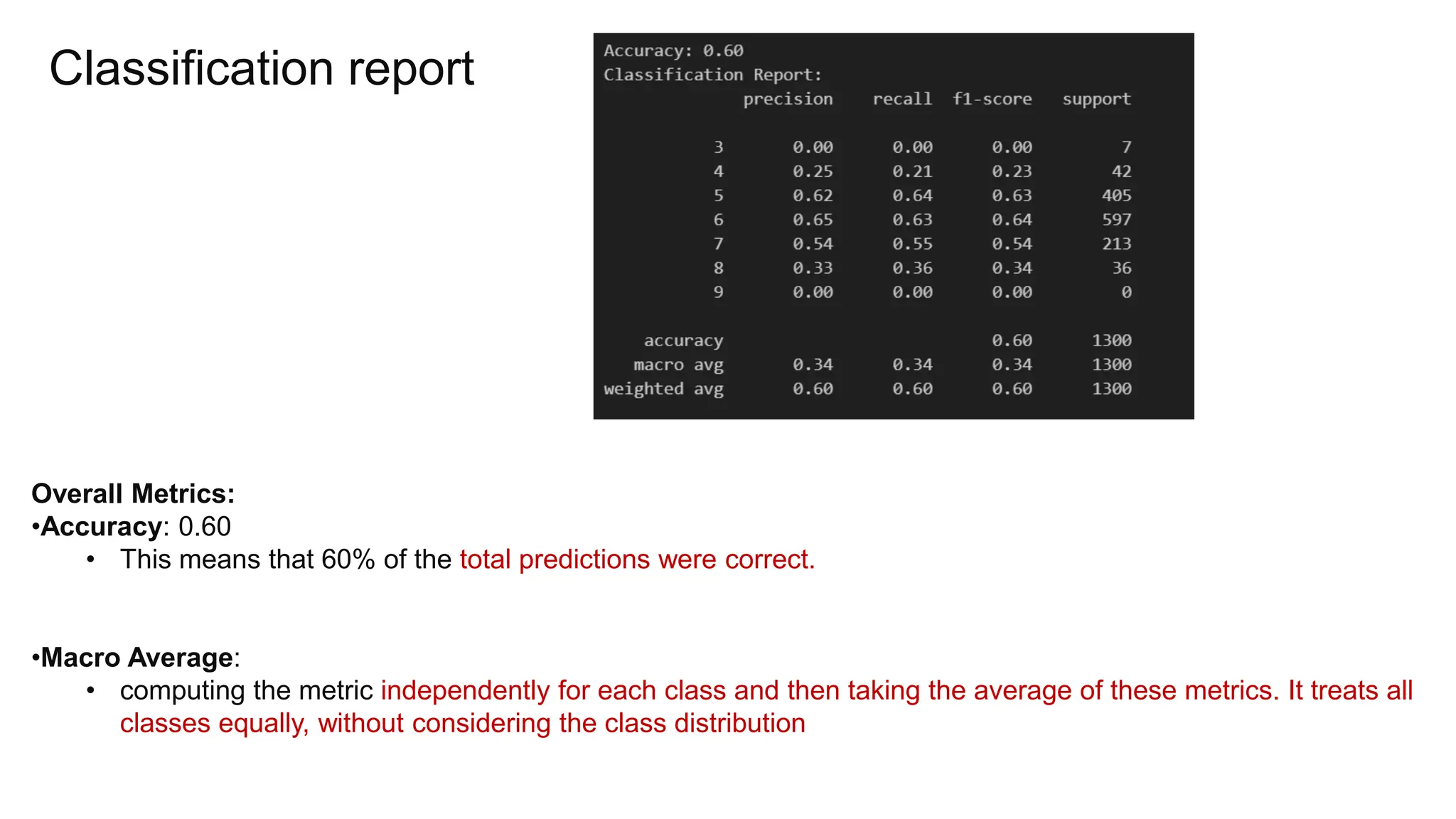

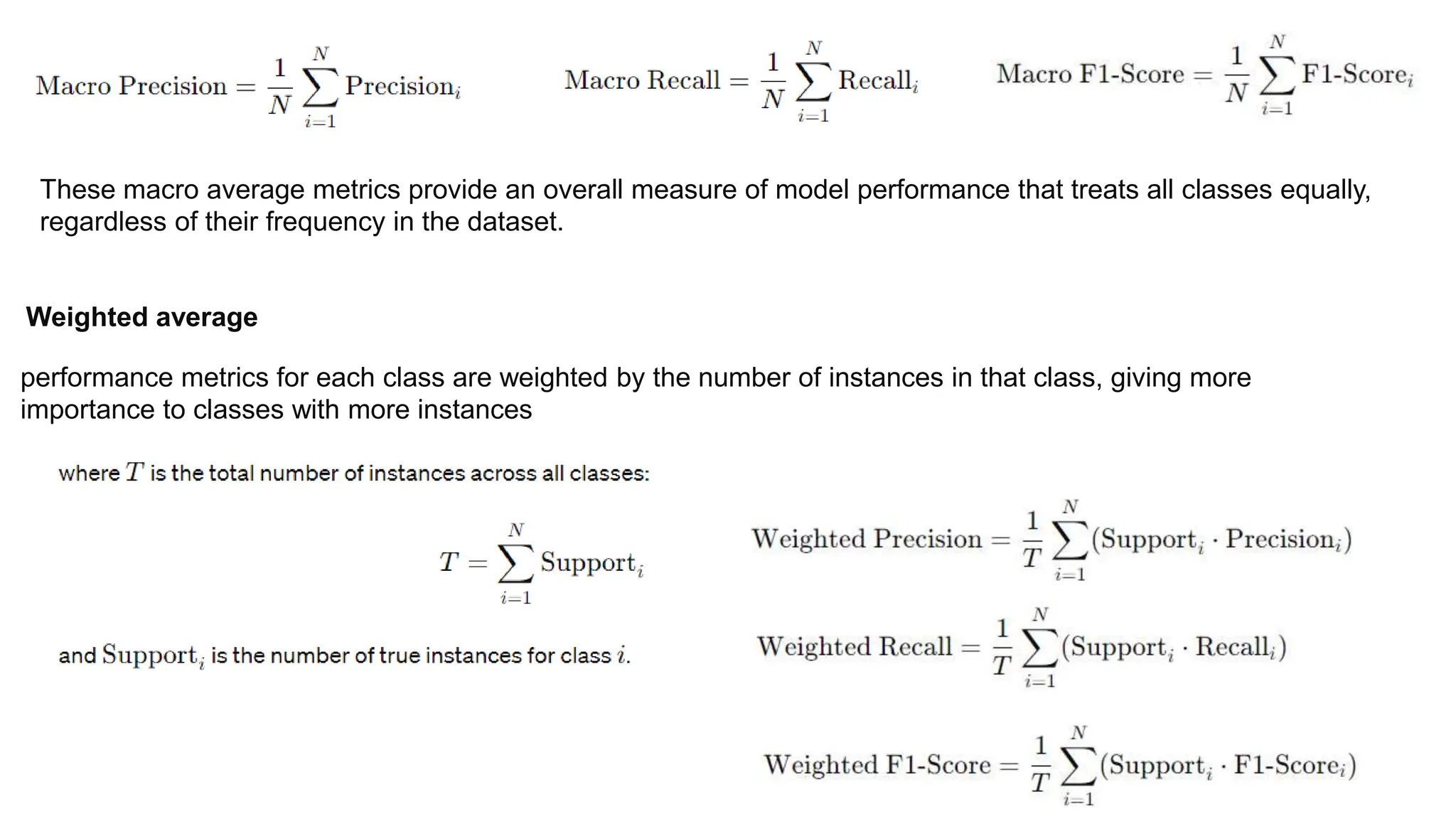

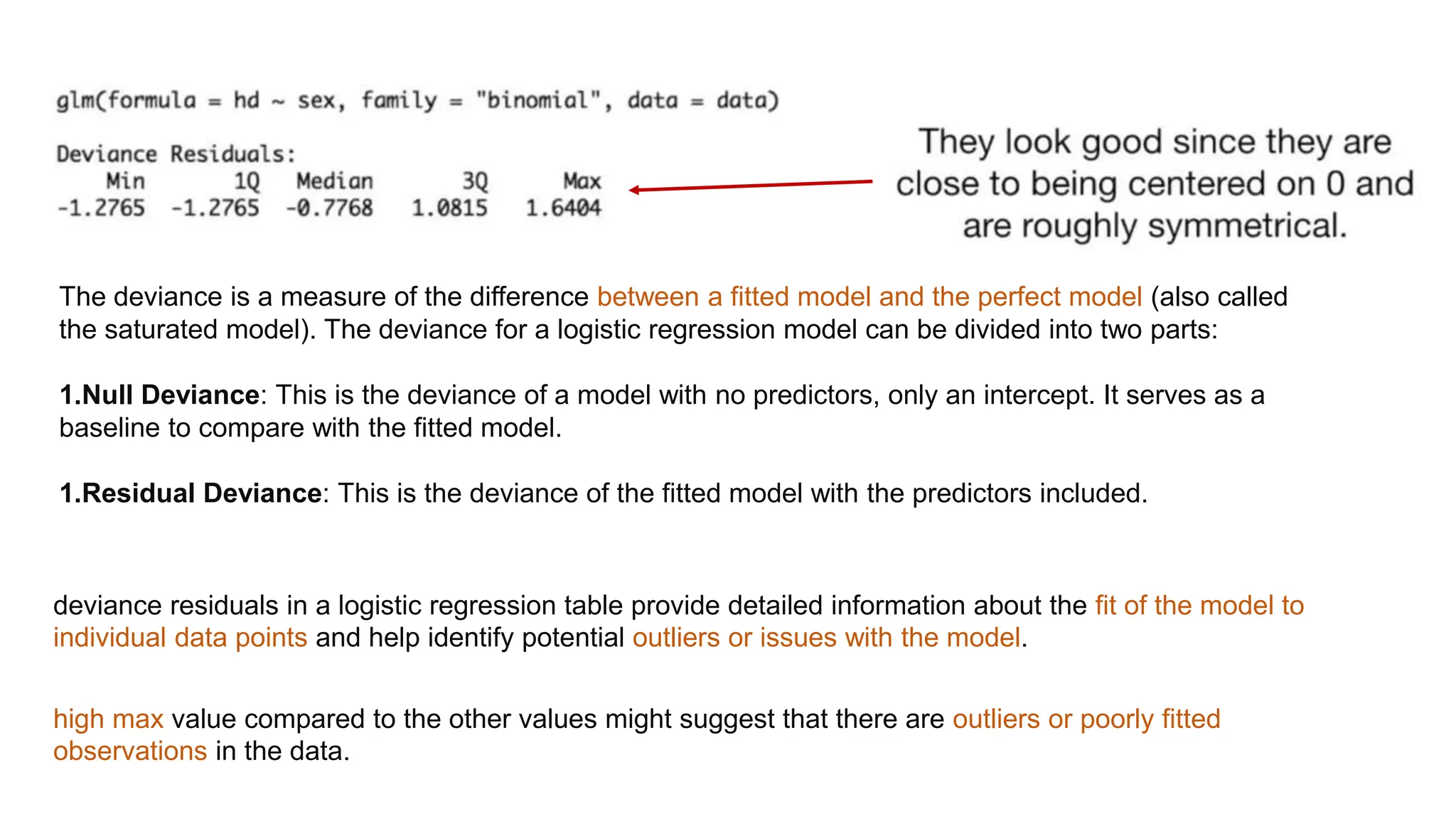

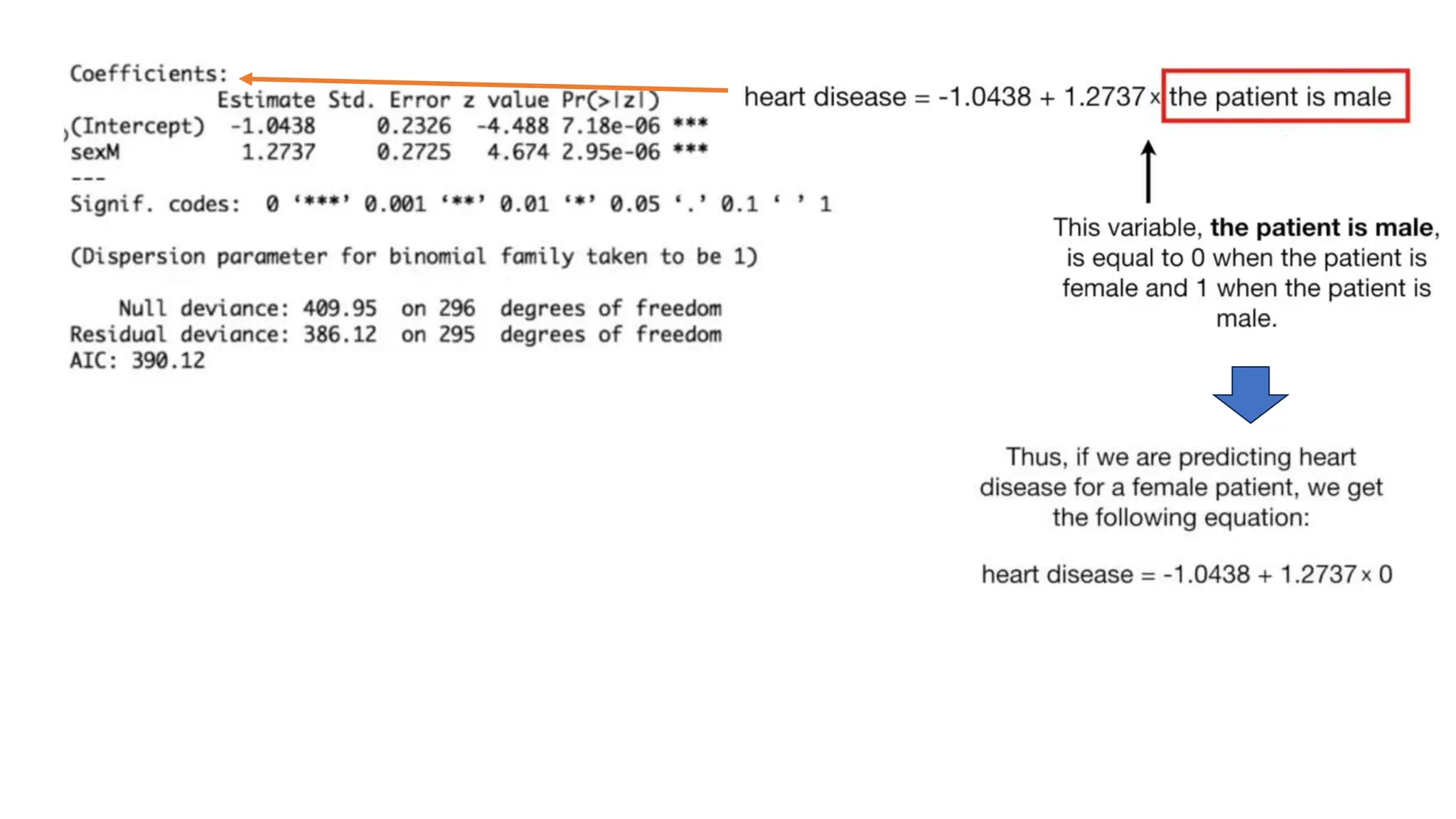

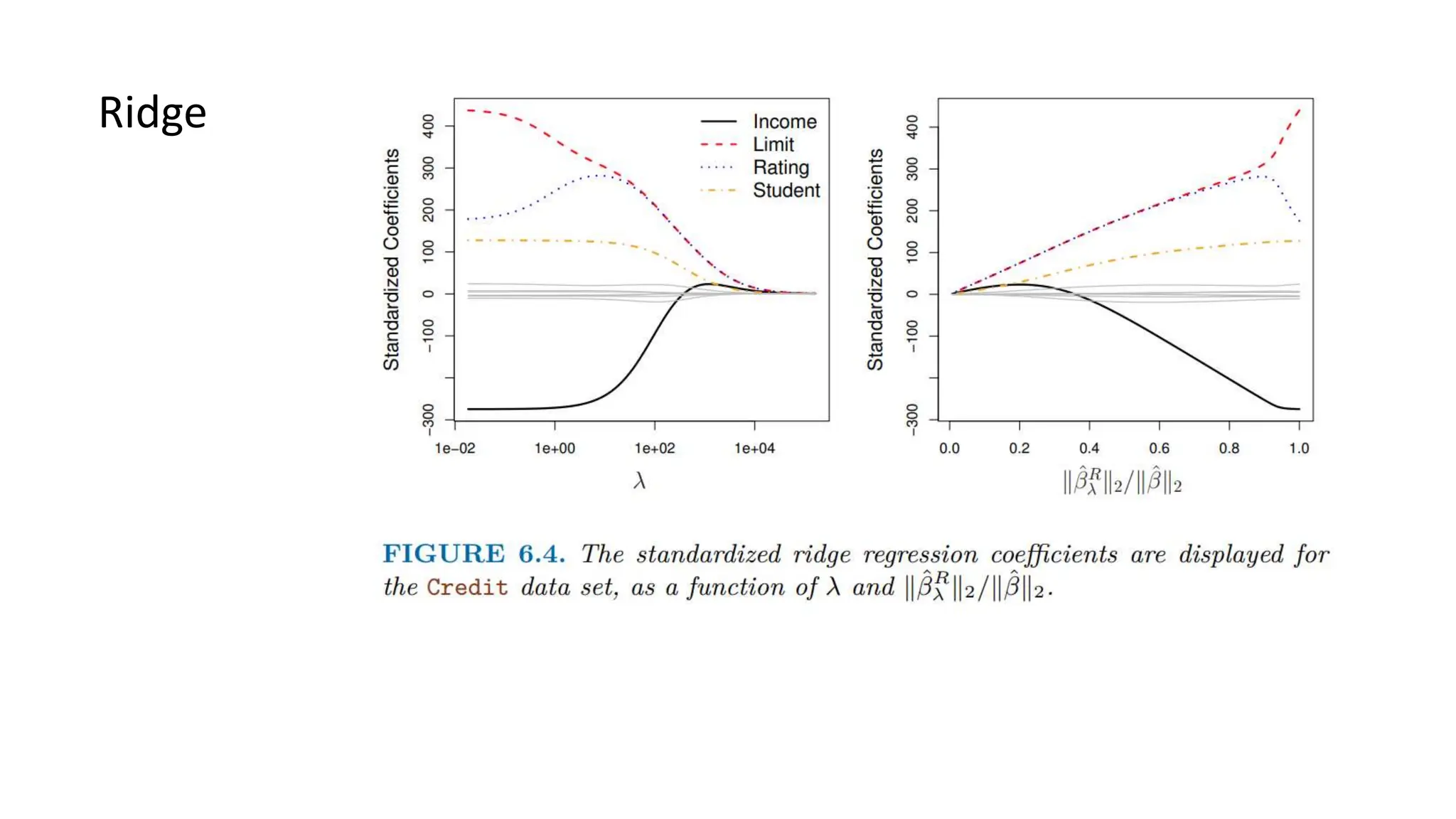

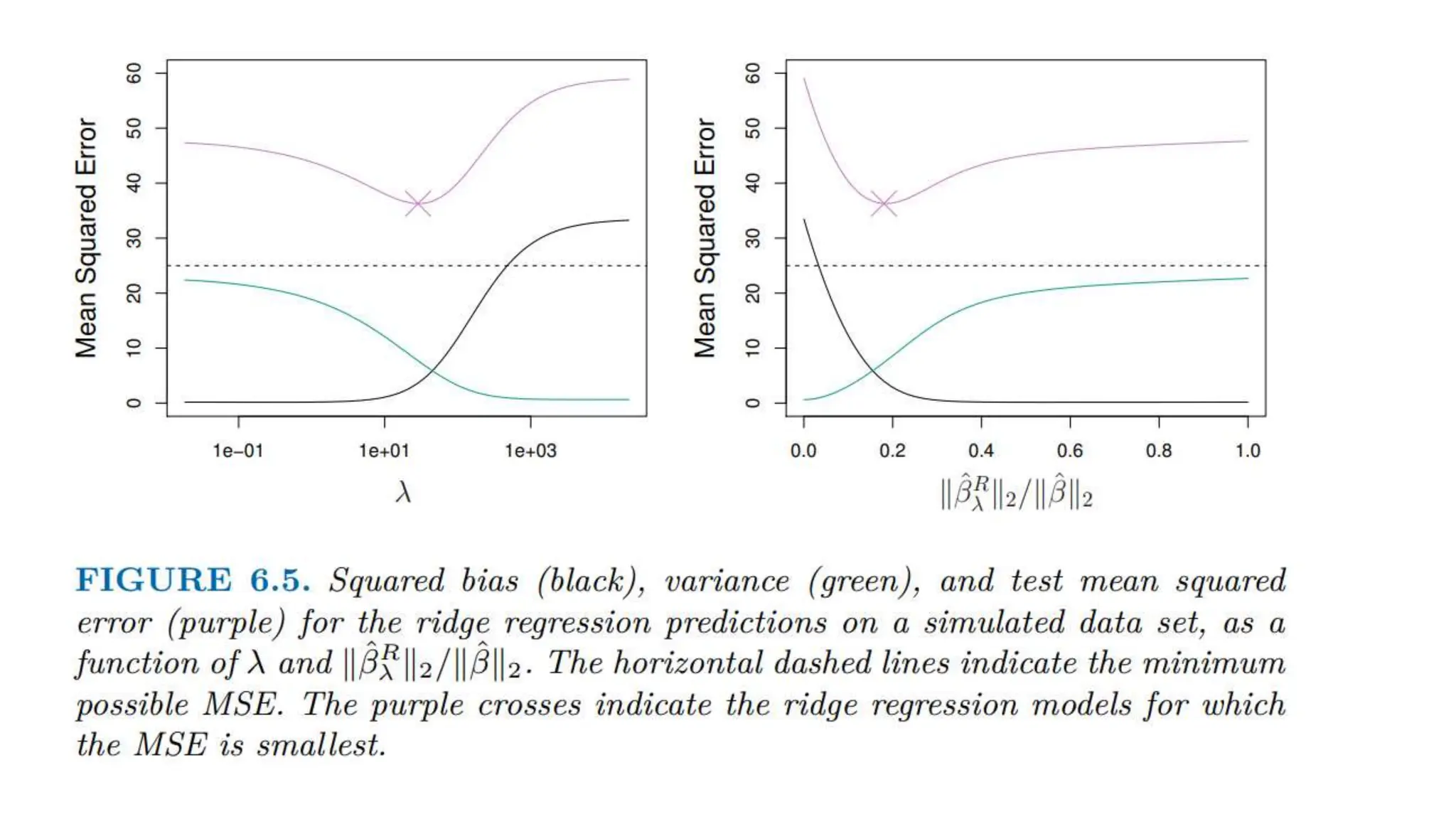

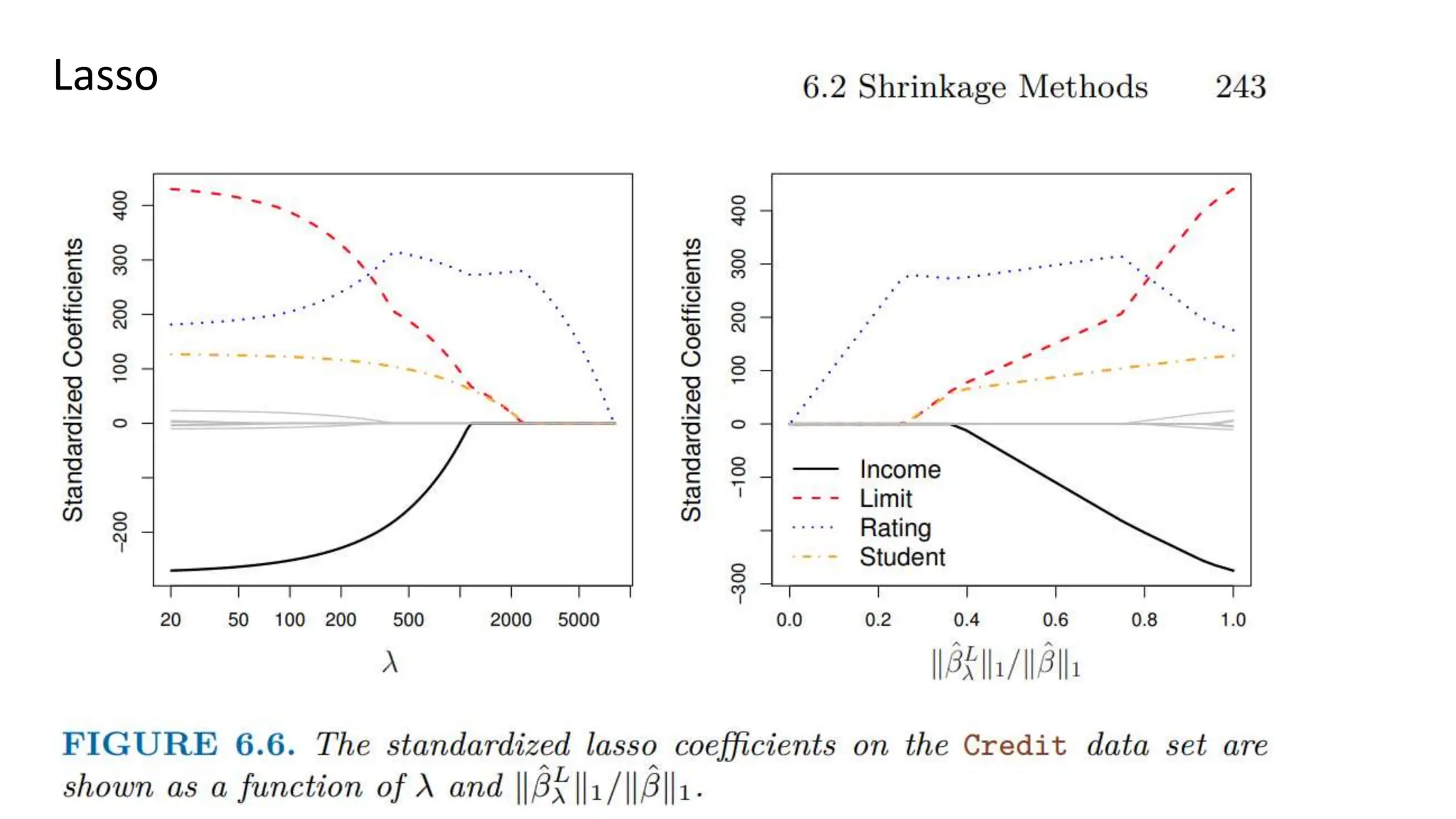

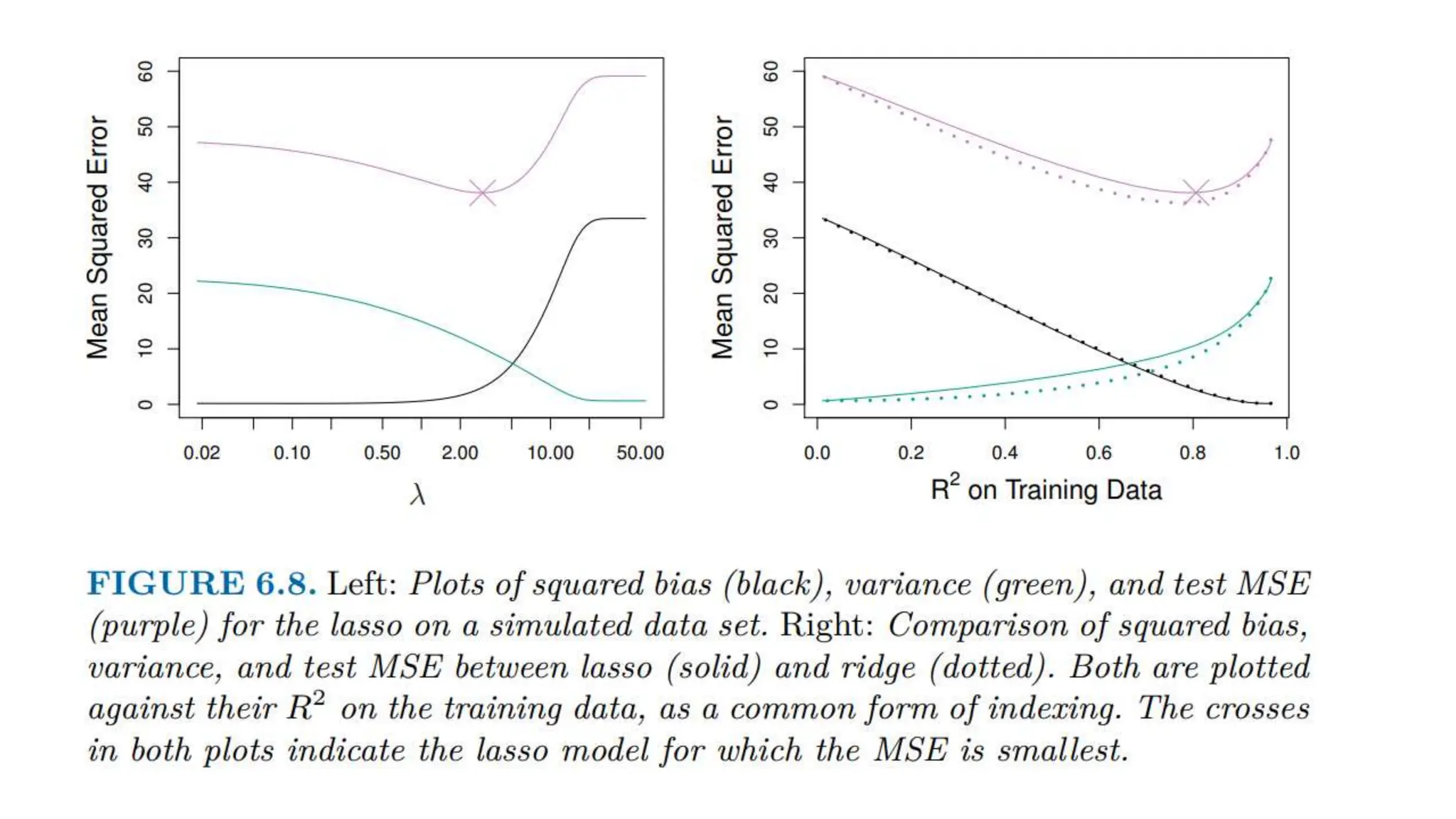

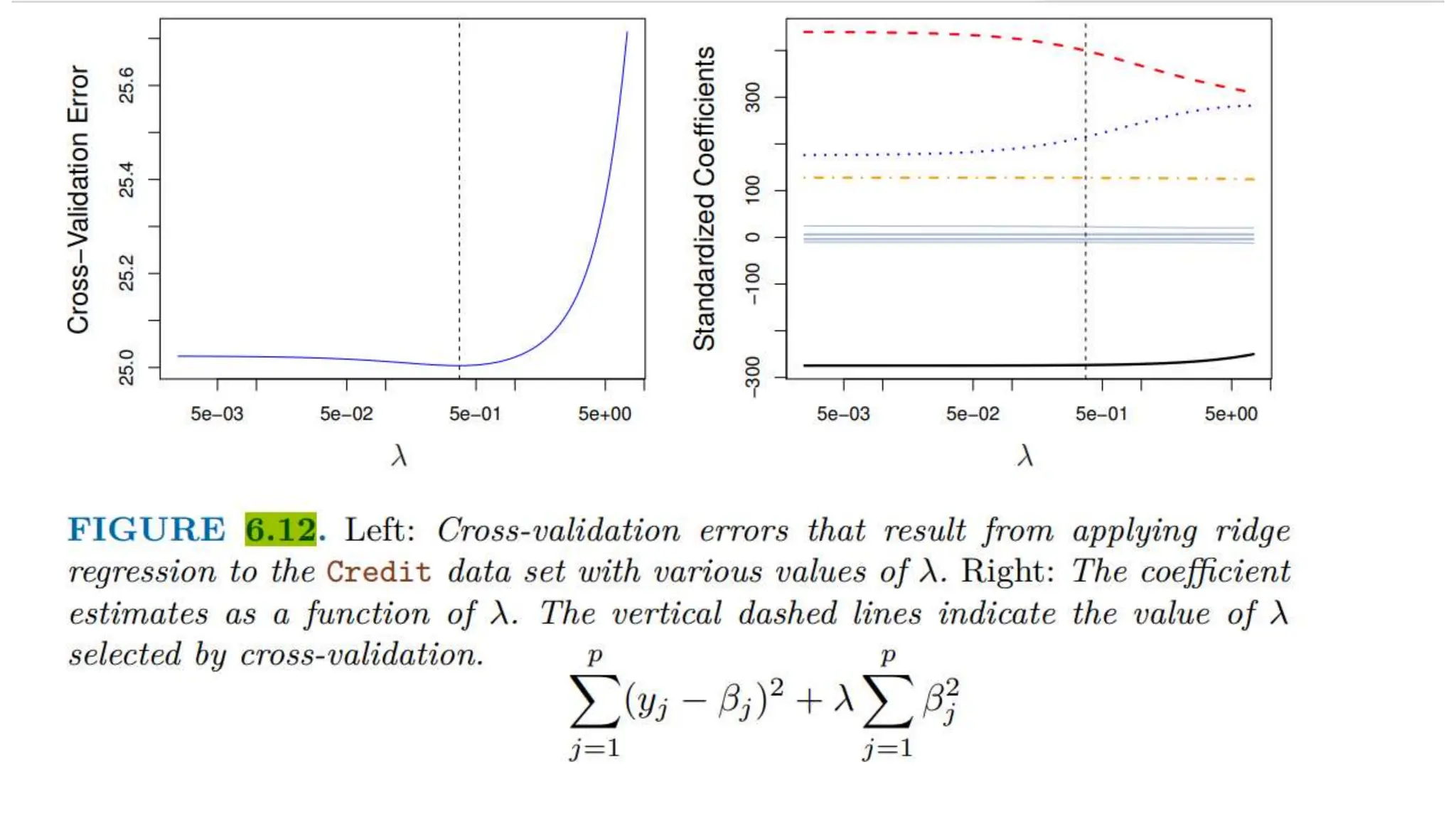

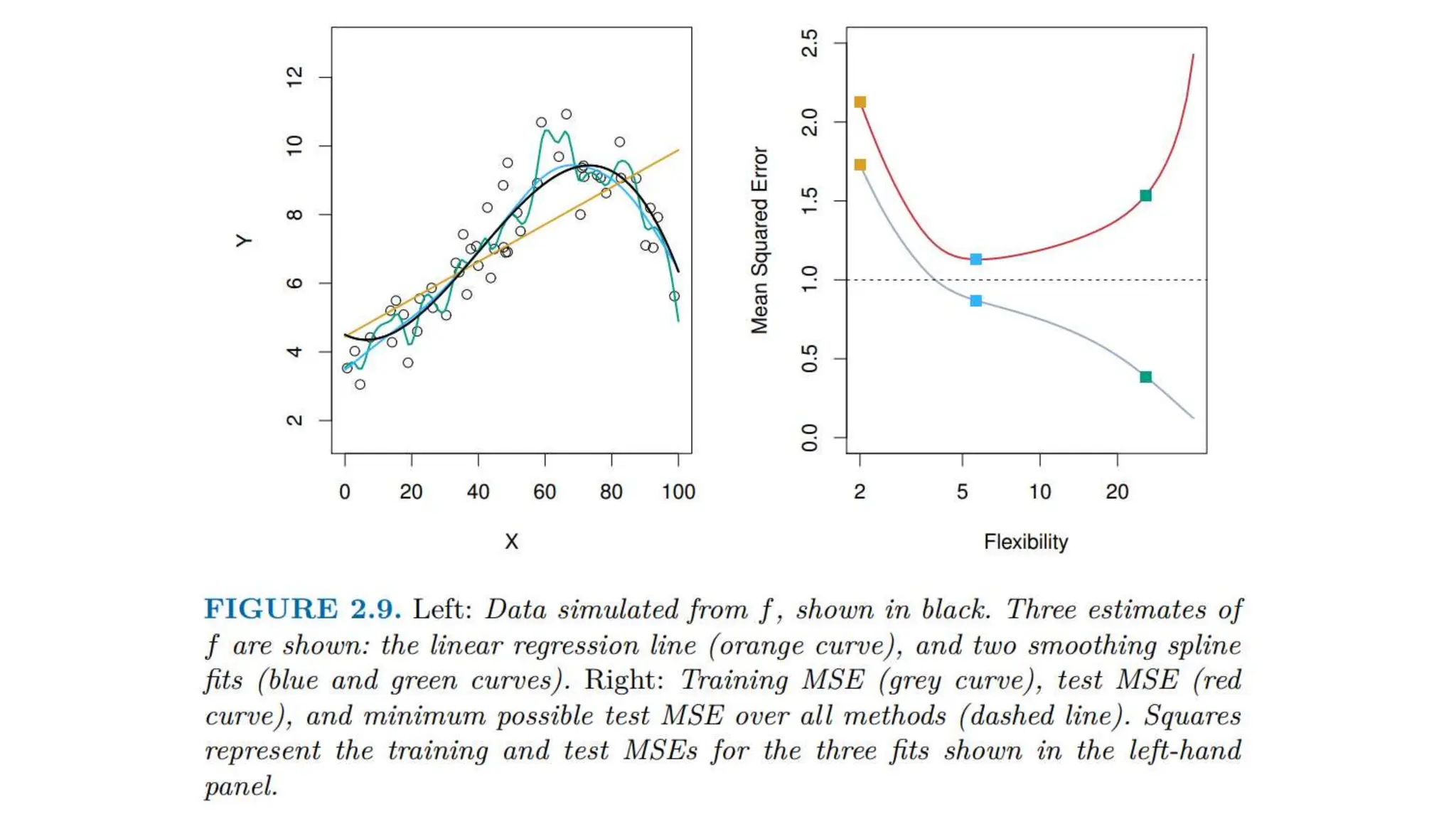

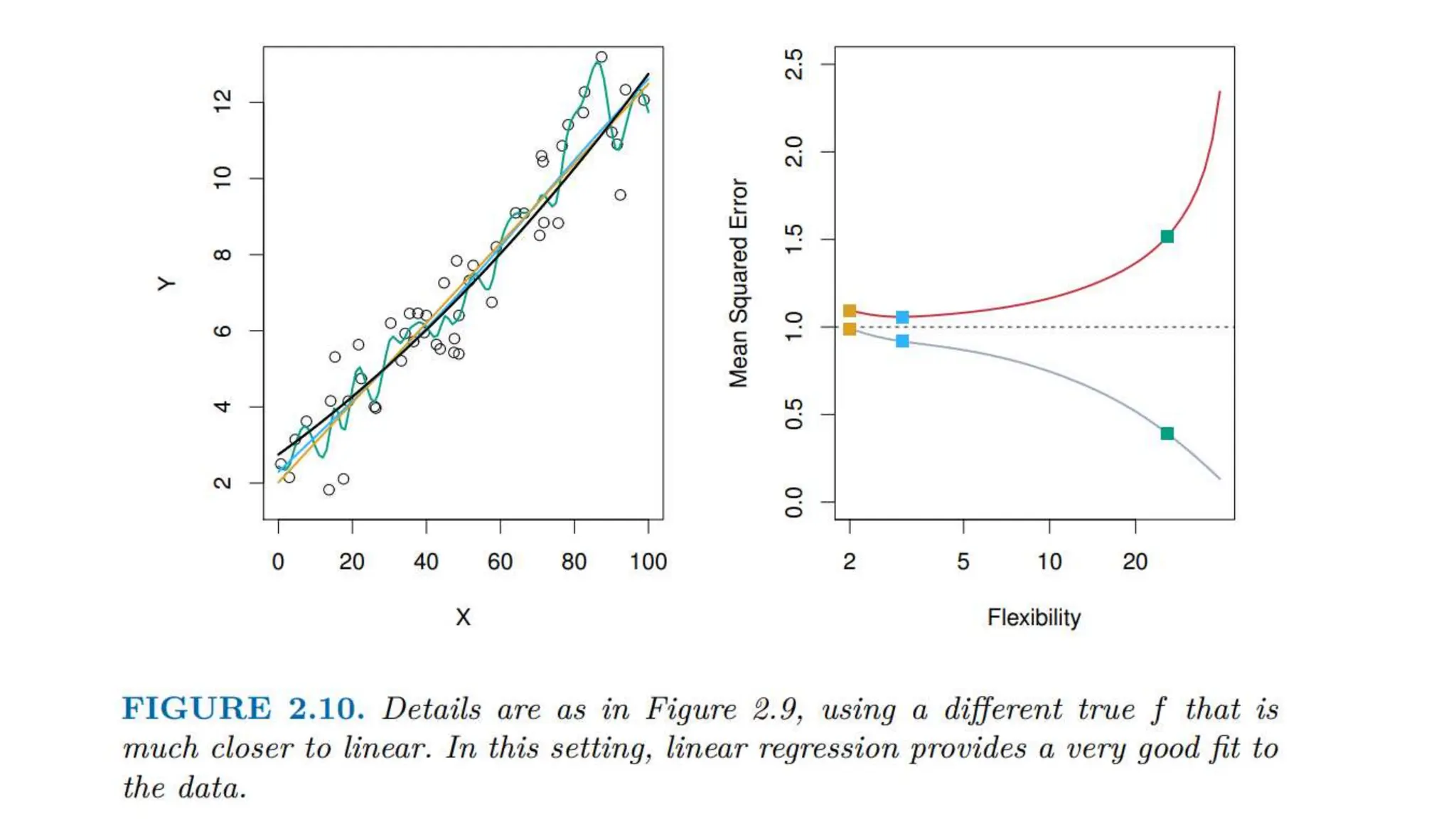

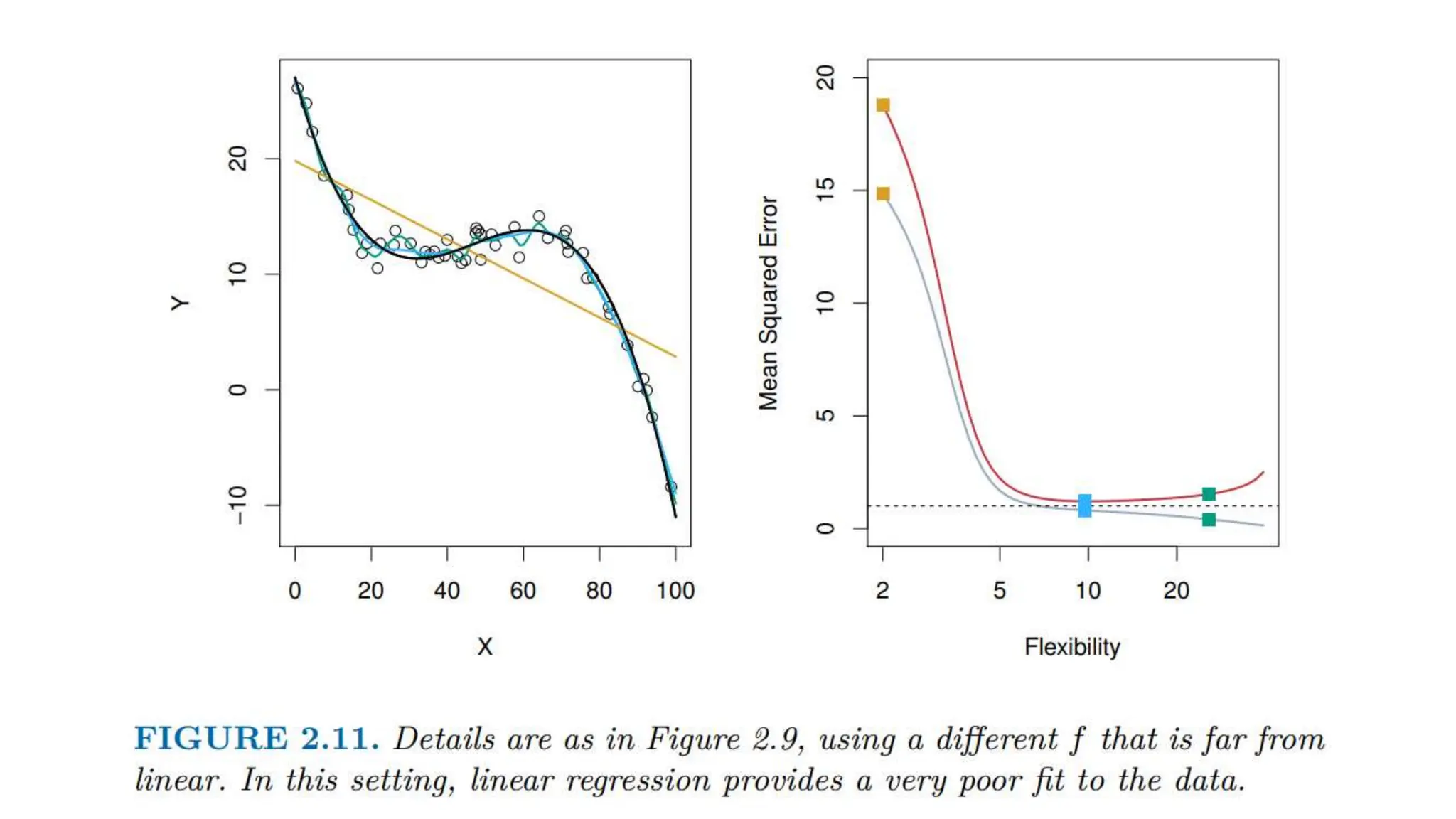

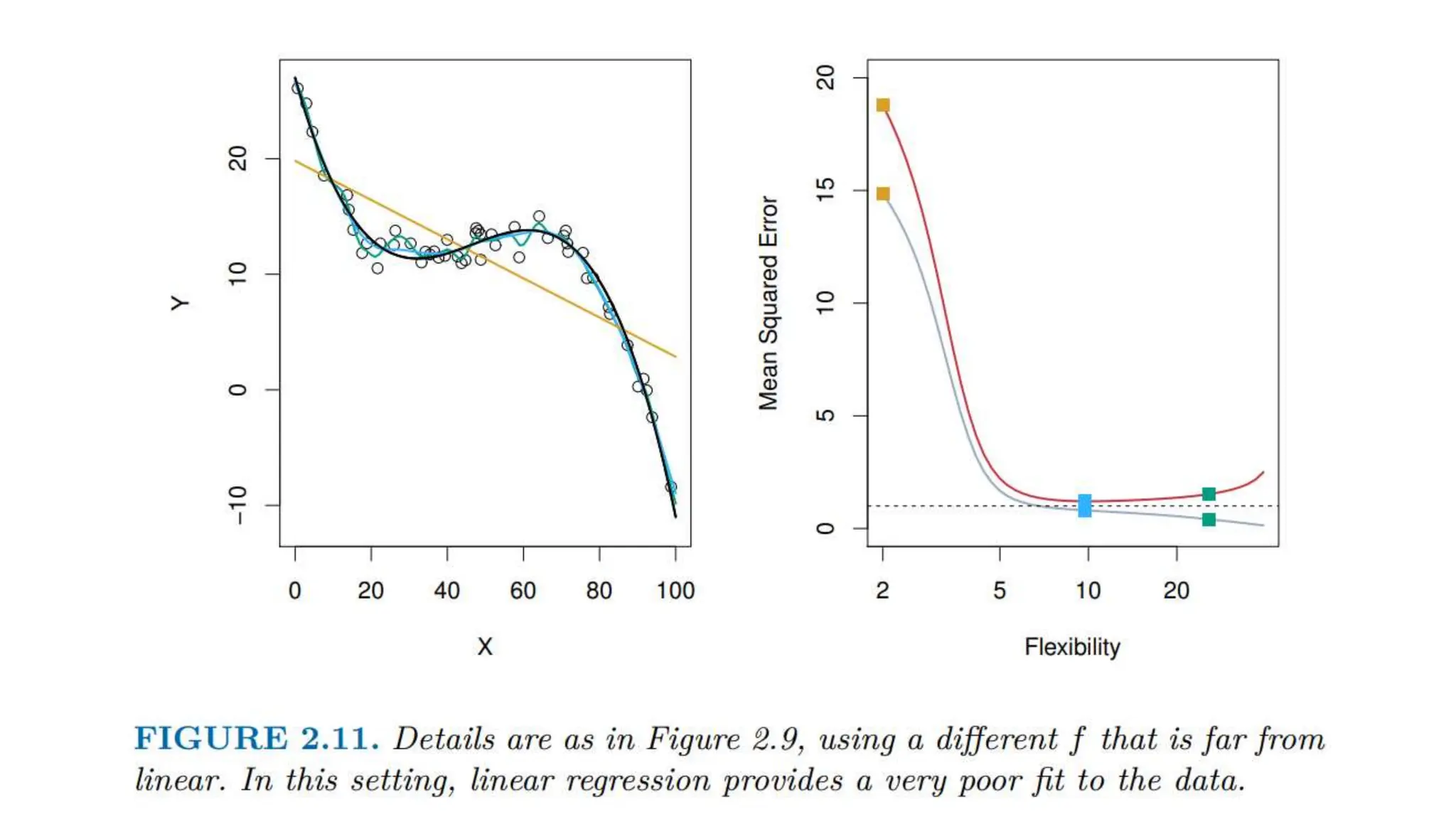

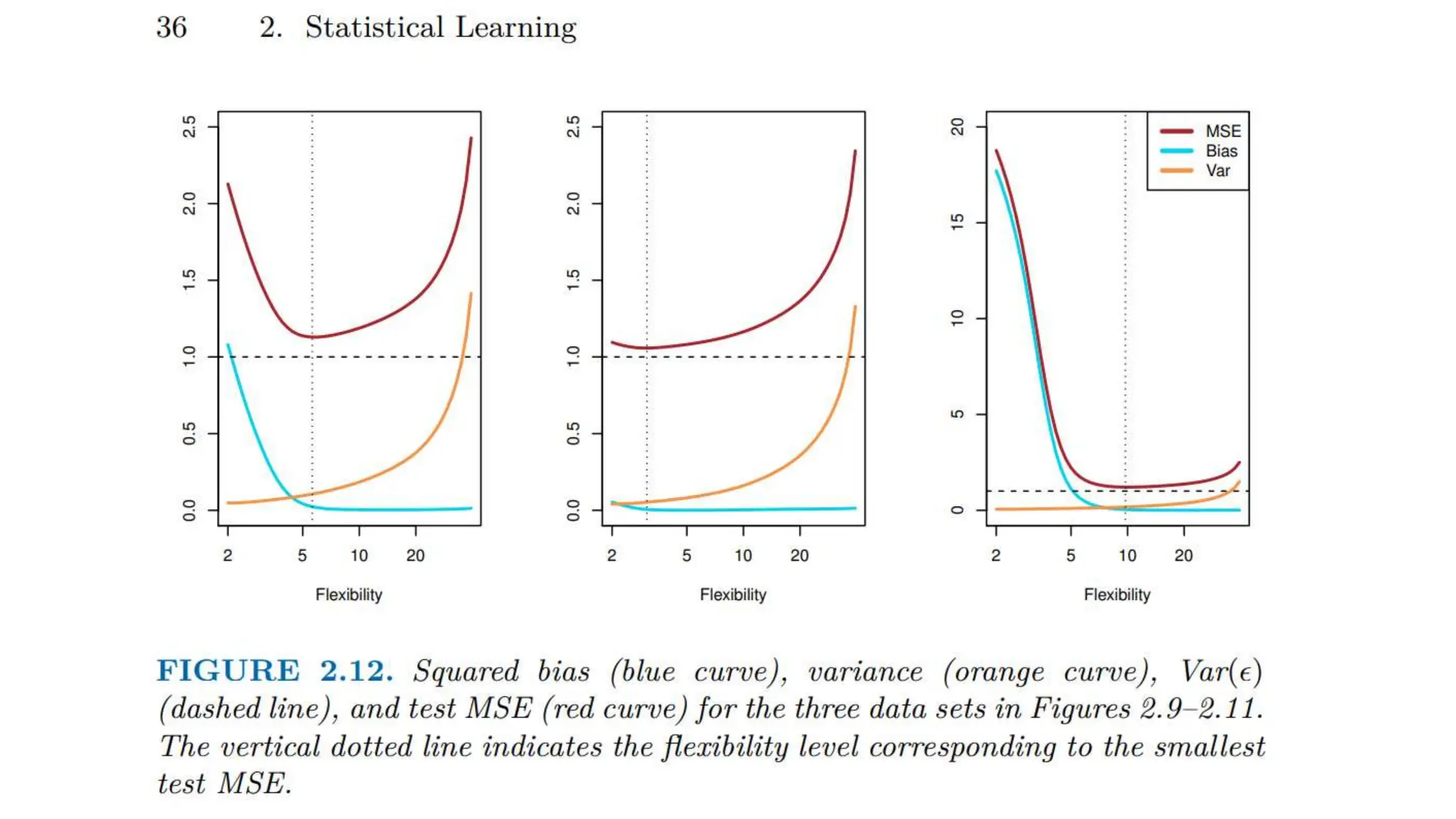

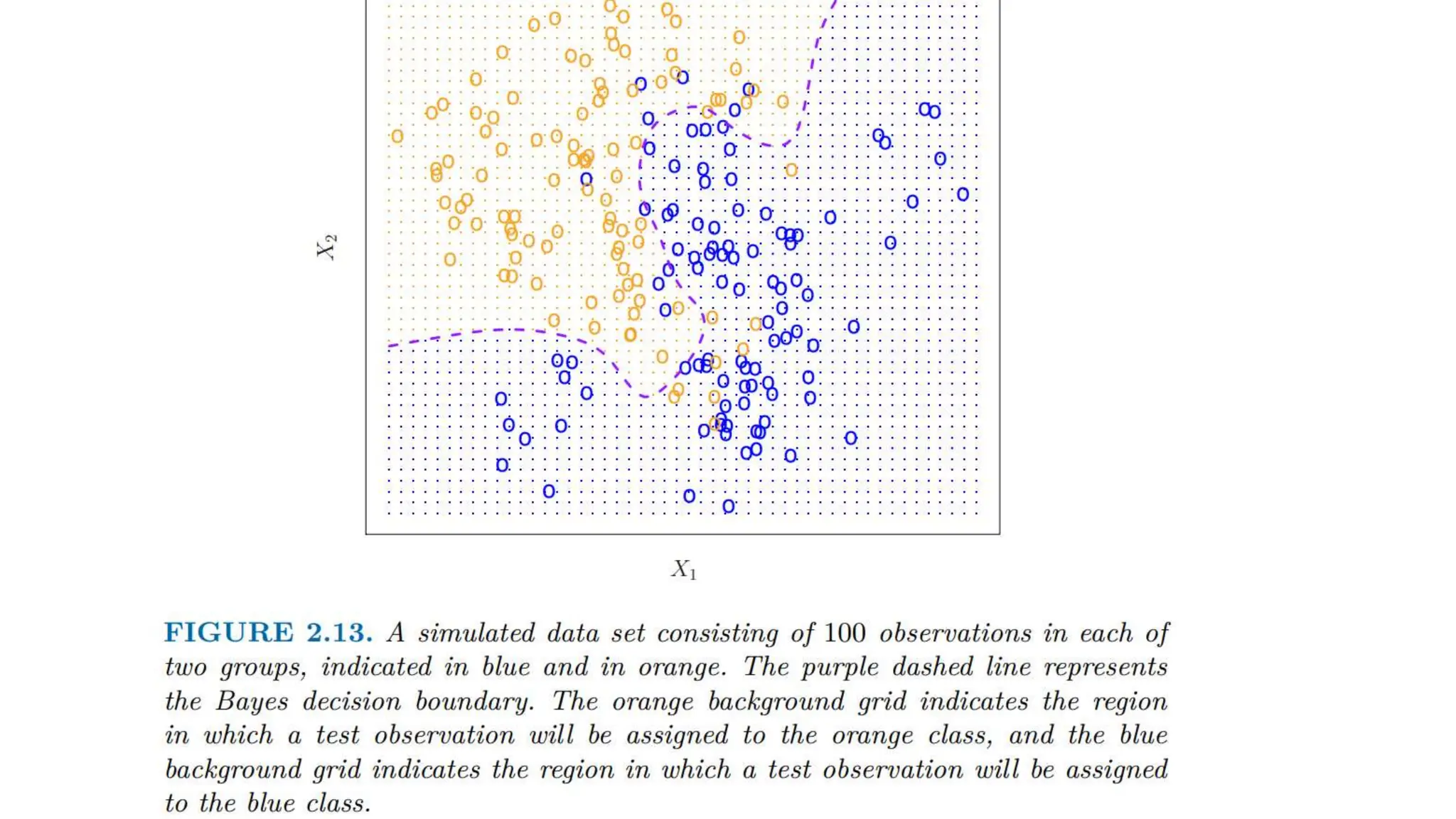

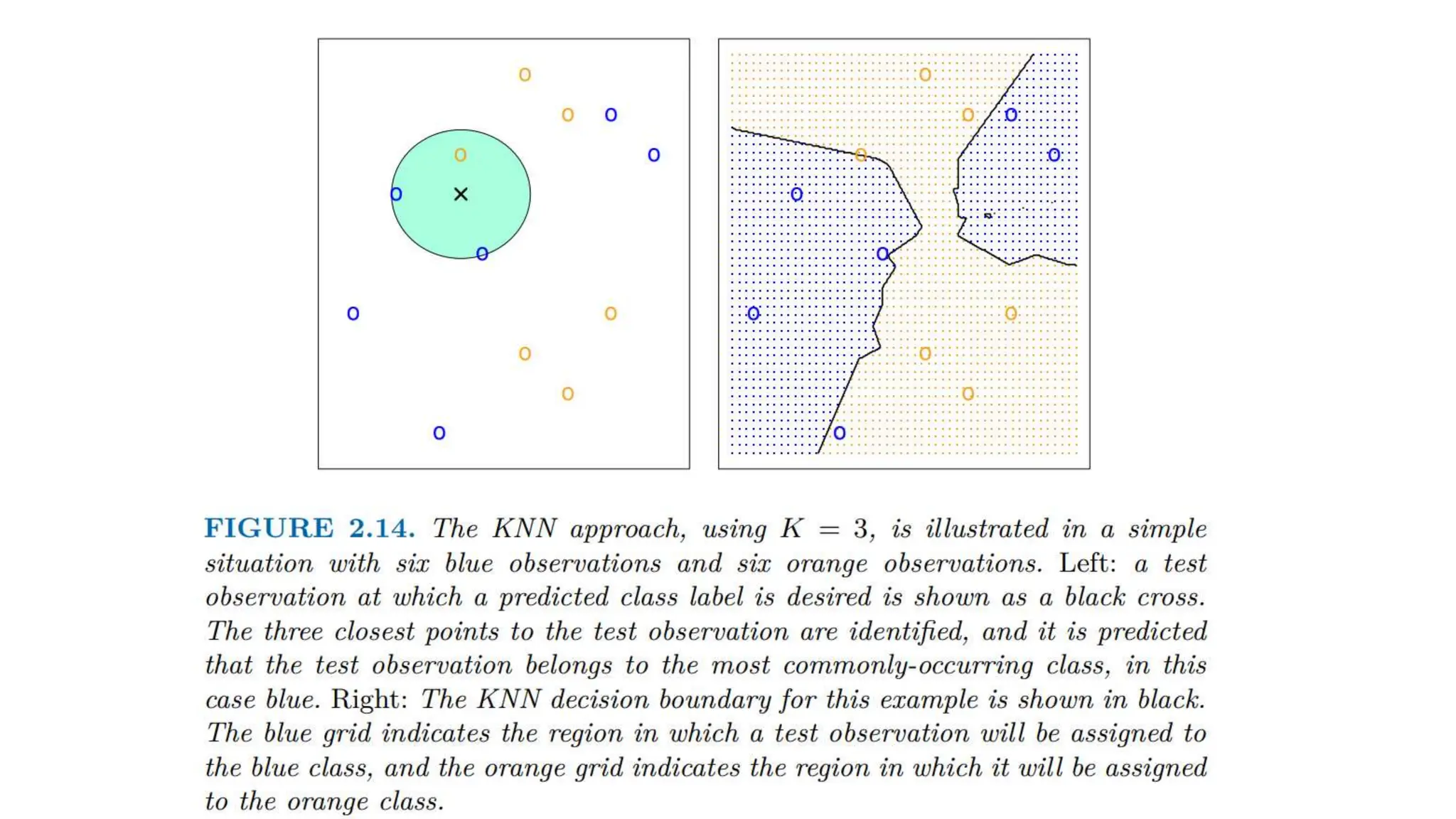

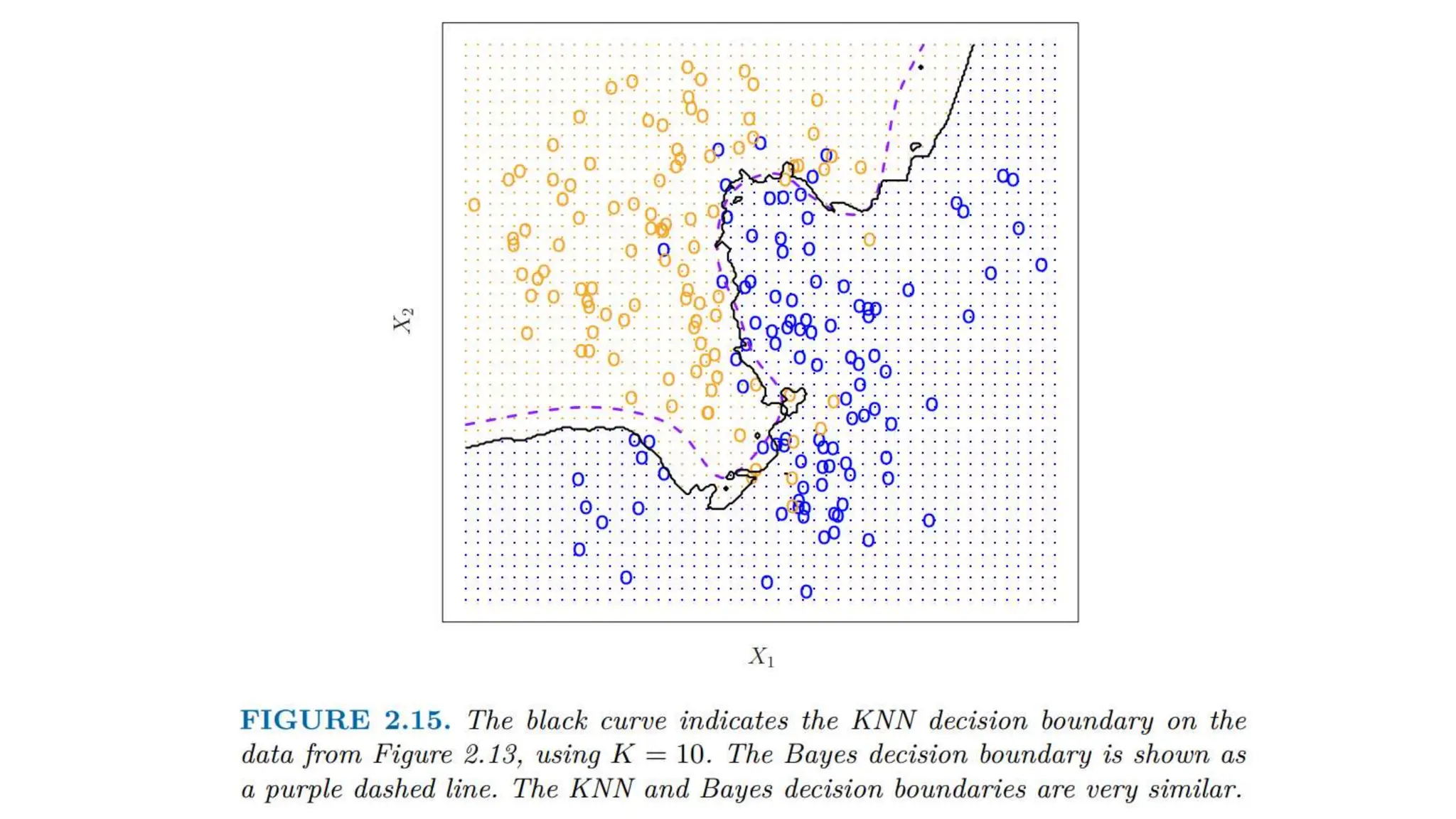

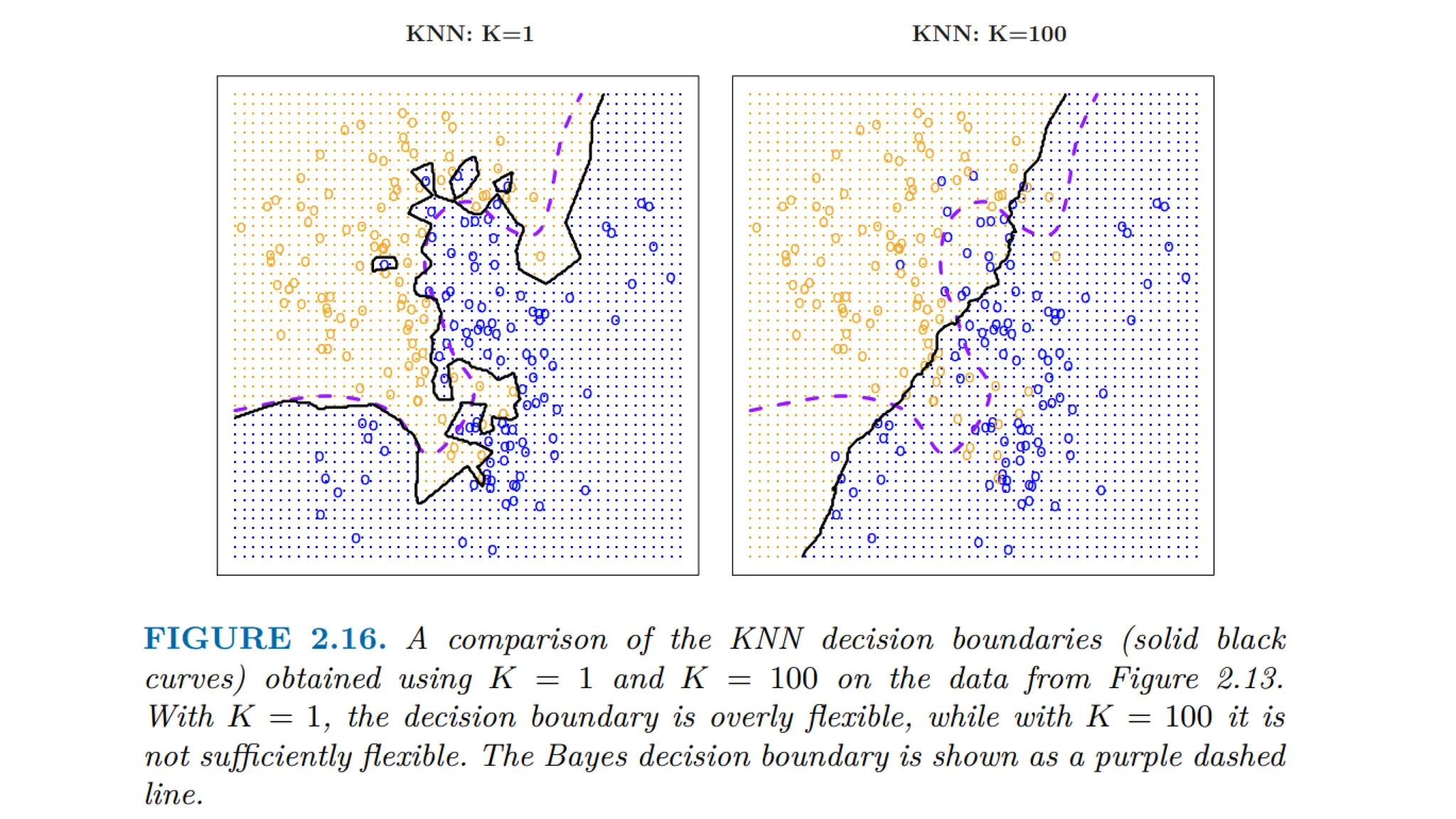

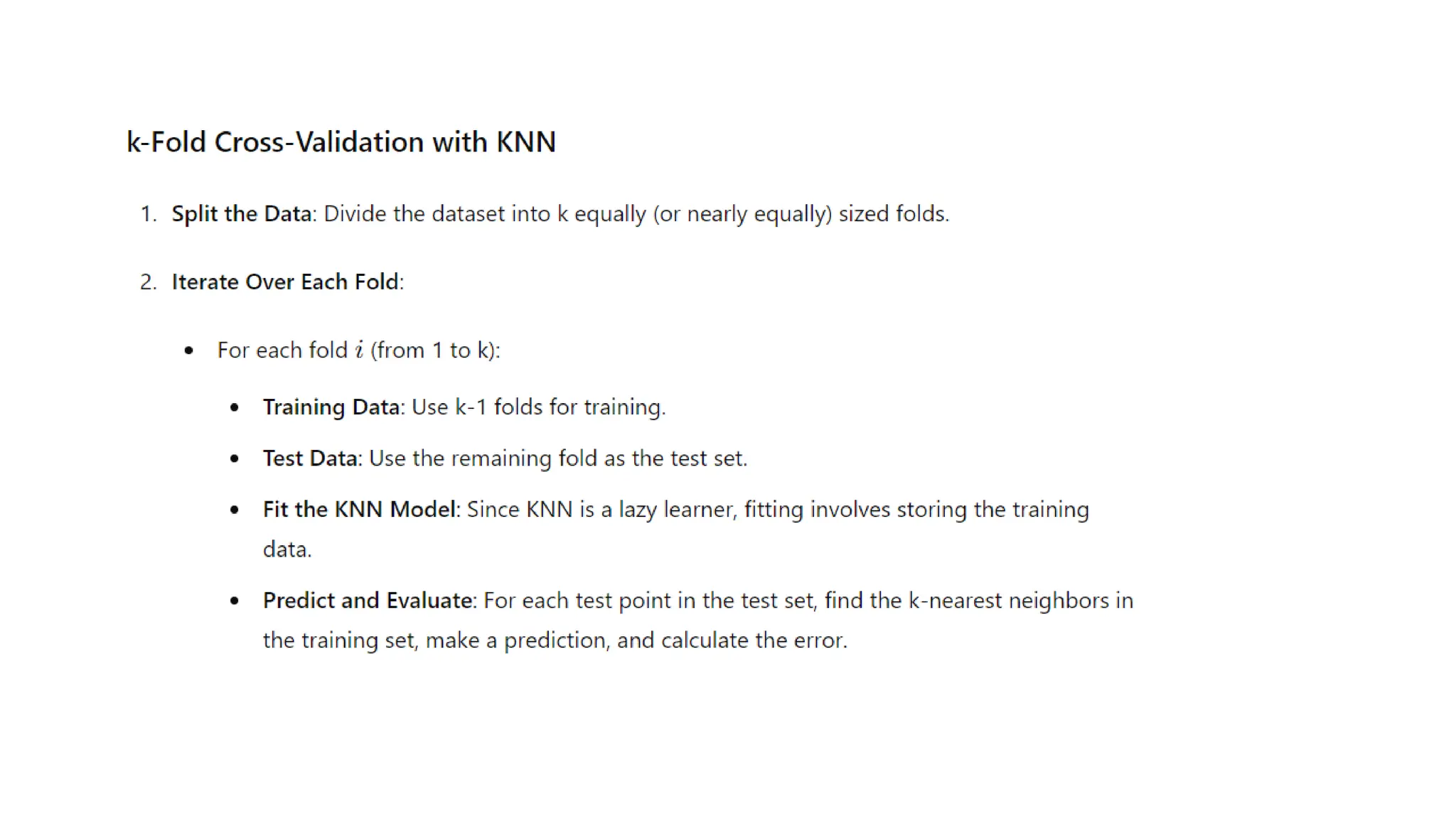

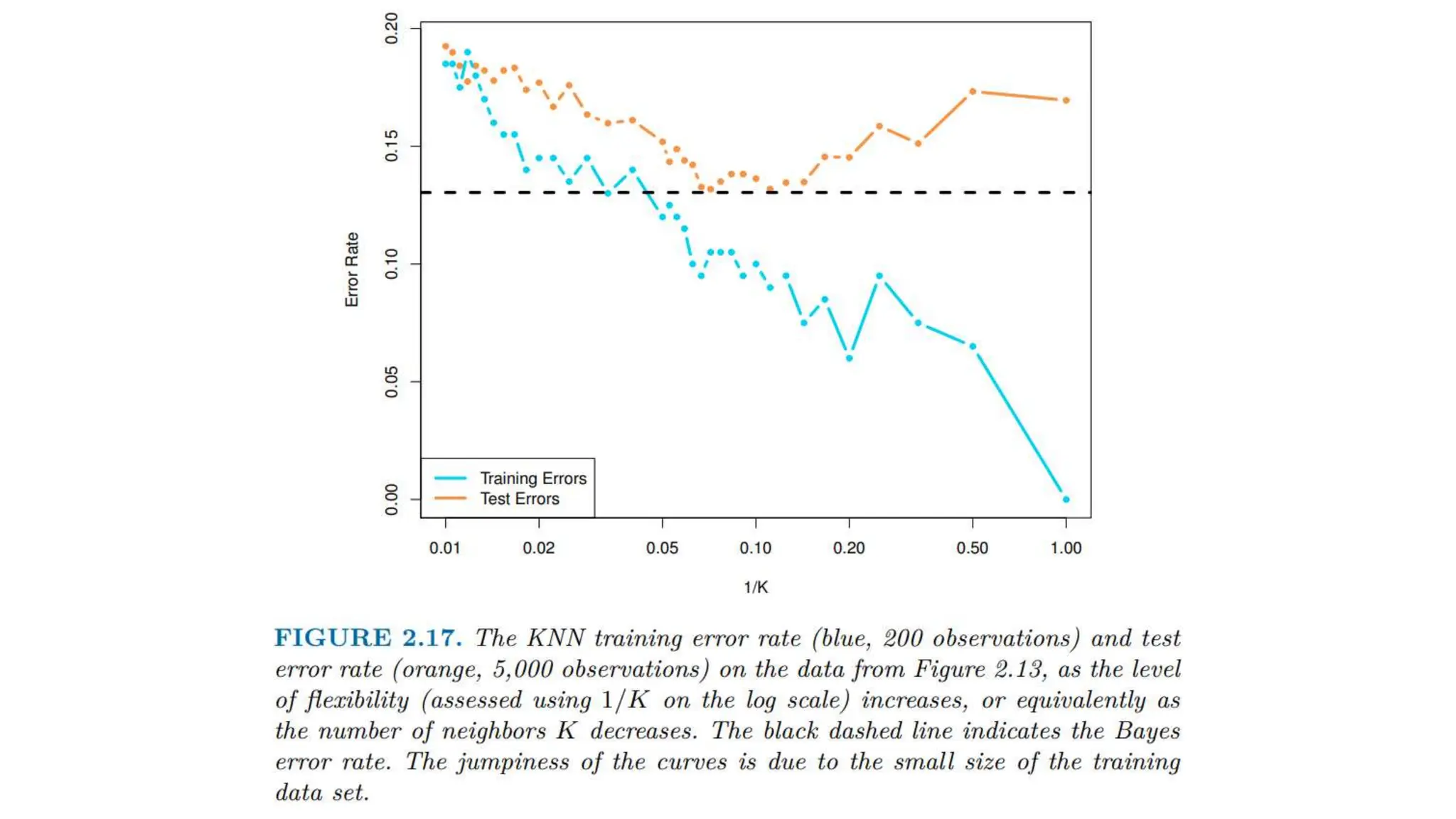

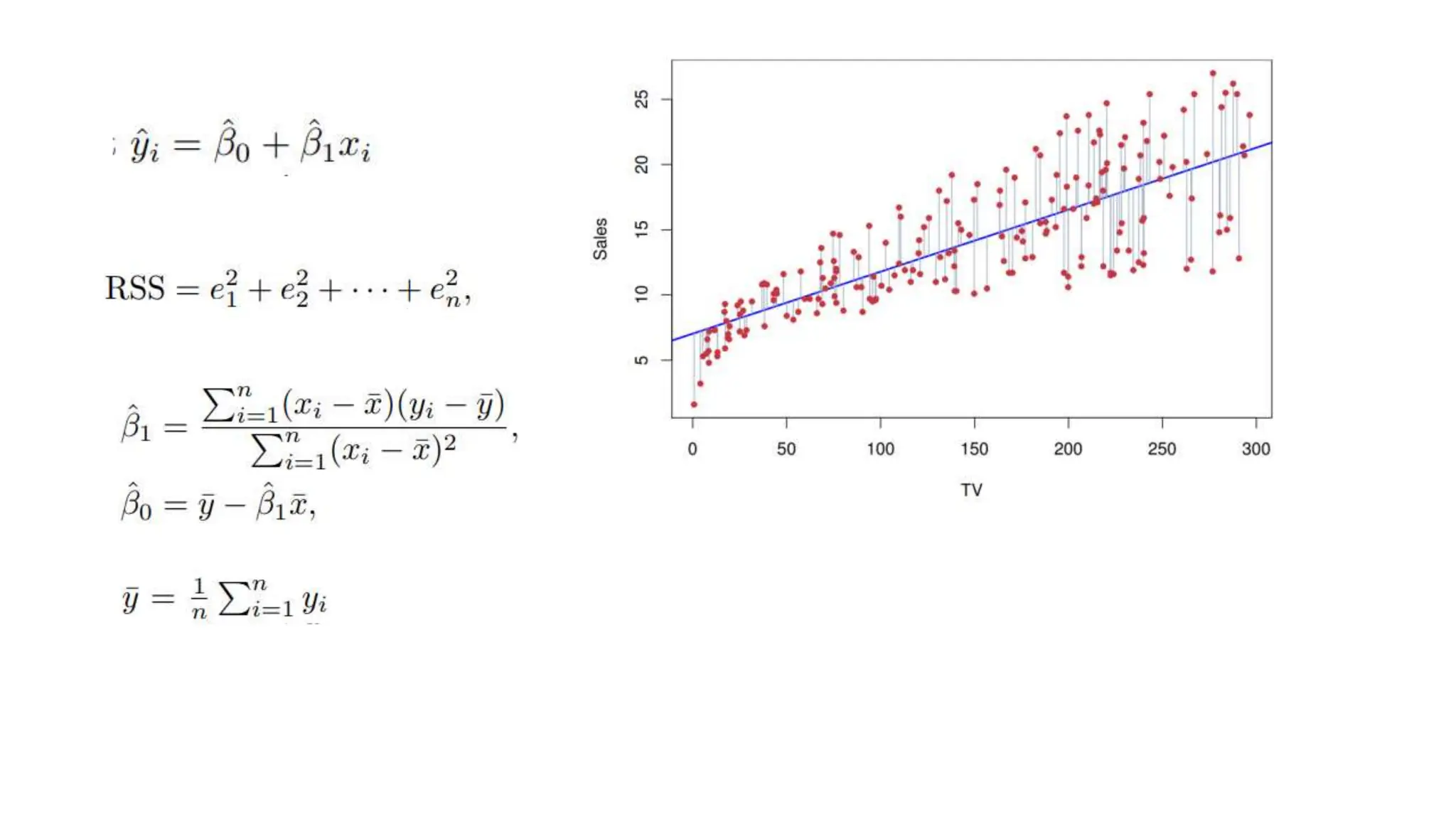

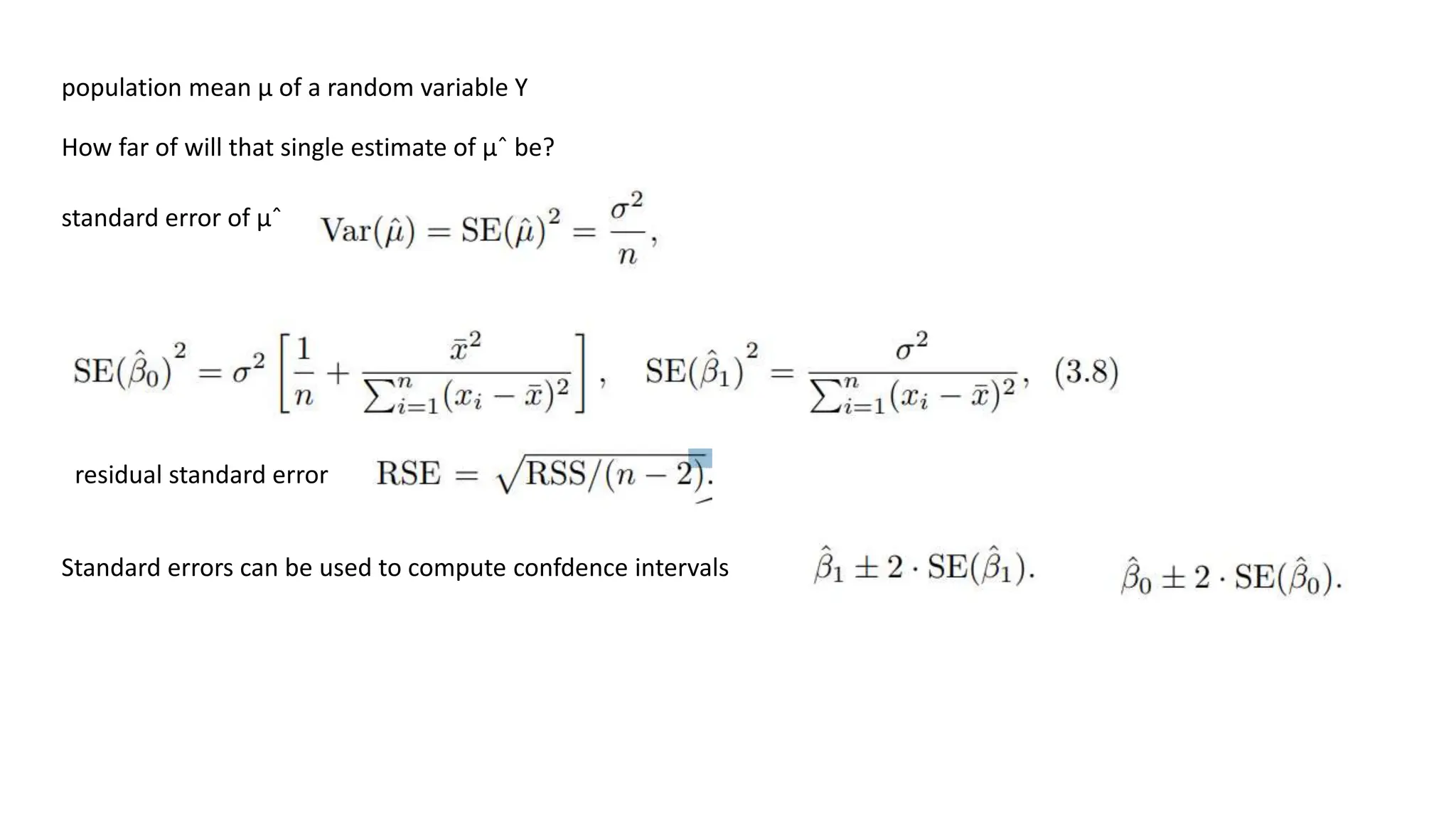

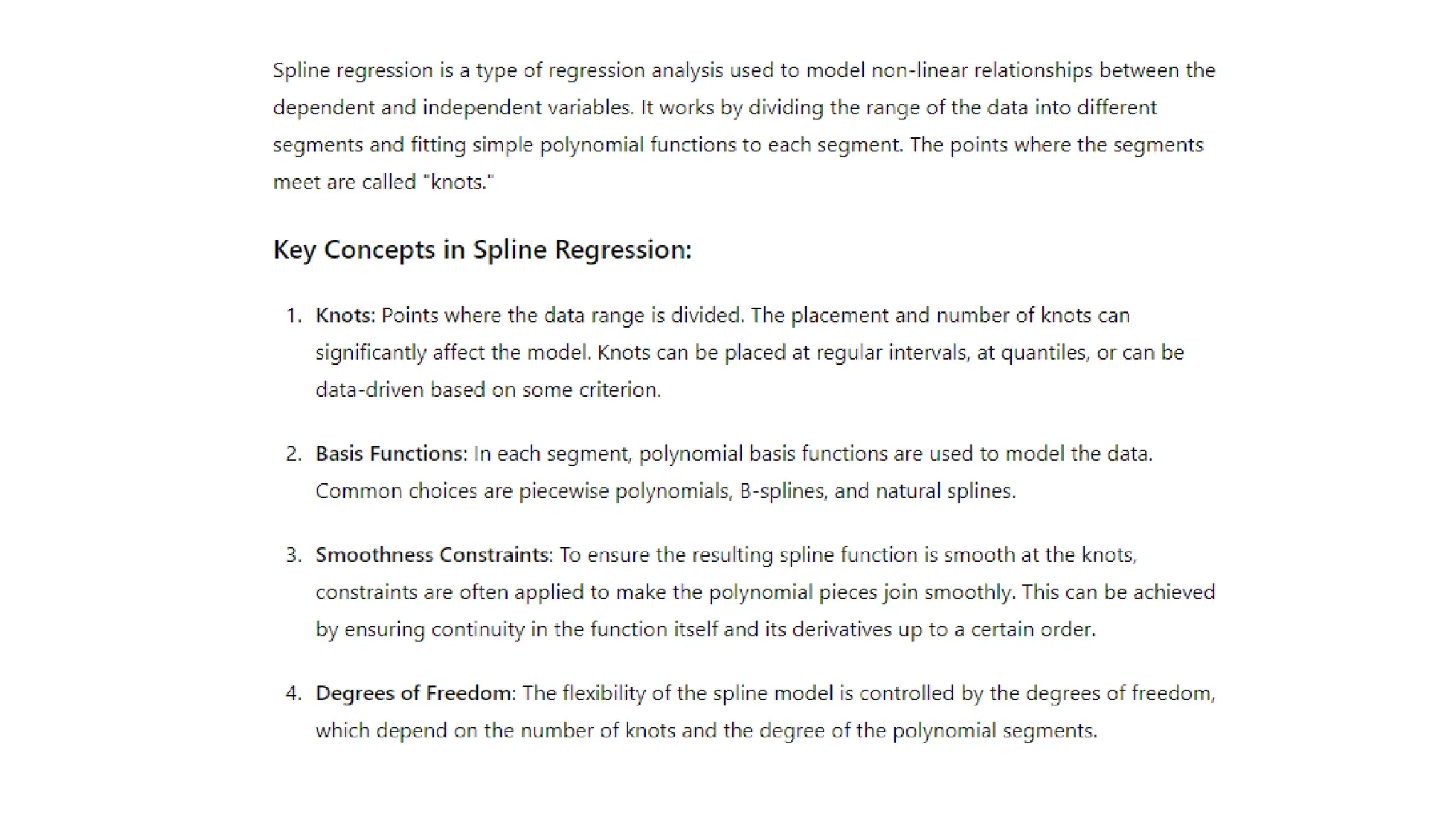

This document gives an overview of data science fundamentals: data types, statistical measures, relationships among data, and machine learning concepts. It covers exploratory data analysis (EDA), feature engineering, and feature selection as ways to improve model performance. It also contrasts PCA, which builds new features as combinations of the original ones, with traditional feature selection, which keeps a subset of the original features, and notes where each is applied in machine learning.
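
The difference between PCA and traditional feature selection can be made concrete with a small sketch. The code below is only an illustration under stated assumptions: the four-row dataset is made up, the helper names (`select_by_variance`, `first_principal_component`) are hypothetical, variance ranking stands in for whichever selection criterion the slides use, and the PCA step uses a closed form that only applies to two features.

```python
import math
from statistics import mean, pvariance

# Toy dataset, invented for illustration: each row is a sample with two features.
rows = [(2.0, 1.9), (4.0, 4.1), (6.0, 6.2), (8.0, 7.8)]


def column(data, j):
    """Return feature j as a list of values."""
    return [r[j] for r in data]


def select_by_variance(data, k):
    """Feature selection: keep the k ORIGINAL columns with the highest variance."""
    n_cols = len(data[0])
    ranked = sorted(range(n_cols),
                    key=lambda j: pvariance(column(data, j)),
                    reverse=True)
    keep = sorted(ranked[:k])
    return keep, [tuple(r[j] for j in keep) for r in data]


def first_principal_component(data):
    """PCA for two features: build ONE NEW feature along the direction of
    maximum variance (closed form for a 2x2 covariance matrix)."""
    xs, ys = column(data, 0), column(data, 1)
    mx, my = mean(xs), mean(ys)
    sxx = mean((x - mx) ** 2 for x in xs)
    syy = mean((y - my) ** 2 for y in ys)
    sxy = mean((x - mx) * (y - my) for x, y in zip(xs, ys))
    # Angle of the leading eigenvector of [[sxx, sxy], [sxy, syy]].
    theta = 0.5 * math.atan2(2 * sxy, sxx - syy)
    direction = (math.cos(theta), math.sin(theta))
    # Project each centered sample onto that direction.
    scores = [(x - mx) * direction[0] + (y - my) * direction[1]
              for x, y in zip(xs, ys)]
    return direction, scores


kept, reduced = select_by_variance(rows, 1)
direction, scores = first_principal_component(rows)
print("selected original column(s):", kept)
print("PCA direction (weights on both features):", direction)
```

Selection returns one of the original columns unchanged, so the result stays directly interpretable. The PCA score mixes both columns with non-zero weights, which usually retains more variance but no longer corresponds to a single original feature.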