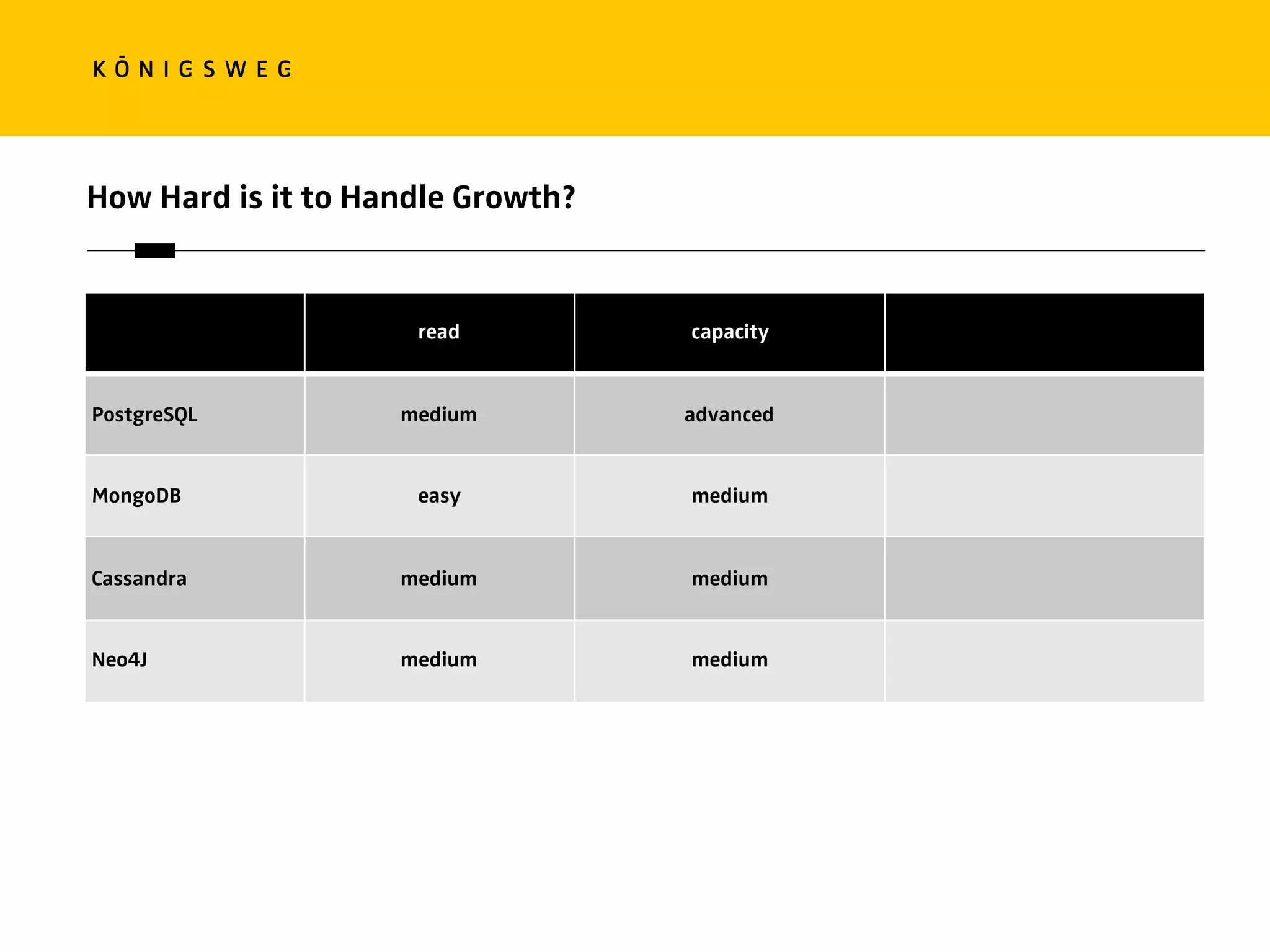

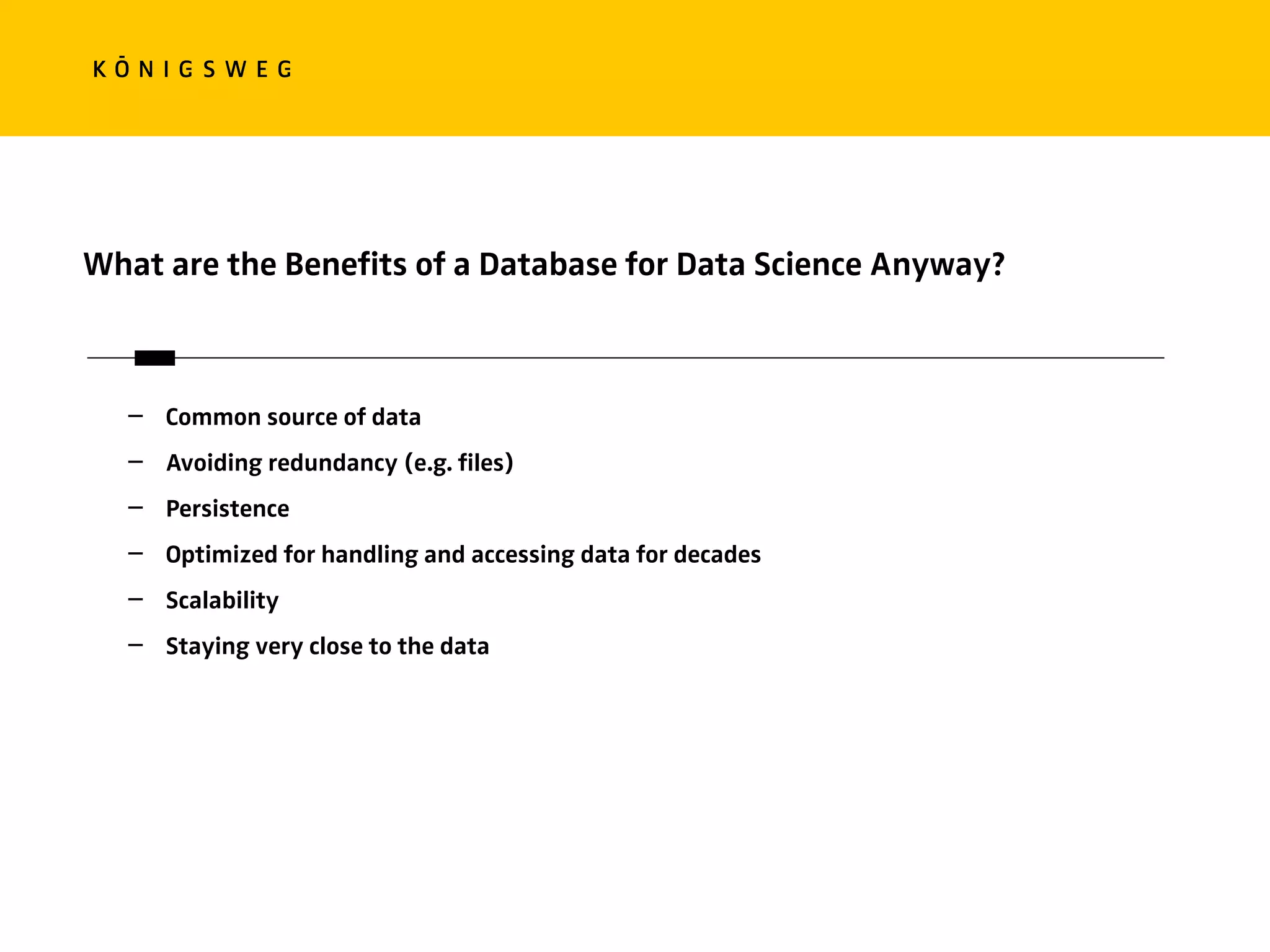

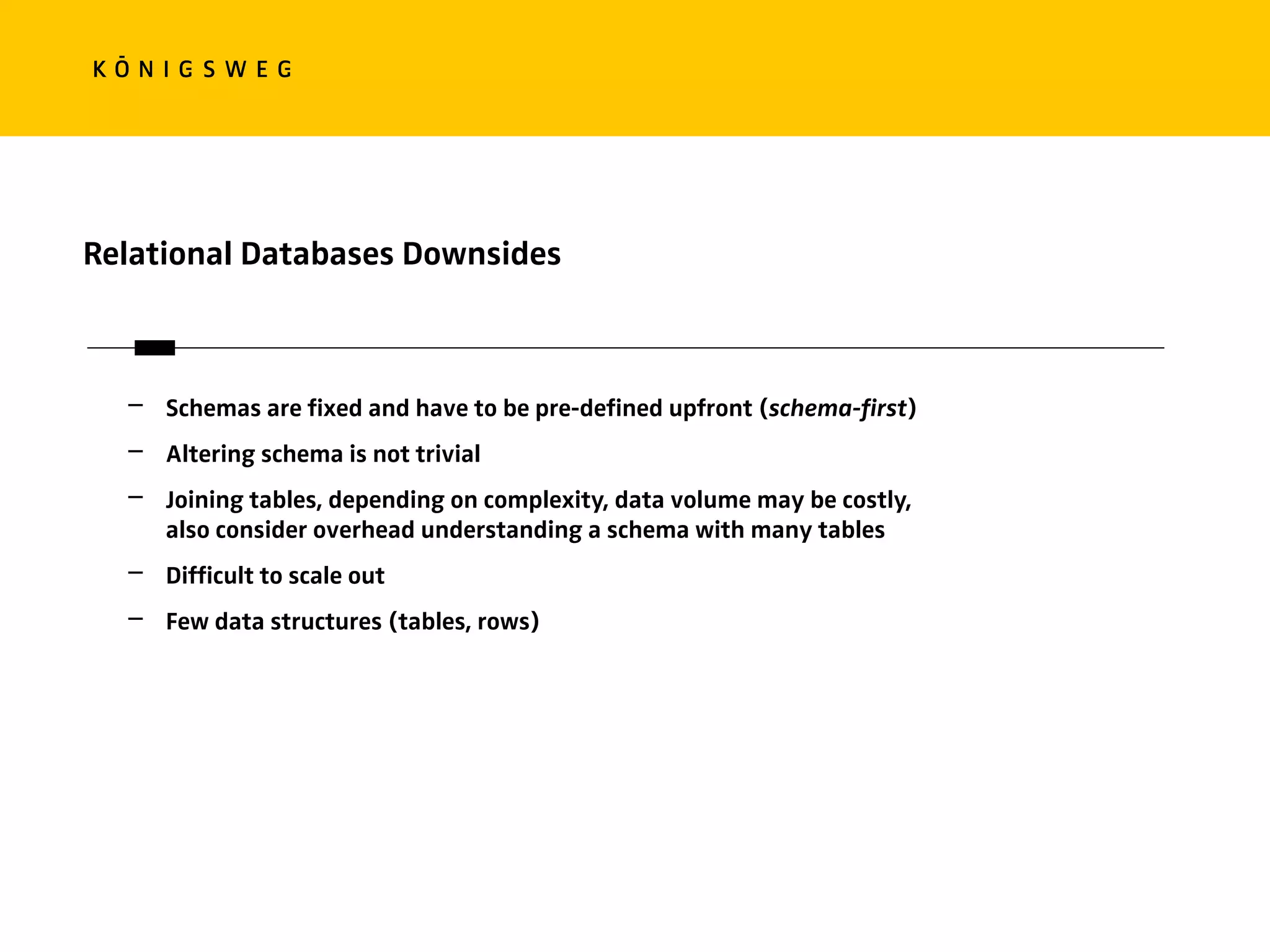

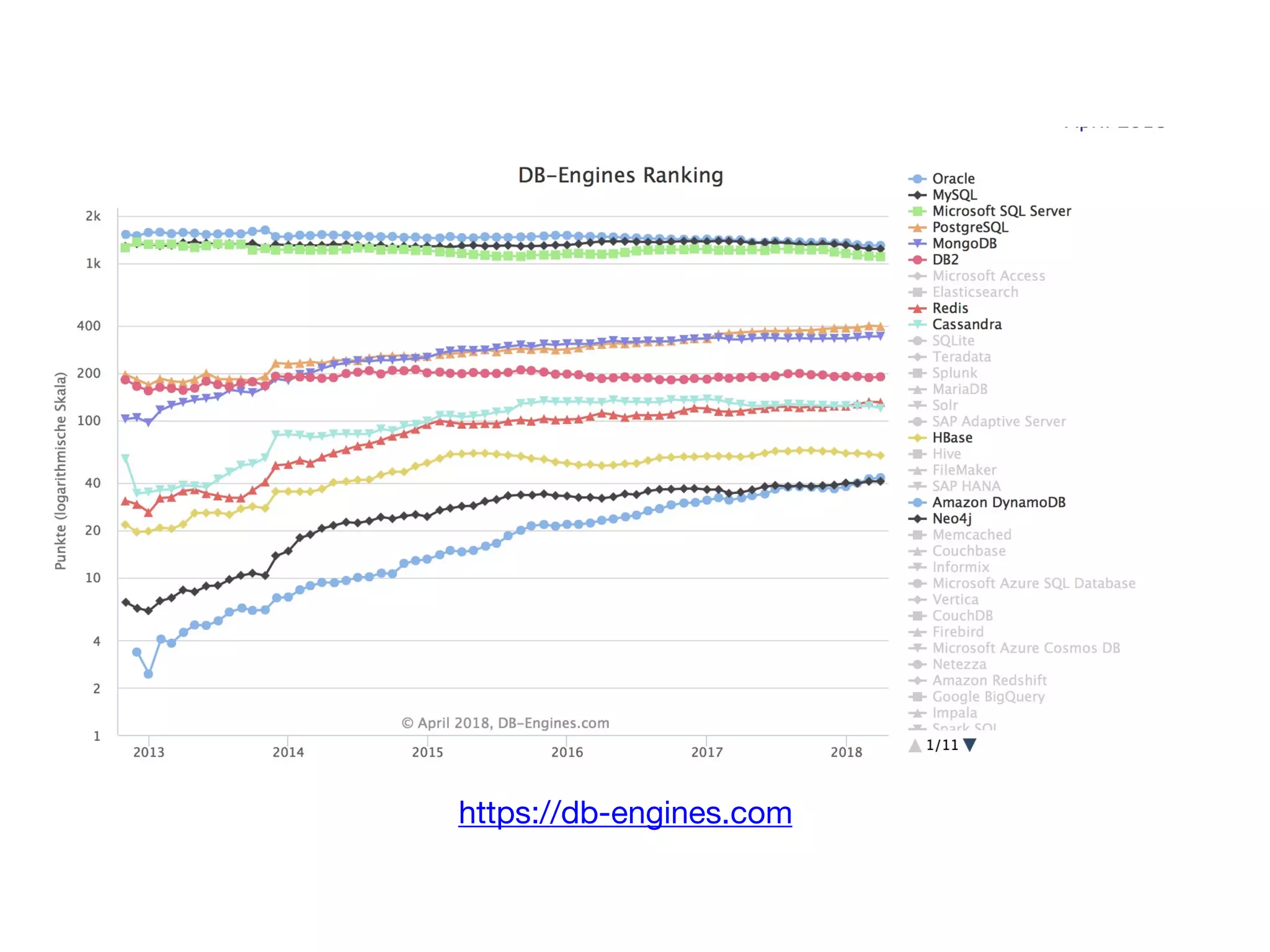

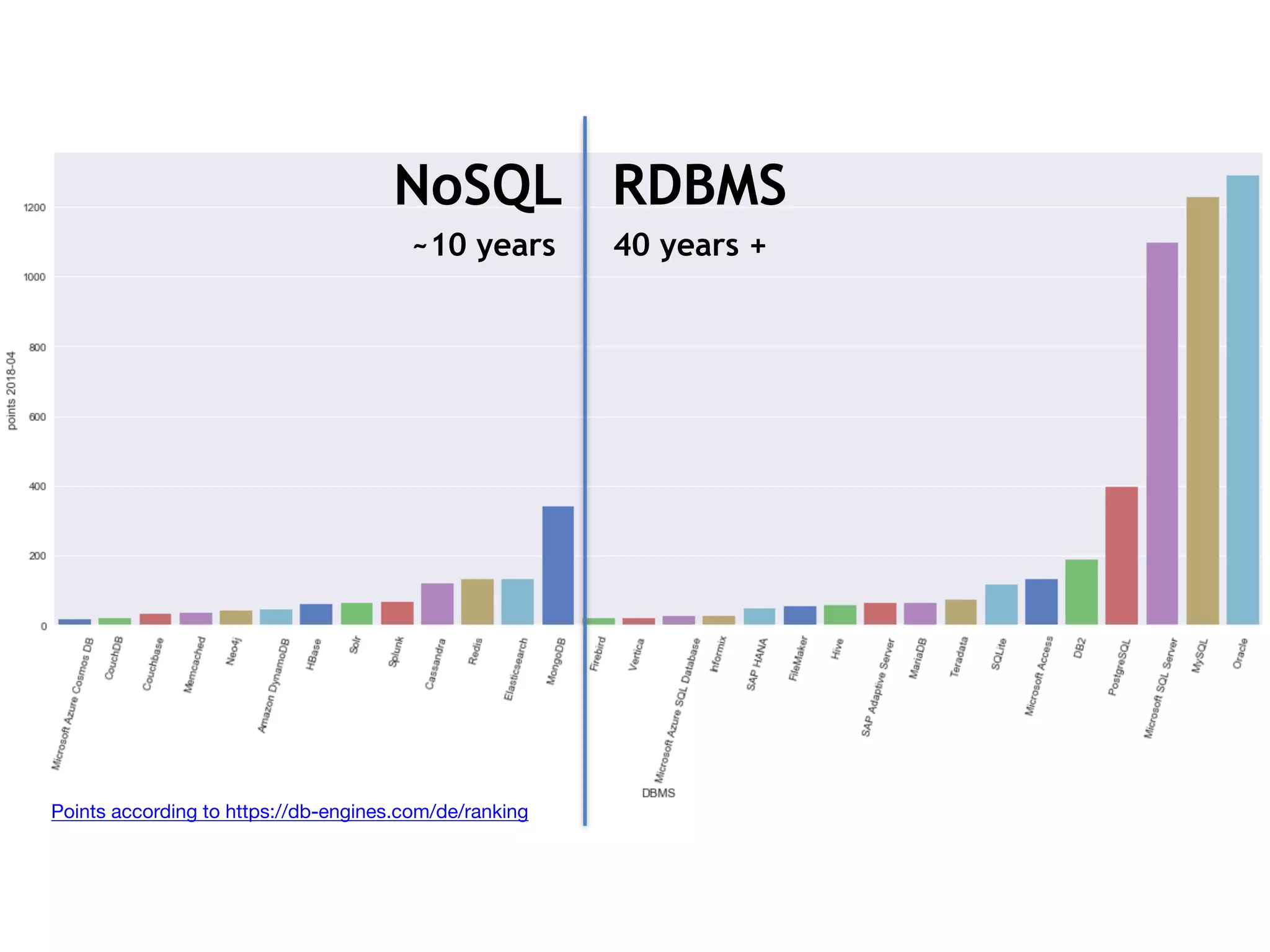

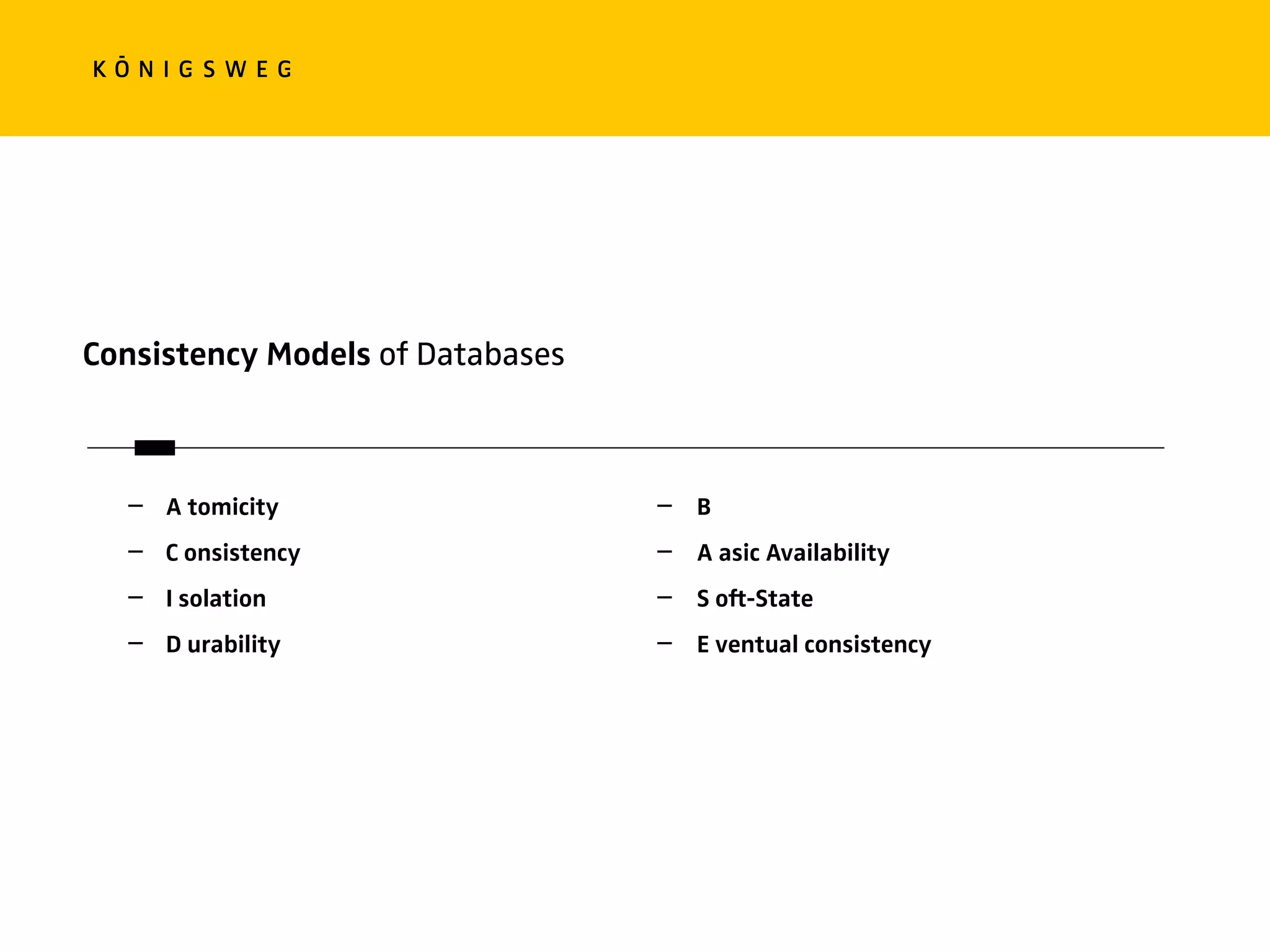

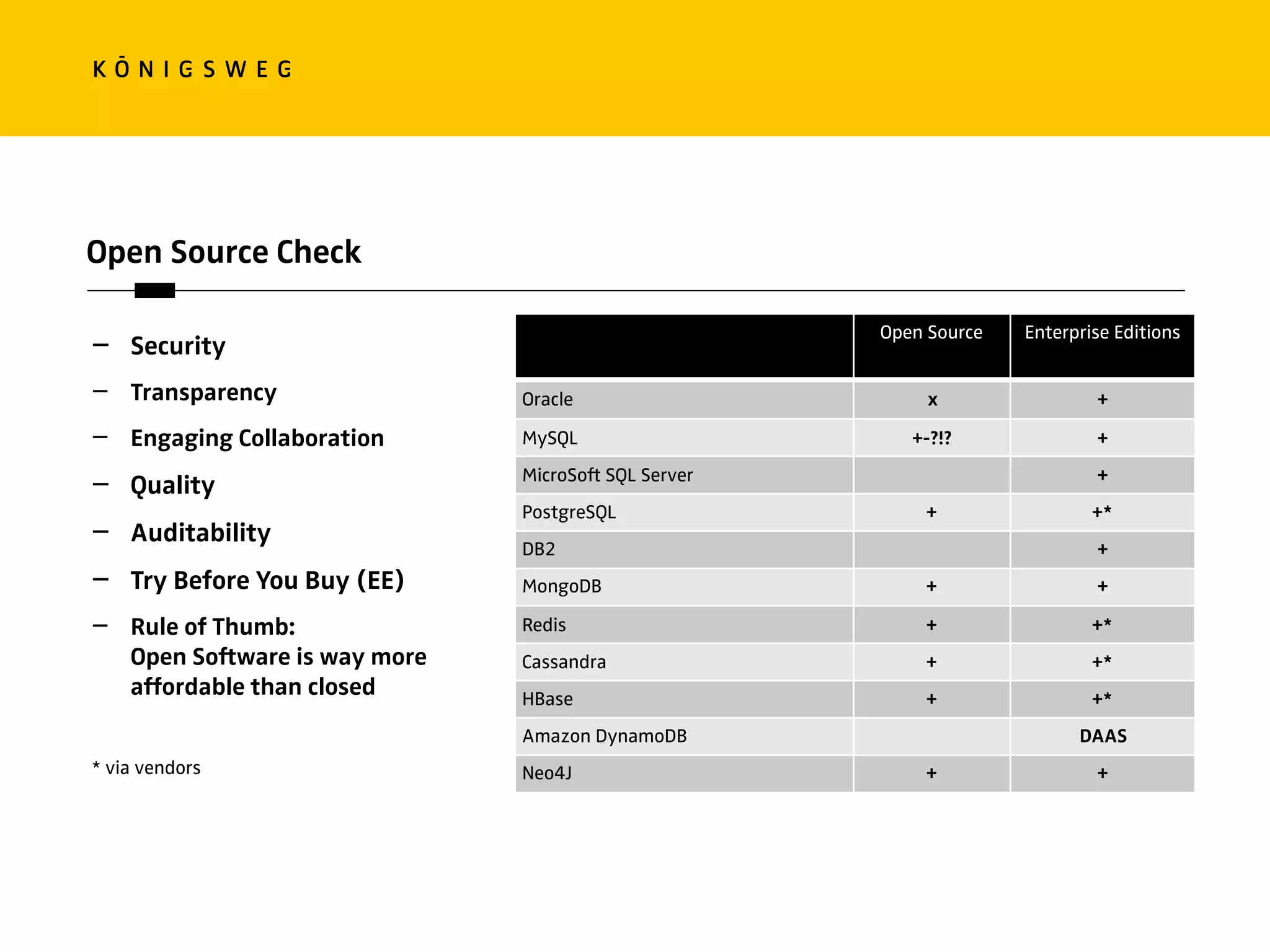

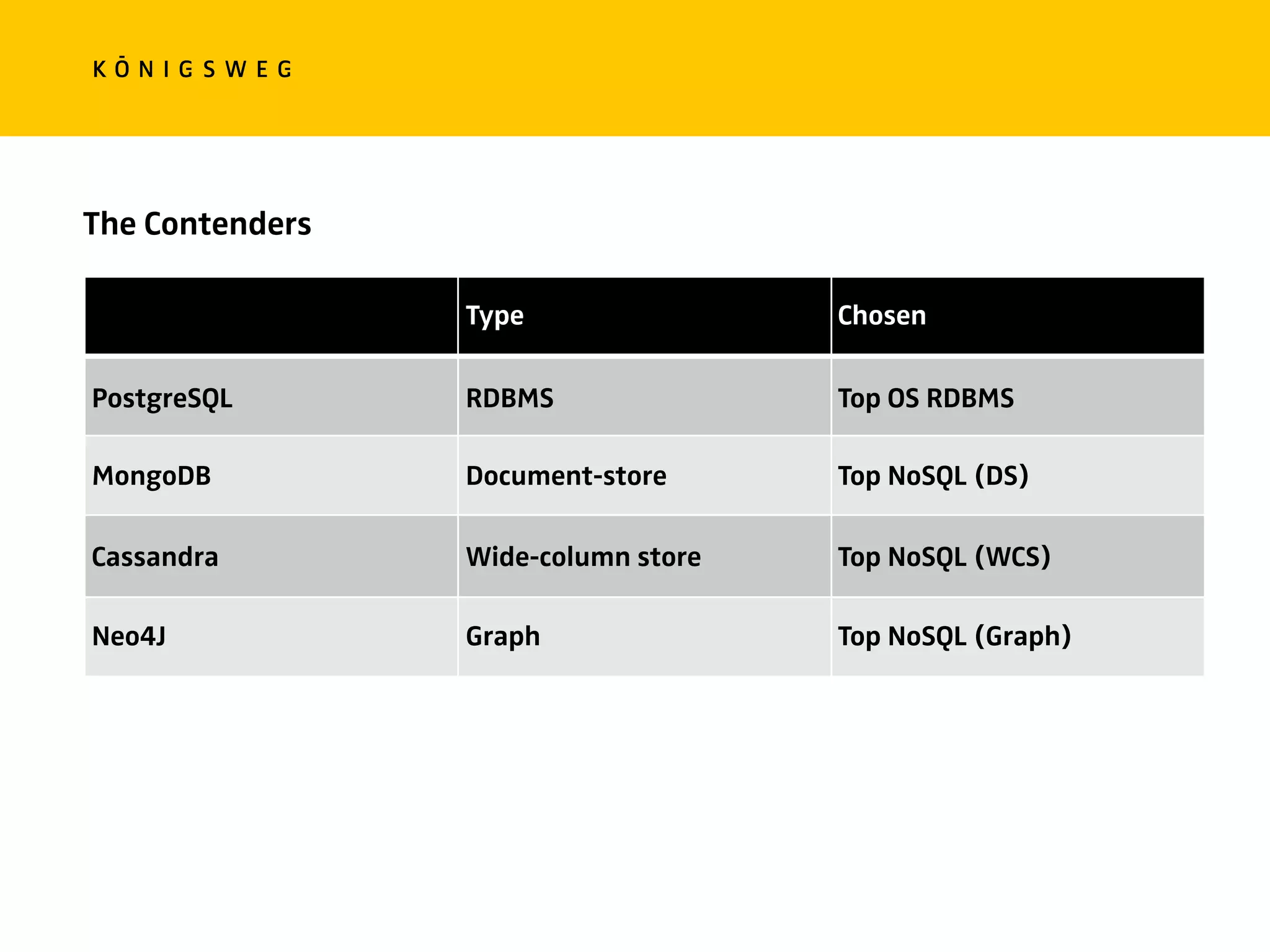

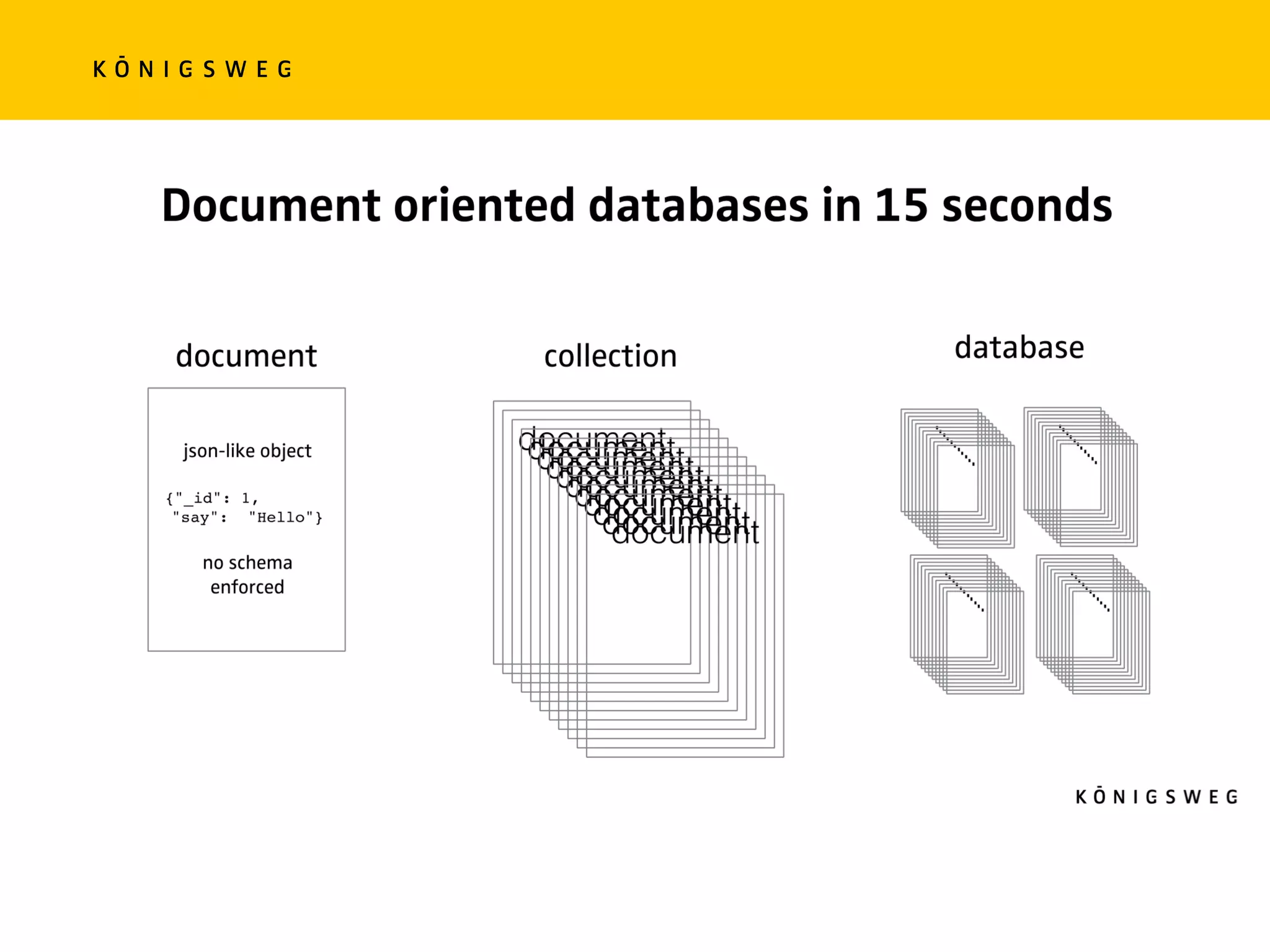

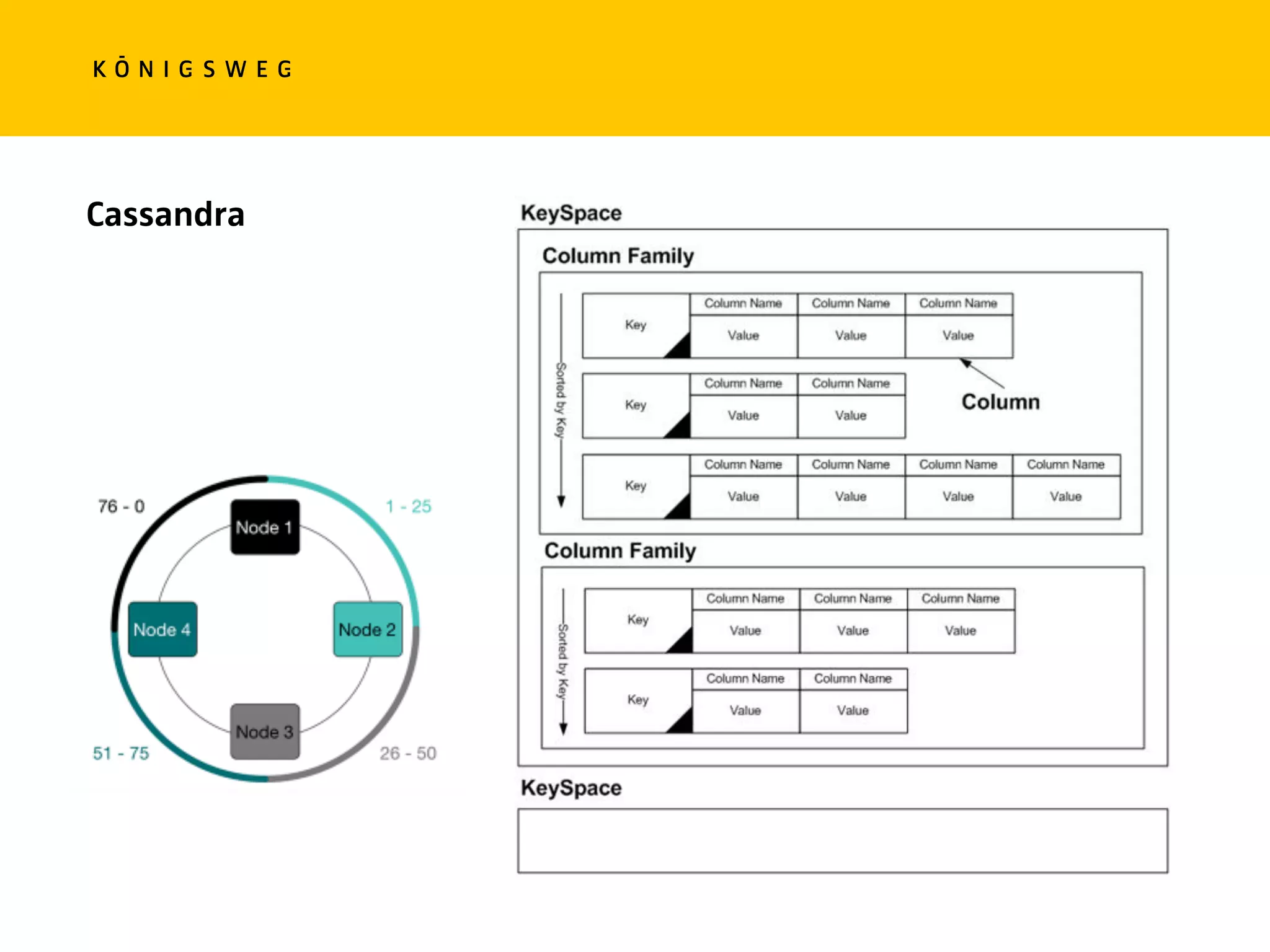

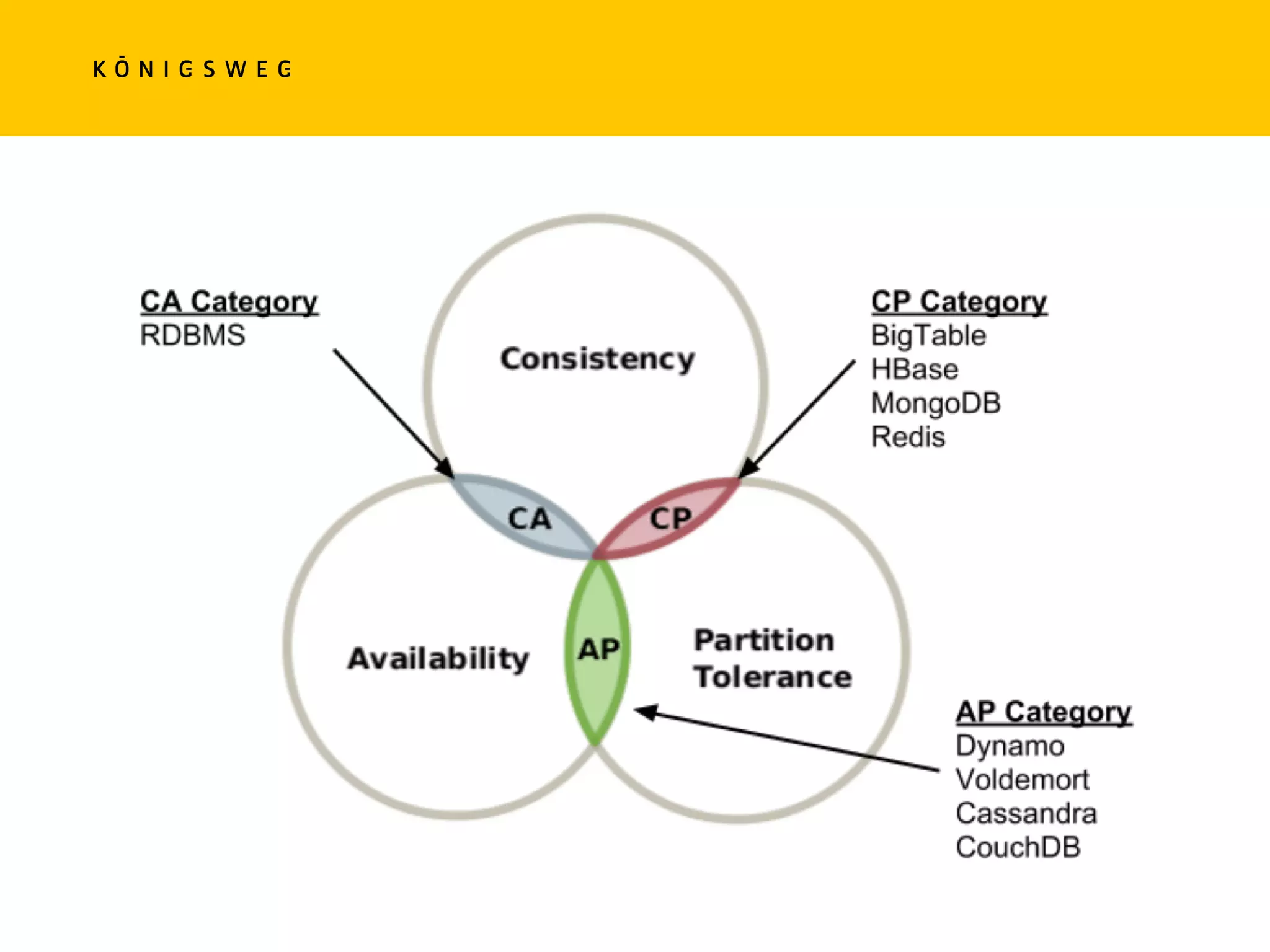

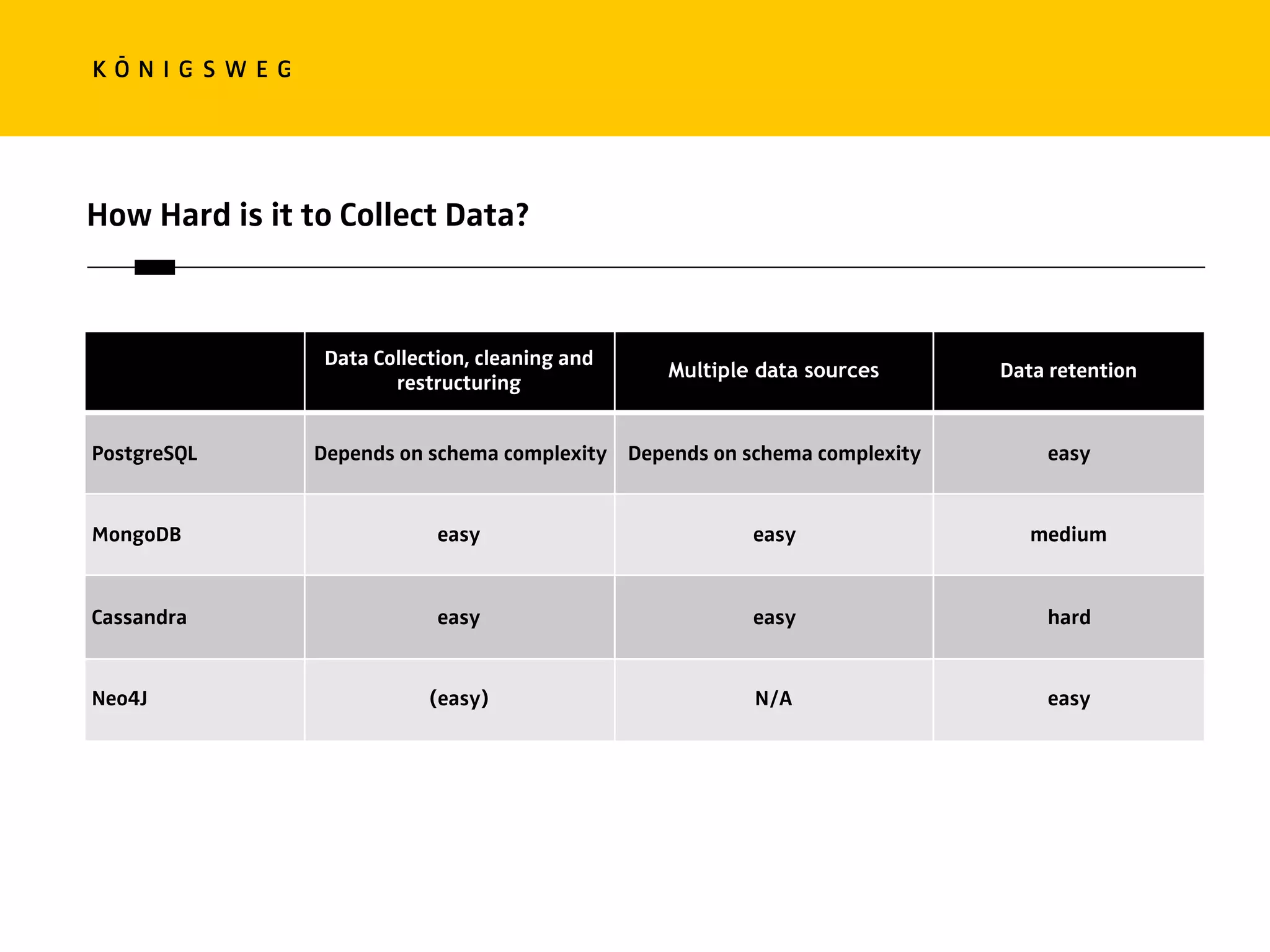

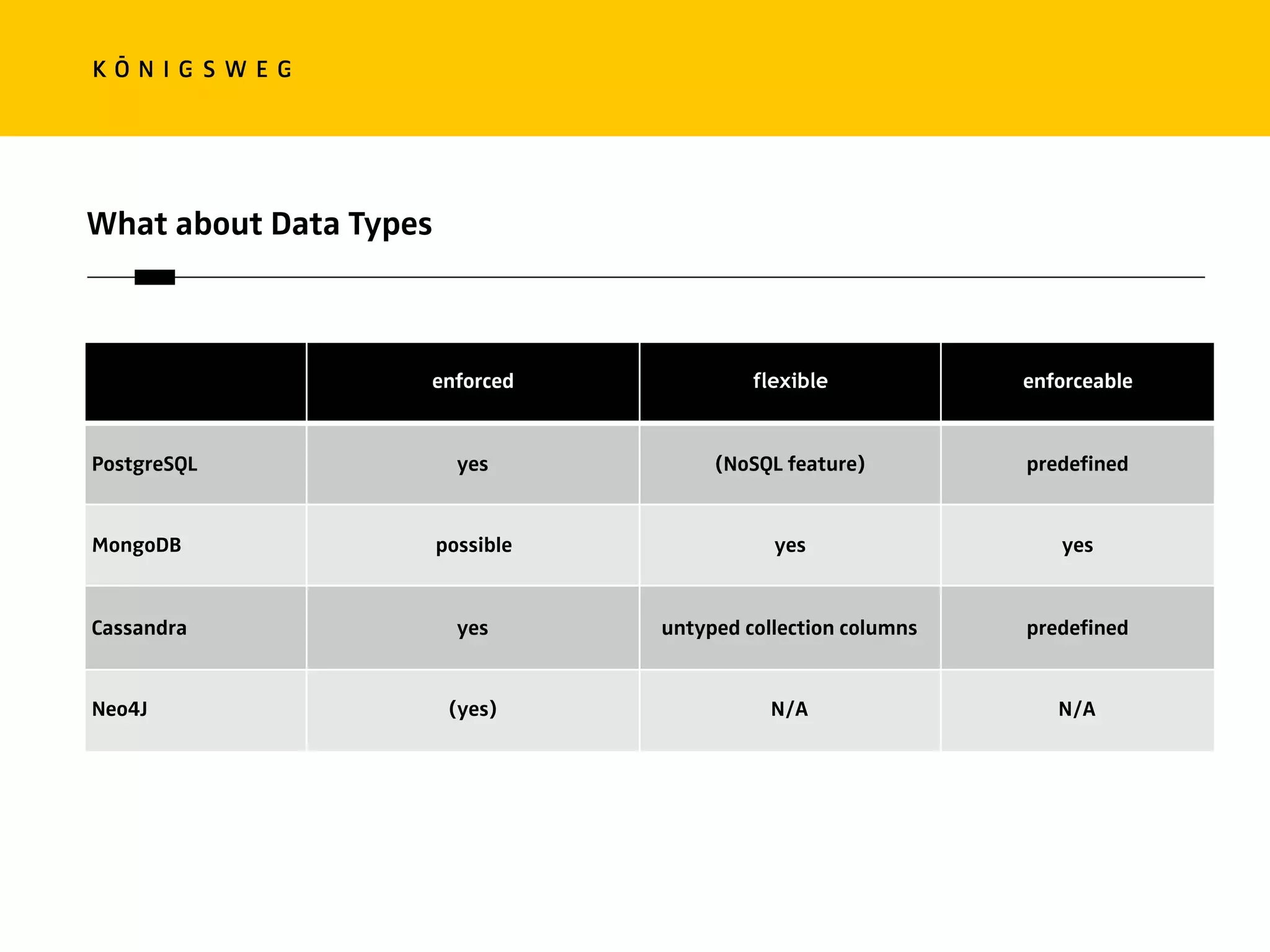

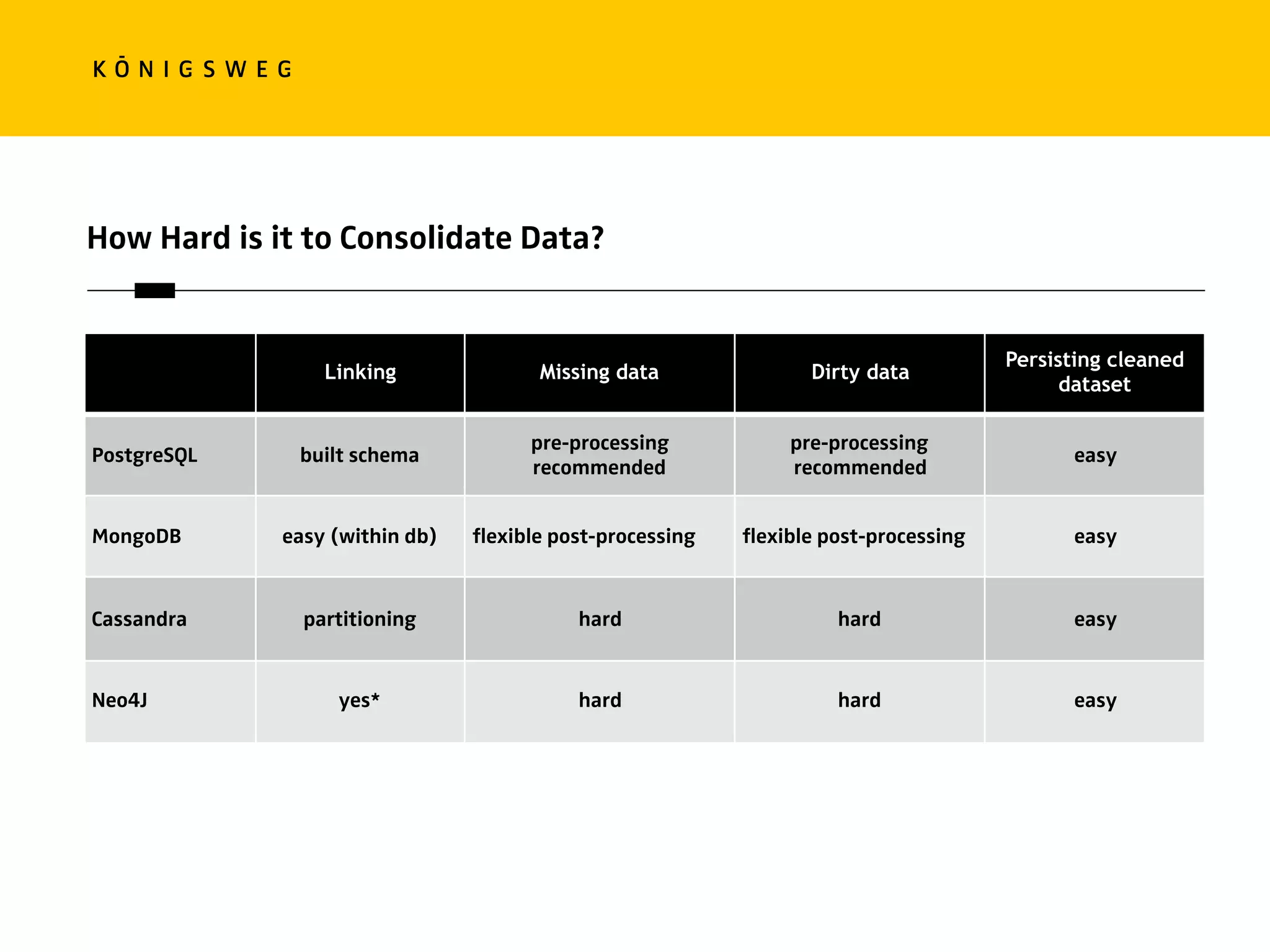

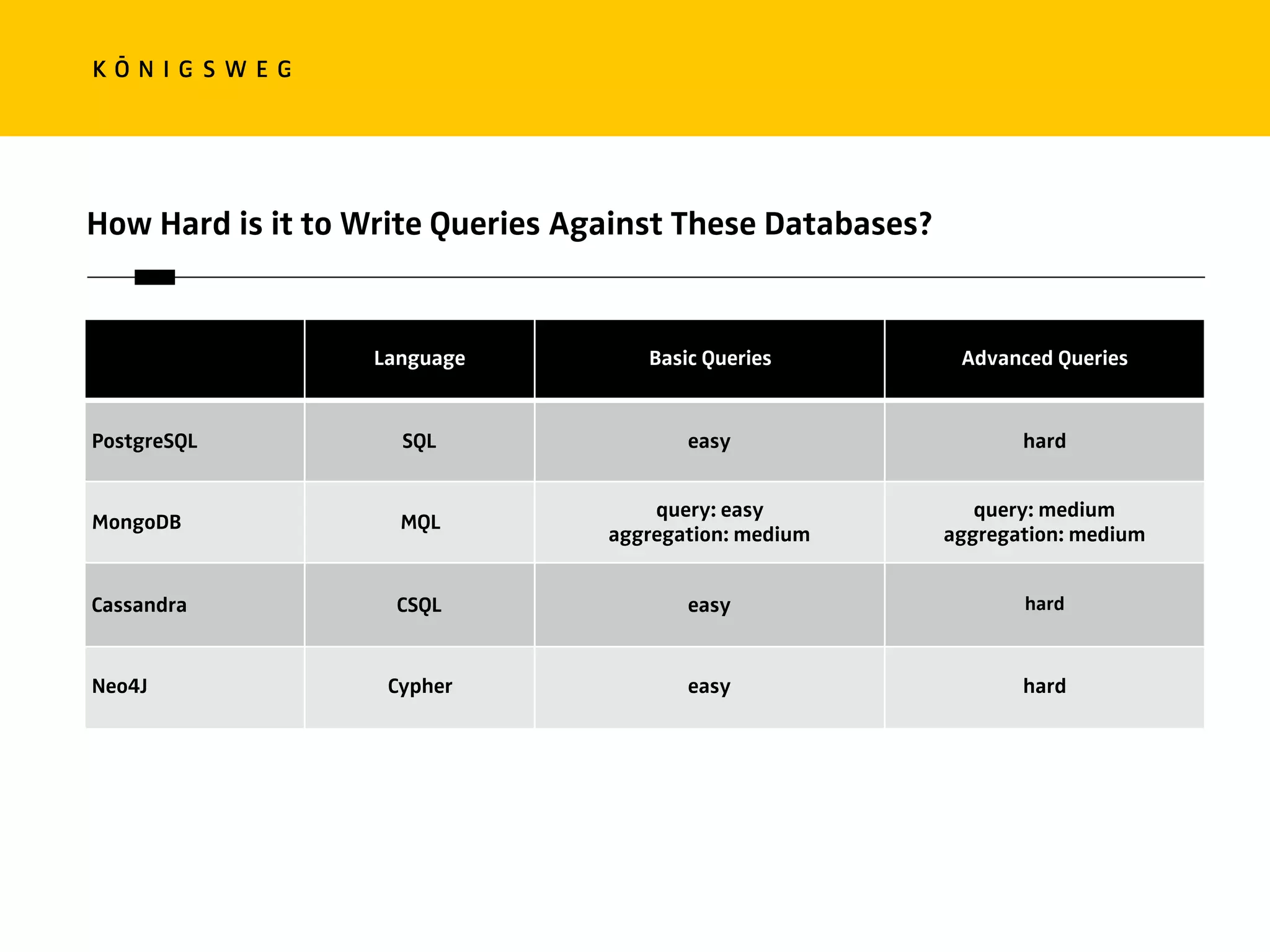

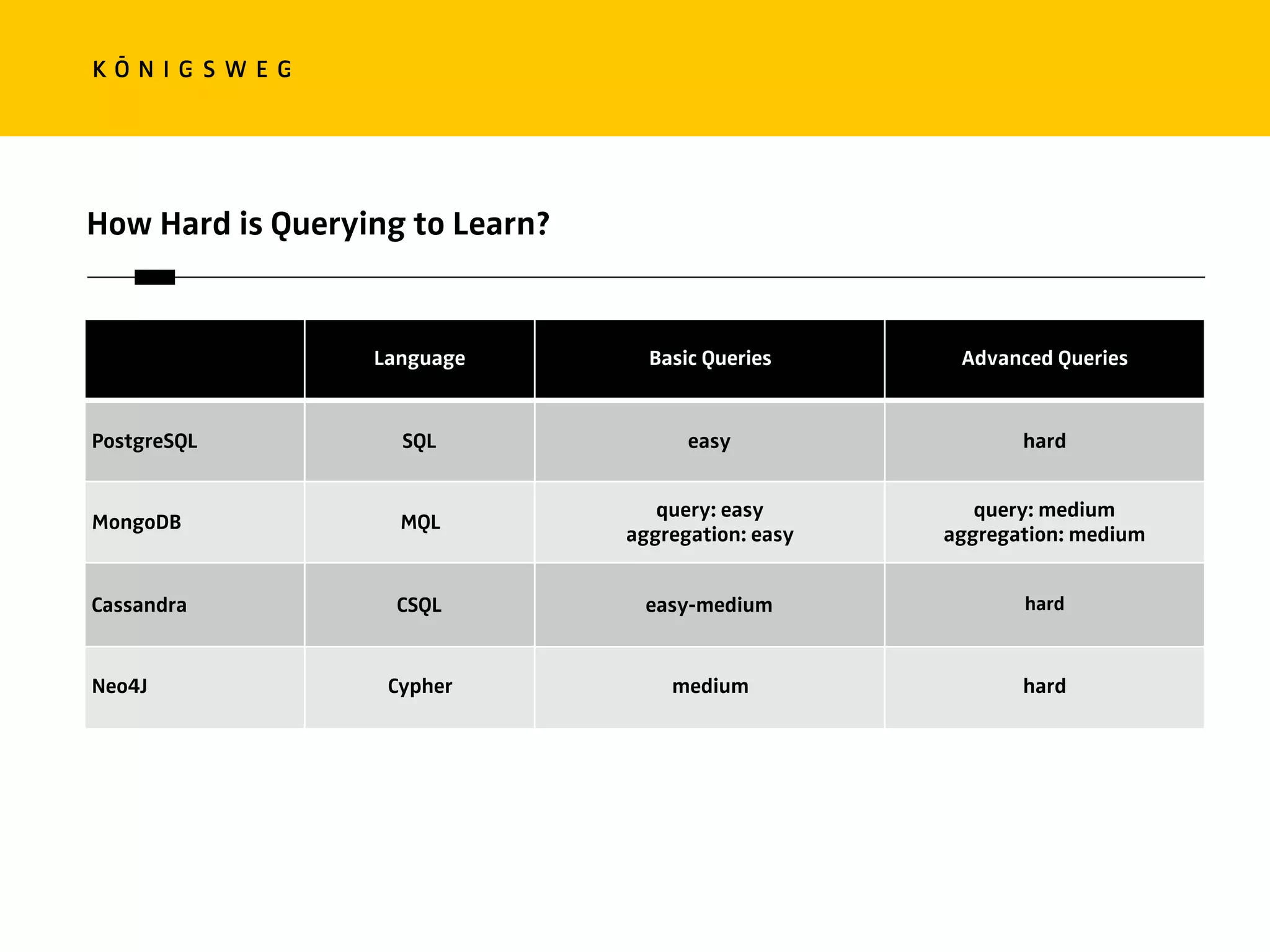

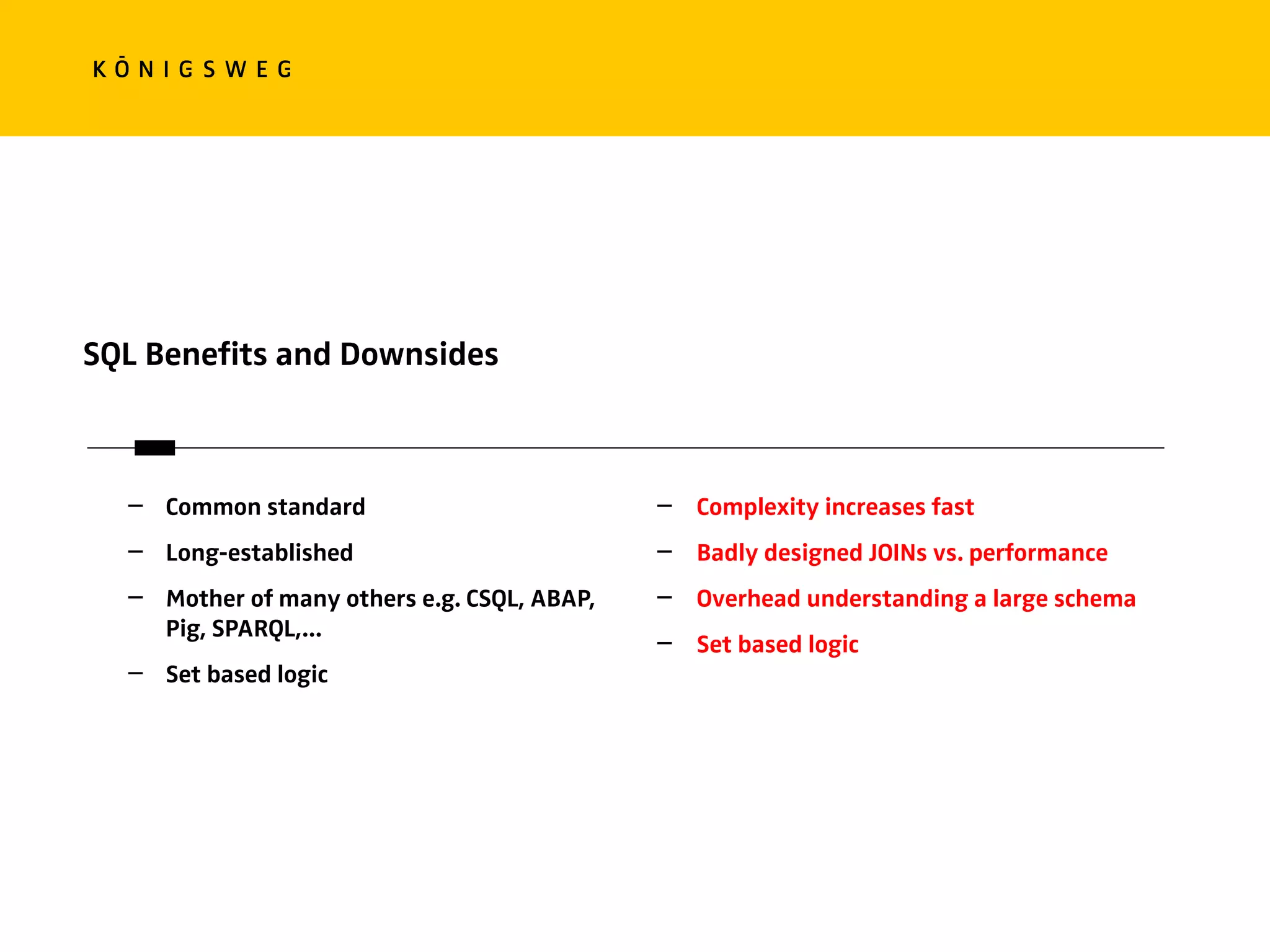

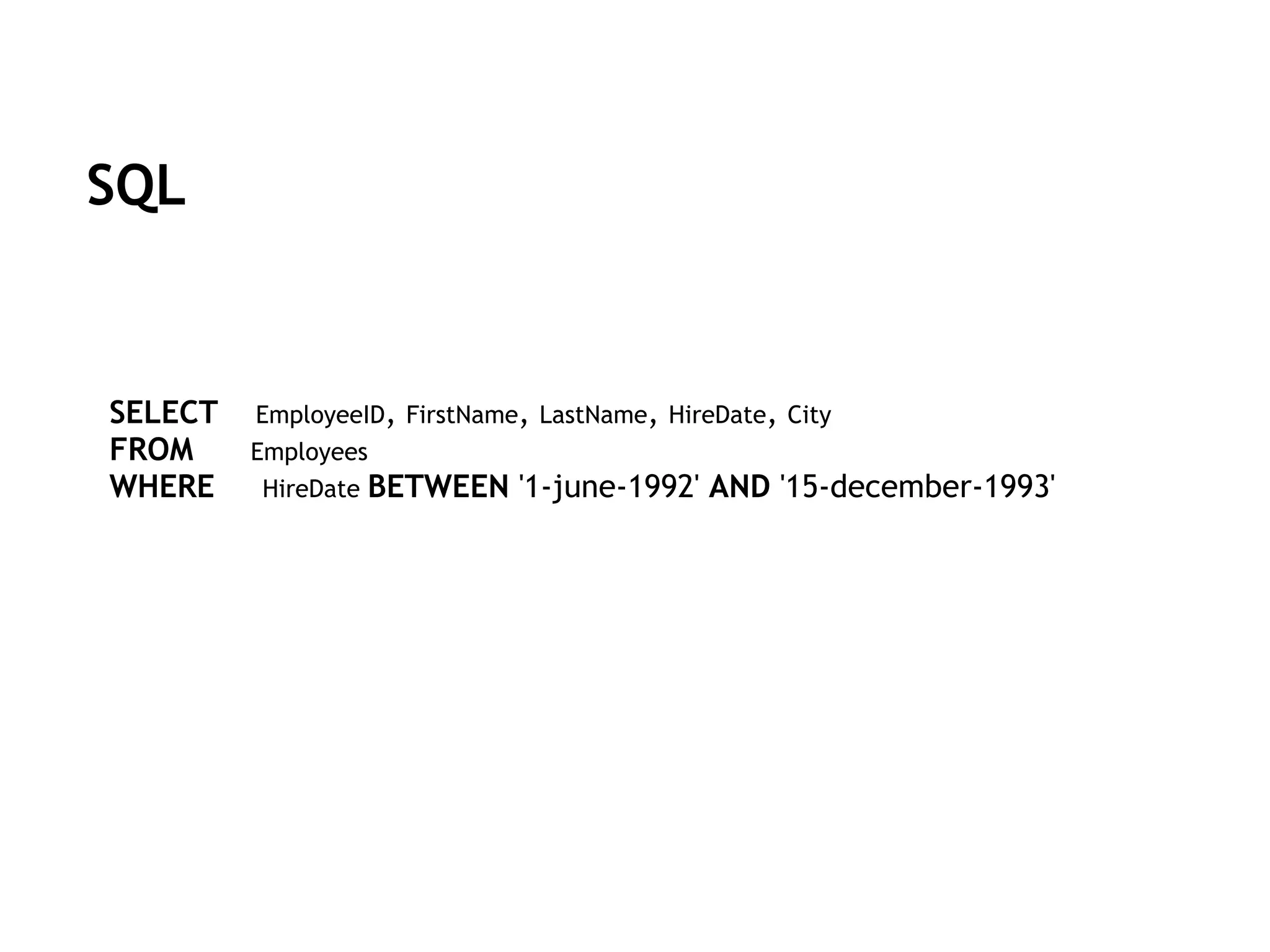

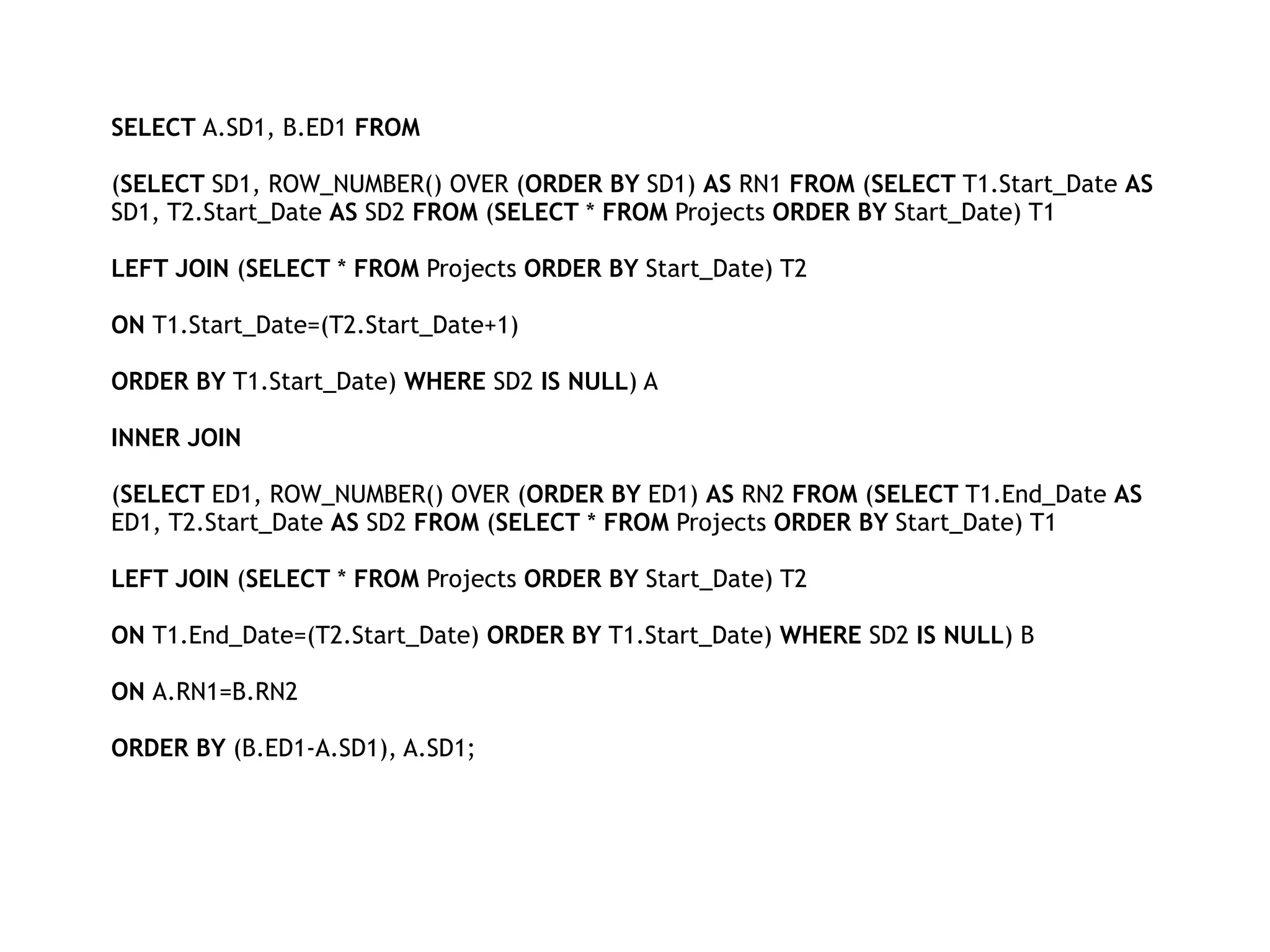

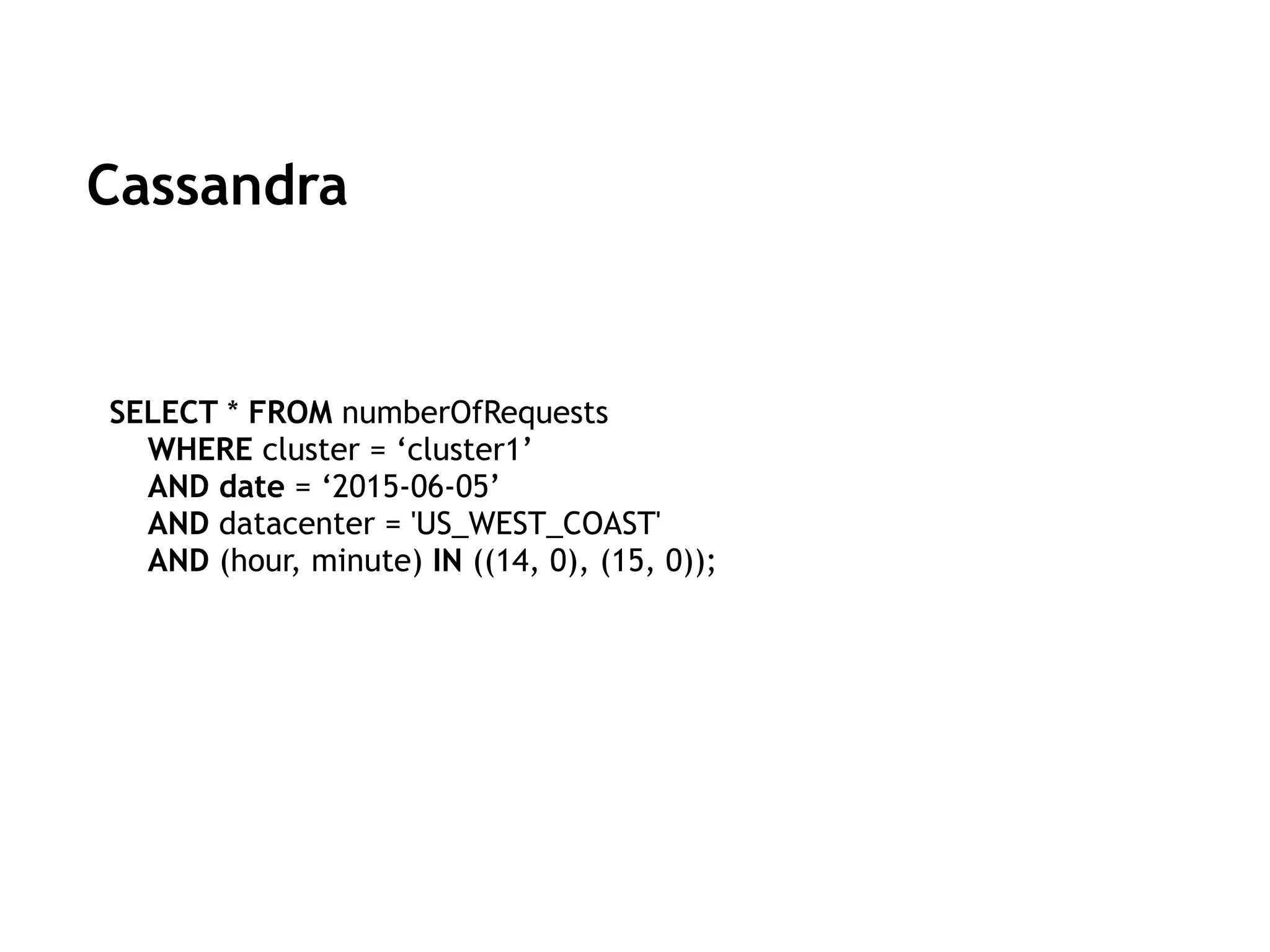

The document discusses the role of databases in data science, covering types of databases (RDBMS, NoSQL), their benefits, and challenges. It emphasizes the importance of choosing the right database based on project needs and highlights the evolution of database technologies. It concludes with advice for selecting databases for specific use cases and encourages exploration of various database solutions.

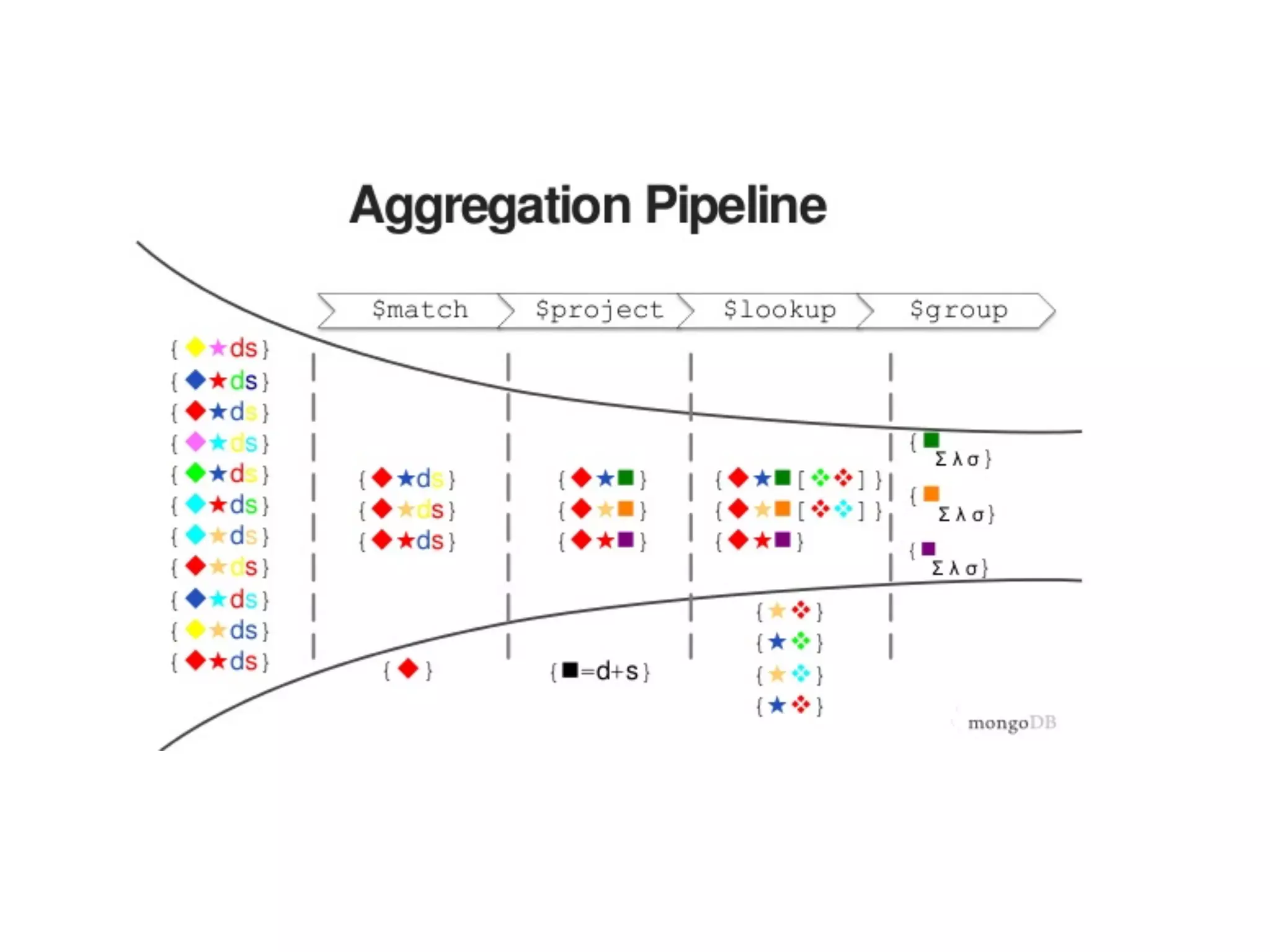

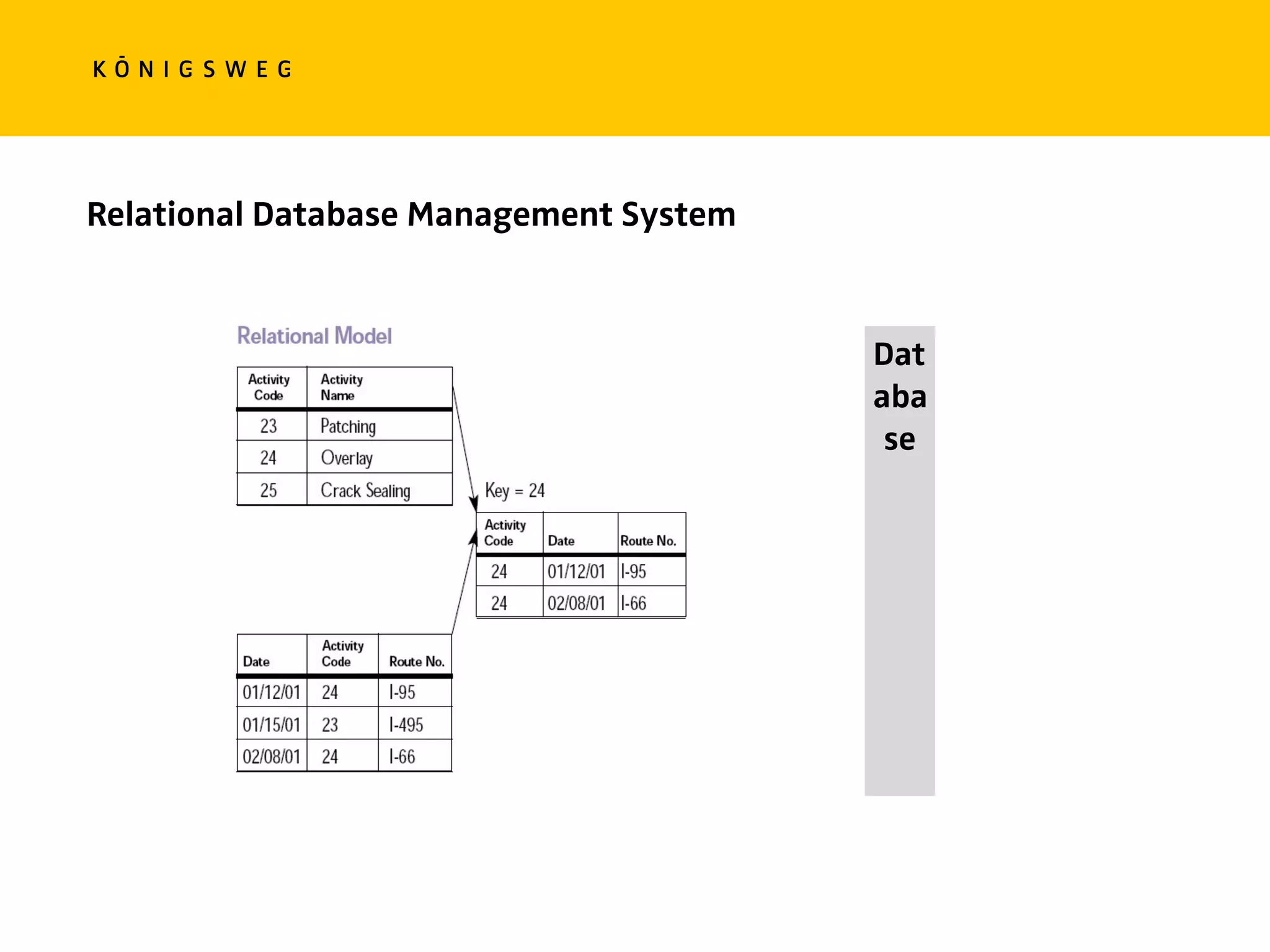

![Relational Databases

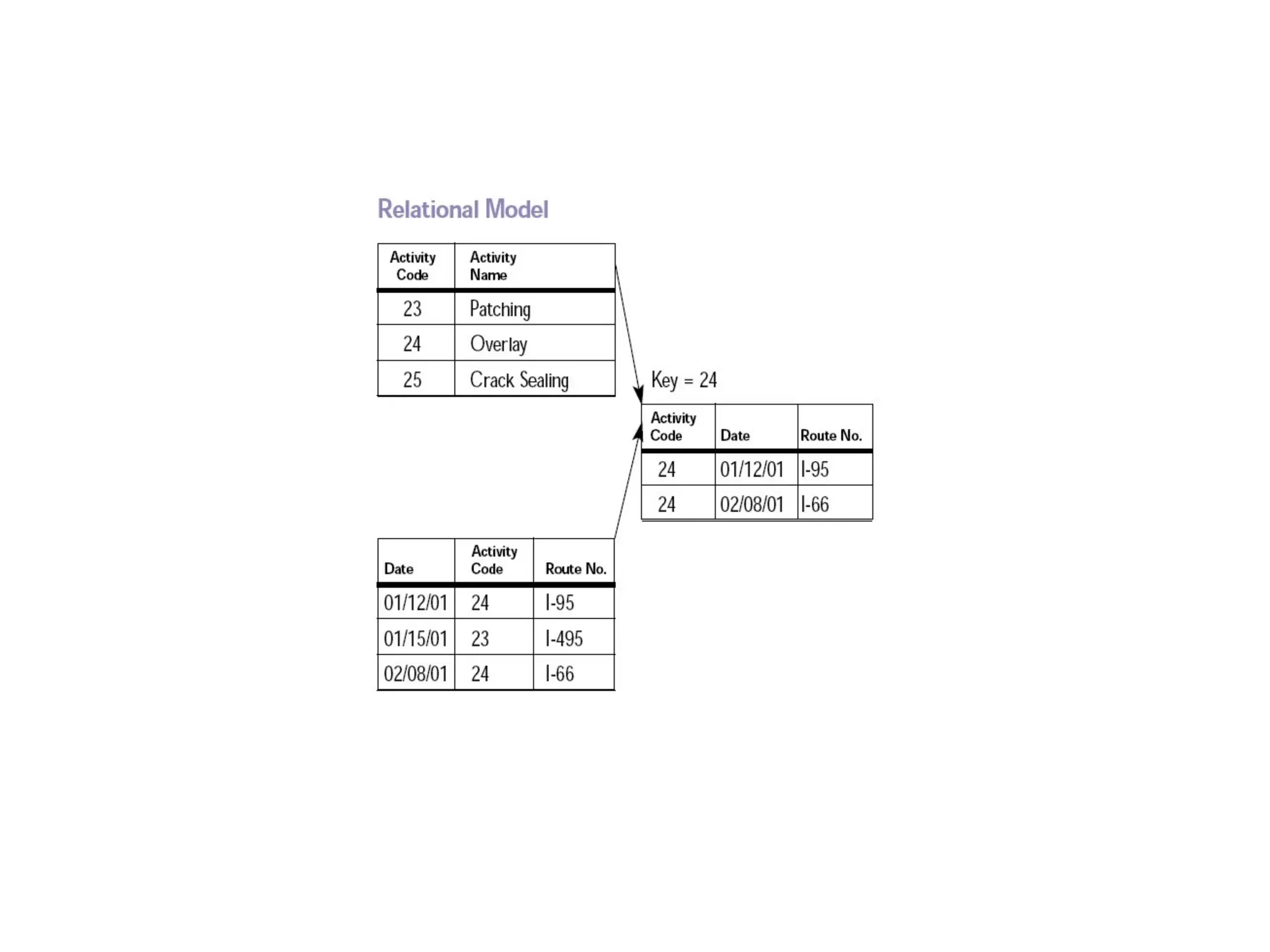

− Records are organised into tables

− Rows of these table are identified by unique keys

− Data spans multiple tables, linked via ids

− Data is ideally normalised

− Data can be denormalized for performance

− Transactions are ACID [Atomic, Consistent , Isolated, Durable]](https://image.slidesharecdn.com/pycon-nove-databases-for-data-science-180421160121/75/Databases-for-Data-Science-16-2048.jpg)
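The relational points above (tables linked via IDs, atomic transactions) can be sketched with Python's built-in `sqlite3` module. This is only an illustration: the `customers` and `orders` tables and their contents are hypothetical and do not come from the slides.

```python
import sqlite3

# Hypothetical schema: two tables linked via an id (not taken from the slides).
conn = sqlite3.connect(":memory:")
conn.execute("PRAGMA foreign_keys = ON")  # SQLite enforces foreign keys only when asked
conn.execute("CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT NOT NULL)")
conn.execute(
    "CREATE TABLE orders ("
    " id INTEGER PRIMARY KEY,"
    " customer_id INTEGER NOT NULL REFERENCES customers(id),"
    " amount REAL NOT NULL)"
)

# Used as a context manager, the connection commits if the block succeeds...
with conn:
    conn.execute("INSERT INTO customers (id, name) VALUES (1, 'Alice')")
    conn.execute("INSERT INTO orders (customer_id, amount) VALUES (1, 20.0)")
    conn.execute("INSERT INTO orders (customer_id, amount) VALUES (1, 5.5)")

# ...and rolls back everything in the block if any statement fails (atomicity).
try:
    with conn:
        conn.execute("INSERT INTO orders (customer_id, amount) VALUES (1, 99.0)")
        # Customer 42 does not exist, so the foreign key check raises IntegrityError.
        conn.execute("INSERT INTO orders (customer_id, amount) VALUES (42, 1.0)")
except sqlite3.IntegrityError:
    pass

# Join the two tables through the shared id and aggregate per customer.
rows = conn.execute(
    "SELECT c.name, COUNT(o.id), SUM(o.amount)"
    " FROM customers c JOIN orders o ON o.customer_id = c.id"
    " GROUP BY c.id"
).fetchall()
print(rows)
```

The 99.0 order never appears in the result because it was part of the failed transaction.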

Neo4j

    MATCH (c:Customer {companyName: "Drachenblut Delikatessen"})
    OPTIONAL MATCH (p:Product)<-[pu:PRODUCT]-(:Order)<-[:PURCHASED]-(c)
    RETURN p.productName, toInt(sum(pu.unitPrice * pu.quantity)) AS volume
    ORDER BY volume DESC;

![Neo4j](https://image.slidesharecdn.com/pycon-nove-databases-for-data-science-180421160121/75/Databases-for-Data-Science-47-2048.jpg)
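To make the intent of the Cypher query easier to follow, here is a rough plain-Python sketch of the same aggregation: sum `unitPrice * quantity` per product for one customer and sort by volume, descending. The `order_lines` data and the `volume_by_product` helper are hypothetical, and the sketch does not use Neo4j at all. Unlike `OPTIONAL MATCH`, it returns an empty list rather than a null row when the customer has no purchases.

```python
from collections import defaultdict

# Hypothetical flattened order lines: (customer, product, unit_price, quantity).
order_lines = [
    ("Drachenblut Delikatessen", "Tofu", 6.0, 10),
    ("Drachenblut Delikatessen", "Chai", 18.0, 5),
    ("Drachenblut Delikatessen", "Tofu", 6.0, 4),
    ("Other Customer", "Tofu", 6.0, 100),
]


def volume_by_product(lines, company_name):
    """Sum unit_price * quantity per product for one customer, largest first."""
    totals = defaultdict(float)
    for customer, product, unit_price, quantity in lines:
        if customer == company_name:
            totals[product] += unit_price * quantity
    # int() truncates the total, similar in spirit to toInt() in the query.
    ranked = [(product, int(total)) for product, total in totals.items()]
    return sorted(ranked, key=lambda item: item[1], reverse=True)


result = volume_by_product(order_lines, "Drachenblut Delikatessen")
print(result)
```

In the graph version, the filtering and joining happen through pattern matching on relationships instead of an explicit loop.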

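MongoDB Aggregation Pipeline

    pipeline = [
        # Keep only the documents for one artist
        {"$match": {"artistName": "Suppenstar"}},
        {"$sort": {"info.releaseDate": 1}},
        # Group by release year ($year expects a date value) and count documents
        {"$group": {
            "_id": {"$year": "$info.releaseDateEpoch"},
            "count": {"$sum": 1}}},
        # _id holds the year itself, so expose it under the name "year"
        {"$project": {"year": "$_id", "count": 1}},
    ]

![MongoDB Aggregation Pipeline](https://image.slidesharecdn.com/pycon-nove-databases-for-data-science-180421160121/75/Databases-for-Data-Science-48-2048.jpg)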
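As a rough illustration of what the match, group, and project stages compute, the following plain-Python sketch counts releases per year for one artist. The `tracks` documents and the `releases_per_year` helper are hypothetical, the release date is assumed to be stored as a `datetime`, and no MongoDB server is involved. The result is sorted by year for readability, which the pipeline itself does not guarantee after grouping.

```python
from collections import Counter
from datetime import datetime

# Hypothetical documents shaped like the ones the pipeline appears to expect.
tracks = [
    {"artistName": "Suppenstar", "info": {"releaseDateEpoch": datetime(2015, 3, 1)}},
    {"artistName": "Suppenstar", "info": {"releaseDateEpoch": datetime(2015, 9, 12)}},
    {"artistName": "Suppenstar", "info": {"releaseDateEpoch": datetime(2017, 1, 20)}},
    {"artistName": "Someone Else", "info": {"releaseDateEpoch": datetime(2015, 5, 5)}},
]


def releases_per_year(docs, artist):
    # $match: keep only the requested artist
    matched = [doc for doc in docs if doc["artistName"] == artist]
    # $group with {"$sum": 1}: count documents per release year
    counts = Counter(doc["info"]["releaseDateEpoch"].year for doc in matched)
    # $project: emit one small document per year
    return [{"year": year, "count": count} for year, count in sorted(counts.items())]


result = releases_per_year(tracks, "Suppenstar")
print(result)
```

Running the aggregation inside the database avoids transferring every matching document to the client, which is the main reason to prefer a pipeline over client-side code like this.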