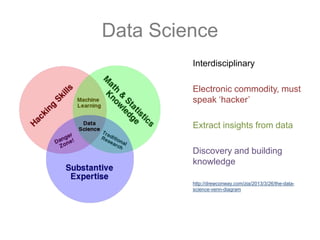

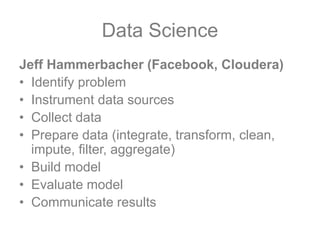

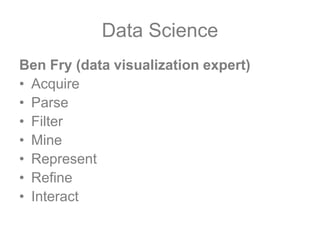

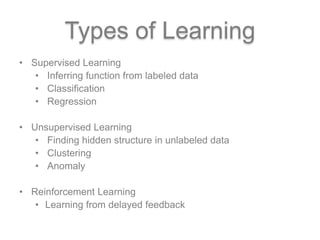

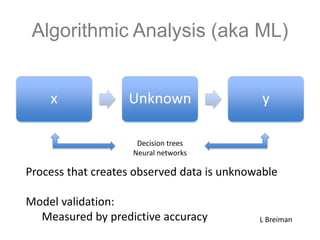

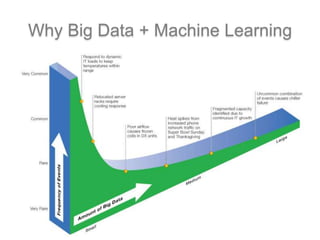

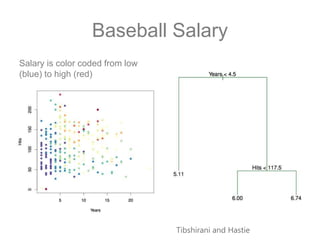

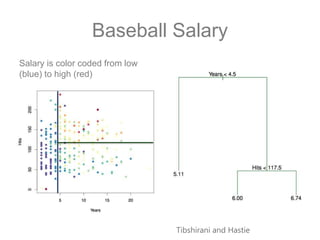

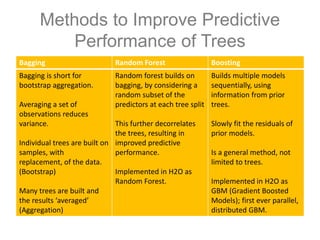

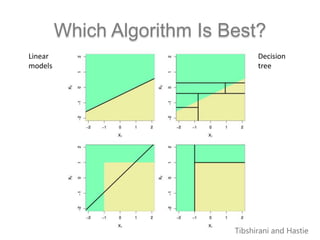

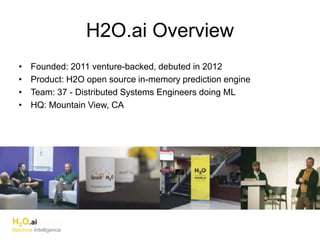

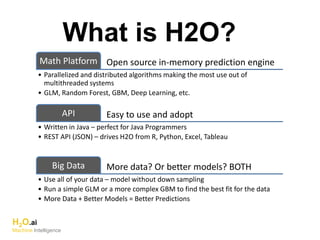

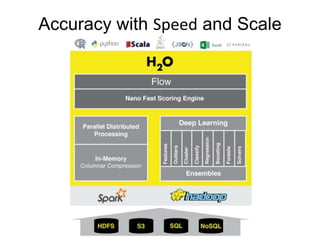

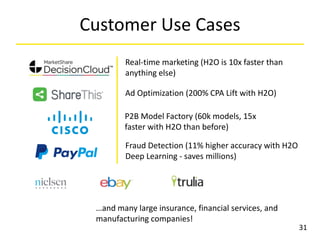

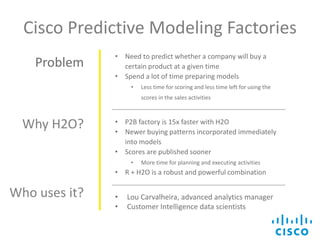

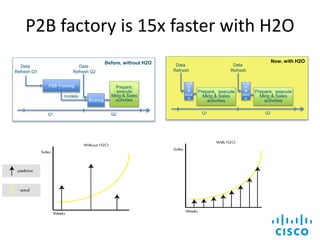

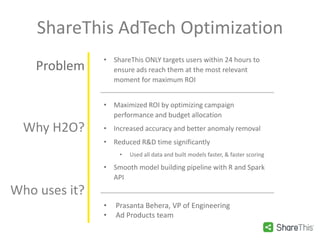

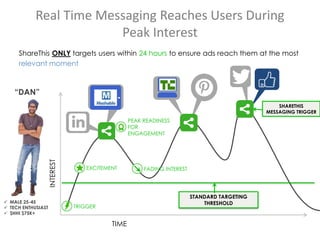

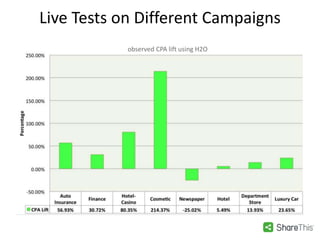

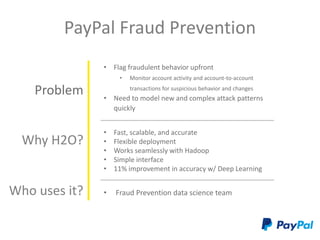

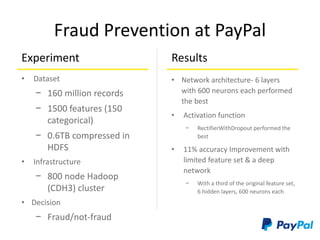

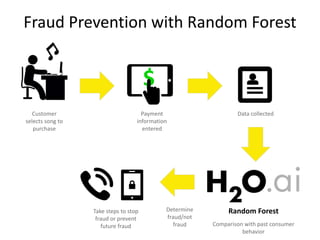

The document discusses scalable machine learning with the H2O machine learning engine, focusing on decision trees and the tree-based ensemble models built from them, including random forests and gradient boosting. It presents data science as a way to extract insights from data and improve predictions with these models. It also showcases real-world use cases of H2O in ad optimization, fraud detection, and predictive modeling for companies.
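
To make the idea of gradient boosting over decision trees concrete, here is a minimal sketch in plain Python. It is not H2O's implementation or API: it assumes a single numeric feature, squared-error loss, and depth-one trees (stumps), and the names `fit_stump`, `fit_gbm`, and `predict_gbm` are hypothetical helpers chosen for illustration only.

```python
from typing import List, Tuple

# A stump is (threshold, value_if_x_le_threshold, value_if_x_gt_threshold).
Stump = Tuple[float, float, float]
# A model is (base_prediction, learning_rate, list_of_stumps).
Model = Tuple[float, float, List[Stump]]


def fit_stump(xs: List[float], targets: List[float]) -> Stump:
    """Pick the split on x that minimizes squared error on the targets."""
    best = None
    best_err = float("inf")
    for t in sorted(set(xs)):
        left = [r for x, r in zip(xs, targets) if x <= t]
        right = [r for x, r in zip(xs, targets) if x > t]
        if not left or not right:
            continue  # a split must leave points on both sides
        left_mean = sum(left) / len(left)
        right_mean = sum(right) / len(right)
        err = sum((r - left_mean) ** 2 for r in left)
        err += sum((r - right_mean) ** 2 for r in right)
        if err < best_err:
            best_err = err
            best = (t, left_mean, right_mean)
    if best is None:
        # No valid split (all x equal): predict the mean everywhere.
        mean = sum(targets) / len(targets)
        return (float("inf"), mean, mean)
    return best


def predict_stump(stump: Stump, x: float) -> float:
    threshold, left_value, right_value = stump
    return left_value if x <= threshold else right_value


def fit_gbm(xs: List[float], ys: List[float],
            n_trees: int = 50, learning_rate: float = 0.1) -> Model:
    """Boosting for squared loss: each stump is fit to the current residuals."""
    base = sum(ys) / len(ys)  # start from the mean of the labels
    preds = [base] * len(xs)
    stumps: List[Stump] = []
    for _ in range(n_trees):
        # For squared loss, the negative gradient is the residual y - prediction.
        residuals = [y - p for y, p in zip(ys, preds)]
        stump = fit_stump(xs, residuals)
        stumps.append(stump)
        preds = [p + learning_rate * predict_stump(stump, x)
                 for p, x in zip(preds, xs)]
    return (base, learning_rate, stumps)


def predict_gbm(model: Model, x: float) -> float:
    base, learning_rate, stumps = model
    return base + learning_rate * sum(predict_stump(s, x) for s in stumps)


if __name__ == "__main__":
    # Toy data with a step between x = 3 and x = 4.
    xs = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0]
    ys = [1.0, 1.0, 1.0, 5.0, 5.0, 5.0]
    model = fit_gbm(xs, ys)
    print(predict_gbm(model, 2.0), predict_gbm(model, 5.0))
```

Each round fits a small tree to what the current ensemble still gets wrong and adds a damped copy of it to the prediction. A random forest differs in that its trees are trained independently on resampled data and then averaged, rather than fit sequentially to residuals.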