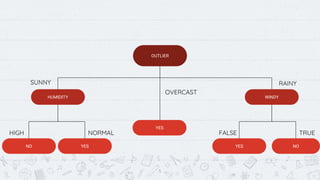

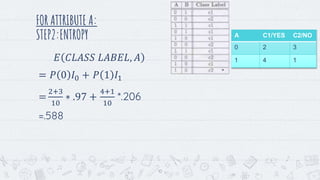

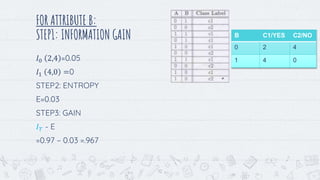

The document is a presentation by Iffat Firozy explaining the concept of decision trees, including their structure and generation from datasets. It details the steps for calculating information gain and entropy, illustrating with examples for attributes a and b. The presentation concludes by inviting questions and providing contact information.
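
The entropy and information gain calculation mentioned above can be sketched as follows. This is a minimal illustration, not the presentation's own worked example: the four-row dataset, the values of attributes `a` and `b`, and the function names `entropy` and `information_gain` are all hypothetical, chosen only to show how two attributes might be compared when picking a split.

```python
from collections import Counter
from math import log2


def entropy(labels):
    """Shannon entropy, in bits, of a list of class labels."""
    total = len(labels)
    return -sum((n / total) * log2(n / total) for n in Counter(labels).values())


def information_gain(rows, attribute, target):
    """Entropy of the target minus the weighted entropy after splitting on attribute."""
    base = entropy([row[target] for row in rows])
    # Group the target labels by the value each row has for the attribute.
    groups = {}
    for row in rows:
        groups.setdefault(row[attribute], []).append(row[target])
    # Weight each group's entropy by the fraction of rows it contains.
    remainder = sum(len(g) / len(rows) * entropy(g) for g in groups.values())
    return base - remainder


# Hypothetical toy dataset (assumption: not taken from the slides).
rows = [
    {"a": "T", "b": "T", "label": "+"},
    {"a": "T", "b": "F", "label": "+"},
    {"a": "F", "b": "T", "label": "-"},
    {"a": "F", "b": "F", "label": "-"},
]

gain_a = information_gain(rows, "a", "label")
gain_b = information_gain(rows, "b", "label")
print(gain_a)  # 1.0: splitting on a separates the classes completely
print(gain_b)  # 0.0: splitting on b leaves both groups evenly mixed
```

In this toy data a decision tree builder would place attribute `a` at the root, since it has the higher information gain.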