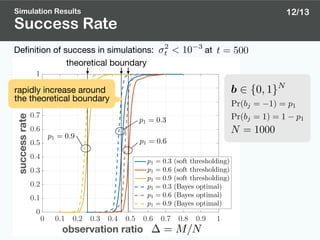

The document proposes a Discreteness-Aware Approximate Message Passing (DAMP) algorithm for reconstructing discrete-valued vectors from underdetermined linear measurements. DAMP extends existing AMP algorithms to handle discrete variables by incorporating probability distributions of the elements. The algorithm is analyzed using state evolution to derive conditions for perfect reconstruction. A Bayes optimal version of DAMP is also developed by minimizing mean squared error. Simulation results demonstrate improved reconstruction performance compared to conventional methods.

![Purpose

reconstruction of a discrete-valued vector

from its underdetermined linear measurements

Discrete-Valued Vector Reconstruction

Introduction 1/13

y = Ab 2 RM

Application

✦ overloaded MIMO signal detection [1]

(multiple-input multiple-output)

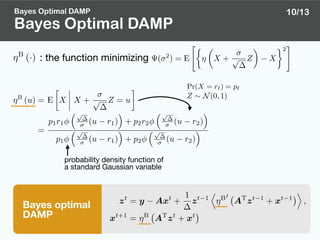

✦ faster-than-Nyquist signaling [2]

A by NM

reconstruct

ˆb

(M < N)

✦ multiuser detection [1]

✦ overloaded MIMO signal detection [2]

(multiple-input multiple-output)

✦ faster-than-Nyquist signaling [3]

[1] H. Sasahara, K. Hayashi, and M. Nagahara, "Multiuser detection based on MAP estimation with sum-of-absolute-values relaxation," IEEE Trans. Signal Process., vol. 65, no. 21, pp. 5621-5634, Nov. 2017.

[2] R. Hayakawa and K. Hayashi, "Convex optimization based signal detection for massive overloaded MIMO systems," IEEE Trans. Wireless Commun., vol. 16, no. 11, pp. 7080-7091, Nov. 2017.

[3] H. Sasahara, K. Hayashi, and M. Nagahara, "Symbol detection for faster-than-Nyquist signaling by sum-of-absolute-values optimization," IEEE Signal Process. Lett., vol. 23, no. 12, pp. 1853-1857, Dec. 2016.

$b \in \{r_1, \dots, r_L\}^N$](https://image.slidesharecdn.com/apsipa2017public-171215145853/85/Binary-Vector-Reconstruction-via-Discreteness-Aware-Approximate-Message-Passing-3-320.jpg)
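
The measurement model on this slide can be made concrete with a small sketch. The function name `make_problem`, the Gaussian sensing matrix with variance 1/M, and the sizes used below are assumptions for illustration only; the slides do not state how the matrix is drawn.

```python
import random

def make_problem(N, M, alphabet, probs, seed=0):
    """Draw b from a discrete alphabet and form y = A b with M < N."""
    rng = random.Random(seed)
    # Each element of b takes a value from the alphabet with the given probabilities.
    b = rng.choices(alphabet, weights=probs, k=N)
    # Assumption: i.i.d. zero-mean Gaussian entries with variance 1/M.
    A = [[rng.gauss(0.0, M ** -0.5) for _ in range(N)] for _ in range(M)]
    # Noise-free linear measurements, one per row of A.
    y = [sum(a_ij * b_j for a_ij, b_j in zip(row, b)) for row in A]
    return A, b, y

A, b, y = make_problem(N=8, M=5, alphabet=[0.0, 1.0], probs=[0.5, 0.5])
print(len(y), "measurements for", len(b), "unknowns")
```

Because M < N, the linear system alone does not determine the unknown vector; the discreteness of its elements is the extra information that reconstruction methods exploit.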

![Conventional Approach

Introduction

✦ regularization-based method [4]

✦ transform-based method [4]

✦ SOAV (Sum-of-Absolute-Values) optimization [5]

based on convex optimization

✦ DAMP (Discreteness-Aware Approximate Message Passing) algorithm [7]

apply the idea of AMP (Approximate Message Passing) algorithm [6]

❖ low computational complexity

❖ analytical tractability

[4] A. Aïssa-El-Bey, D. Pastor, S. M. A. Sbaï, and Y. Fadlallah, "Sparsity-based recovery of finite alphabet solutions to underdetermined linear systems," IEEE Trans. Inf. Theory, vol. 61, no. 4, pp. 2008-2018, Apr. 2015.

[5] M. Nagahara, "Discrete signal reconstruction by sum of absolute values," IEEE Signal Process. Lett., vol. 22, no. 10, pp. 1575-1579, Oct. 2015.

[6] D. L. Donoho, A. Maleki, and A. Montanari, "Message-passing algorithms for compressed sensing," Proc. Nat. Acad. Sci., vol. 106, no. 45, pp. 18914-18919, Nov. 2009.

[7] R. Hayakawa and K. Hayashi, "Discreteness-aware AMP for reconstruction of symmetrically distributed discrete variables," in Proc. IEEE SPAWC 2017, Jul. 2017.](https://image.slidesharecdn.com/apsipa2017public-171215145853/85/Binary-Vector-Reconstruction-via-Discreteness-Aware-Approximate-Message-Passing-4-320.jpg)

![Purpose of This Work

Introduction

In [7], a symmetric distribution of the elements of $b$ is assumed.

ex.) $b_j \in \{0, \pm 1\}$, $b_j \in \{\pm 1, \pm 3\}$

not applicable for $b \in \{0, 1\}^N$

Purpose of This Work

For a binary vector $b \in \{r_1, r_2\}^N$ (the simplest case),

1. extend the DAMP algorithm for asymmetric distributions

2. provide a condition for the perfect reconstruction

3. derive Bayes optimal DAMP](https://image.slidesharecdn.com/apsipa2017public-171215145853/85/Binary-Vector-Reconstruction-via-Discreteness-Aware-Approximate-Message-Passing-5-320.jpg)

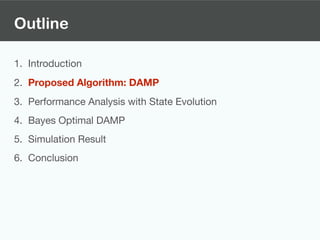

![Overview of Derivation

Proposed Algorithm: DAMP

✦ unknown vector: $b \in \{r_1, r_2\}^N$ ($r_1 < r_2$)

✦ probability: $\Pr(b_j = r_1) = p_1$, $\Pr(b_j = r_2) = p_2$

✦ measurements: $y = Ab$

SOAV optimization [5]

$$\hat{b} = \arg\min_{s \in \mathbb{R}^N} \left( q_1 \| s - r_1 \mathbf{1} \|_1 + q_2 \| s - r_2 \mathbf{1} \|_1 \right) \quad \text{subject to } y = As$$

($q_1$, $q_2$: parameters)

based on the fact that $b - r_1 \mathbf{1}$ and $b - r_2 \mathbf{1}$ have approximately $p_1 N$ and $p_2 N$ zero elements, respectively

apply the idea of AMP (Approximate Message Passing) algorithm [6]

Proposed algorithm: DAMP (Discreteness-aware AMP)](https://image.slidesharecdn.com/apsipa2017public-171215145853/85/Binary-Vector-Reconstruction-via-Discreteness-Aware-Approximate-Message-Passing-7-320.jpg)
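
The sparsity fact behind the SOAV objective can be checked numerically. The sketch below is only an illustration: the helper name `soav_objective`, the alphabet {0, 1}, the probability 0.3, and the weights 0.5 are assumed values, not taken from the slides.

```python
import random

def soav_objective(s, r1, r2, q1, q2):
    """Weighted sum of l1 distances from s to the constant vectors r1*1 and r2*1."""
    return q1 * sum(abs(v - r1) for v in s) + q2 * sum(abs(v - r2) for v in s)

random.seed(1)
N, r1, r2, p1 = 1000, 0.0, 1.0, 0.3  # illustrative values (assumptions)
b = [r1 if random.random() < p1 else r2 for _ in range(N)]

# b - r1*1 is zero exactly where b_j = r1, so it has about p1*N zero elements.
zeros_r1 = sum(1 for v in b if v - r1 == 0)
zeros_r2 = sum(1 for v in b if v - r2 == 0)
print("zeros of b - r1*1:", zeros_r1, "expected about", p1 * N)
print("zeros of b - r2*1:", zeros_r2, "expected about", (1 - p1) * N)
print("objective at b:", soav_objective(b, r1, r2, 0.5, 0.5))
```

Since each shifted vector is sparse, penalizing both l1 norms favors solutions whose elements sit on one of the two alphabet values, which is the motivation for the weighted objective.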

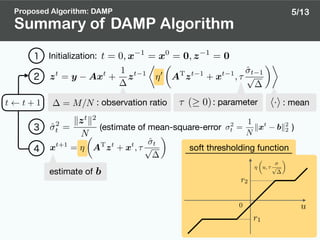

![[6] D. L. Donoho, A. Maleki, and A. Montanari, “Message-passing algorithms for compressed sensing,”

Proc. Nat. Acad. Sci., vol. 106, no. 45, pp. 18914–18919, Nov. 2009.

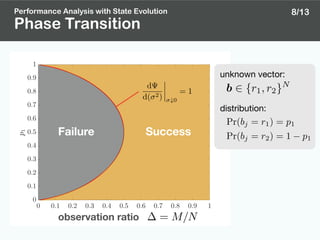

State Evolution

: estimate of at the th iteration

Performance Analysis with State Evolution

: mean-square-error (MSE) of

State Evolution [6]

( 2

) = E

"⇢

⌘

✓

X + p Z, ⌧ p

◆

X

2

#

predict the behavior of MSE

X ⇠ probability distribution of bj

Z ⇠ standard Gaussian distribution

In the large system limit ,

6/13

x

z

ex.)](https://image.slidesharecdn.com/apsipa2017public-171215145853/85/Binary-Vector-Reconstruction-via-Discreteness-Aware-Approximate-Message-Passing-10-320.jpg)
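
The state evolution function can be estimated by Monte Carlo sampling and iterated as a recursion, in the spirit of [6]. The sketch below rests on several assumptions: the function applied to each element is taken to be the proximal operator of the SOAV penalty (the slide defining DAMP's thresholding function survives only as an image), `delta` stands for the measurement ratio M/N, and the values of `tau`, `delta`, and the weights are arbitrary.

```python
import math
import random

def soav_prox(u, thr, r1=0.0, r2=1.0, q1=0.5, q2=0.5):
    """Proximal operator of thr * (q1*|x - r1| + q2*|x - r2|), assuming r1 < r2."""
    lo, hi = thr * (q1 - q2), thr * (q1 + q2)  # shifts in the middle / outer regions
    if u < r1 - hi:
        return u + hi
    if u <= r1 + lo:
        return r1
    if u < r2 + lo:
        return u - lo
    if u <= r2 + hi:
        return r2
    return u - hi

def psi(sigma2, delta, tau, r1=0.0, r2=1.0, p1=0.5, n_samples=20000, seed=0):
    """Monte Carlo estimate of E[(eta(X + s*Z, tau*s) - X)^2] with s = sqrt(sigma2/delta)."""
    rng = random.Random(seed)
    s = math.sqrt(sigma2 / delta)
    total = 0.0
    for _ in range(n_samples):
        x = r1 if rng.random() < p1 else r2   # X follows the distribution of b_j
        u = x + s * rng.gauss(0.0, 1.0)       # Z is standard Gaussian
        total += (soav_prox(u, tau * s, r1, r2) - x) ** 2
    return total / n_samples

sigma2 = 0.5  # MSE of the all-zero estimate when b_j is 0 or 1 with probability 1/2
for t in range(10):
    sigma2 = psi(sigma2, delta=0.8, tau=1.0)
    print(t + 1, sigma2)
```

If the printed sequence shrinks toward zero, the recursion predicts perfect reconstruction for that choice of measurement ratio and threshold parameter; if it stalls at a positive value, it predicts a residual error.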

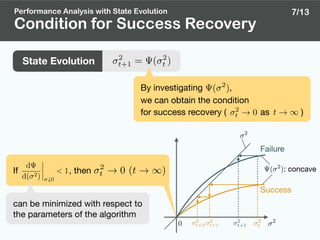

![Bayes Optimal DAMP

Bayes Optimal AMP [8]

AMP algorithm (in general form)

$$z^t = y - A x^t + \frac{1}{\Delta} z^{t-1} \left\langle \eta'\left(A^{\mathsf{T}} z^{t-1} + x^{t-1}\right) \right\rangle,$$

$$x^{t+1} = \eta\left(A^{\mathsf{T}} z^t + x^t\right)$$

($\Delta = M/N$)

Any Lipschitz function $\eta(\cdot)$ can be used

State evolution

$$\Psi(\sigma^2) = \mathrm{E}\left[\left\{\eta\left(X + \frac{\sigma}{\sqrt{\Delta}} Z\right) - X\right\}^2\right]$$

smaller $\Psi(\sigma^2)$ is better

Bayes optimal AMP [8]: AMP with $\eta(\cdot)$ minimizing $\Psi(\sigma^2)$

[8] D. L. Donoho, A. Maleki, and A. Montanari, "The noise-sensitivity phase transition in compressed sensing," IEEE Trans. Inf. Theory, vol. 57, no. 10, pp. 6920-6941, Oct. 2011.](https://image.slidesharecdn.com/apsipa2017public-171215145853/85/Binary-Vector-Reconstruction-via-Discreteness-Aware-Approximate-Message-Passing-14-320.jpg)
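
For a fixed effective noise level, the function that minimizes the mean squared error between the output and X is the conditional mean of X given the noisy observation, so a Bayes optimal choice for a binary prior can be sketched as below and plugged into the general AMP iteration. This is a minimal sketch under assumptions, not the authors' implementation: the Gaussian sensing matrix with variance 1/M, the estimate of the effective noise variance from the residual norm, the all-zero initialization, and all names and sizes are illustrative choices.

```python
import math
import random

def posterior_mean(u, s2, r1=0.0, r2=1.0, p1=0.5, p2=0.5):
    """Return E[X | X + sqrt(s2)*Z = u] and its derivative in u for a two-point prior."""
    # Log-odds of X = r2 versus X = r1 given the observation u.
    log_odds = math.log(p2 / p1) + ((u - r1) ** 2 - (u - r2) ** 2) / (2.0 * s2)
    # Numerically stable logistic function (only exponentiates non-positive values).
    if log_odds >= 0:
        w2 = 1.0 / (1.0 + math.exp(-log_odds))
    else:
        e = math.exp(log_odds)
        w2 = e / (1.0 + e)
    eta = r1 + (r2 - r1) * w2
    deta = (r2 - r1) ** 2 * w2 * (1.0 - w2) / s2
    return eta, deta

def amp(y, A, n_iter=20):
    """General AMP iteration with the posterior-mean function applied element-wise."""
    M, N = len(A), len(A[0])
    delta = M / N
    x = [0.0] * N          # initial estimate
    z = list(y)            # initial residual
    for _ in range(n_iter):
        # Assumption: effective noise variance estimated from the residual norm.
        s2 = max(sum(v * v for v in z) / M, 1e-12)
        u = [sum(A[i][j] * z[i] for i in range(M)) + x[j] for j in range(N)]
        pairs = [posterior_mean(uj, s2) for uj in u]
        x = [p[0] for p in pairs]
        mean_deriv = sum(p[1] for p in pairs) / N
        Ax = [sum(A[i][j] * x[j] for j in range(N)) for i in range(M)]
        # Residual update with the Onsager correction term.
        z = [y[i] - Ax[i] + z[i] * mean_deriv / delta for i in range(M)]
    return x

random.seed(0)
N, M = 120, 90  # illustrative sizes with M < N
b = [float(random.random() < 0.5) for _ in range(N)]
A = [[random.gauss(0.0, 1.0 / math.sqrt(M)) for _ in range(N)] for _ in range(M)]
y = [sum(A[i][j] * b[j] for j in range(N)) for i in range(M)]
x_hat = amp(y, A)
mse = sum((x_hat[j] - b[j]) ** 2 for j in range(N)) / N
print(f"MSE after AMP: {mse:.3e}")
```

The posterior mean is a convex combination of the two alphabet values, so every estimate stays between them; swapping in a different Lipschitz function only requires replacing `posterior_mean` with a function returning the value and its derivative.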