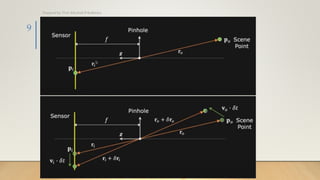

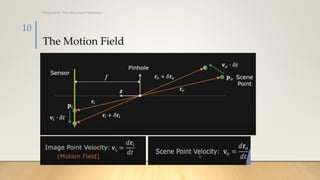

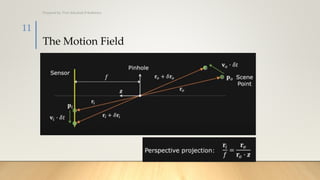

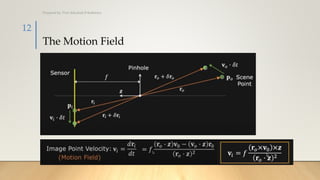

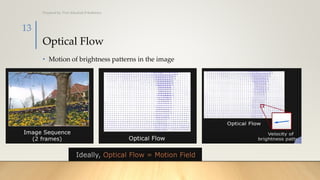

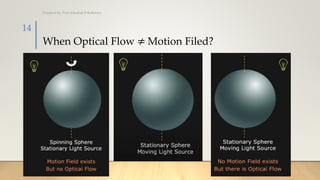

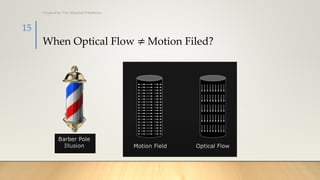

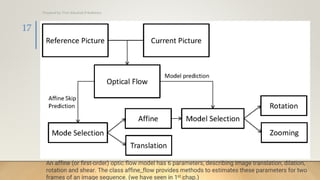

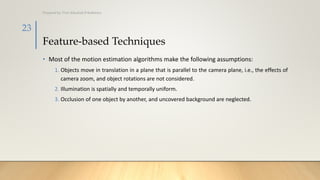

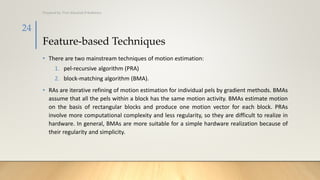

The document discusses motion representation in computer vision, focusing on motion fields, optical flow, motion parallax, and feature-based techniques for motion estimation. It highlights the importance of tracking the motion of objects in 3D scenes through their 2D image representations, and covers estimation methods such as pel-recursive and block-matching algorithms. It also addresses challenges in motion analysis, such as occlusion and the complexity of human motion, which are especially relevant to computer animation and video processing.
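To make the block-matching idea mentioned above concrete, here is a minimal sketch of exhaustive block matching with a sum-of-absolute-differences (SAD) cost. It is an illustration under stated assumptions, not the method from the slides: frames are plain lists of grayscale rows, and the names `sad`, `match_block`, and `make_frame`, as well as the `size` and `search` parameters, are hypothetical. The returned displacement points from the block in the current frame to its best match in the previous frame, so the motion of the content is its negation.

```python
def sad(prev, curr, top, left, dy, dx, size):
    """Sum of absolute differences between the block at (top, left) in
    `curr` and the block displaced by (dy, dx) in `prev`."""
    total = 0
    for r in range(size):
        for c in range(size):
            total += abs(curr[top + r][left + c] - prev[top + dy + r][left + dx + c])
    return total


def match_block(prev, curr, top, left, size=4, search=2):
    """Exhaustive search over a (2*search+1)^2 window for the displacement
    into `prev` that minimises SAD (assumed cost function)."""
    height, width = len(prev), len(prev[0])
    best = (0, 0)
    best_cost = sad(prev, curr, top, left, 0, 0, size)
    for dy in range(-search, search + 1):
        for dx in range(-search, search + 1):
            # Skip candidate blocks that would fall outside the frame.
            if top + dy < 0 or top + dy + size > height:
                continue
            if left + dx < 0 or left + dx + size > width:
                continue
            cost = sad(prev, curr, top, left, dy, dx, size)
            if cost < best_cost:
                best, best_cost = (dy, dx), cost
    return best, best_cost


def make_frame(height, width, top, left, size, value=255):
    """Synthetic test frame: a bright square on a black background."""
    frame = [[0] * width for _ in range(height)]
    for r in range(top, top + size):
        for c in range(left, left + size):
            frame[r][c] = value
    return frame


# The square moves 1 pixel down and 2 pixels right between frames.
prev_frame = make_frame(8, 8, top=2, left=2, size=2)
curr_frame = make_frame(8, 8, top=3, left=4, size=2)
displacement, cost = match_block(prev_frame, curr_frame, top=3, left=4, size=2, search=2)
print(displacement, cost)  # (-1, -2) 0
```

The exhaustive search is the simplest variant; its cost grows with the square of the search range, which is why practical systems usually restrict the window or use faster search patterns.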