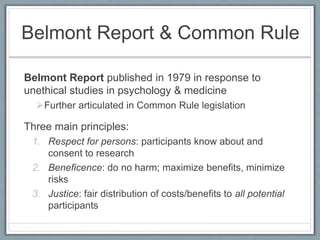

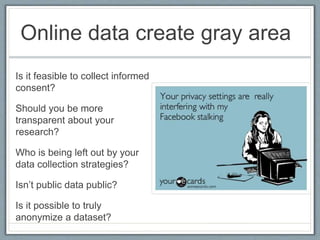

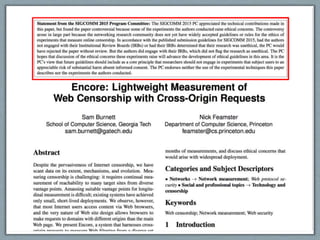

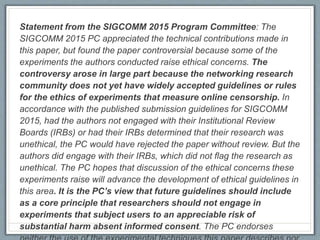

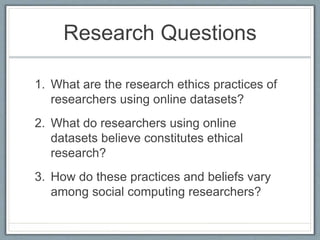

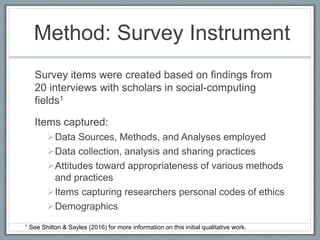

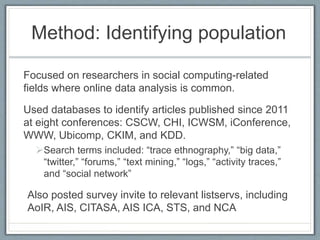

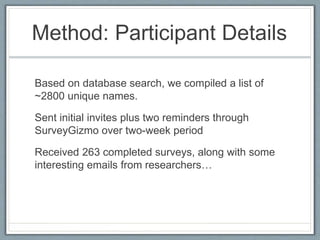

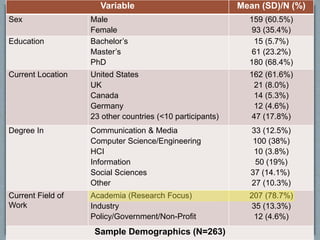

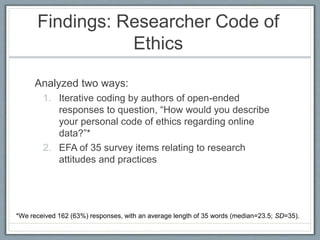

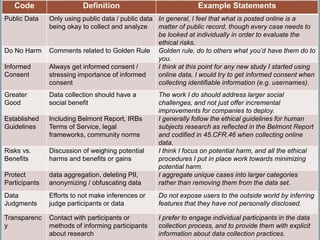

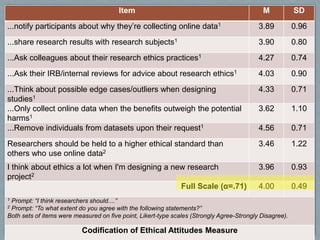

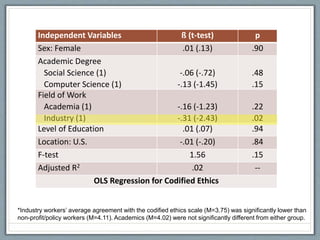

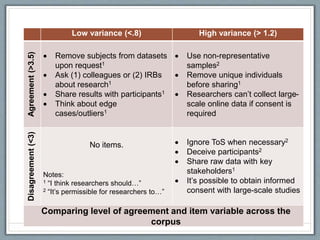

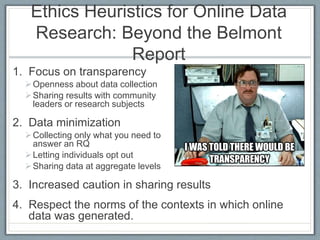

The document discusses the ethical challenges facing researchers who work with online data, highlighting the importance of informed consent and the complexities of data ethics. It presents findings from a survey of social computing researchers about their ethical practices and beliefs, which reveal a lack of widely accepted guidelines for ethical online research. Key recommendations include enhancing transparency, practicing data minimization, and respecting the contextual norms of online data usage.