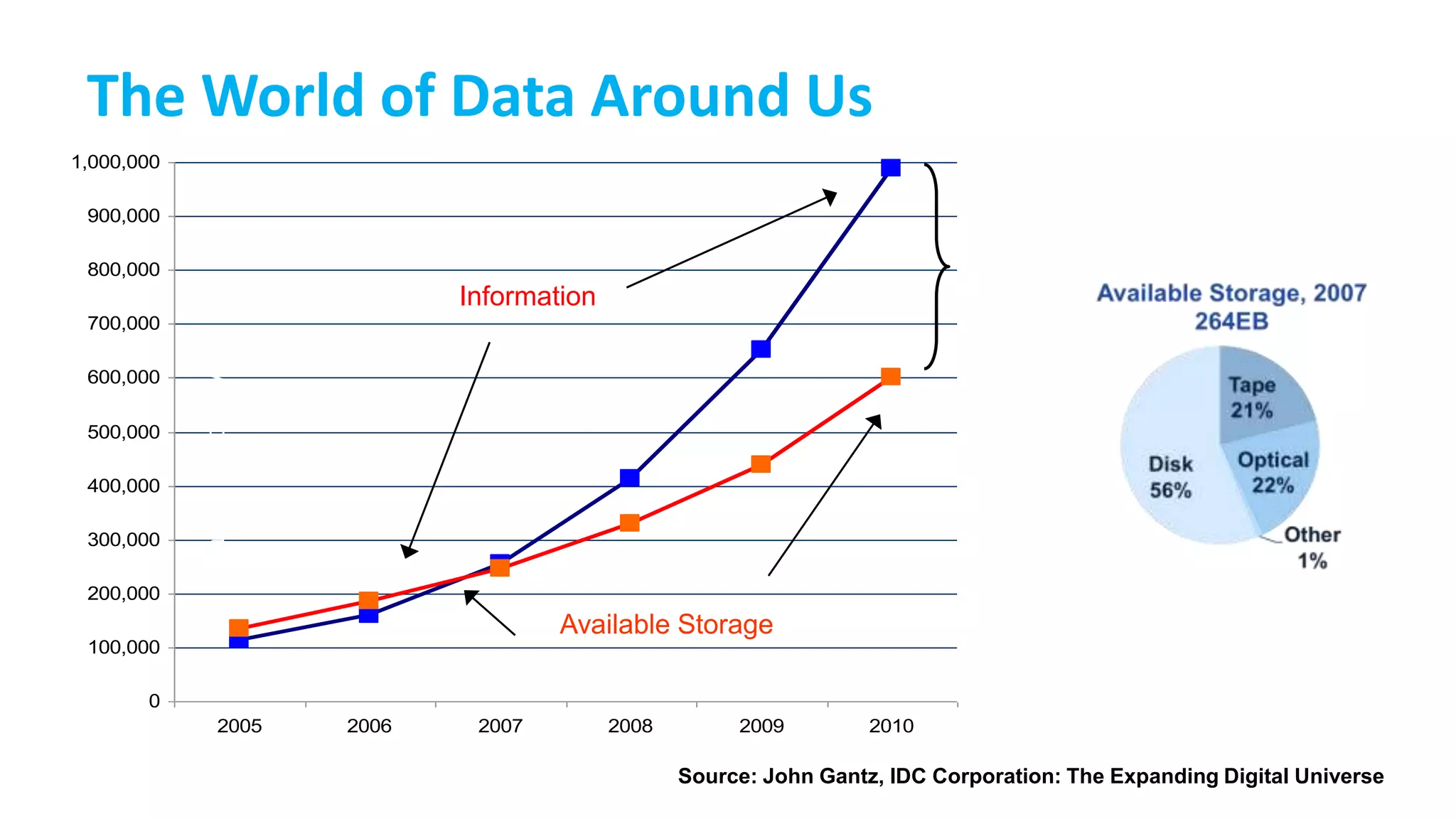

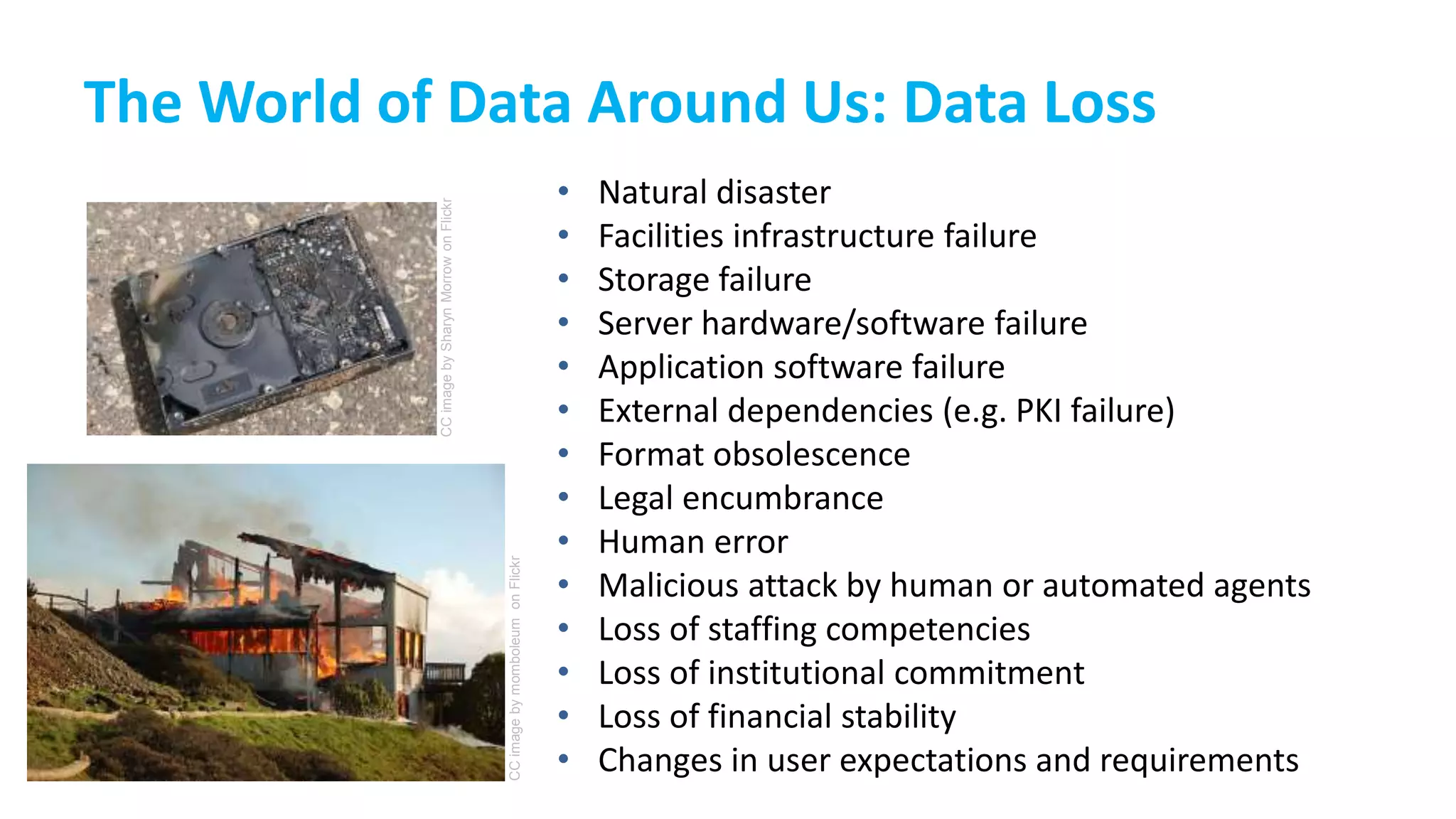

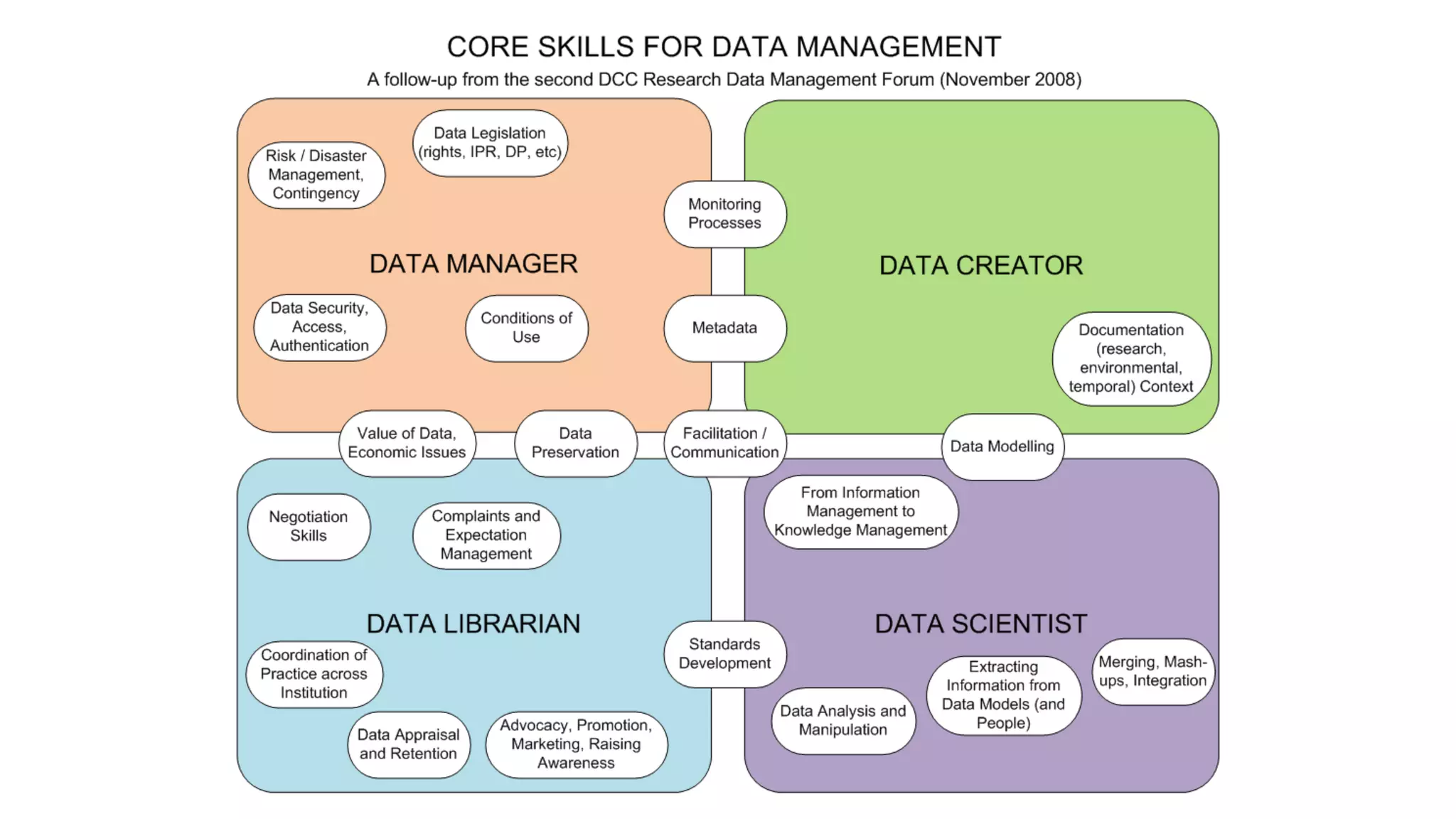

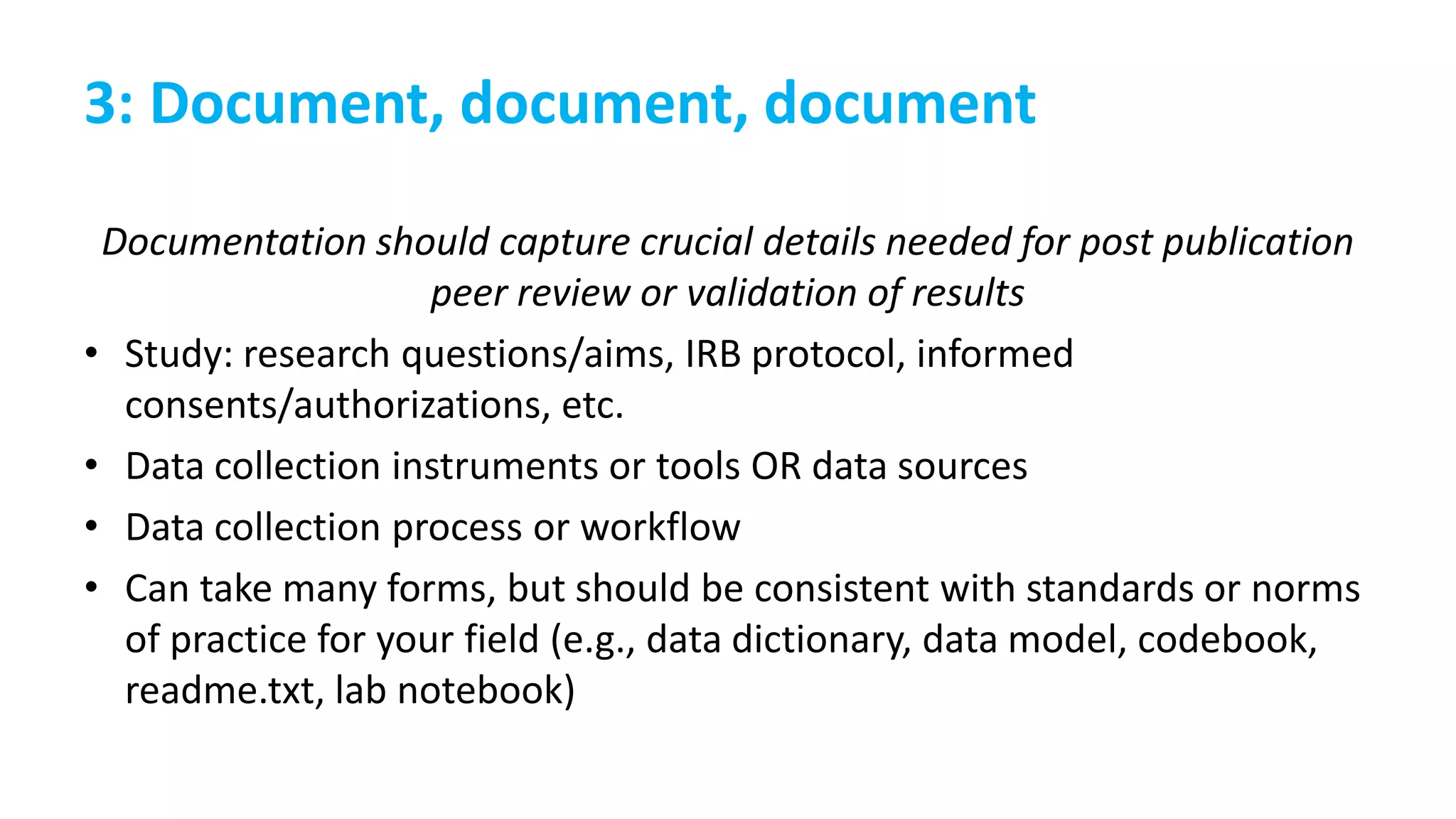

This document provides an overview of responsible data management practices for research integrity. It discusses key concepts such as research data management (RDM), the research data life cycle, the roles and responsibilities of research team members, and practical RDM strategies.

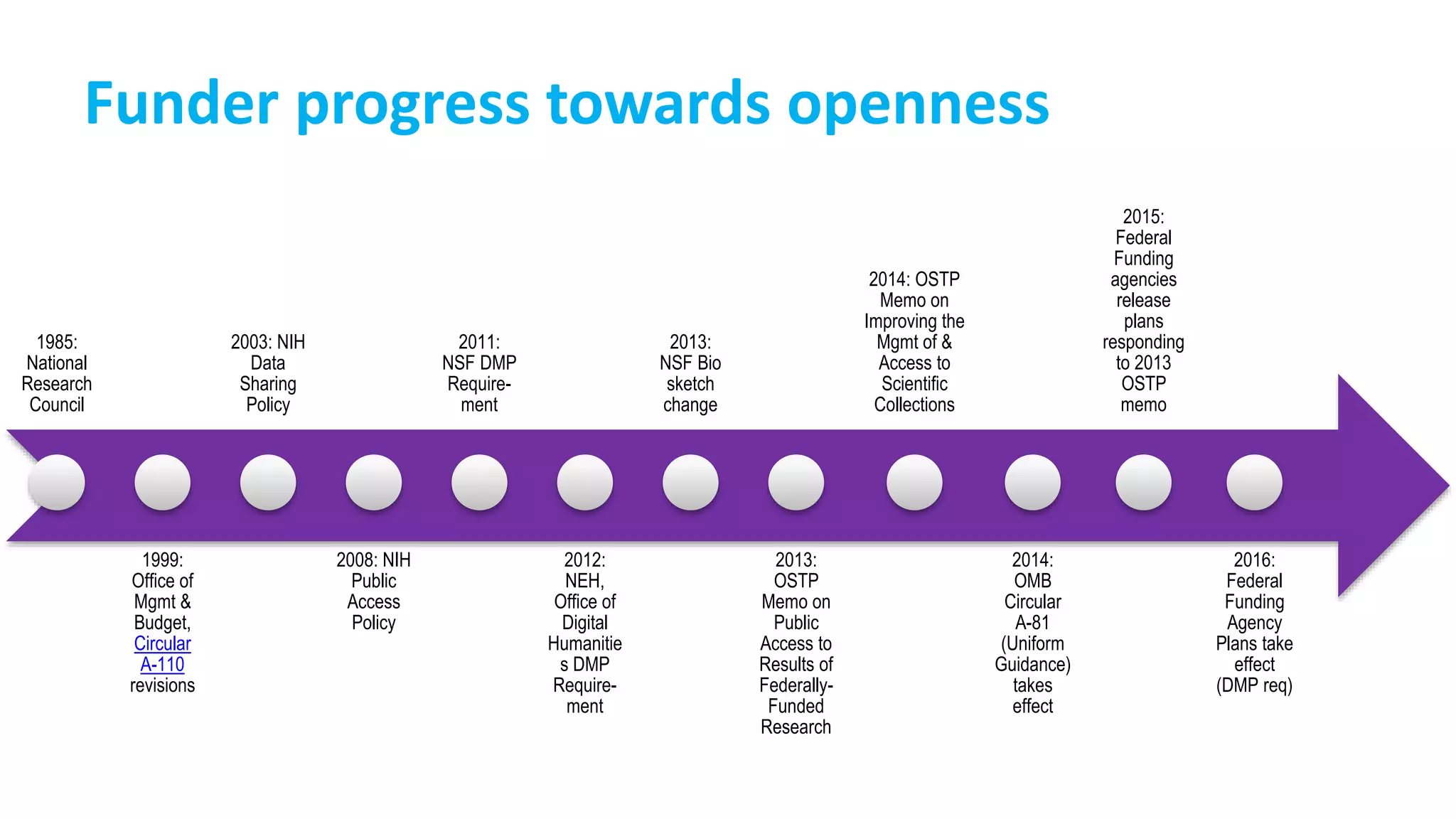

The document emphasizes the importance of RDM for ensuring that research can be verified, reproduced, and built upon. It outlines funder and publisher policies that require data management plans and data sharing. Practical RDM tips include:

- writing a data management plan
- following backup best practices
- documenting thoroughly
- working consistently
- using standards
- monitoring quality
- sharing data openly where possible

Overall, it presents RDM as crucial both for research integrity and for preserving the value of data over time.

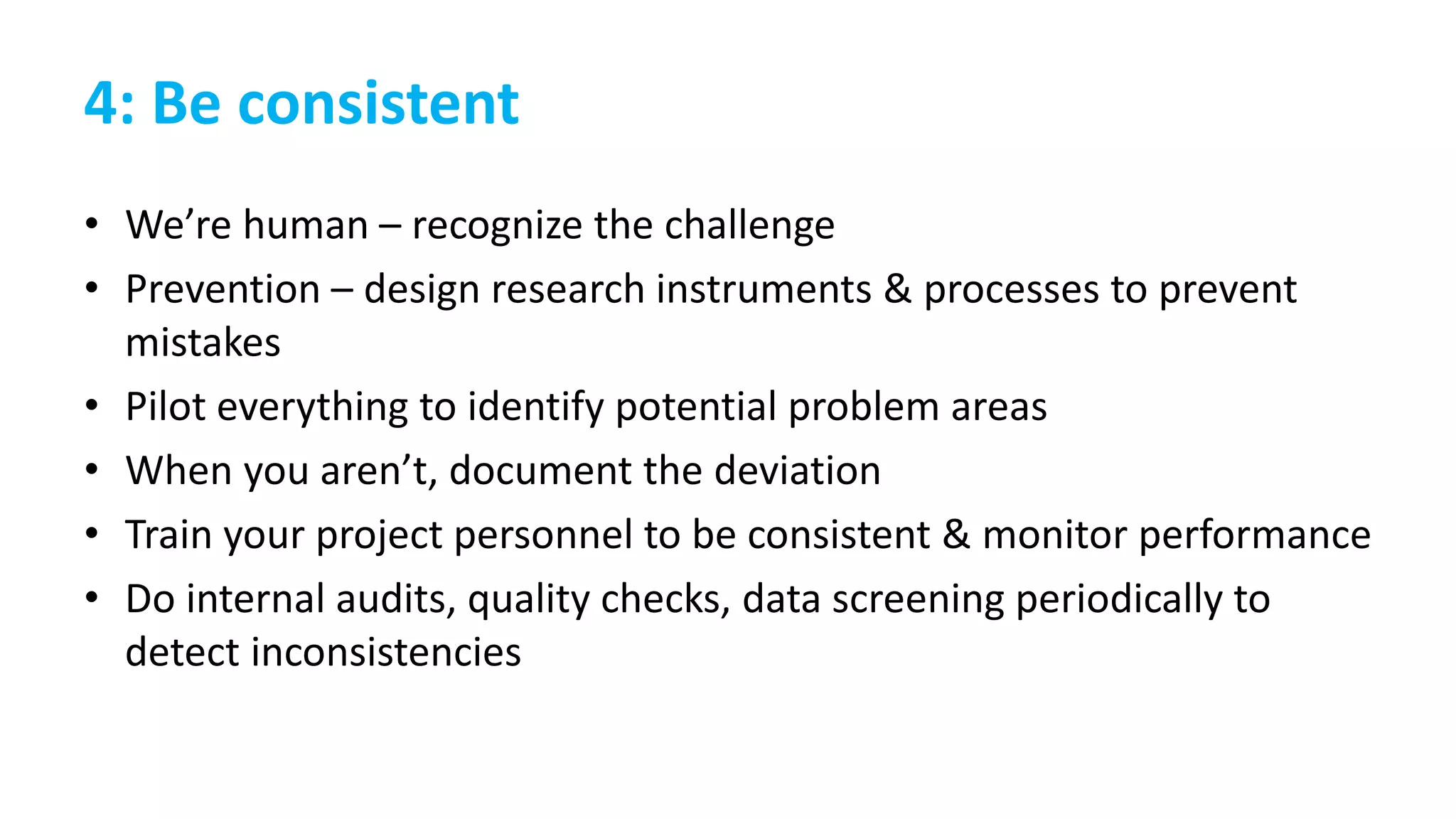

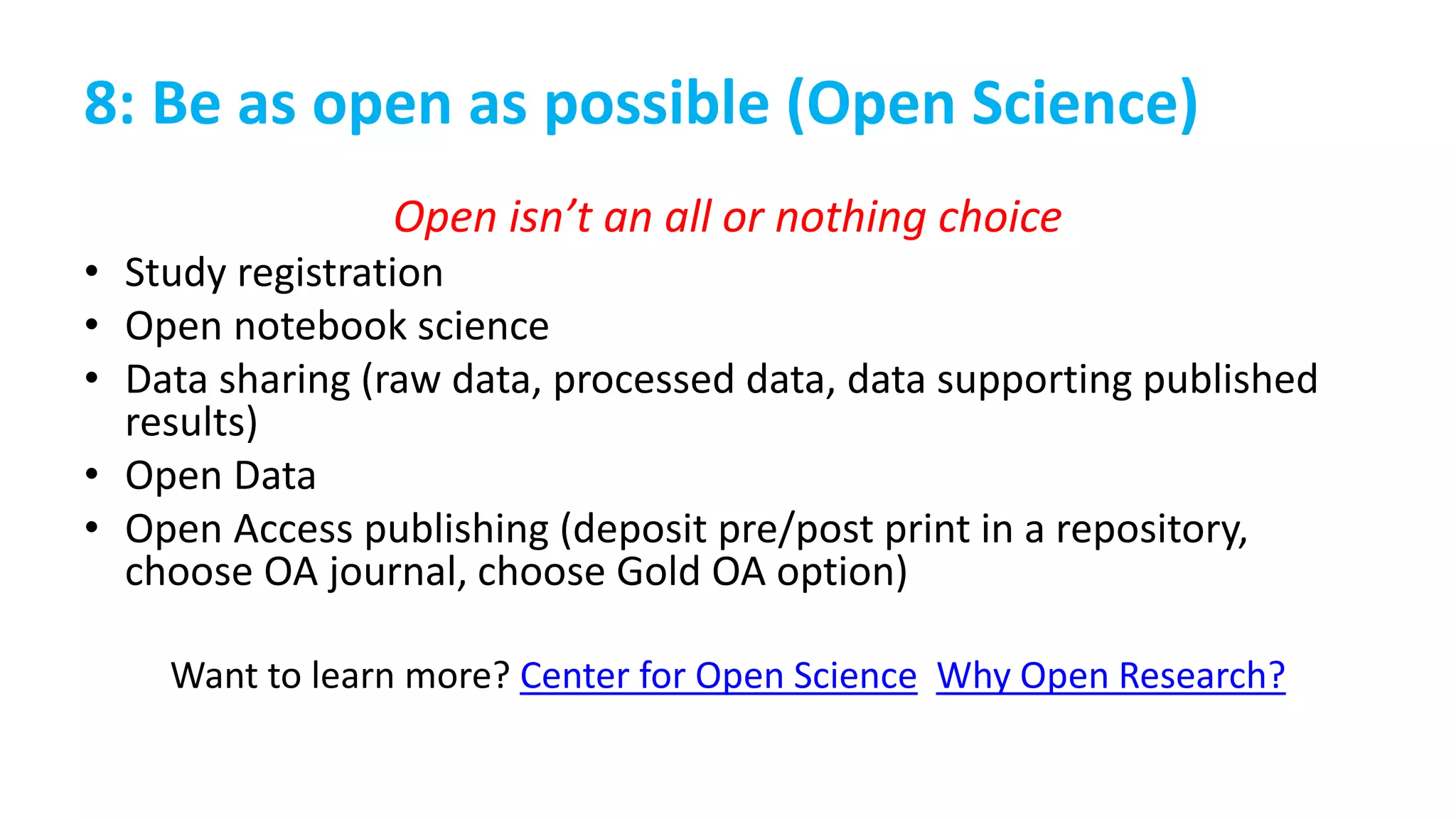

![Project roles and activities template (team member name, project role, activity description)](https://image.slidesharecdn.com/quaid-g504fa2016ethicsrdmpublic-161009190242/75/Managing-data-responsibly-to-enable-research-interity-31-2048.jpg)

| Team Member Name | Project Role | Activity Description |
| --- | --- | --- |
| | Project design [+ documentation] | Determining the aims of the project and the methods used to achieve those aims, and identifying the products resulting from the project. |
| | | Translating the aims of the project into measurable research questions or hypotheses. |
| | Instrument/measure/data collection tool design [+ documentation] | Creating tools that adapt the research questions or hypotheses into questions that can be addressed by discrete data points. |
| | | Validating tools through external review or pilot testing. |
| | Data collection [+ documentation] | Conducting surveys, interviews, experiments, and other project procedures according to the protocol in order to generate data. |
| | Data processing [+ documentation]: entry, proofing/cleaning, preparation for analysis | Entering analog data into a spreadsheet or database. Documenting the procedures, date, and person responsible. |
| | | Checking data entry for accuracy and completeness. Documenting the procedures, date, and person responsible. |
| | | Checking data for missing values, errors, and outliers. Documenting the procedures, date, and person responsible. |
| | | Deciding what data to include or exclude. Documenting the decision-making process and criteria used. |
| | Data analysis [+ documentation] | Selecting the analytical tools to be used. Documenting the decision-making process and criteria used. |
| | | Conducting data characterization and screening tests, running analyses, and generating results. Documenting the process and files generated. |
| | | Deciding what data are relevant to the project aims and objectives. Documenting the decision-making process and criteria used. |
| | Data reporting | Creating summary tables, graphs, and other visuals to represent the data. |
| | | Writing up the project details and relevant results in the packages/format requested by the client, as specified by the deliverables agreed upon in the contract. |
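The data processing activities listed above can be illustrated in code. These activities are checking for missing data and outliers, deciding what to include or exclude, and documenting the procedure, date, and person responsible. What follows is a minimal Python sketch under stated assumptions, not a prescribed tool. The record layout, the `age` field, and the 18 to 100 plausibility range are hypothetical. So are the helper names `ProcessingLog`, `check_records`, and `exclude_rows`.

```python
from dataclasses import dataclass, field
from datetime import date
from typing import Optional


@dataclass
class LogEntry:
    """One documented processing step: what was done, when, and by whom."""
    procedure: str
    performed_on: date
    person: str
    details: str


@dataclass
class ProcessingLog:
    """Hypothetical audit trail kept alongside the data files."""
    entries: list = field(default_factory=list)

    def record(self, procedure: str, person: str, details: str,
               when: Optional[date] = None) -> None:
        self.entries.append(LogEntry(procedure, when or date.today(), person, details))


def check_records(records, field_name, low, high, person, log):
    """Flag missing and out-of-range values in one field without changing the data.

    The plausible range [low, high] is an assumed screening criterion.
    Returns (missing_rows, out_of_range_rows) as lists of row indices.
    """
    missing = [i for i, r in enumerate(records) if r.get(field_name) is None]
    out_of_range = [
        i for i, r in enumerate(records)
        if r.get(field_name) is not None and not low <= r[field_name] <= high
    ]
    log.record(
        procedure=f"Screen '{field_name}' for missing and out-of-range values",
        person=person,
        details=f"range [{low}, {high}]; missing rows {missing}; "
                f"out-of-range rows {out_of_range}",
    )
    return missing, out_of_range


def exclude_rows(records, rows, reason, person, log):
    """Drop the given row indices and document the criterion used."""
    drop = set(rows)
    kept = [r for i, r in enumerate(records) if i not in drop]
    log.record(
        procedure="Exclude rows from analysis dataset",
        person=person,
        details=f"rows {sorted(drop)} excluded: {reason}",
    )
    return kept


# Illustrative usage with invented survey data.
log = ProcessingLog()
survey = [{"age": 34}, {"age": None}, {"age": 212}, {"age": 51}]
missing, flagged = check_records(survey, "age", 18, 100, person="A. Analyst", log=log)
print(missing, flagged)  # [1] [2]
cleaned = exclude_rows(survey, missing + flagged, "age missing or outside 18-100",
                       person="A. Analyst", log=log)
print(len(cleaned), len(log.entries))  # 2 2
```

Screening and exclusion are deliberately separate steps in this sketch. Flagging a value does not remove it, so the decision to exclude is made explicitly and gets its own log entry with the criterion used.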

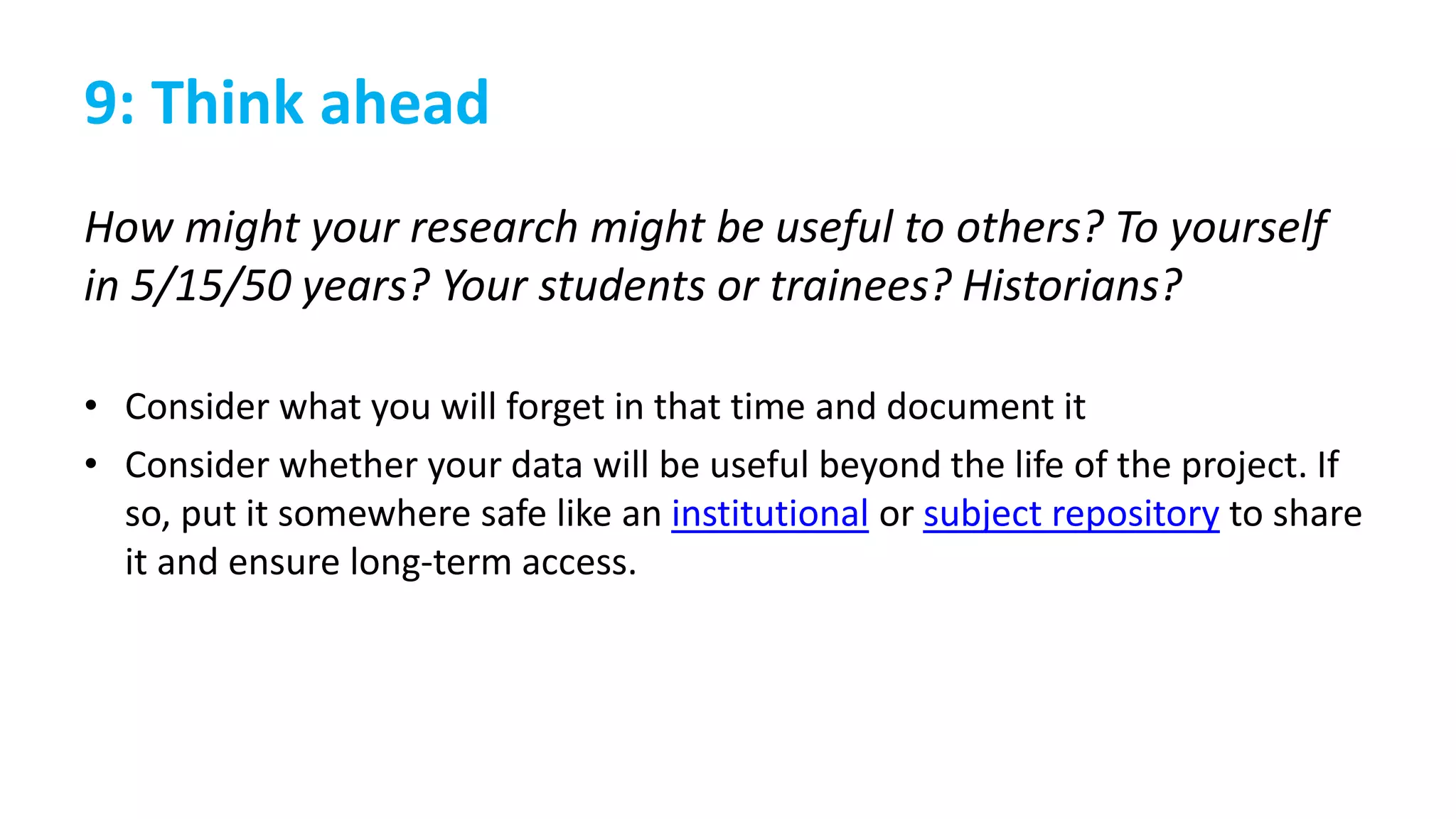

![Slide 3C: structured documentation (metadata)](https://image.slidesharecdn.com/quaid-g504fa2016ethicsrdmpublic-161009190242/75/Managing-data-responsibly-to-enable-research-interity-43-2048.jpg)

3C: Structured documentation (metadata) is crucial for discovery, reuse, and interoperability.

- Metadata describes the who, what, when, where, how, and why of the data.
- Metadata is documentation for machines: standardized and structured.
- Its purpose is to enable evaluation, discovery, organization, management, reuse, authority/identification, and preservation.
- Standards are commonly agreed-upon terms and definitions in a structured format.
- Good documentation builds trust in your data through provenance, data integrity, transparency, an audit trail, and so on.
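To make the metadata points concrete, here is a minimal Python sketch of a structured, machine-readable record for one data file. The record covers the who, what, when, where, how, and why of the file. It also stores a SHA-256 checksum so that a later user can confirm the file has not changed. The element names are borrowed loosely from Dublin Core (`title`, `creator`, `date`, `coverage`, `description`), and `method` is a hypothetical addition. This is an illustration, not a validated Dublin Core record, and all values are invented.

```python
import hashlib
import json

# Hypothetical minimum set of elements; names loosely follow Dublin Core
# (title, creator, date, coverage, description) plus an invented "method".
REQUIRED = ("title", "creator", "date", "coverage", "description", "method")


def build_record(data: bytes, **elements: str) -> dict:
    """Return a structured metadata record for one data file.

    Raises ValueError if a required element is missing or empty, so
    incomplete documentation is caught before the data are shared.
    """
    missing = [name for name in REQUIRED if not elements.get(name)]
    if missing:
        raise ValueError(f"missing metadata elements: {missing}")
    record = dict(elements)
    # A checksum lets a later user confirm the file is unchanged (integrity).
    record["sha256"] = hashlib.sha256(data).hexdigest()
    return record


def verify(data: bytes, record: dict) -> bool:
    """Check a data file against the checksum stored in its metadata."""
    return hashlib.sha256(data).hexdigest() == record["sha256"]


# Illustrative usage; every value below is invented.
raw = b"id,age\n1,34\n2,51\n"
rec = build_record(
    raw,
    title="Pilot survey responses",                          # what
    creator="A. Analyst",                                    # who
    date="2016-09-15",                                       # when
    coverage="Single study site",                            # where
    description="Cleaned pilot data for hypothesis H1",      # why
    method="Online questionnaire, pilot-tested instrument",  # how
)
print(json.dumps(rec, indent=2))  # structured, machine-readable record
print(verify(raw, rec))           # True
print(verify(raw + b"3,", rec))   # False
```

Serializing to JSON keeps the record readable by both people and machines. Rejecting incomplete records at creation time is one way to enforce a project's chosen standard.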