The document provides an overview of Hadoop installation and MapReduce programming. It discusses:

- Why Hadoop is used for big data mining.

- How to learn Hadoop and MapReduce programming.

- What will be covered, including Hadoop installation, HDFS basics, and MapReduce programming.

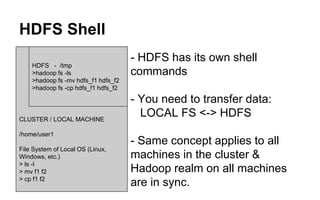

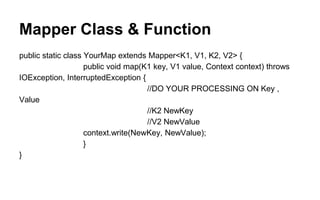

It then covers installing Hadoop on Amazon EC2, the two main Hadoop components (HDFS and MapReduce), using the HDFS shell, the MapReduce programming model and its components, writing MapReduce applications in Java, and configuring jobs. Code examples are provided for a sample reverse indexing application.

![MapReduce Concept](https://image.slidesharecdn.com/cs267hadoopprogramming-140803210623-phpapp01/85/Cs267-hadoop-programming-6-320.jpg)

- A programming model for distributed parallel computing.

- Runs on scalable clusters of commodity hardware.

- Can process big data (hundreds of GBs to TBs).

- Based on a key-value structure.

- Parallel MAP tasks emit `<K, V>` pairs.

- Parallel REDUCE tasks process `<K, V[ ]>` groups.
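To make the key-value model concrete without a cluster, here is a minimal in-memory sketch in plain Java (not Hadoop's API). `LocalMapReduce.run` is a hypothetical helper: it applies a map function to every input record, groups the emitted values by key, and calls a reduce function once per key with all of that key's values. It assumes Java 8+; the usage below also assumes Java 9+ for `List.of`/`Map.of`.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;
import java.util.function.BiConsumer;
import java.util.function.BiFunction;

// Hypothetical in-memory illustration of the MapReduce key-value model (no Hadoop involved).
class LocalMapReduce {

    // mapper:  receives one input record plus an "emit" callback for <K, V> pairs.
    // reducer: receives one key and the list of all values emitted for it (<K, V[ ]>).
    static <I, K extends Comparable<K>, V, R> Map<K, R> run(
            List<I> inputs,
            BiConsumer<I, BiConsumer<K, V>> mapper,
            BiFunction<K, List<V>, R> reducer) {
        // Map phase + grouping: collect every value emitted for the same key.
        // TreeMap keeps keys sorted, like the key order a Hadoop reducer sees.
        TreeMap<K, List<V>> groups = new TreeMap<>();
        for (I input : inputs) {
            mapper.accept(input, (k, v) -> groups.computeIfAbsent(k, x -> new ArrayList<>()).add(v));
        }
        // Reduce phase: one call per distinct key.
        TreeMap<K, R> output = new TreeMap<>();
        for (Map.Entry<K, List<V>> e : groups.entrySet()) {
            output.put(e.getKey(), reducer.apply(e.getKey(), e.getValue()));
        }
        return output;
    }
}
```

For example, a word-count mapper that emits `(word, 1)` for each word, paired with a reducer that returns the size of each value list, turns the inputs `"a b a"` and `"b c"` into `{a=2, b=2, c=1}`. In Hadoop the same two functions run as many parallel tasks on different machines, with the grouping done by the framework.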

![MapReduce Model](https://image.slidesharecdn.com/cs267hadoopprogramming-140803210623-phpapp01/85/Cs267-hadoop-programming-7-320.jpg)

MapReduce model: map tasks M1 to M4 each emit `<K, V>` pairs; a sort, merge & shuffle phase groups them by key into `<K1, V[ ]>` to `<K4, V[ ]>`; reduce tasks R1 to R4 process the grouped input and emit `<K, V>` pairs.
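The sort, merge & shuffle phase decides which reducer receives each key. The sketch below assumes the formula used by Hadoop's default `HashPartitioner`, `(hash & Integer.MAX_VALUE) % numReduceTasks`, but applies it to `String.hashCode()` instead of Hadoop's `Text` hash, so the reducer chosen for a given key will not match a real job. `ShuffleSketch` and its methods are hypothetical names.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

// Hypothetical sketch of "Sort, Merge & Shuffle": route each mapper key to a reducer,
// then group and sort the keys each reducer receives.
class ShuffleSketch {

    // HashPartitioner-style rule: clear the sign bit, then take the hash modulo the
    // number of reducers. NOTE: uses String.hashCode(), not Hadoop's Text hash.
    static int partitionFor(String key, int numReducers) {
        return (key.hashCode() & Integer.MAX_VALUE) % numReducers;
    }

    // Returns one key-sorted map of key -> values per reducer (index 0 is R1, and so on).
    static List<TreeMap<String, List<String>>> shuffle(List<Map.Entry<String, String>> pairs,
                                                      int numReducers) {
        List<TreeMap<String, List<String>>> reducers = new ArrayList<>();
        for (int r = 0; r < numReducers; r++) {
            reducers.add(new TreeMap<>());
        }
        for (Map.Entry<String, String> pair : pairs) {
            TreeMap<String, List<String>> target = reducers.get(partitionFor(pair.getKey(), numReducers));
            // Values for the same key are appended in arrival order.
            target.computeIfAbsent(pair.getKey(), k -> new ArrayList<>()).add(pair.getValue());
        }
        return reducers;
    }
}
```

Because every pair with the same key lands in the same partition, each reducer sees the complete `<K, V[ ]>` list for the keys assigned to it, which is what the figure's R1 to R4 rely on.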

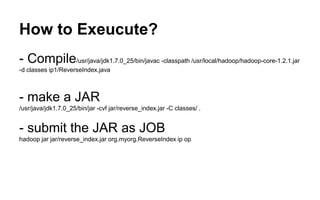

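![Job Configuration](https://image.slidesharecdn.com/cs267hadoopprogramming-140803210623-phpapp01/85/Cs267-hadoop-programming-14-320.jpg)

```java
import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.Path;
import org.apache.hadoop.io.IntWritable;
import org.apache.hadoop.io.Text;
import org.apache.hadoop.mapreduce.Job;
import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;
import org.apache.hadoop.mapreduce.lib.input.KeyValueTextInputFormat;
import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;
import org.apache.hadoop.mapreduce.lib.output.TextOutputFormat;

public static void main(String[] args) throws Exception {
    // Create Configuration
    Configuration conf = new Configuration();
    // Create Job (Job.getInstance replaces the deprecated constructor;
    // older Hadoop 1.x releases use new Job(conf, "YourApp"))
    Job job = Job.getInstance(conf, "YourApp");
    // Specify Input Directory
    FileInputFormat.addInputPath(job, new Path(args[0]));
    // Specify Output Directory
    FileOutputFormat.setOutputPath(job, new Path(args[1]));

    job.setMapperClass(Map.class);
    // Specify Input Format By Which Mapper Reads <K, V>
    job.setInputFormatClass(KeyValueTextInputFormat.class);
    // Specify Types Of The <K, V> The Mapper Emits
    job.setMapOutputKeyClass(Text.class);
    job.setMapOutputValueClass(Text.class);

    job.setReducerClass(Reduce.class);
    // Specify Types Of The <K, V> The Reducer Emits
    job.setOutputKeyClass(Text.class);
    job.setOutputValueClass(IntWritable.class);
    // Specify Output Format By Which Output Is Written To The Output Files
    job.setOutputFormatClass(TextOutputFormat.class);

    job.setJarByClass(org.myorg.YourApp.class);
    // Exit with 0 on success, 1 on failure
    System.exit(job.waitForCompletion(true) ? 0 : 1);
}
```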
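`KeyValueTextInputFormat` splits each input line at the first separator (a tab by default, configurable through `mapreduce.input.keyvaluelinerecordreader.key.value.separator`): the text before it becomes the key and the rest becomes the value; a line with no separator becomes the key with an empty value. The plain-Java sketch below imitates that rule on a `String`; `KeyValueLineSketch.split` is a hypothetical helper, not Hadoop's record reader, which works on bytes.

```java
// Hypothetical sketch of how KeyValueTextInputFormat turns one line into the mapper's <K, V>.
class KeyValueLineSketch {

    // Returns {key, value}: split at the FIRST separator only.
    static String[] split(String line, char separator) {
        int pos = line.indexOf(separator);
        if (pos == -1) {
            // No separator: the whole line is the key, the value is empty.
            return new String[] {line, ""};
        }
        // Later separators stay inside the value.
        return new String[] {line.substring(0, pos), line.substring(pos + 1)};
    }
}
```

For the reverse-index example, this implies each input line is presumably prepared as the filename, a tab, then the file contents, so that the mapper receives `K<filename>, V<rest contents>`.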

![Mapper & Reducer Algo](https://image.slidesharecdn.com/cs267hadoopprogramming-140803210623-phpapp01/85/Cs267-hadoop-programming-16-320.jpg)

```
Mapper:
    read line as K<filename>, V<rest of contents>
    tokenize V
    for every token t:
        emit K<t>, V<filename>

Reducer:
    receive K<token>, V[ ]<filenames>
    make a unique list of V[ ]
    form a comma-separated string str of the filenames in V[ ]
    emit K<token>, V<str>
```
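The Reducer's dedupe-and-join step can be checked in isolation. The sketch below is plain Java rather than the deck's `Reduce` class, which this section does not show; `postingList` is a hypothetical helper, and the `TreeSet` is an assumption chosen because it removes duplicates and also sorts the filenames, giving a deterministic output order (Hadoop does not guarantee the order of values within a key).

```java
import java.util.TreeSet;

// Hypothetical plain-Java version of the Reducer algorithm above.
class ReverseIndexReduceSketch {

    // filenames: every V emitted for one token. Returns "f1,f2,..." with each filename once.
    static String postingList(Iterable<String> filenames) {
        TreeSet<String> unique = new TreeSet<>();  // dedupes and sorts
        for (String name : filenames) {
            unique.add(name);
        }
        return String.join(",", unique);
    }
}
```

Inside a Hadoop `Reducer<Text, Text, Text, Text>`, this logic would run in `reduce(Text key, Iterable<Text> values, Context context)`. Convert each value with `toString()` before storing it, because Hadoop reuses the same `Text` object while iterating over `values`.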

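![Actual Java Program: Mapper](https://image.slidesharecdn.com/cs267hadoopprogramming-140803210623-phpapp01/85/Cs267-hadoop-programming-17-320.jpg)

```java
import java.io.IOException;
import java.util.StringTokenizer;

import org.apache.hadoop.io.Text;
import org.apache.hadoop.mapreduce.Mapper;

// Nested static class inside the driver class (e.g. org.myorg.ReverseIndex).
// Input <K, V> = <filename, line contents>; output <K, V> = <token, filename>.
public static class Map extends Mapper<Text, Text, Text, Text> {

    // Reused for every emitted key instead of allocating a new Text per token
    private final Text word = new Text();

    @Override
    public void map(Text key, Text value, Context context) throws IOException, InterruptedException {
        String line = value.toString();
        StringTokenizer tokenizer = new StringTokenizer(line);
        while (tokenizer.hasMoreTokens()) {
            String temp = tokenizer.nextToken();
            // Strip the last char if the word does not end in a letter (e.g. "data," -> "data")
            if (!temp.matches(".*[a-zA-Z]$")) {
                word.set(temp.substring(0, temp.length() - 1));
            } else {
                word.set(temp);
            }
            // Emit <token, filename>
            context.write(word, key);
        }
    }
}
```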
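The Mapper's cleanup rule is easy to misread, so the following plain-Java copy of it (hypothetical class `MapperTokenSketch`, no Hadoop dependency) makes its edge cases testable: only one trailing character is removed, leading punctuation is kept, case is preserved, and a token made of a single non-letter character becomes an empty string.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.StringTokenizer;

// Hypothetical copy of the Mapper's token rule, for checking it without a cluster.
class MapperTokenSketch {

    // Same test as the Mapper: if the token does not end in an ASCII letter,
    // drop exactly ONE trailing character.
    static String clean(String token) {
        if (!token.matches(".*[a-zA-Z]$")) {
            return token.substring(0, token.length() - 1);
        }
        return token;
    }

    // The keys the Mapper would emit for one line (each is paired with the filename).
    static List<String> tokens(String line) {
        List<String> out = new ArrayList<>();
        StringTokenizer tokenizer = new StringTokenizer(line);  // splits on whitespace
        while (tokenizer.hasMoreTokens()) {
            out.add(clean(tokenizer.nextToken()));
        }
        return out;
    }
}
```

For example, `"(note)"` becomes `"(note"`, `"end..."` becomes `"end.."`, and `"Big"` and `"big"` remain separate index keys; lowercasing or stripping every non-letter character would need a different rule.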

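![Actual Java Program: Main()](https://image.slidesharecdn.com/cs267hadoopprogramming-140803210623-phpapp01/85/Cs267-hadoop-programming-19-320.jpg)

```java
import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.Path;
import org.apache.hadoop.io.Text;
import org.apache.hadoop.mapreduce.Job;
import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;
import org.apache.hadoop.mapreduce.lib.input.KeyValueTextInputFormat;
import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;
import org.apache.hadoop.mapreduce.lib.output.TextOutputFormat;

public static void main(String[] args) throws Exception {
    Configuration conf = new Configuration();
    // Job.getInstance replaces the deprecated constructor (older Hadoop 1.x: new Job(conf, "reverse-index"))
    Job job = Job.getInstance(conf, "reverse-index");
    // args[0] = input directory, args[1] = output directory (must not already exist)
    FileInputFormat.addInputPath(job, new Path(args[0]));
    FileOutputFormat.setOutputPath(job, new Path(args[1]));

    // Mapper reads <filename, contents> lines and emits <token, filename>
    job.setMapperClass(Map.class);
    job.setInputFormatClass(KeyValueTextInputFormat.class);
    job.setMapOutputKeyClass(Text.class);
    job.setMapOutputValueClass(Text.class);

    // Reducer emits <token, comma-separated filenames> as text
    job.setReducerClass(Reduce.class);
    job.setOutputKeyClass(Text.class);
    job.setOutputValueClass(Text.class);
    job.setOutputFormatClass(TextOutputFormat.class);

    job.setJarByClass(org.myorg.ReverseIndex.class);
    // Exit with 0 on success, 1 on failure
    System.exit(job.waitForCompletion(true) ? 0 : 1);
}
```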