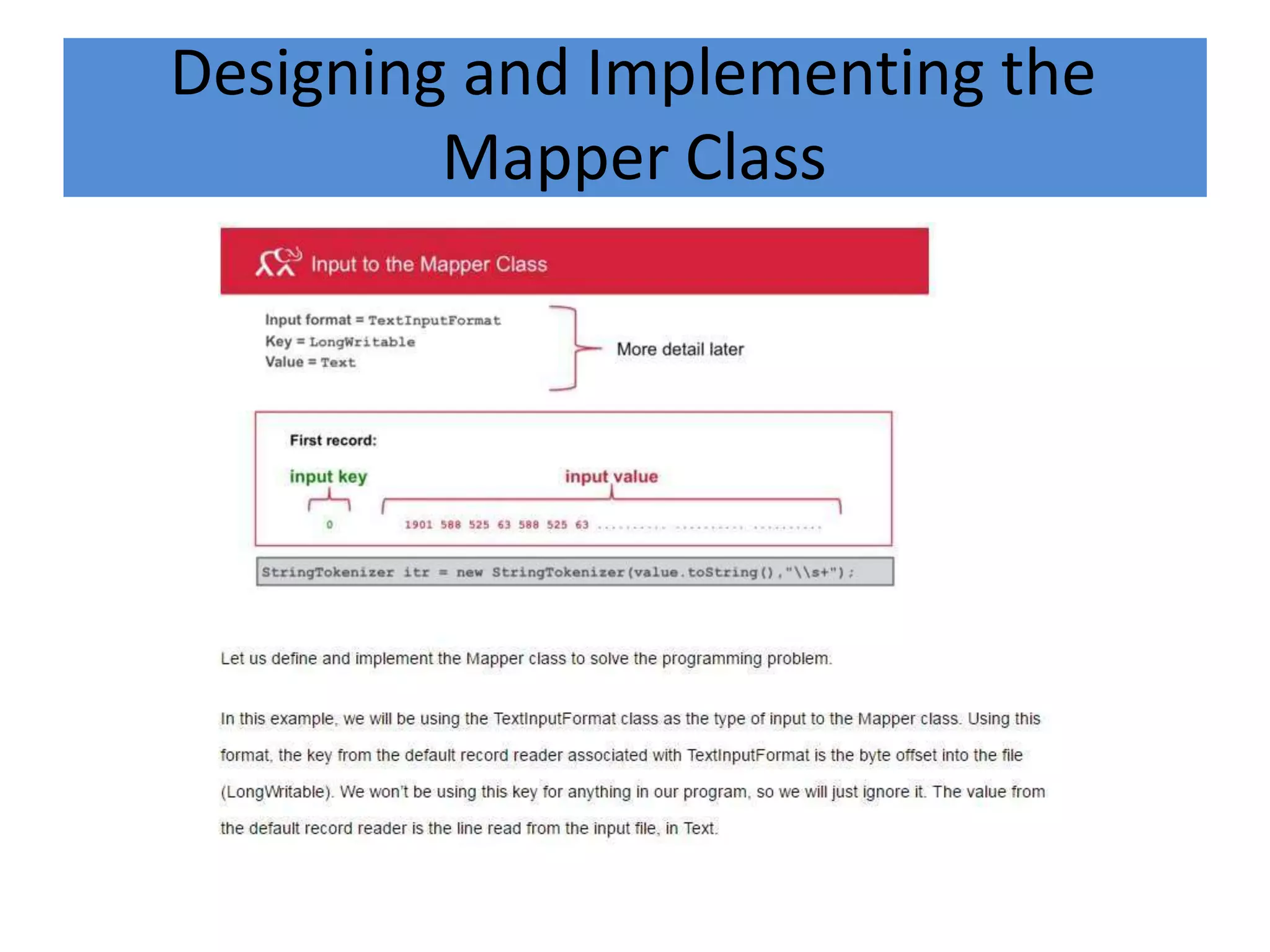

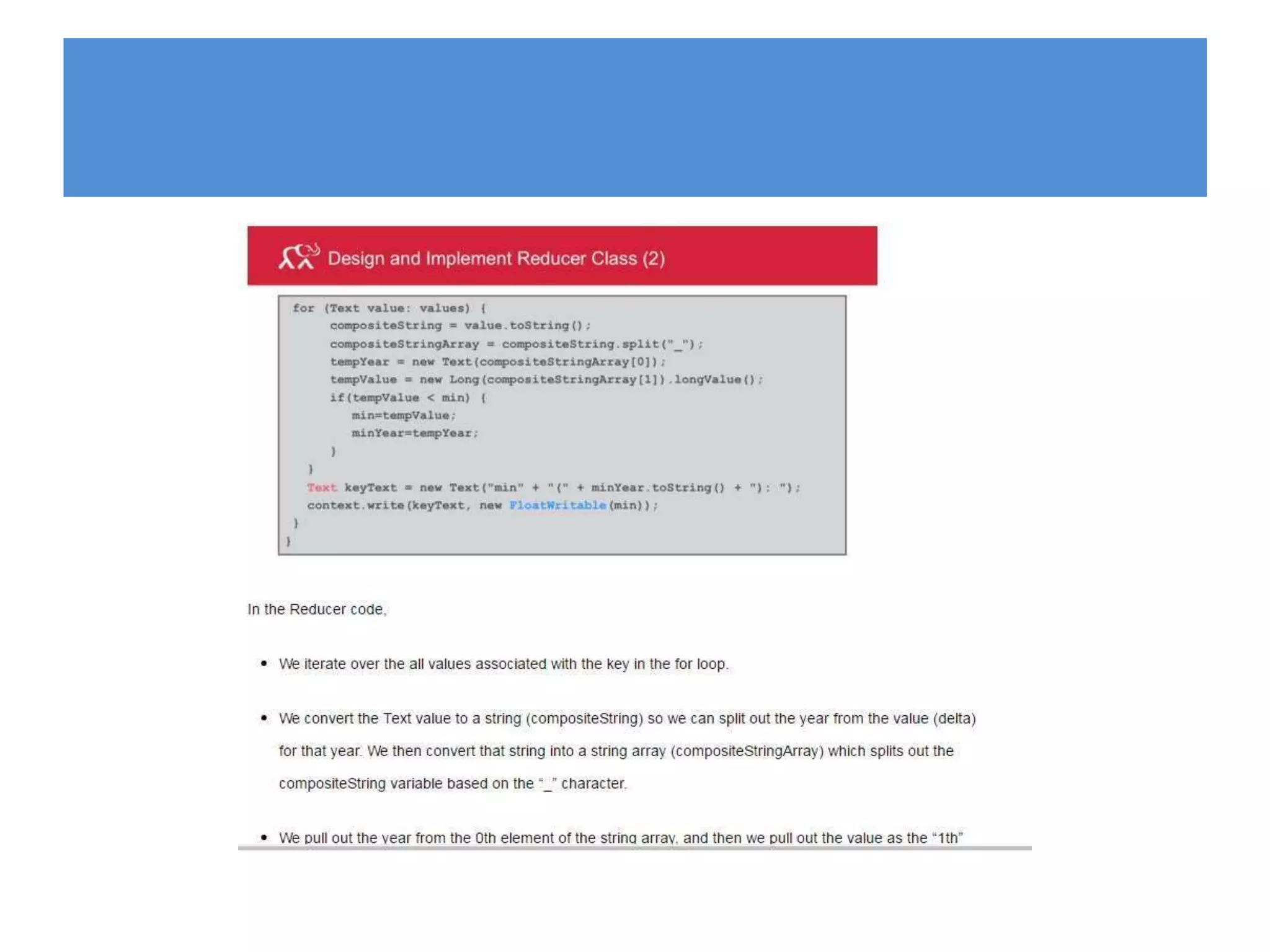

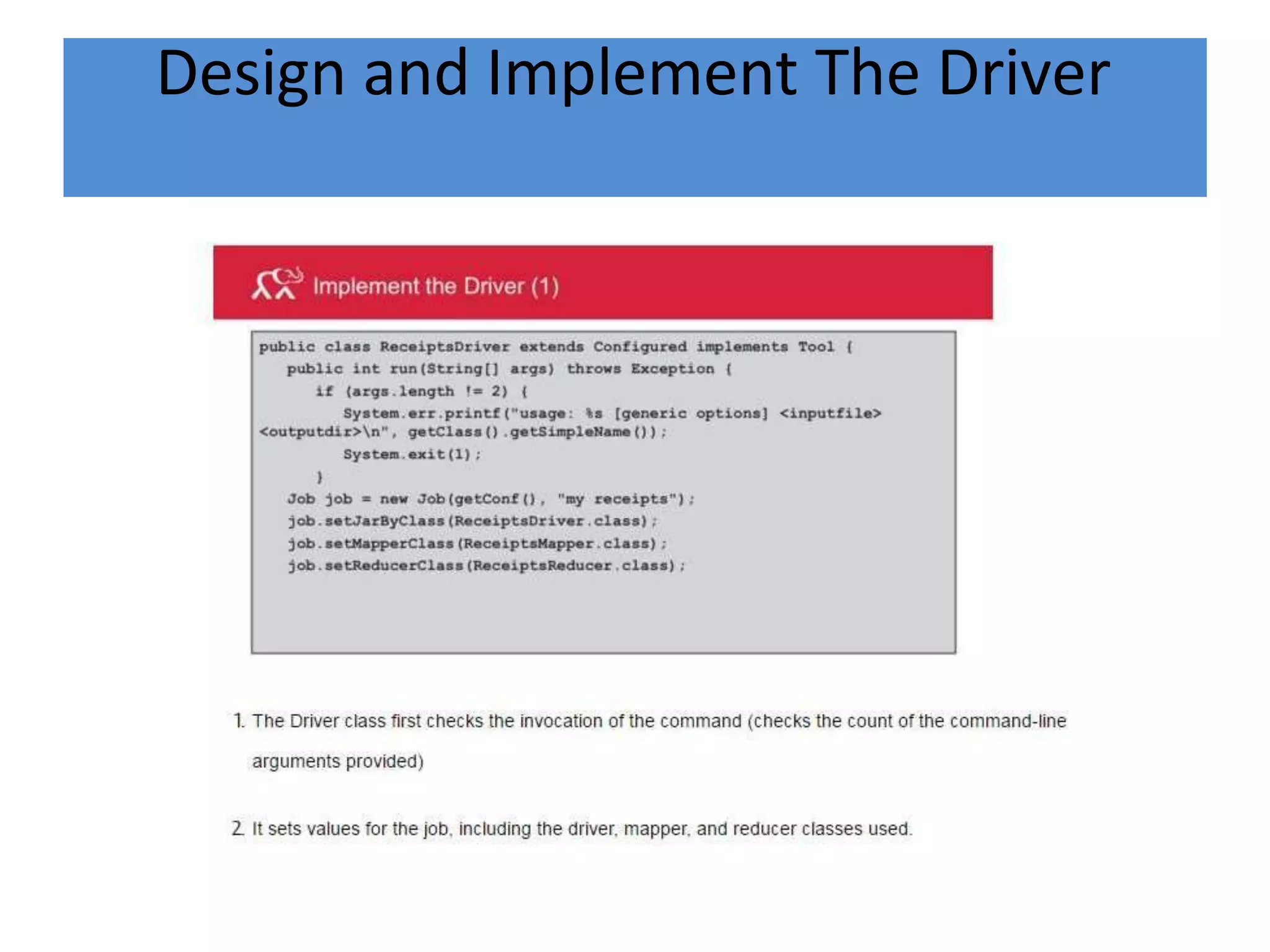

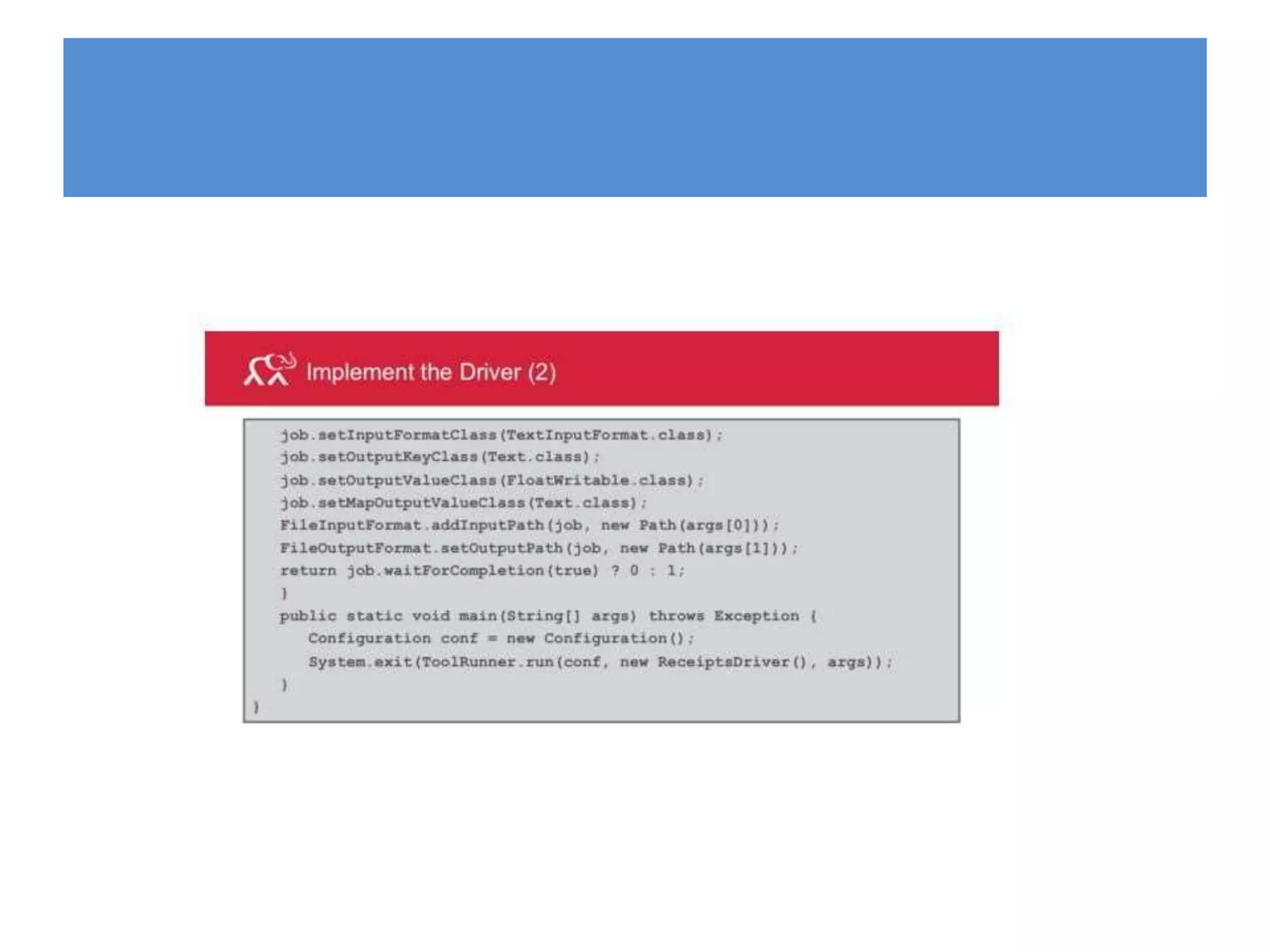

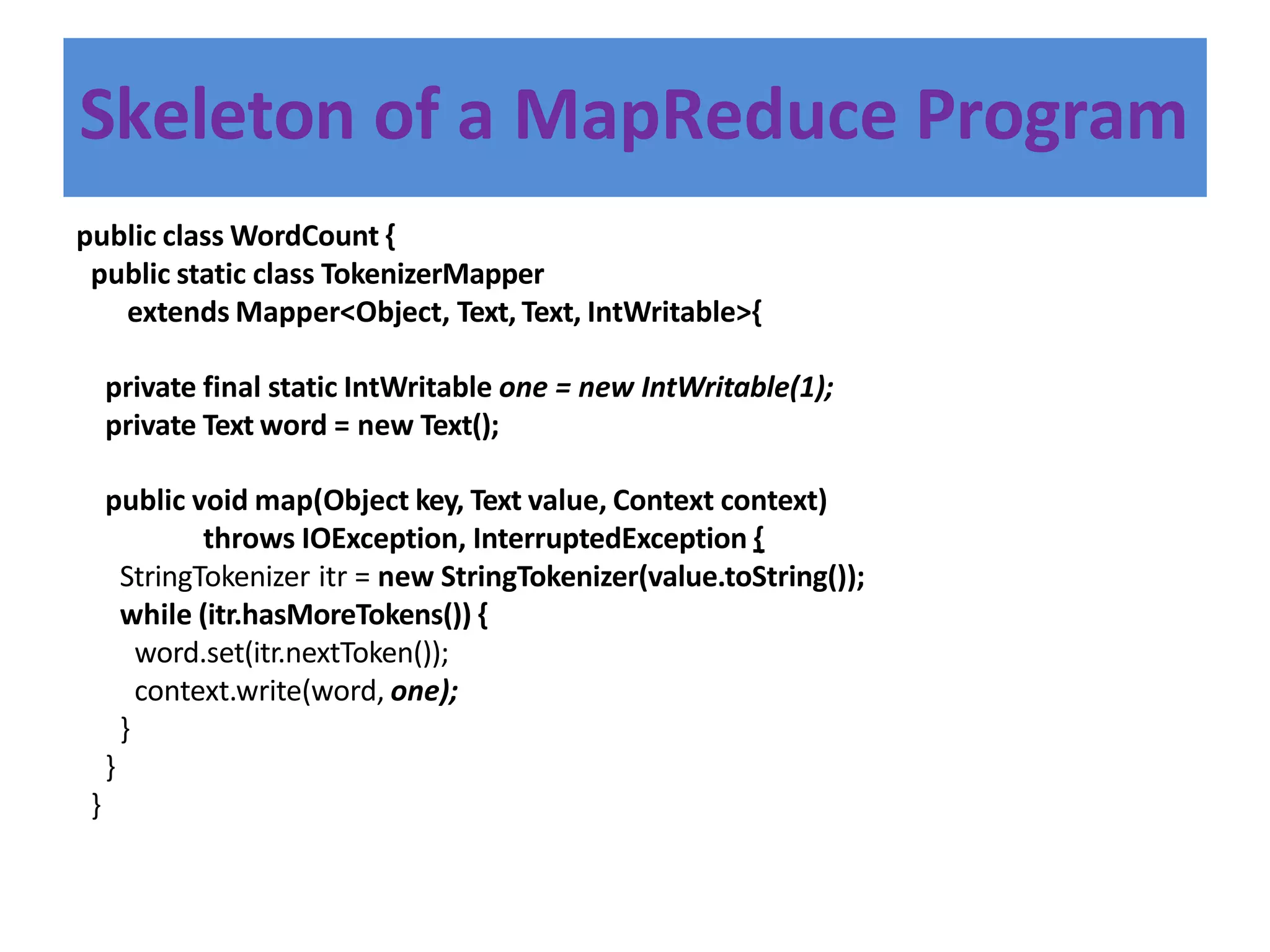

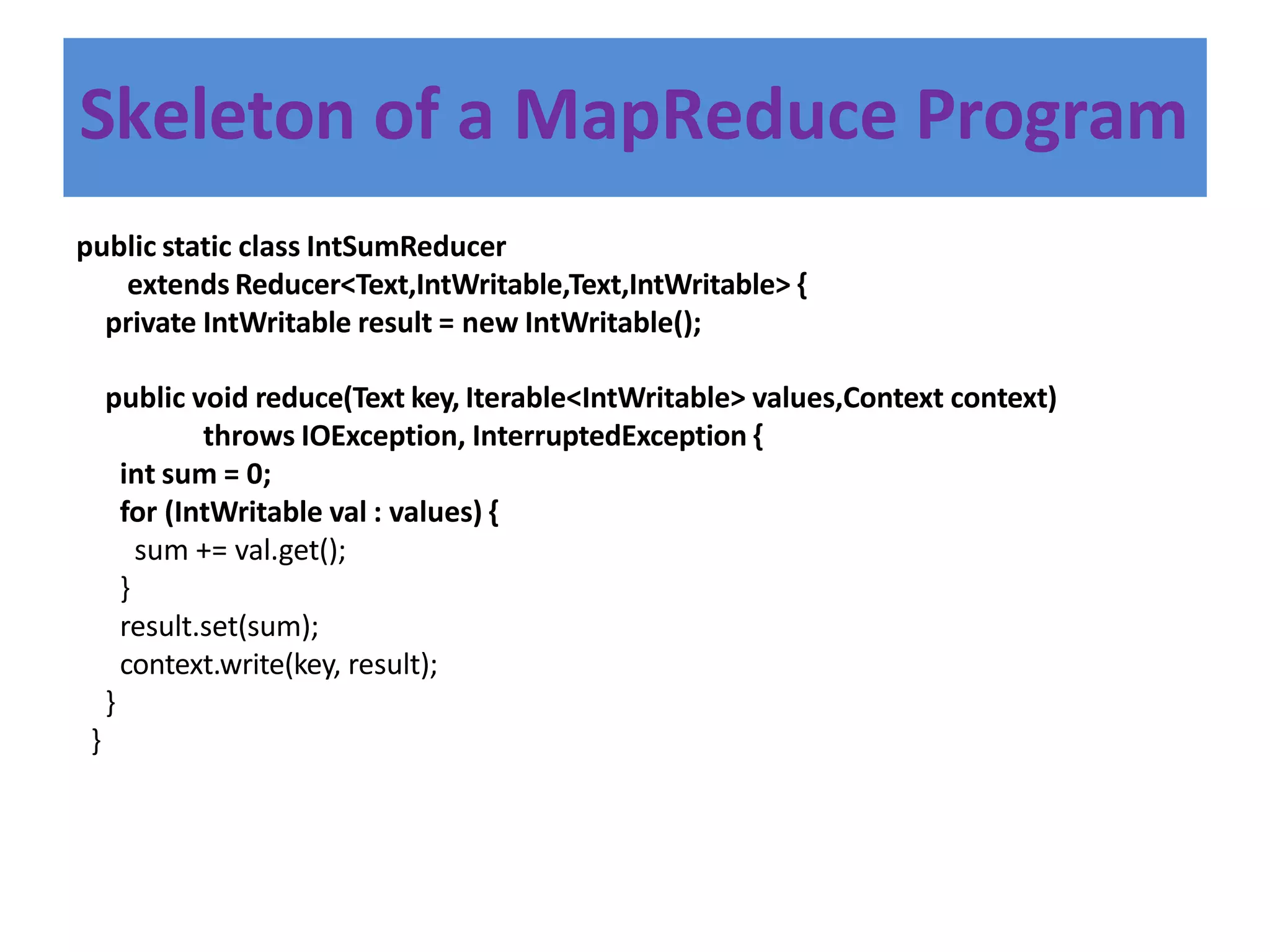

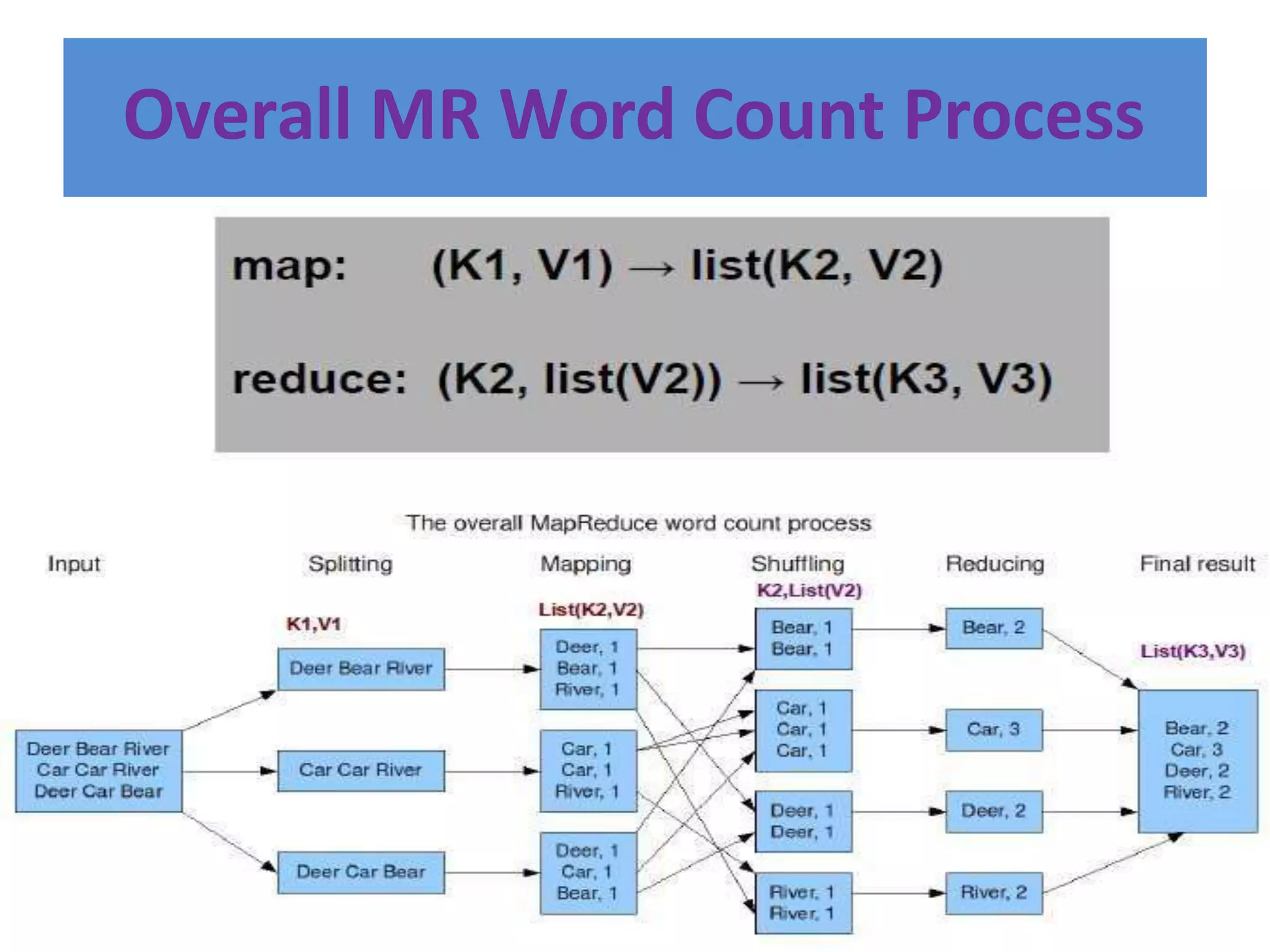

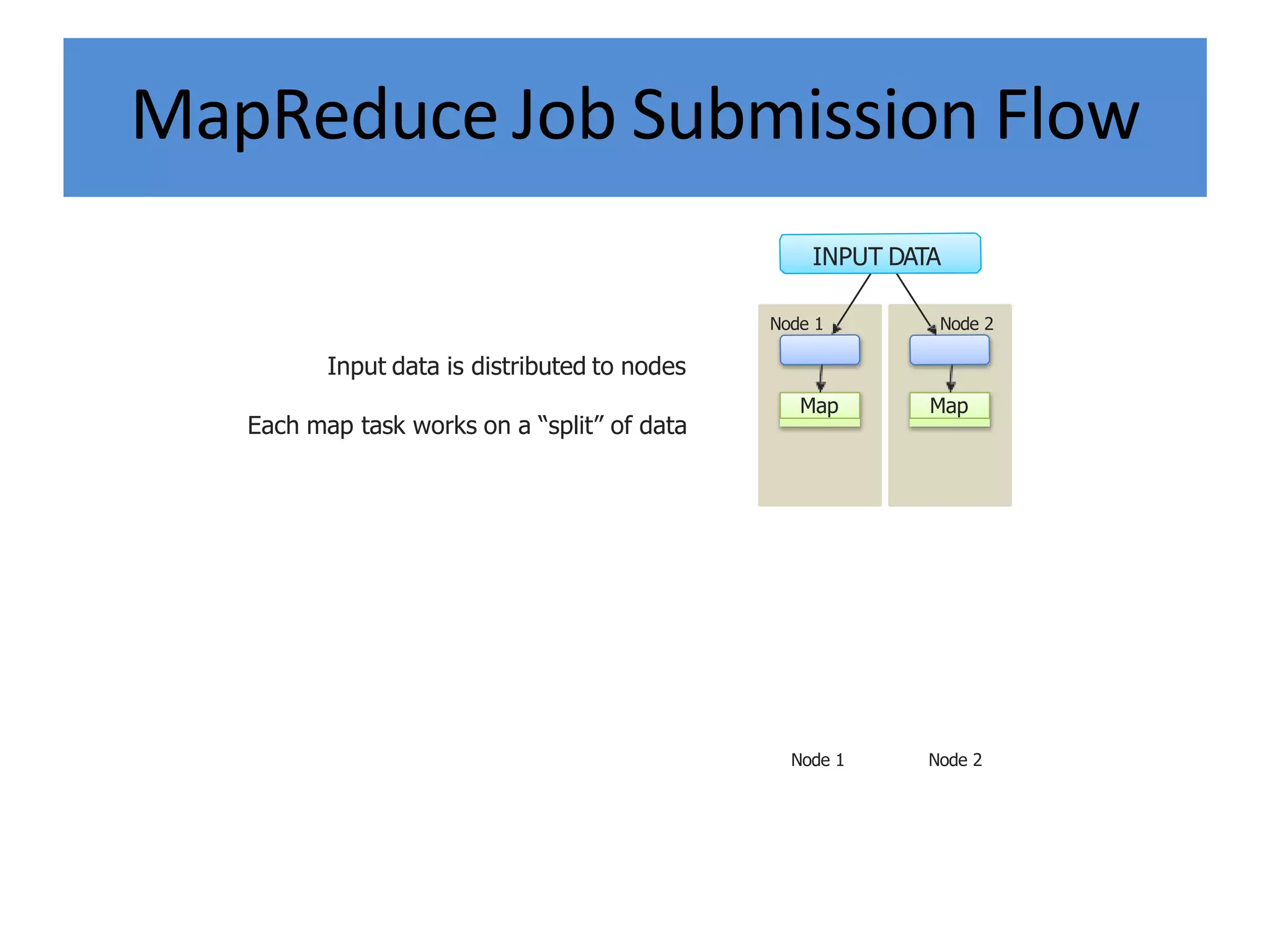

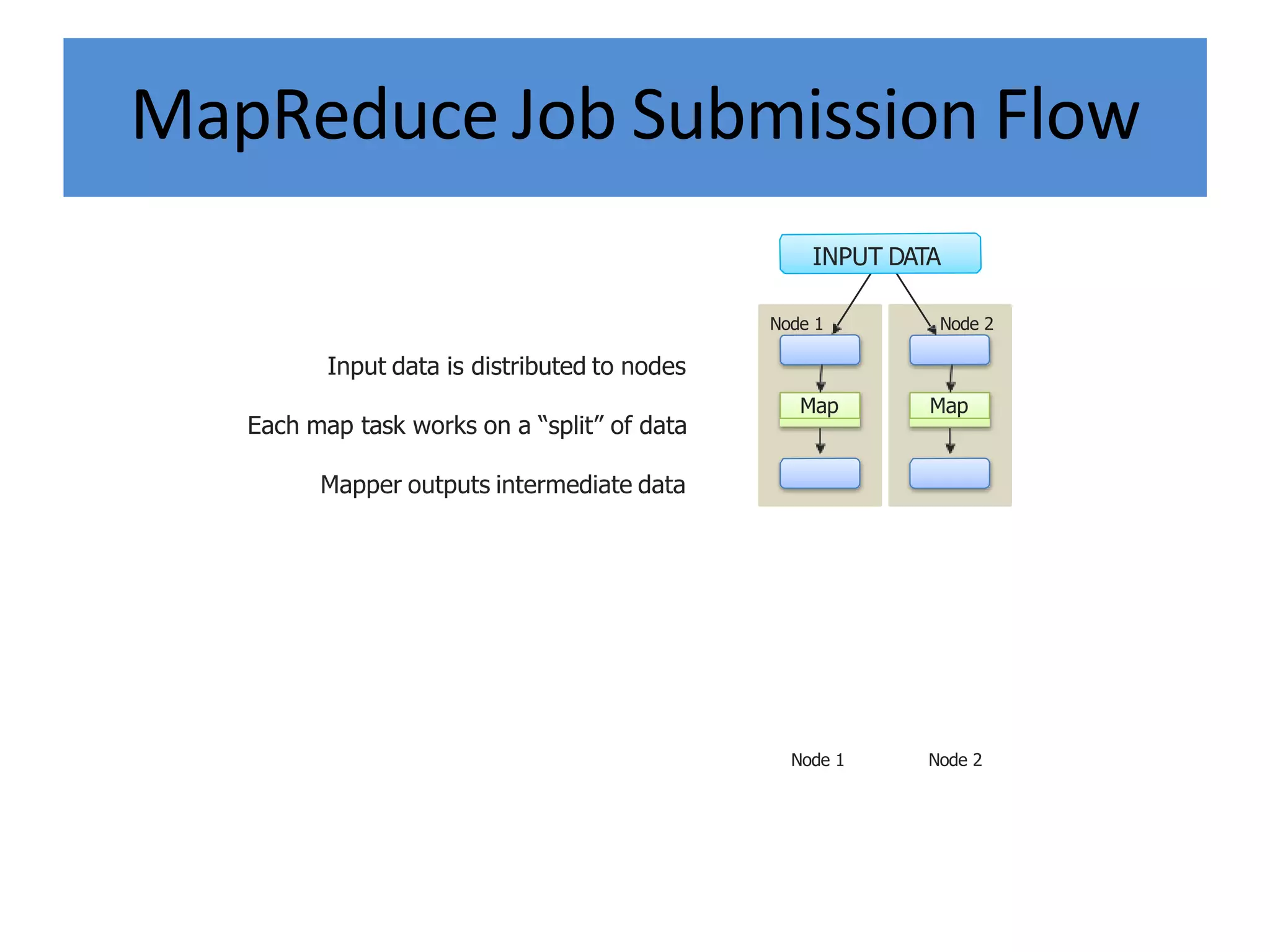

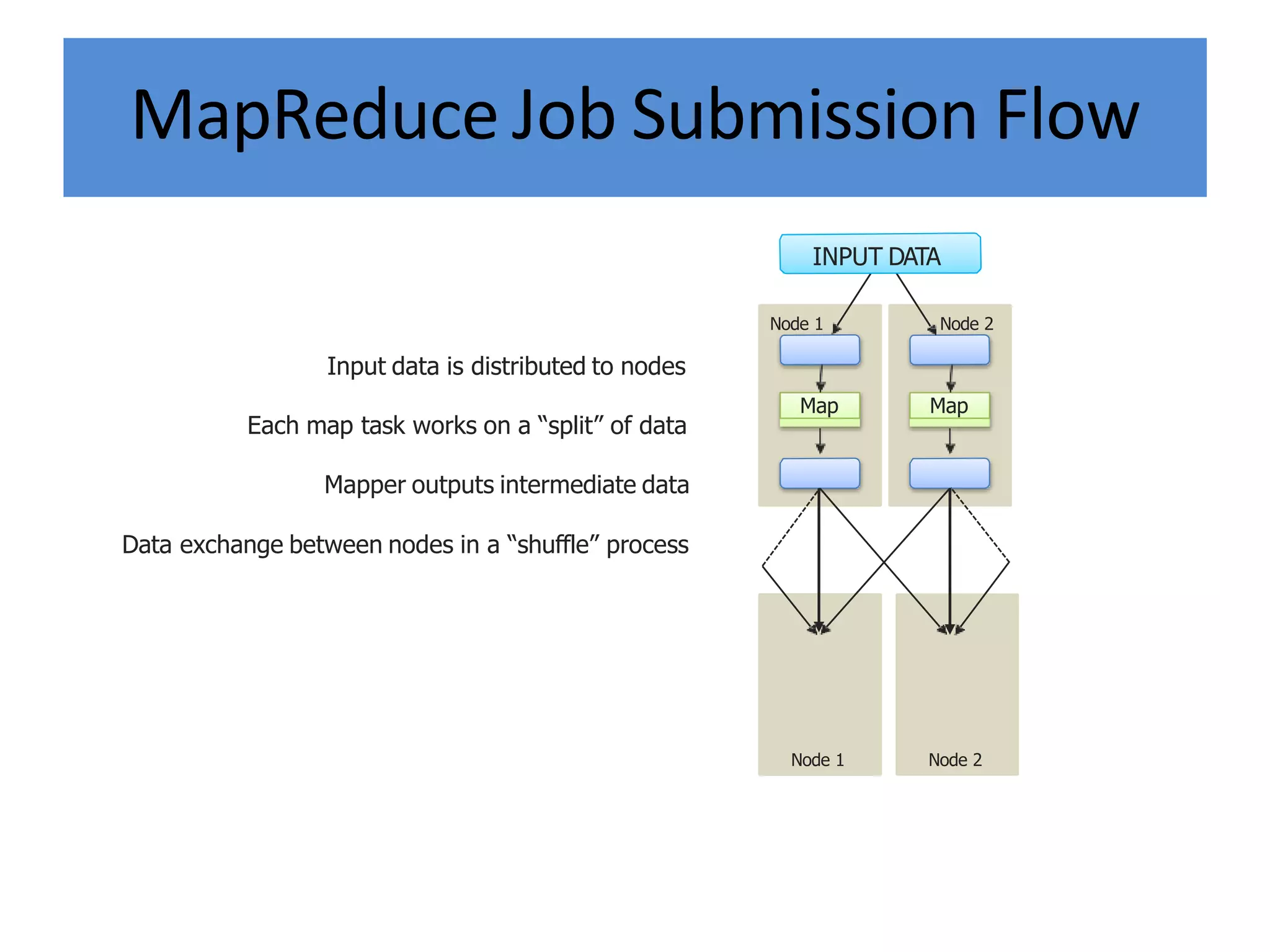

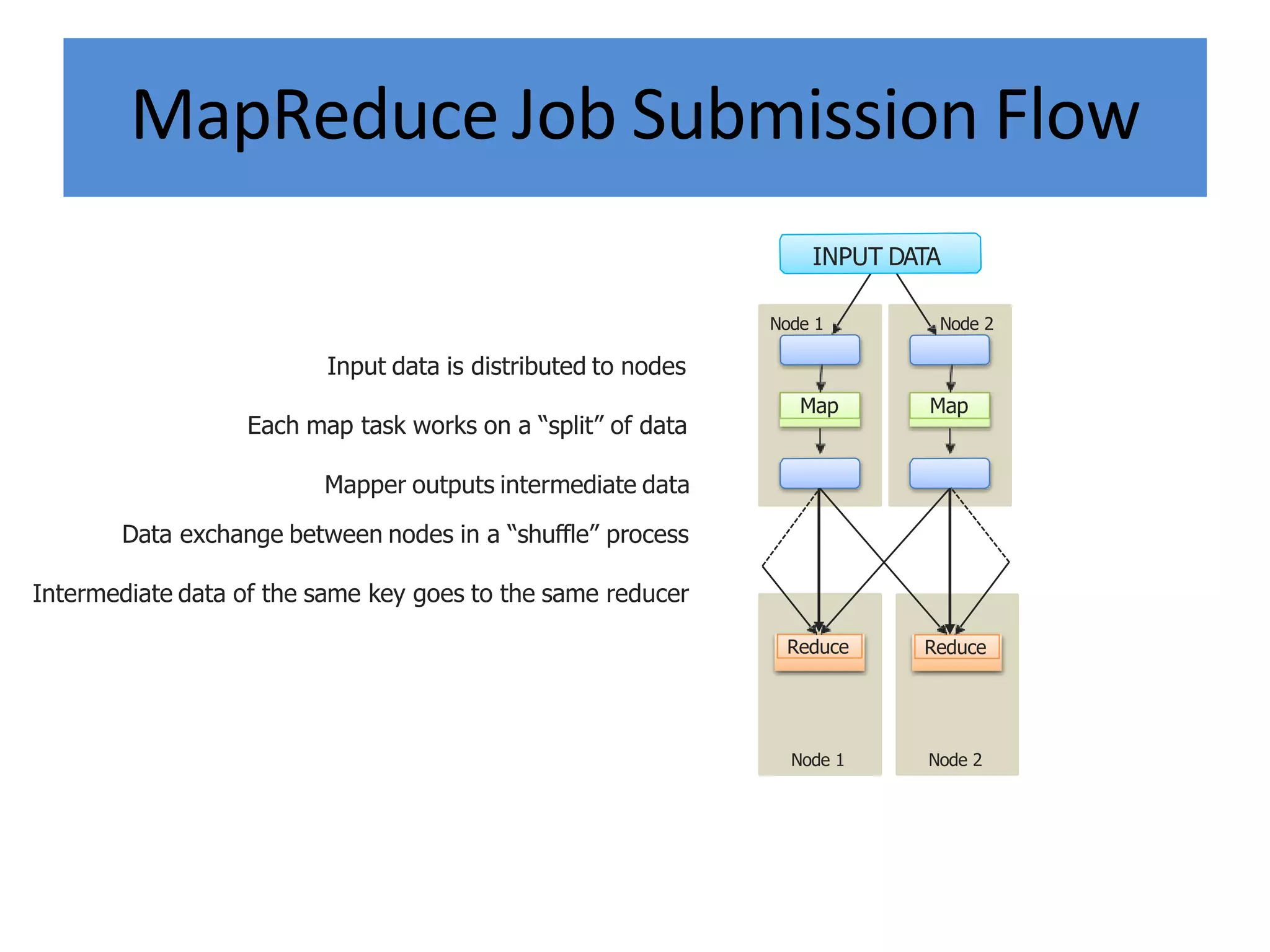

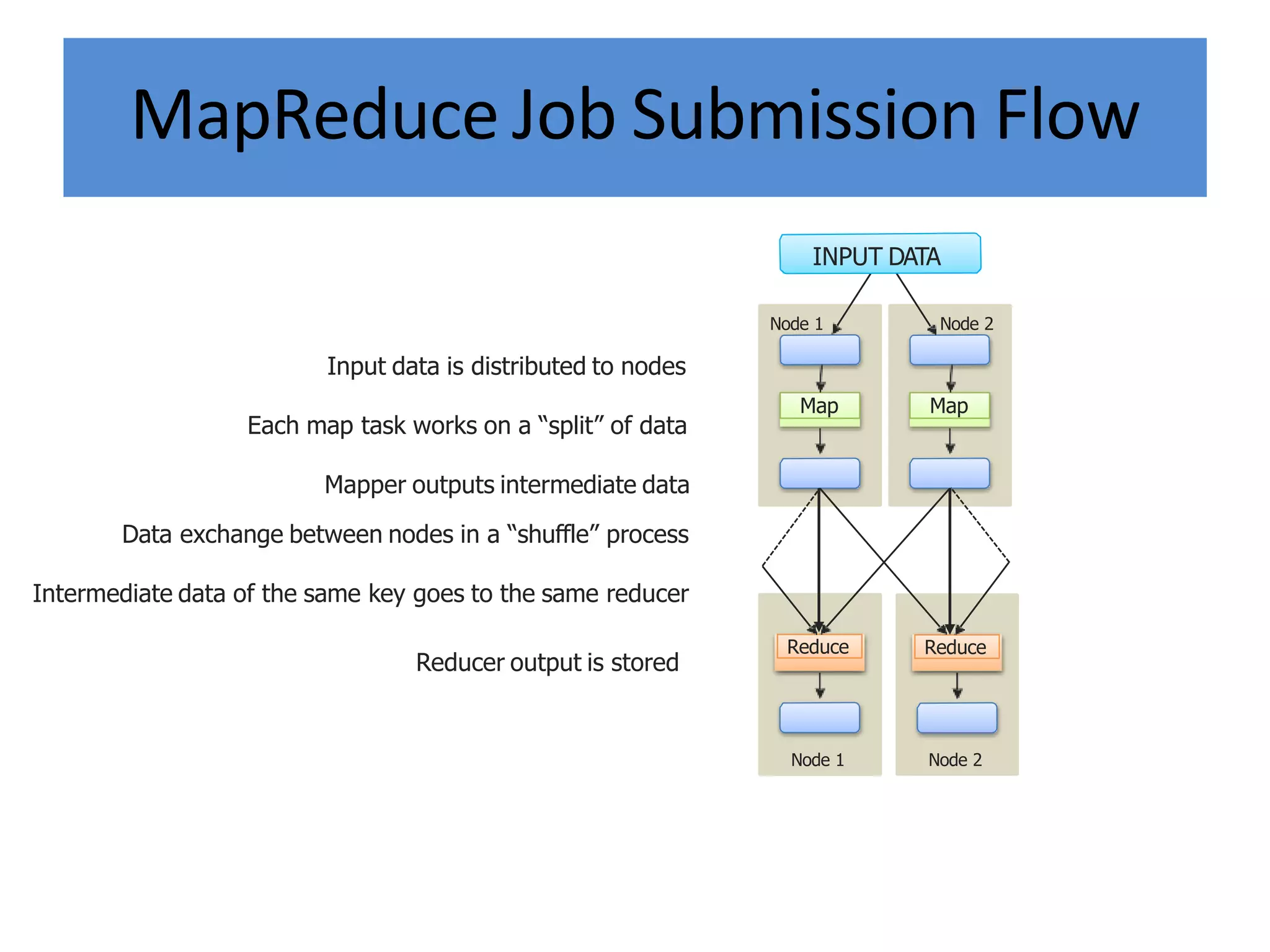

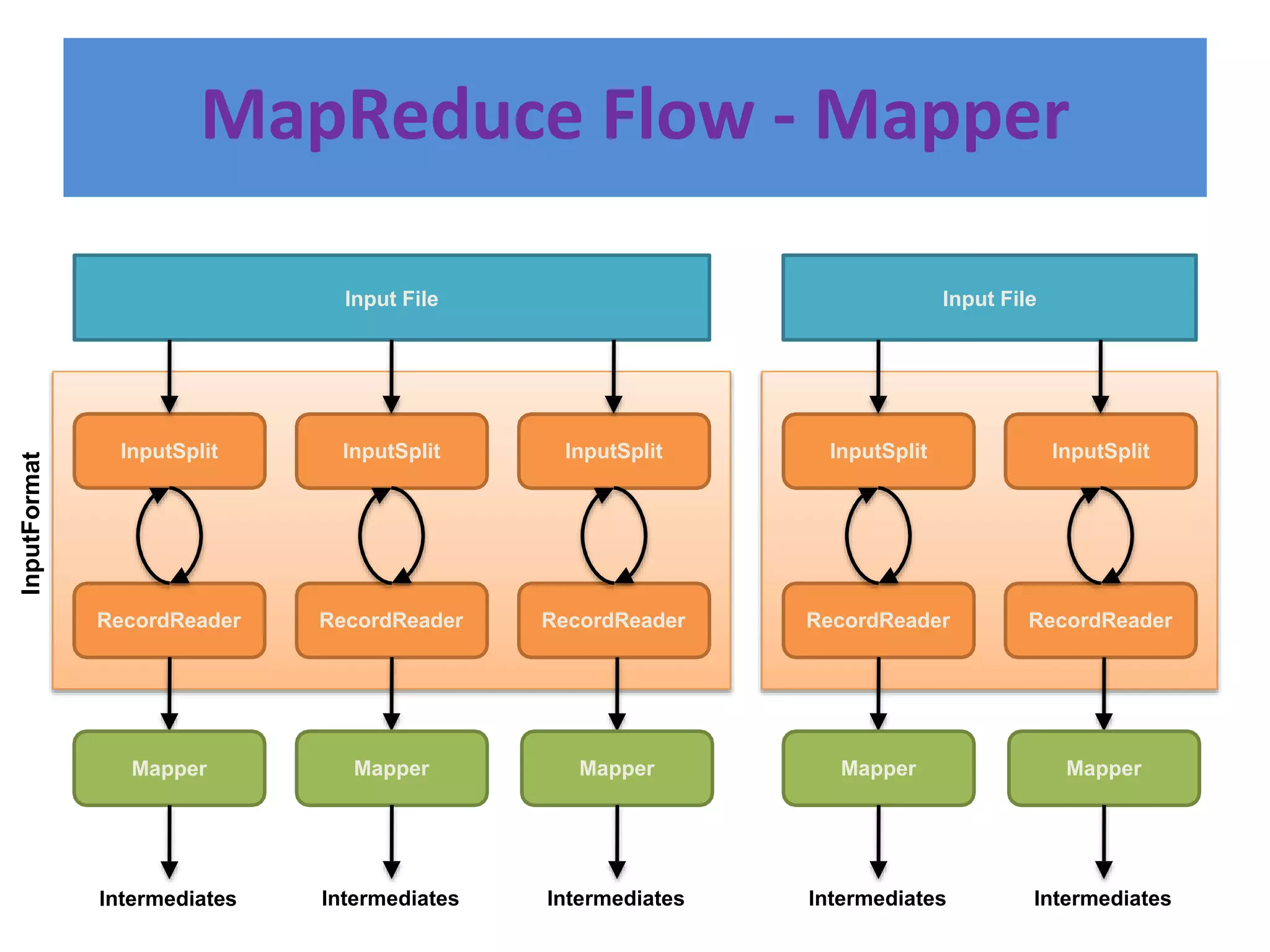

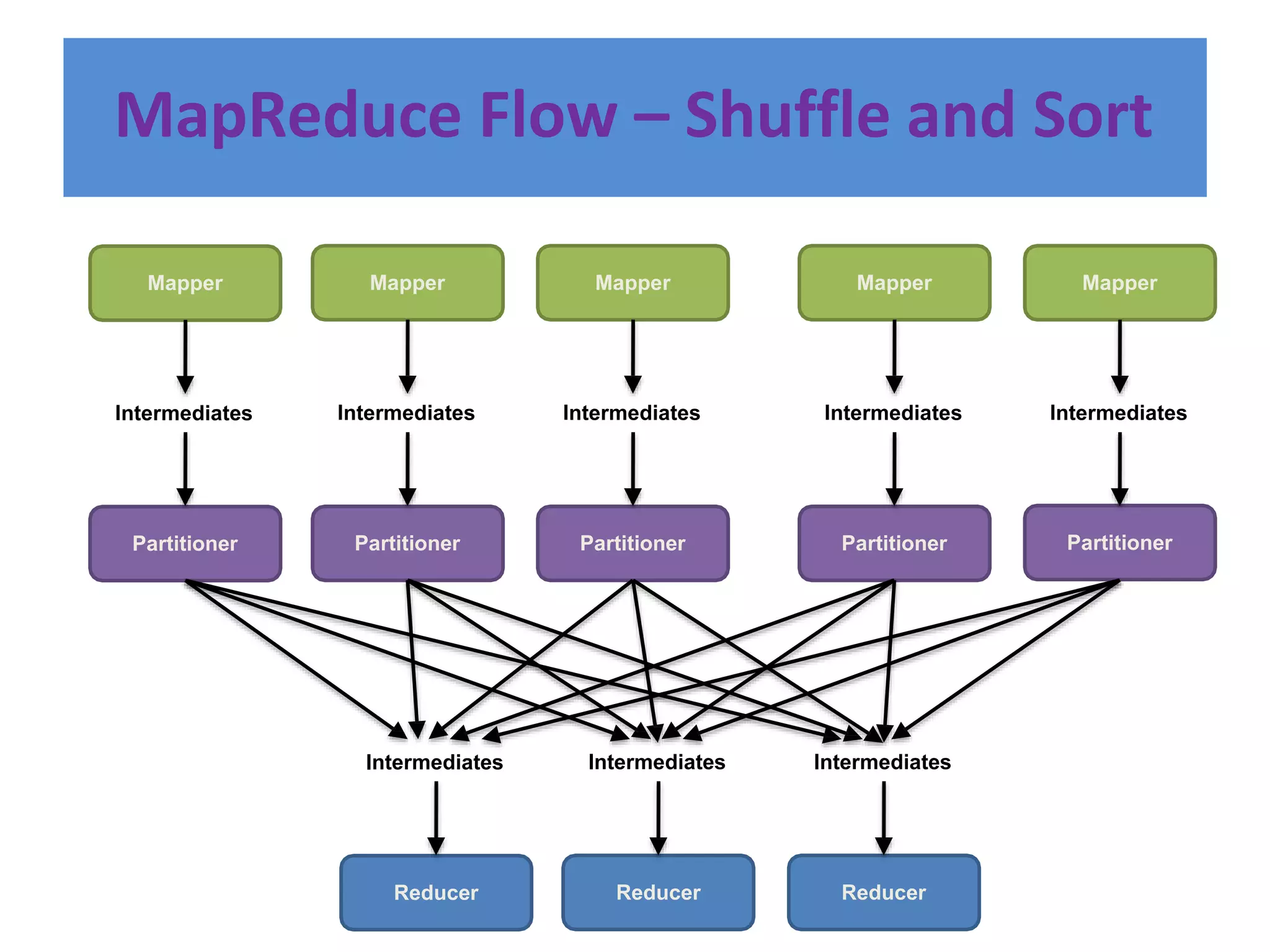

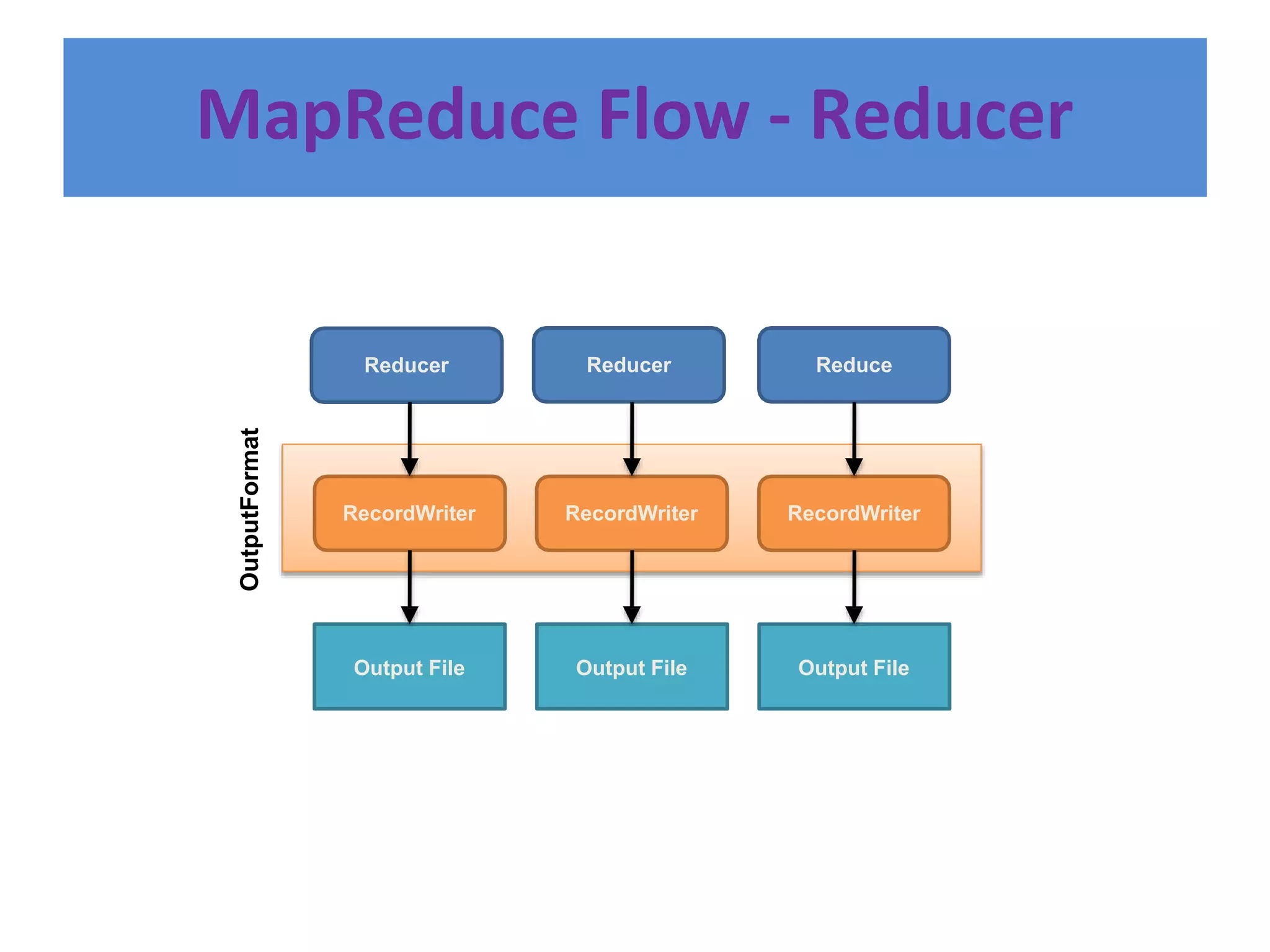

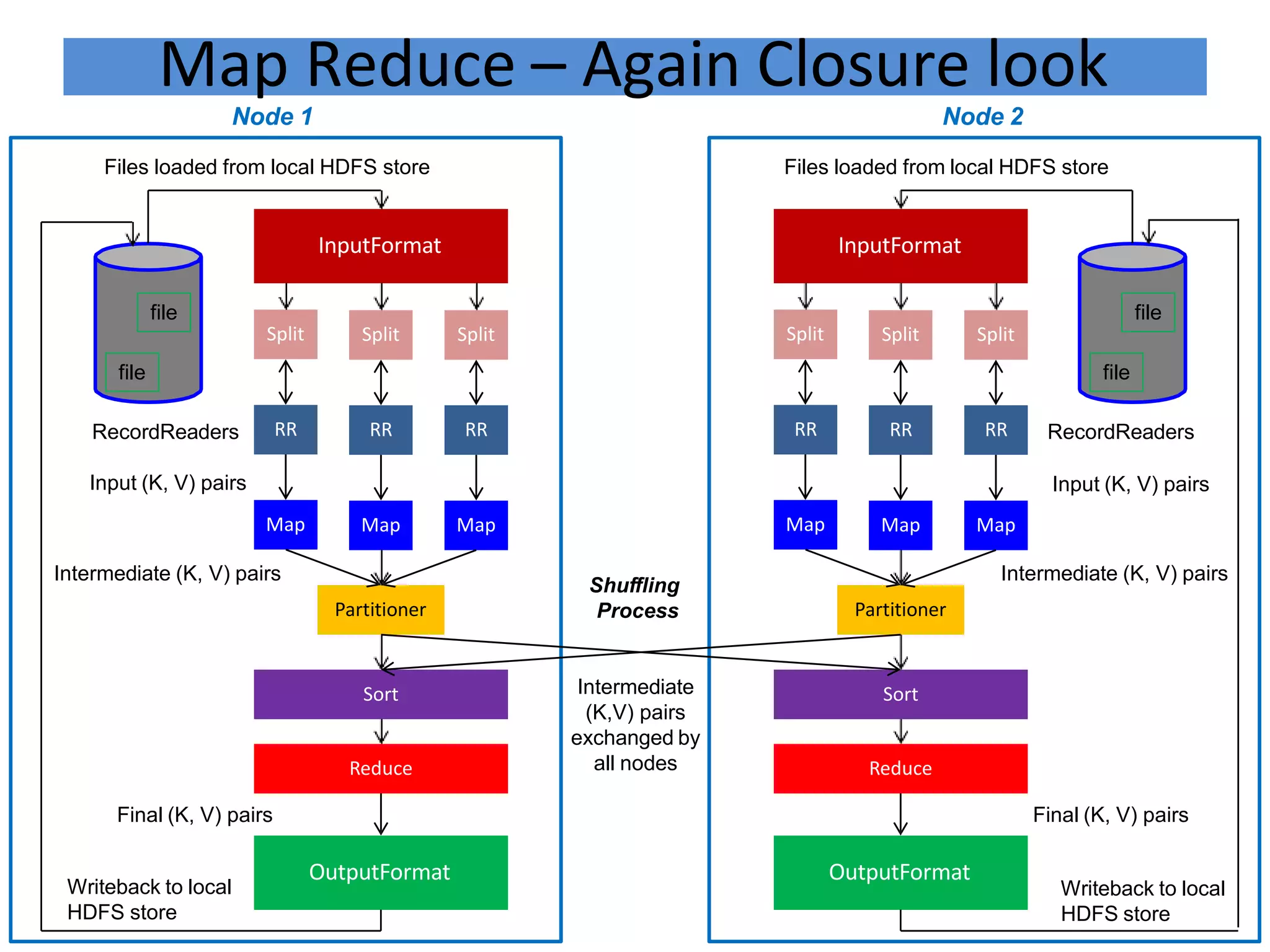

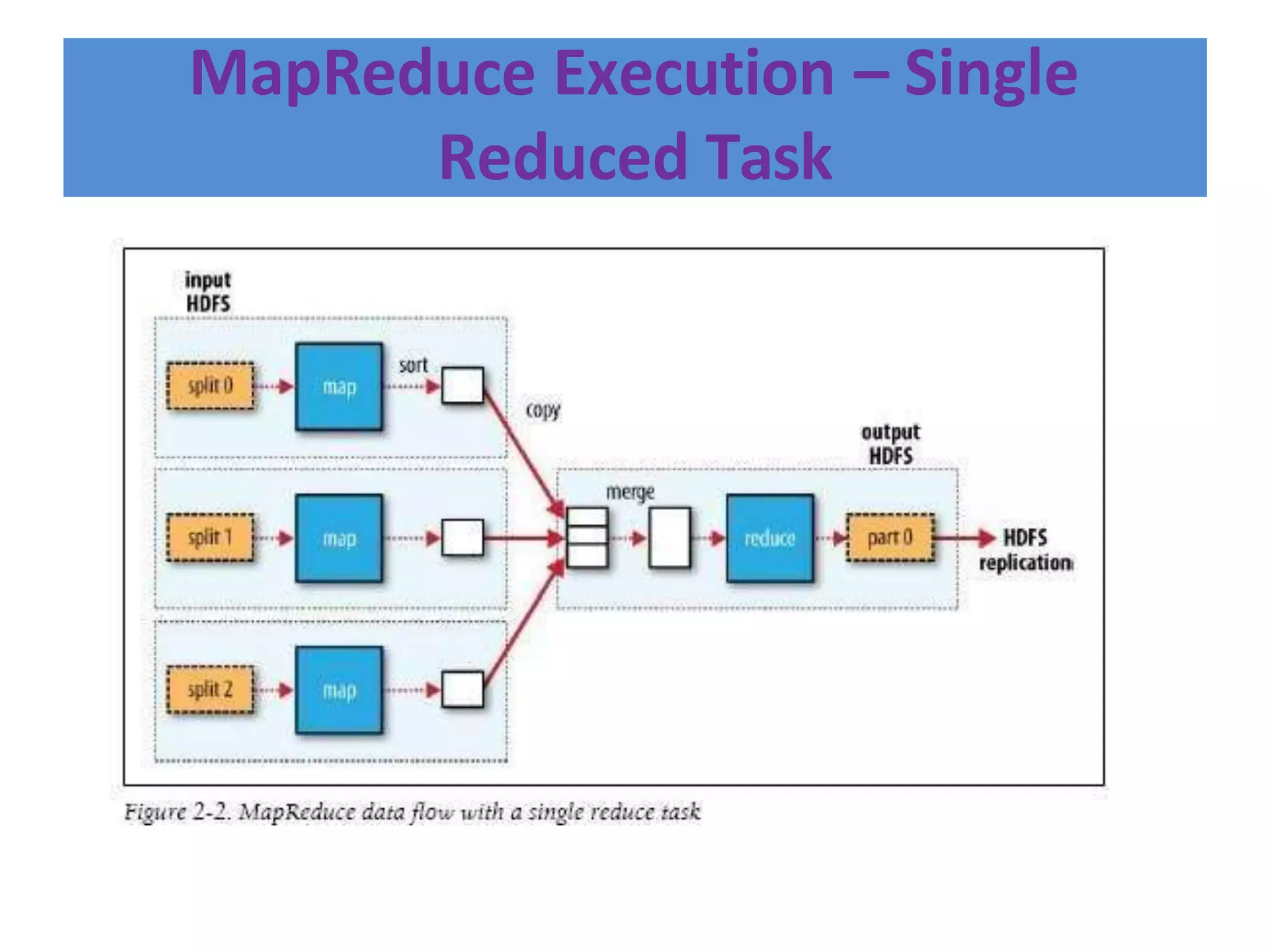

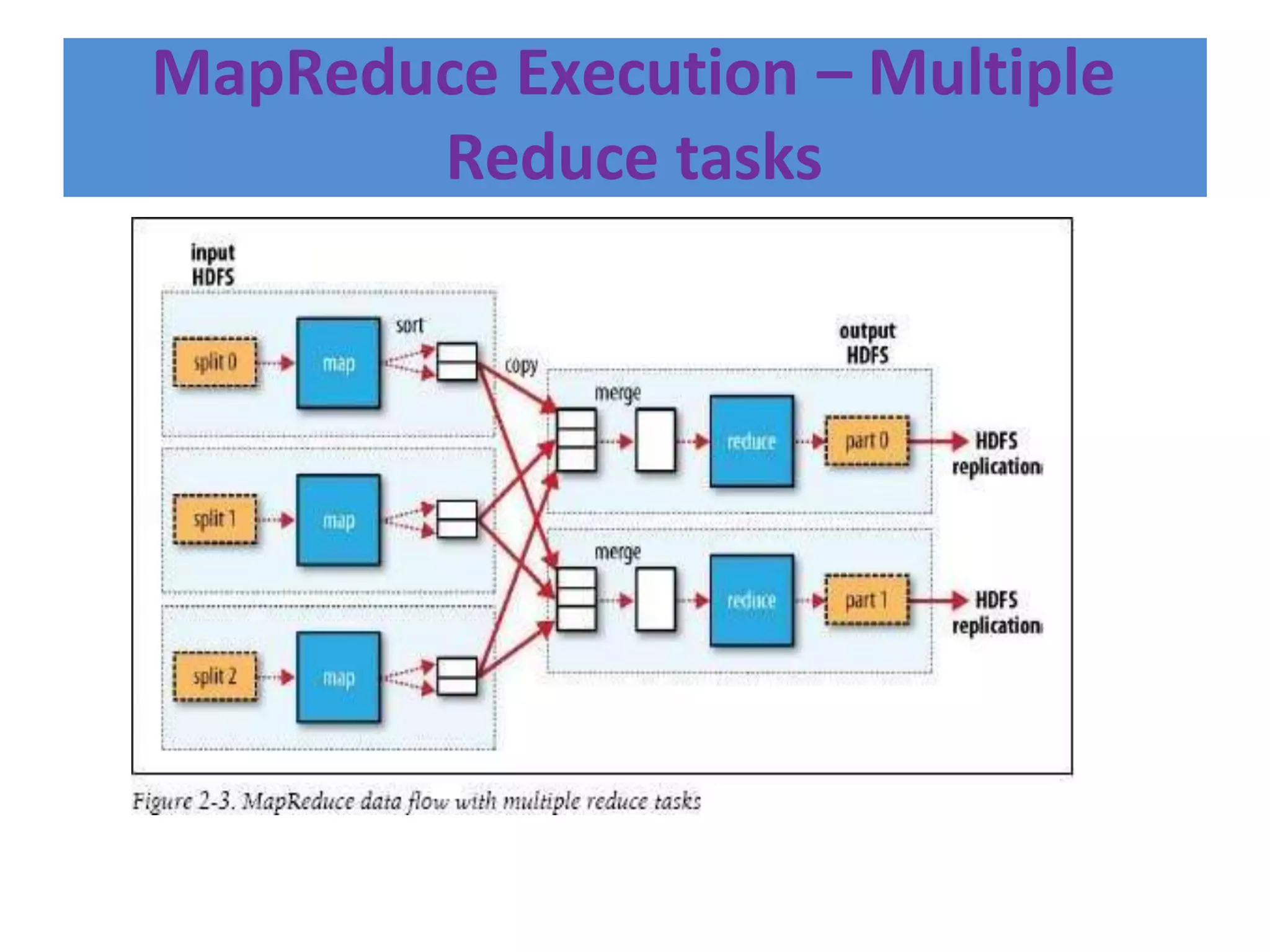

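Hadoop is an open-source software framework for distributed storage and processing of large datasets across clusters of computers. It stores data in HDFS (the Hadoop Distributed File System), which makes storage reliable by replicating each block on several machines. It processes that data with MapReduce, a programming model for running computations in parallel across the cluster. A MapReduce job has two user-supplied phases:

- Mappers process input key-value pairs in parallel and emit intermediate key-value pairs.
- The framework then shuffles and sorts these pairs by key.
- Reducers aggregate all values that share a key.

For example, in word count a mapper emits (word, 1) for every word it reads, and the reducer sums those 1s for each word.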
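
The following sketch makes the map, shuffle and reduce steps concrete with an in-memory word count in plain Java. It is an illustration under stated assumptions, not the Hadoop API:

- The class `WordCountFlow` and its methods are hypothetical names.
- Everything runs sequentially in one process.
- The partitioning step borrows the formula of Hadoop's default `HashPartitioner`, applied to `String.hashCode()`. The reducer a given word lands on will therefore differ from a real cluster.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

// Hypothetical in-memory model of a word-count MapReduce job (not Hadoop code).
class WordCountFlow {

    // Map phase: split one input line into intermediate (word, 1) pairs.
    static List<Map.Entry<String, Integer>> map(String line) {
        List<Map.Entry<String, Integer>> out = new ArrayList<>();
        for (String token : line.trim().split("\\s+")) {
            if (!token.isEmpty()) {
                out.add(Map.entry(token, 1));
            }
        }
        return out;
    }

    // Shuffle: send each pair to a reducer partition chosen by key hash and
    // group the values by key (TreeMap keeps keys sorted within a partition).
    static List<TreeMap<String, List<Integer>>> shuffle(
            List<Map.Entry<String, Integer>> pairs, int numReducers) {
        List<TreeMap<String, List<Integer>>> partitions = new ArrayList<>();
        for (int i = 0; i < numReducers; i++) {
            partitions.add(new TreeMap<>());
        }
        for (Map.Entry<String, Integer> pair : pairs) {
            // Same formula as Hadoop's default HashPartitioner.
            int r = (pair.getKey().hashCode() & Integer.MAX_VALUE) % numReducers;
            partitions.get(r)
                    .computeIfAbsent(pair.getKey(), k -> new ArrayList<>())
                    .add(pair.getValue());
        }
        return partitions;
    }

    // Reduce phase: sum every count emitted for one key.
    static int reduce(String key, List<Integer> values) {
        int sum = 0;
        for (int v : values) {
            sum += v;
        }
        return sum;
    }

    // Drives the three phases. The map loop is sequential here, whereas
    // Hadoop runs one map task per input split in parallel.
    static Map<String, Integer> run(List<String> lines, int numReducers) {
        List<Map.Entry<String, Integer>> intermediate = new ArrayList<>();
        for (String line : lines) {
            intermediate.addAll(map(line));
        }
        Map<String, Integer> result = new TreeMap<>(); // merged and sorted for display only
        for (TreeMap<String, List<Integer>> partition : shuffle(intermediate, numReducers)) {
            for (Map.Entry<String, List<Integer>> group : partition.entrySet()) {
                result.put(group.getKey(), reduce(group.getKey(), group.getValue()));
            }
        }
        return result;
    }

    public static void main(String[] args) {
        List<String> input = List.of("the quick fox", "the lazy dog", "the fox");
        System.out.println(run(input, 2)); // prints {dog=1, fox=2, lazy=1, quick=1, the=3}
    }
}
```

In a real job each reducer writes its own output file (`part-r-00000`, `part-r-00001`, and so on). Keys are sorted only within a partition. The sketch merges all partitions into one sorted map purely for readability, and changing the number of reducers does not change the totals.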

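![Skeleton of a MapReduce program](https://image.slidesharecdn.com/mapreduceindatascience-230308075321-898b053d/75/MAP-REDUCE-IN-DATA-SCIENCE-pptx-21-2048.jpg)

Skeleton of a MapReduce program (driver for the word-count job):

```java
import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.FileSystem;
import org.apache.hadoop.fs.Path;
import org.apache.hadoop.io.IntWritable;
import org.apache.hadoop.io.Text;
import org.apache.hadoop.mapreduce.Job;
import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;
import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;

public class WordCount {
    // TokenizerMapper and IntSumReducer are assumed to be nested classes of WordCount (not shown on the slide).

    public static void main(String[] args) throws Exception {
        Configuration conf = new Configuration();
        Job job = Job.getInstance(conf, "word count");
        job.setJarByClass(WordCount.class);         // jar that contains the job's classes
        job.setMapperClass(TokenizerMapper.class);  // map phase
        job.setReducerClass(IntSumReducer.class);   // reduce phase
        job.setOutputKeyClass(Text.class);          // type of output keys
        job.setOutputValueClass(IntWritable.class); // type of output values
        FileInputFormat.addInputPath(job, new Path(args[0]));
        FileOutputFormat.setOutputPath(job, new Path(args[1]));
        // Remove old output first: the job fails if the output directory already exists.
        FileSystem.get(conf).delete(new Path(args[1]), true);
        // Report success or failure to the shell through the exit code.
        System.exit(job.waitForCompletion(true) ? 0 : 1);
    }
}
```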

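![ToolRunner](https://image.slidesharecdn.com/mapreduceindatascience-230308075321-898b053d/75/MAP-REDUCE-IN-DATA-SCIENCE-pptx-67-2048.jpg)

Running the job through `ToolRunner`:

```java
import org.apache.hadoop.conf.Configured;
import org.apache.hadoop.fs.FileSystem;
import org.apache.hadoop.fs.Path;
import org.apache.hadoop.mapreduce.Job;
import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;
import org.apache.hadoop.util.Tool;
import org.apache.hadoop.util.ToolRunner;

public class multiInputFile extends Configured implements Tool {

    @Override
    public int run(String[] args) throws Exception {
        // Reuse the Configuration that ToolRunner filled in from generic options
        // such as -D; creating a new Configuration() here would discard them.
        Job job = Job.getInstance(getConf());
        job.setJarByClass(multiInputFile.class);
        // …..
        // ……
        // ……
        FileOutputFormat.setOutputPath(job, new Path(args[2]));
        FileSystem.get(getConf()).delete(new Path(args[2]), true);
        return job.waitForCompletion(true) ? 0 : 1;
    }

    public static void main(String[] args) throws Exception {
        // ToolRunner parses generic options, then calls run() with the remaining arguments.
        int ecode = ToolRunner.run(new multiInputFile(), args);
        System.exit(ecode);
    }
}
```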
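
Before calling `run`, `ToolRunner` strips Hadoop's generic options from the command line and stores them in the tool's `Configuration`, which `run` can read through `getConf()`. The sketch below shows the idea, assuming only the `-D key=value` form. It uses hypothetical names (`GenericOptionsSketch`, `Parsed`, `parse`) and is not Hadoop's `GenericOptionsParser`, which also understands options such as `-conf`, `-fs`, `-files` and `-libjars`.

```java
import java.util.Arrays;
import java.util.LinkedHashMap;
import java.util.Map;

// Hypothetical, simplified model of ToolRunner's argument handling (not Hadoop code).
class GenericOptionsSketch {

    // Result of parsing: configuration properties plus the arguments left for run().
    static final class Parsed {
        final Map<String, String> conf;
        final String[] remaining;

        Parsed(Map<String, String> conf, String[] remaining) {
            this.conf = conf;
            this.remaining = remaining;
        }
    }

    // Consumes leading "-D key=value" pairs and stops at the first application argument.
    static Parsed parse(String[] args) {
        Map<String, String> conf = new LinkedHashMap<>();
        int i = 0;
        while (i + 1 < args.length && args[i].equals("-D")) {
            String[] kv = args[i + 1].split("=", 2);
            if (kv.length != 2) {
                throw new IllegalArgumentException("expected key=value after -D: " + args[i + 1]);
            }
            conf.put(kv[0], kv[1]);
            i += 2;
        }
        return new Parsed(conf, Arrays.copyOfRange(args, i, args.length));
    }

    public static void main(String[] args) {
        Parsed p = parse(new String[] {"-D", "mapreduce.job.reduces=2", "in1", "in2", "out"});
        System.out.println(p.conf);                       // prints {mapreduce.job.reduces=2}
        System.out.println(Arrays.toString(p.remaining)); // prints [in1, in2, out]
    }
}
```

With that split, `args[2]` inside `run` is the third application argument (the output directory `out` in this example), no matter how many `-D` options precede it on the command line.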