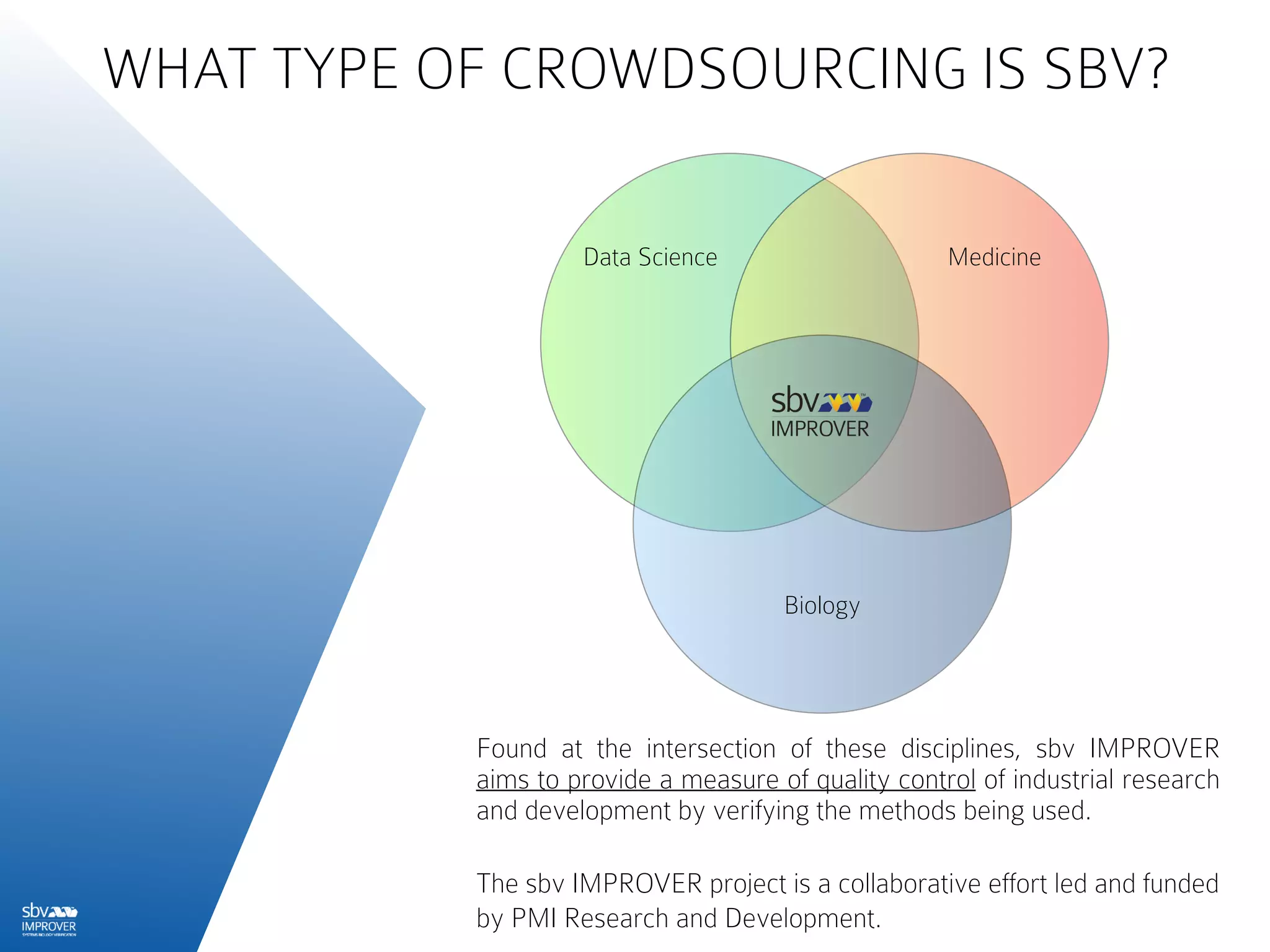

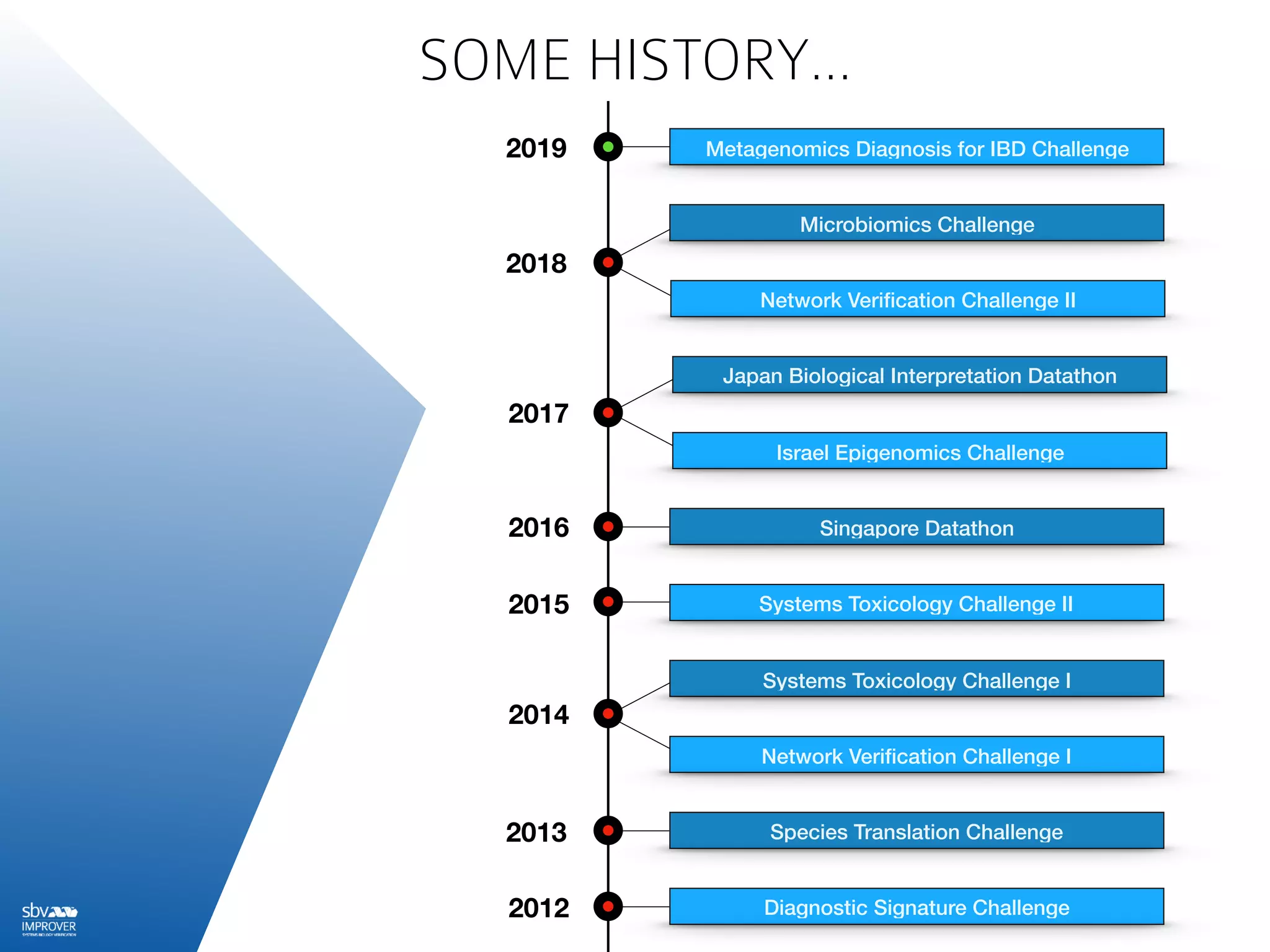

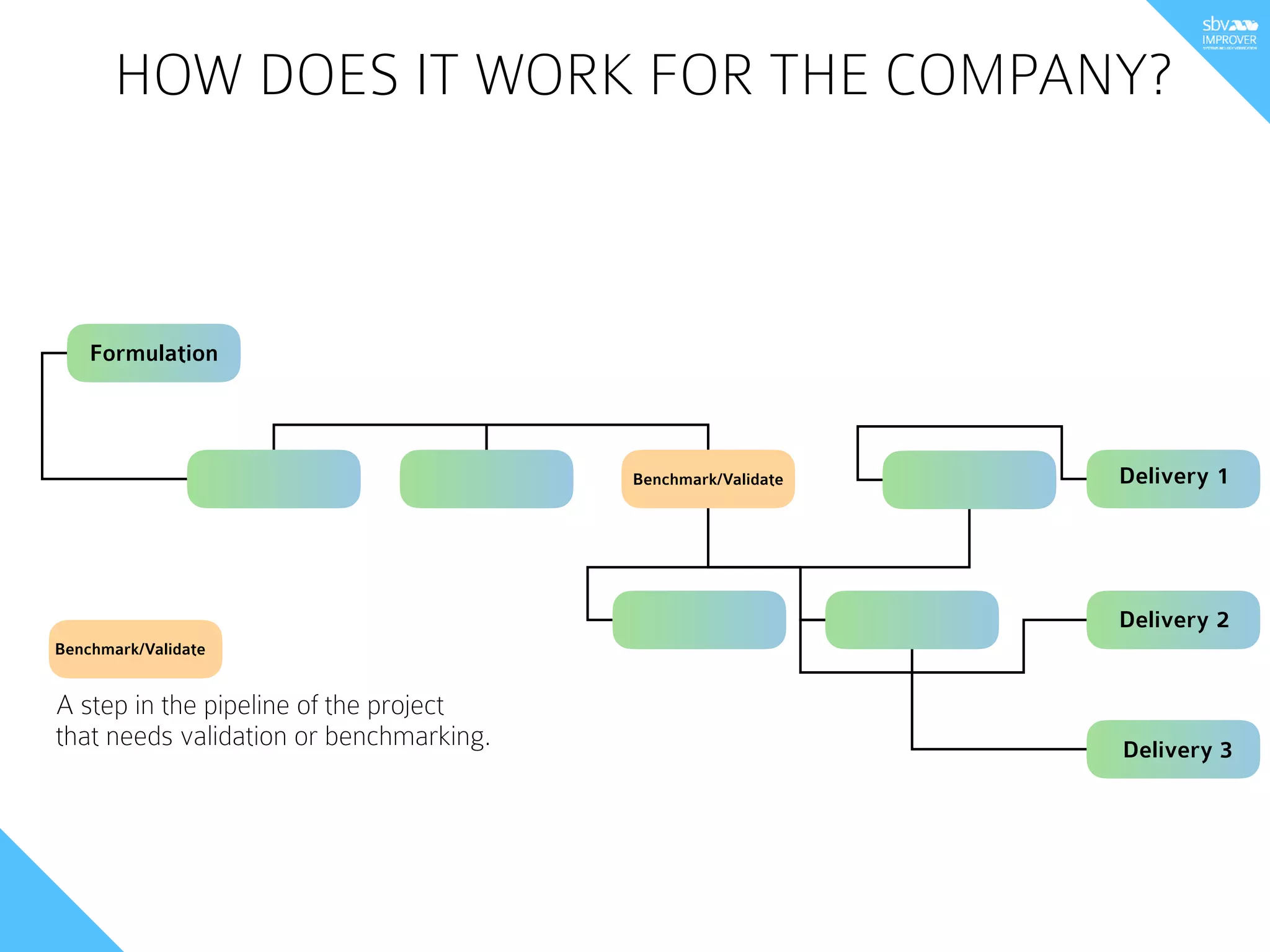

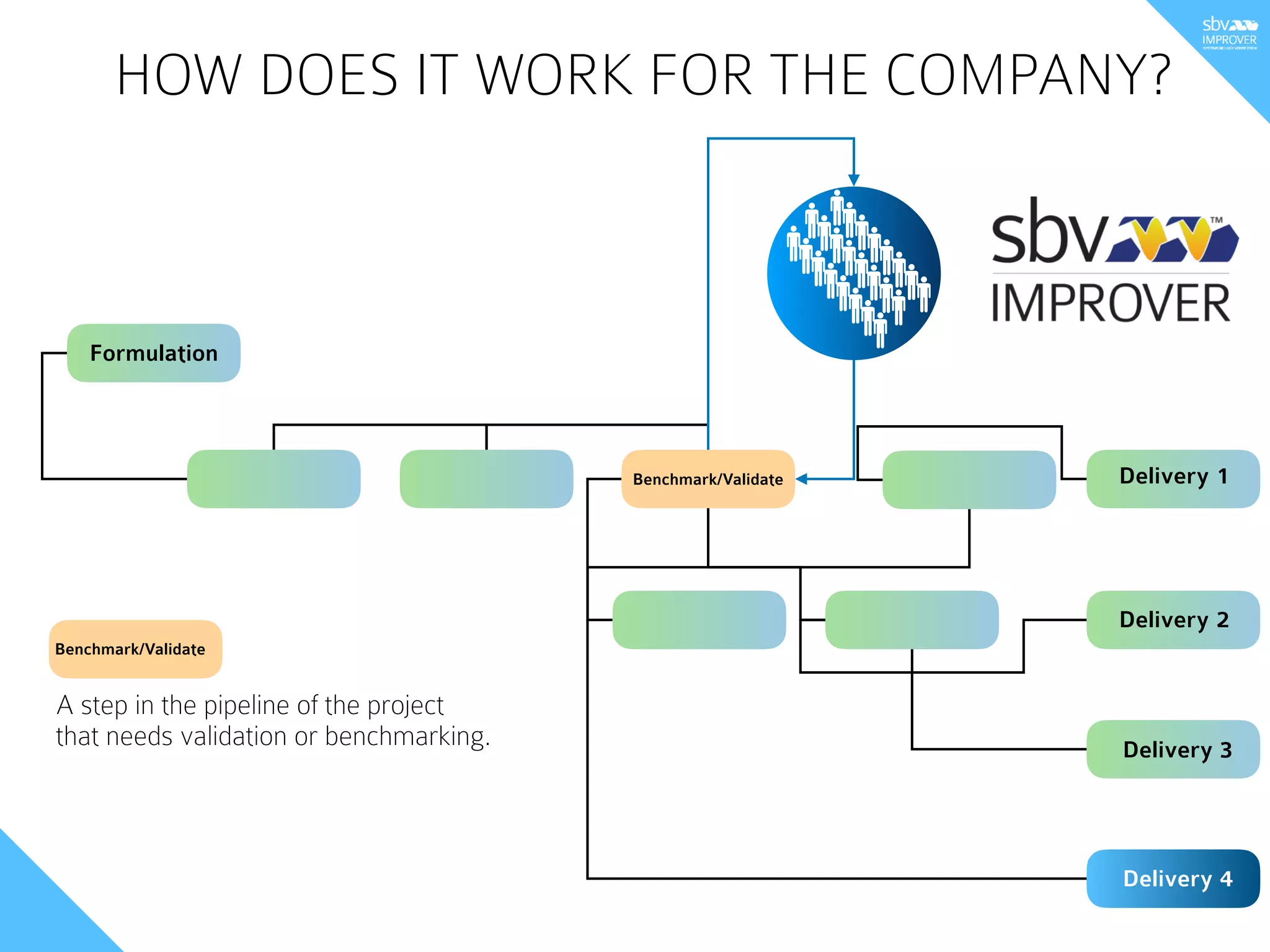

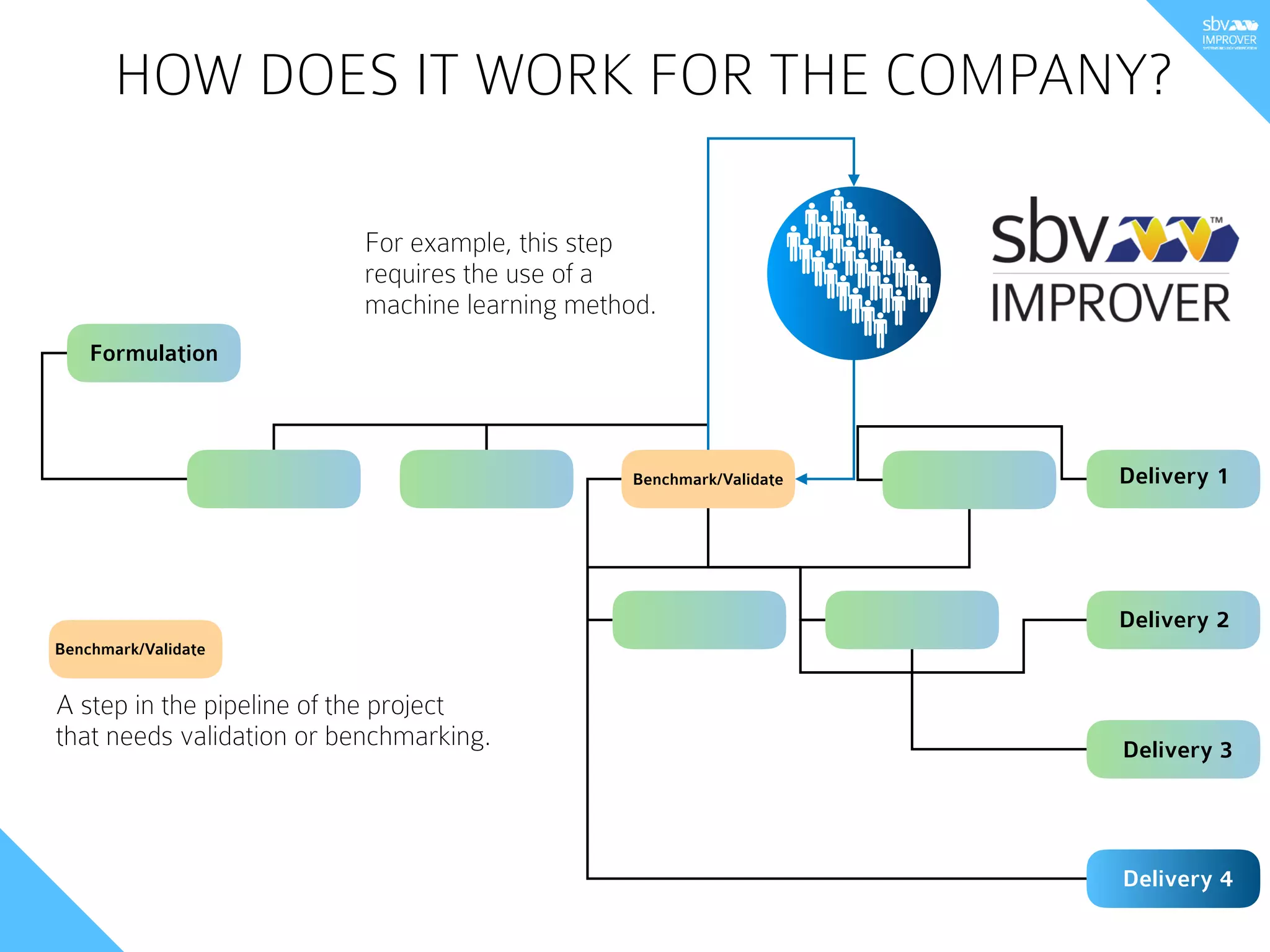

- The sbv IMPROVER project is a crowdsourcing platform led by PMI R&D to verify methods in industrial research through challenges in data science, biology and medicine. It aims to provide quality control of company research.

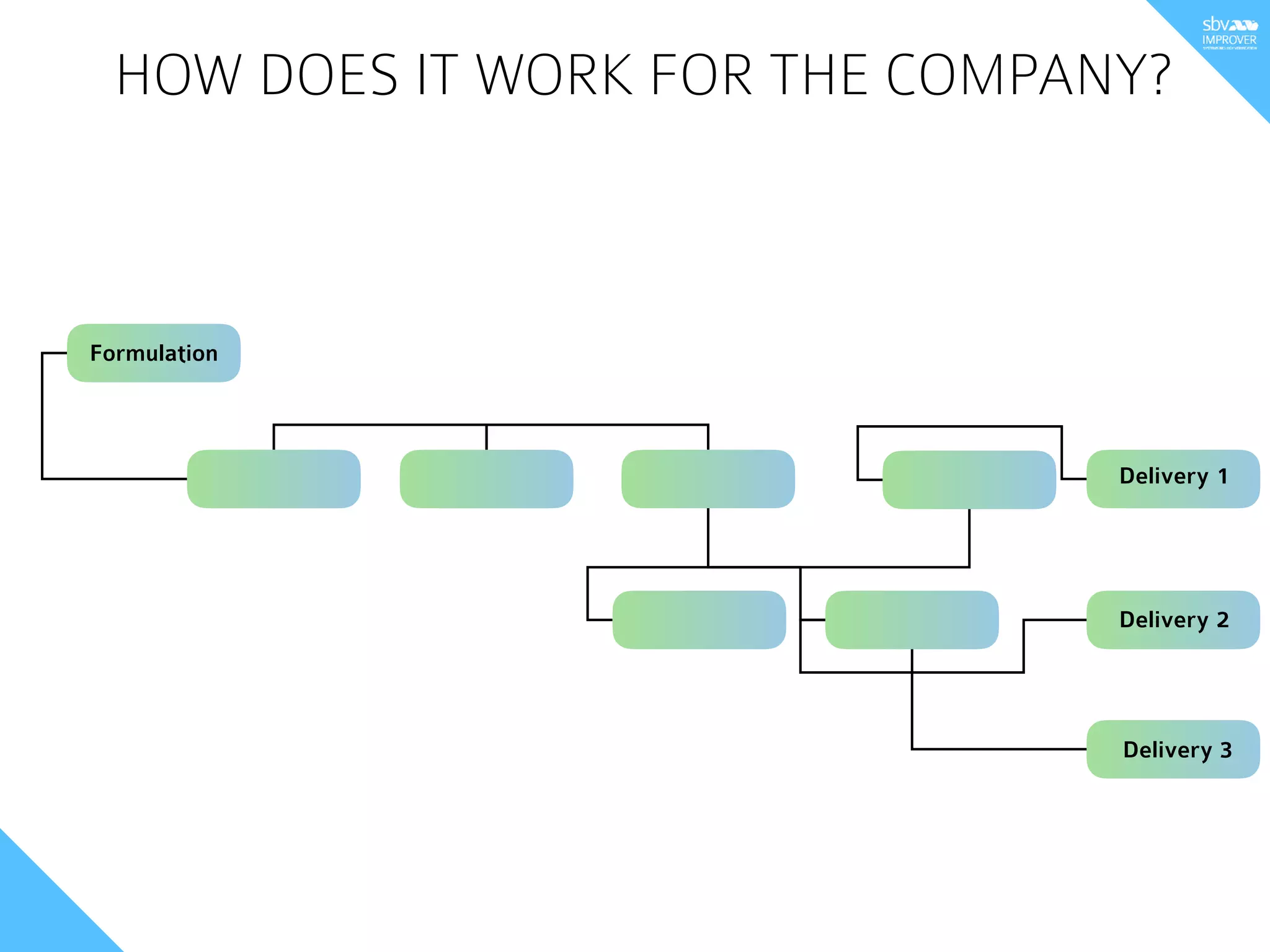

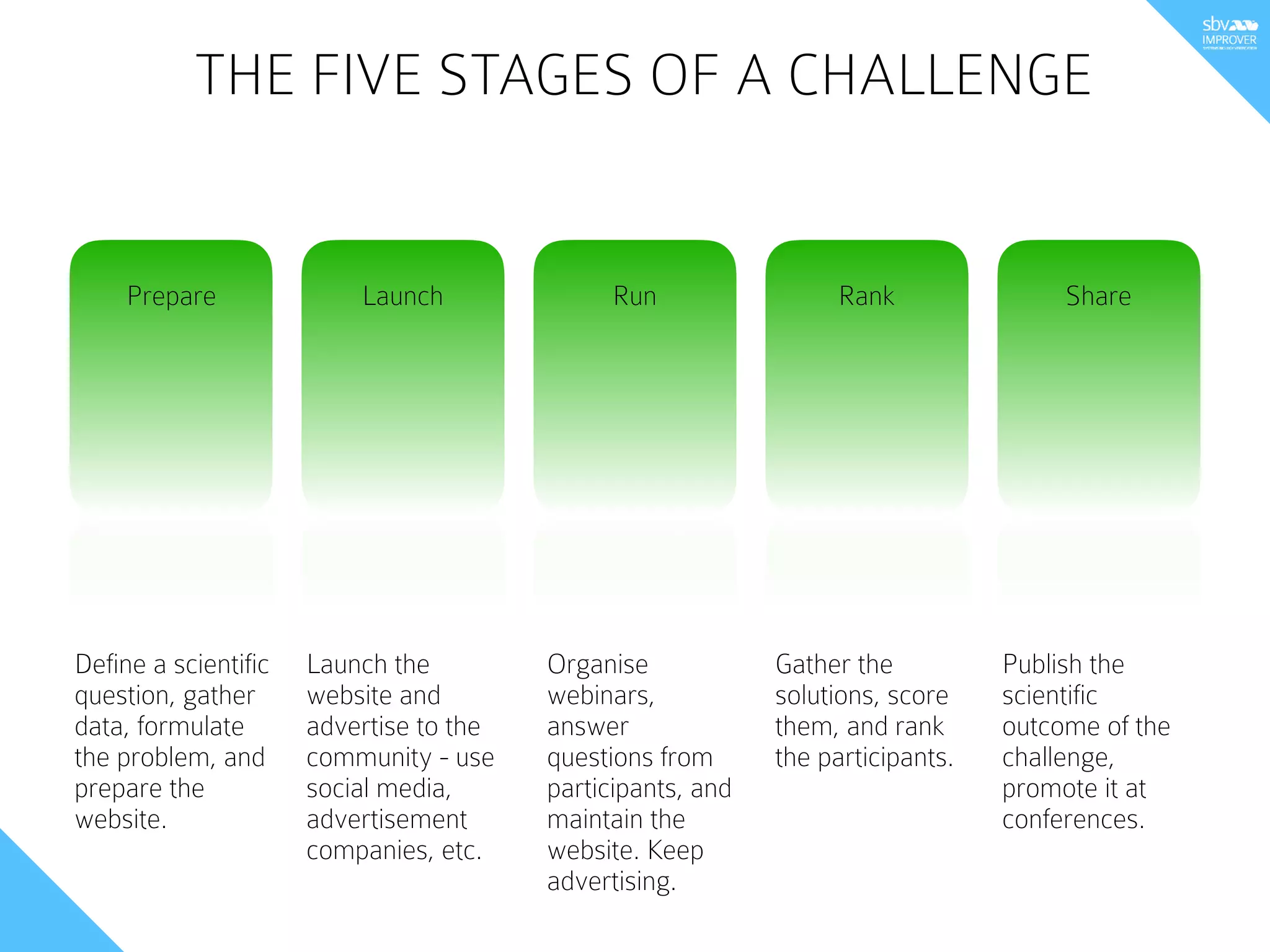

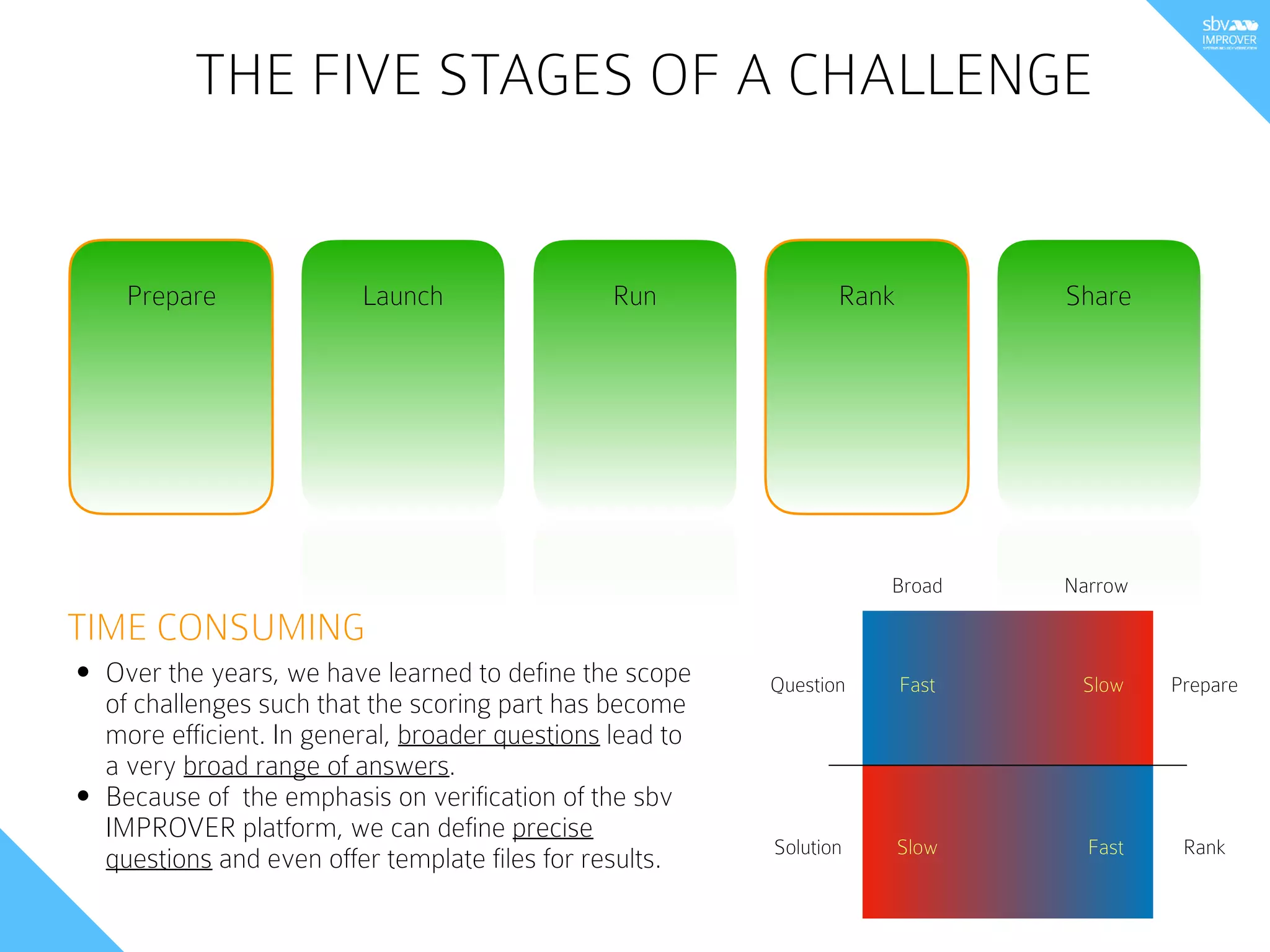

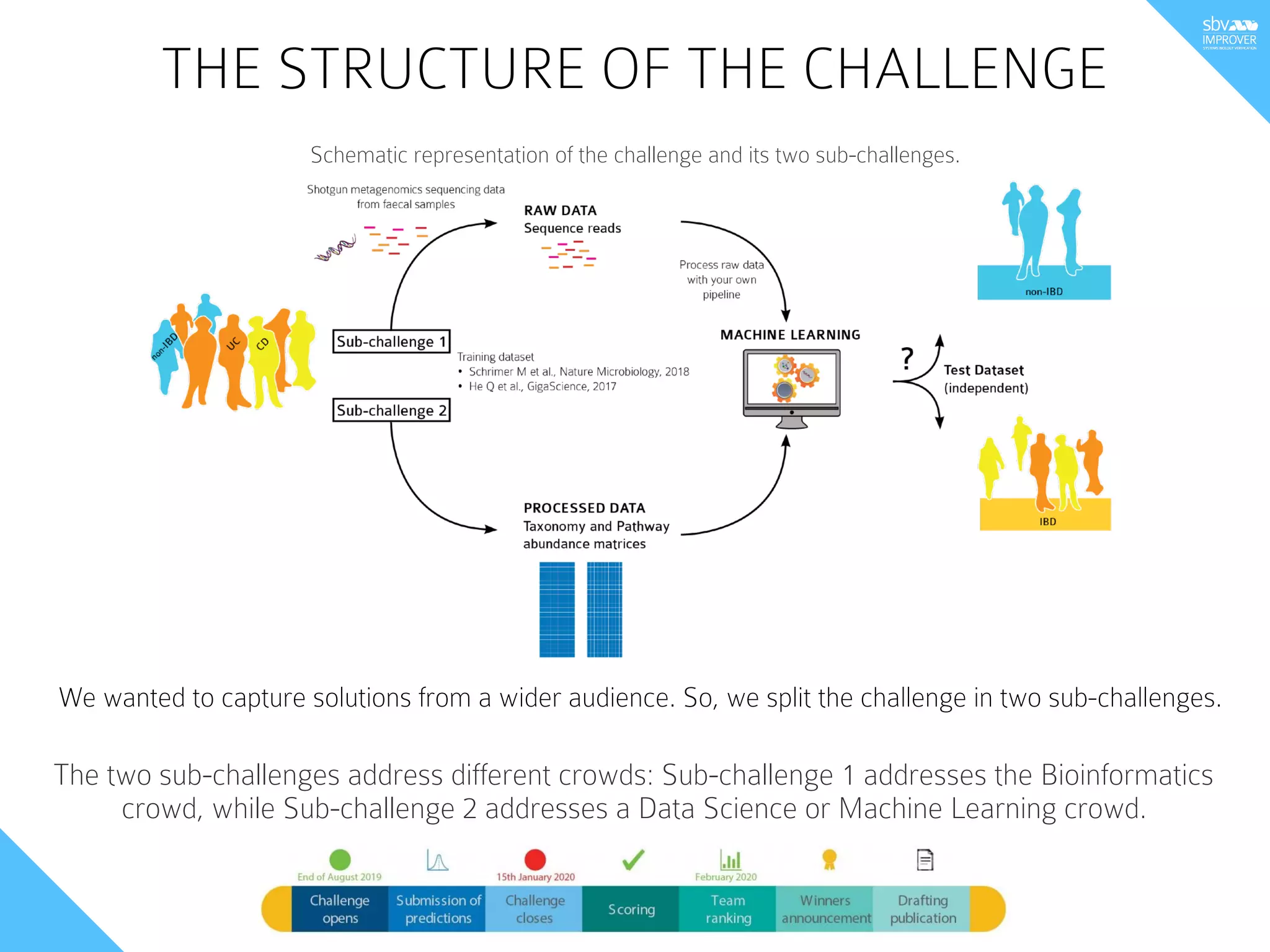

- Challenges follow five stages: preparation, launch, running the challenge, ranking submissions, and sharing results. Defining precise questions helps to obtain focused solutions.

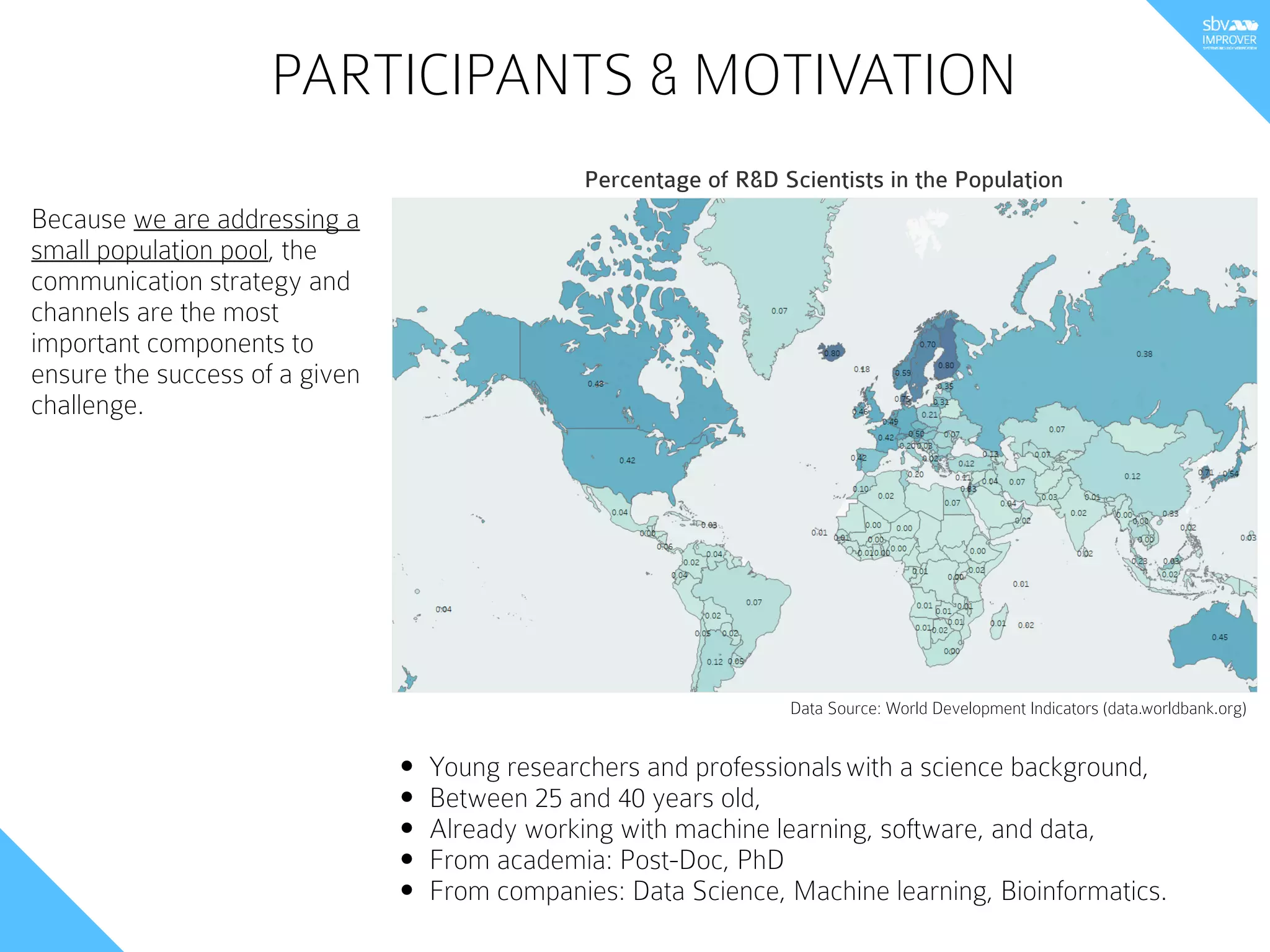

- Challenges engage crowds of young researchers interested in machine learning and data science. They are advertised through social media, at conferences, and by contacting previous participants directly.

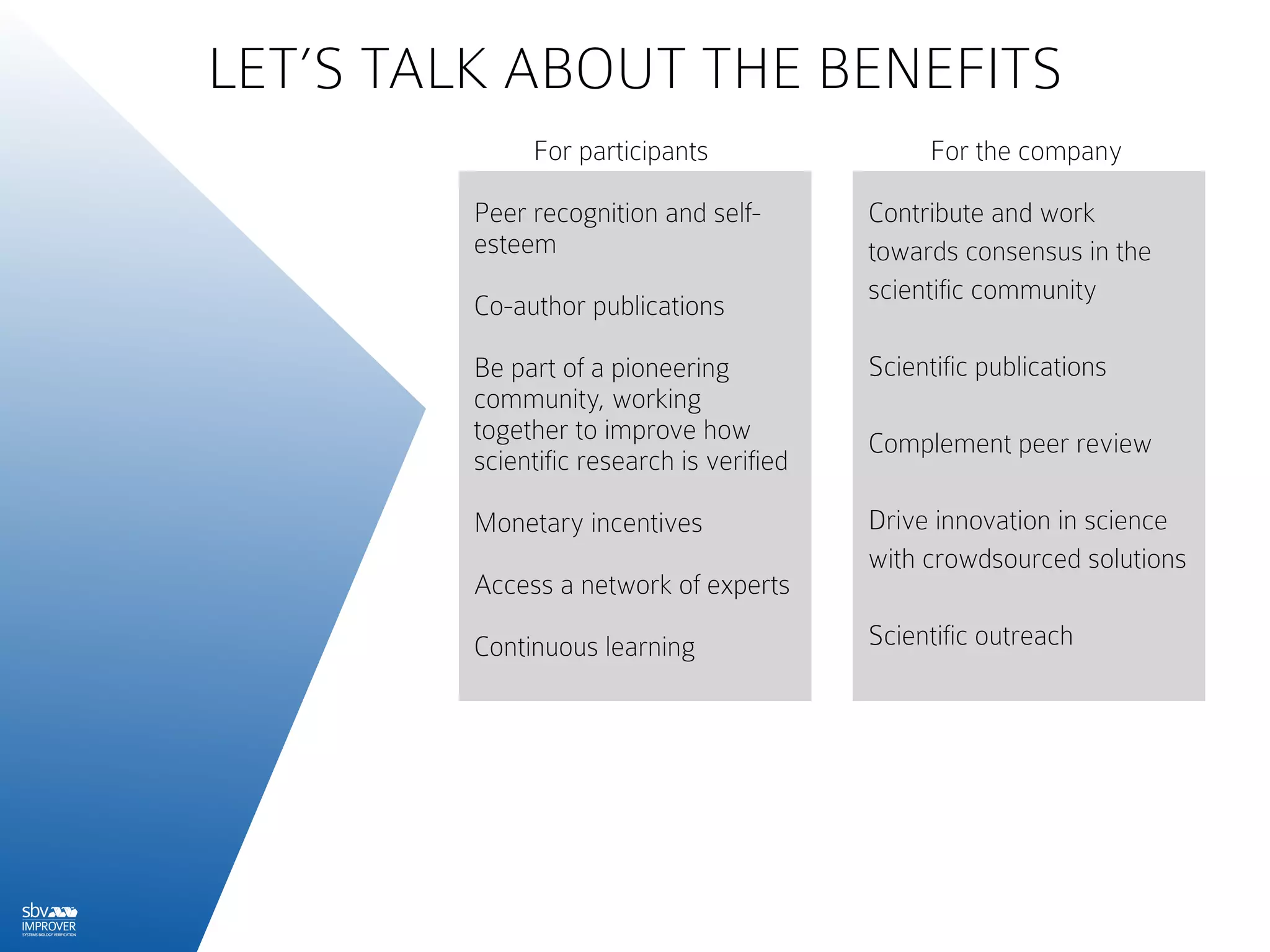

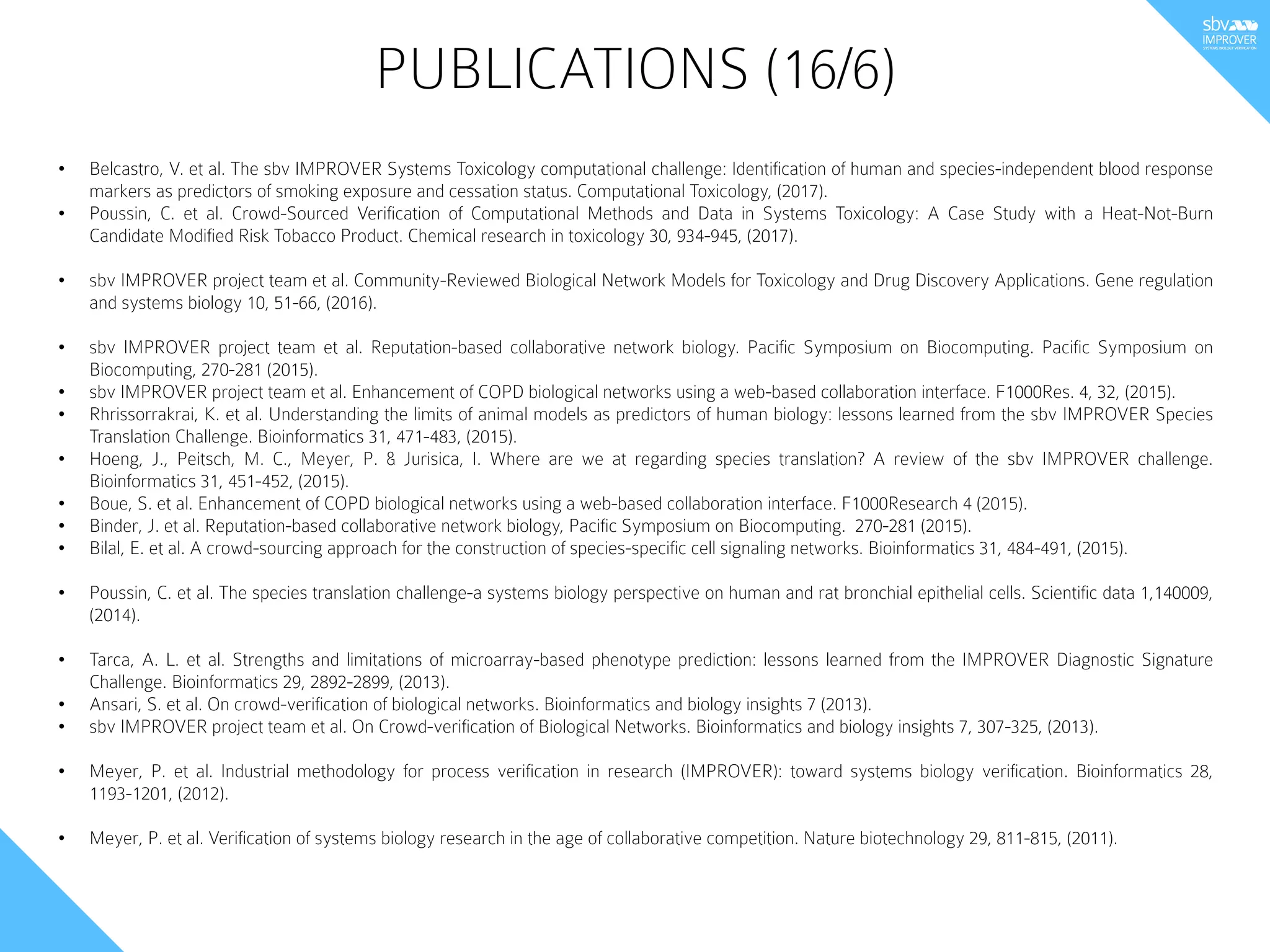

- Benefits include scientific publications, learning, and driving innovation through crowdsourced verification of methods. Maintaining the platform requires significant communication efforts but eng