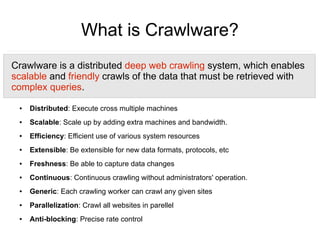

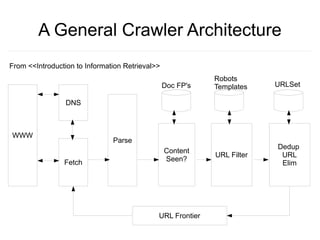

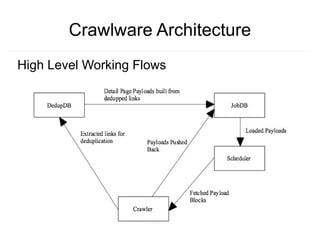

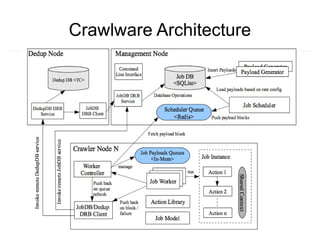

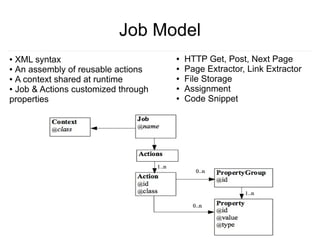

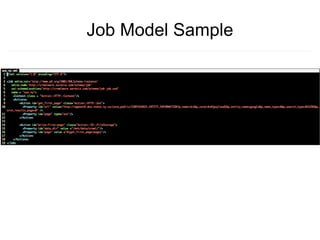

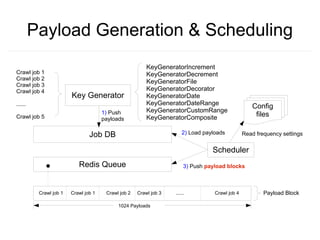

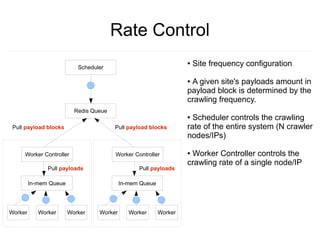

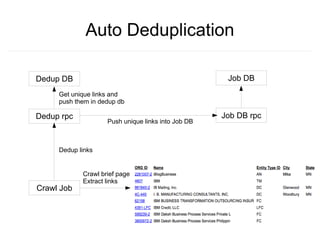

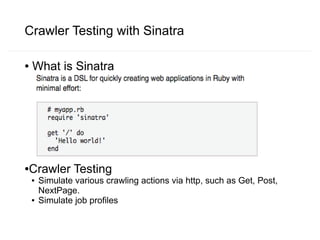

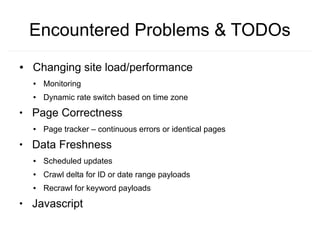

Crawlware is a distributed deep web crawling system that runs scalable, efficient crawls across multiple machines. It uses a job model with reusable actions to customize crawls, and it extracts data through complex queries. Key components include a payload generator that schedules crawls in parallel across sites, rate control that regulates the crawl rate, and automatic deduplication of extracted links. Testing is done against a Sinatra-based simulation. Open problems include changing site loads, data freshness, and JavaScript rendering.
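
To make the rate control and link deduplication ideas concrete, here is a minimal Python sketch. It is not Crawlware's implementation: the names `SiteRateLimiter`, `LinkDeduper`, and `normalize` are hypothetical, and both the fixed per-site minimum interval and the URL normalization rules (lowercase host, drop the fragment) are assumptions chosen for illustration.

```python
from urllib.parse import urlsplit, urlunsplit


class SiteRateLimiter:
    """Hypothetical rate control: a minimum delay between requests per site."""

    def __init__(self, min_interval_s):
        self.min_interval_s = min_interval_s
        self._next_allowed = {}  # site -> earliest time the next request may start

    def wait_time(self, site, now):
        """Return how long to wait before hitting `site`, and reserve that slot."""
        start = max(now, self._next_allowed.get(site, now))
        self._next_allowed[site] = start + self.min_interval_s
        return start - now


def normalize(url):
    """Canonicalize a URL so trivially different spellings compare equal.

    Assumed rules: lowercase scheme and host, drop the fragment,
    treat an empty path as "/". Path and query are kept as-is.
    """
    parts = urlsplit(url)
    path = parts.path or "/"
    return urlunsplit(
        (parts.scheme.lower(), parts.netloc.lower(), path, parts.query, "")
    )


class LinkDeduper:
    """Hypothetical dedup: remember normalized links, return only unseen ones."""

    def __init__(self):
        self._seen = set()

    def new_links(self, links):
        fresh = []
        for link in links:
            key = normalize(link)
            if key not in self._seen:
                self._seen.add(key)
                fresh.append(key)
        return fresh


if __name__ == "__main__":
    limiter = SiteRateLimiter(min_interval_s=2.0)
    print(limiter.wait_time("example.com", now=0.0))  # first request: no wait
    print(limiter.wait_time("example.com", now=0.5))  # second request is delayed

    deduper = LinkDeduper()
    print(deduper.new_links([
        "HTTP://Example.com/a#top",
        "http://example.com/a",
        "http://example.com/b",
    ]))
```

The limiter takes the current time as an argument rather than reading a clock, which keeps the sketch deterministic. In a distributed crawler both the per-site schedule and the seen-link set would need to live in storage shared by all machines, which this single-process sketch does not attempt.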