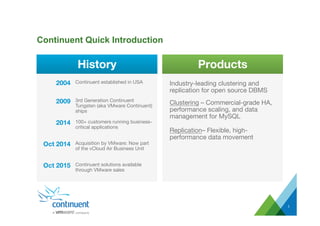

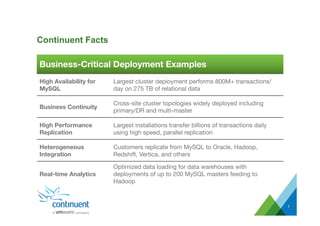

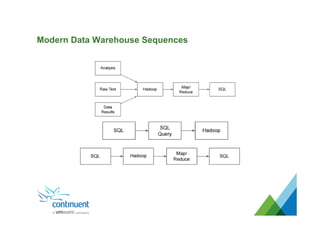

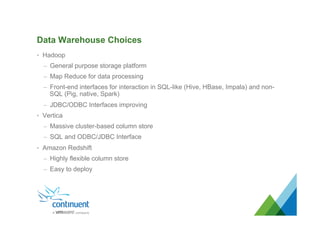

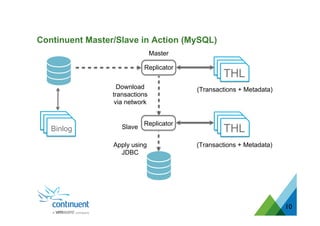

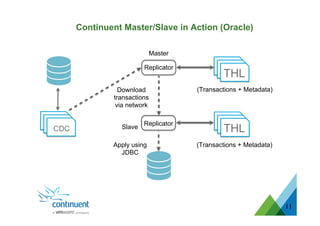

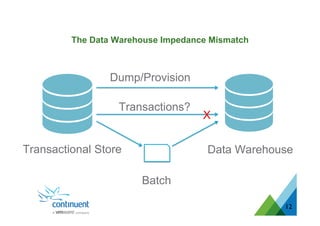

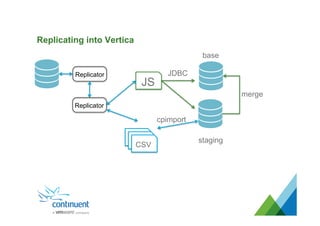

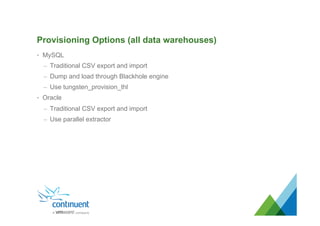

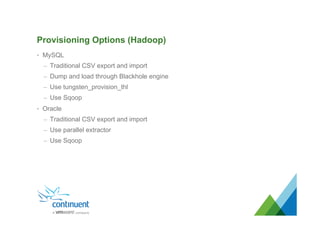

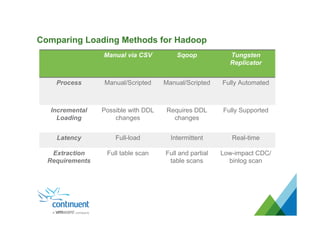

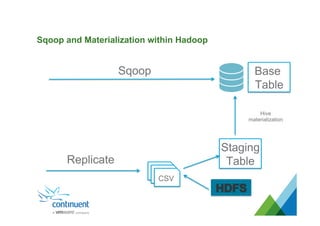

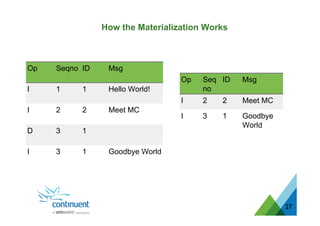

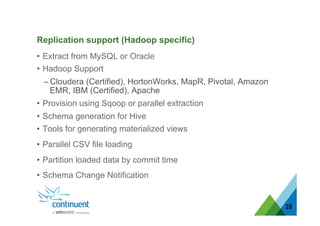

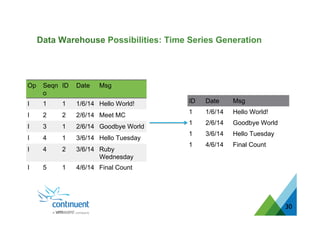

The document gives an overview of Continuent's data replication and integration solutions for databases such as MySQL and Oracle, emphasizing the shift toward real-time analytics and modern data warehousing. It highlights Continuent's products and their capabilities in high availability, business continuity, and data integration across platforms. It also discusses how data warehouses have evolved and why continuous data replication is needed to support modern analytics.