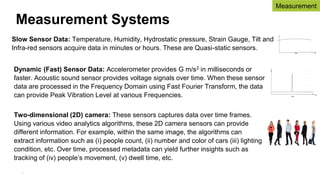

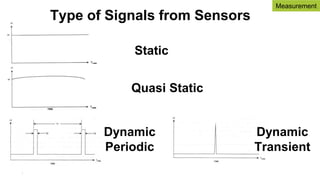

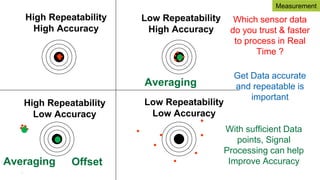

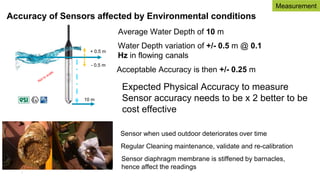

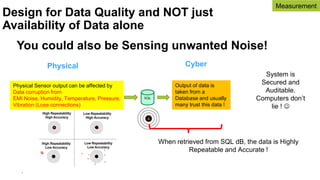

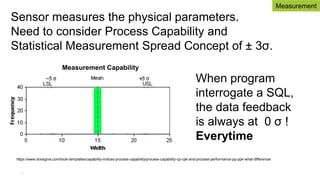

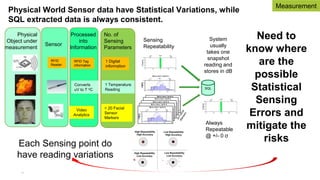

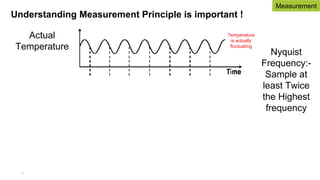

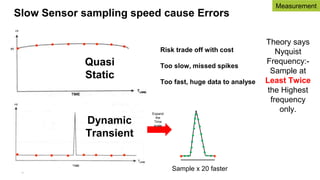

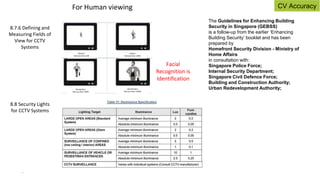

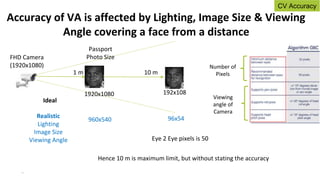

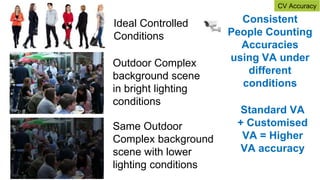

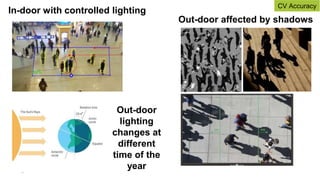

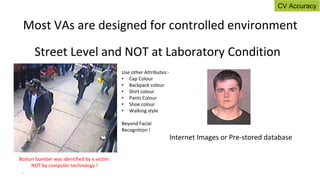

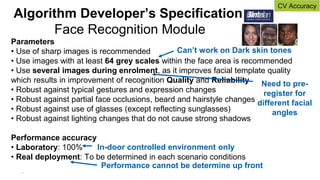

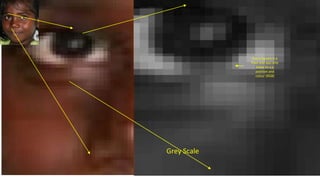

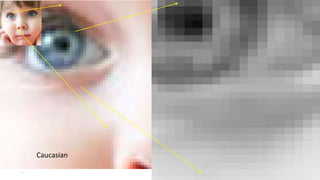

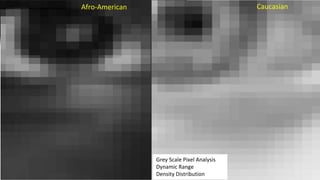

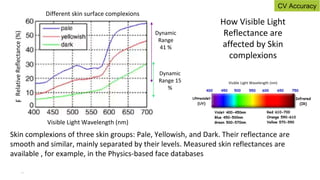

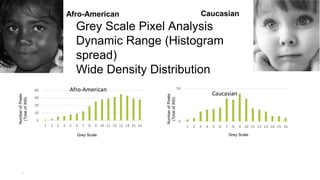

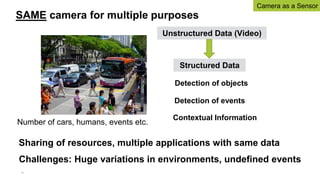

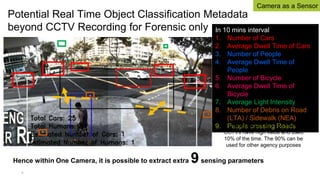

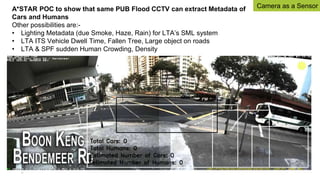

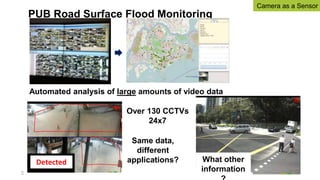

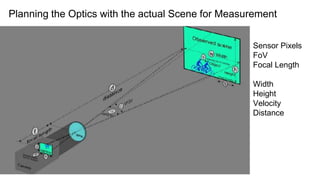

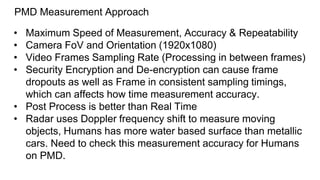

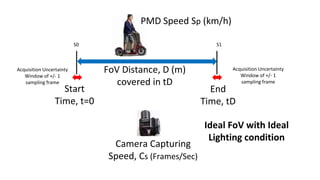

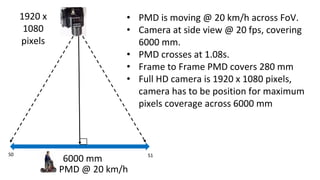

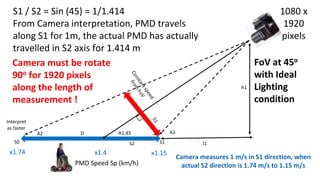

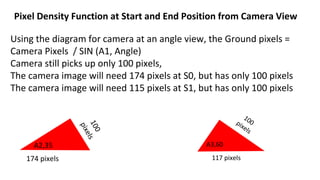

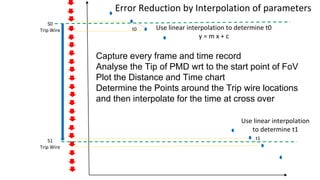

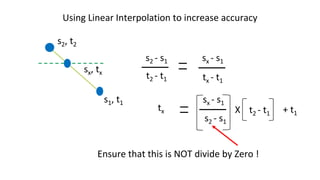

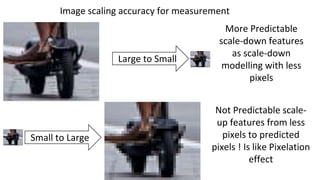

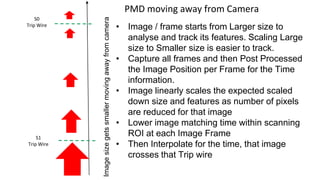

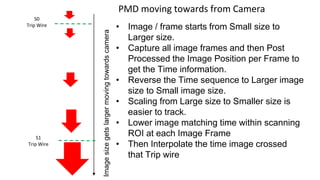

This document discusses the use of computer vision and cameras for measurement applications. It opens by introducing the speaker and their background, then examines the challenges of achieving accurate computer vision results, particularly when cameras are used outdoors as contactless sensors. It gives examples of video analytics that extract metadata such as people counts and speed measurements. Throughout, it emphasizes that measurement accuracy depends on many factors, including sensor calibration, installation, and environmental conditions.
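To illustrate why calibration matters for speed measurement, here is a minimal sketch, not the speaker's method. It assumes a flat ground plane and a single constant scale factor (`metres_per_pixel`, a hypothetical parameter). Real deployments typically need perspective calibration. The sketch converts an object's pixel displacement between two frames into a speed and shows that a relative error in the scale factor carries straight through to the same relative error in the reported speed.

```python
def estimate_speed_kmh(pixel_displacement: float,
                       frame_interval_s: float,
                       metres_per_pixel: float) -> float:
    """Estimate speed in km/h from pixel displacement over a time interval.

    Hypothetical helper: assumes a flat ground plane and one constant
    scale factor (metres_per_pixel) for the whole image, which ignores
    lens distortion and perspective.
    """
    if frame_interval_s <= 0:
        raise ValueError("frame_interval_s must be positive")
    # Convert pixels to metres using the (assumed) calibration scale.
    distance_m = pixel_displacement * metres_per_pixel
    # metres per second -> kilometres per hour
    return distance_m / frame_interval_s * 3.6


# Example: 120 px travelled over 10 frames at 25 fps (0.4 s).
true_scale = 0.05       # assumed correct calibration, metres per pixel
miscalibrated = 0.055   # the same camera calibrated 10% too high
v_true = estimate_speed_kmh(120, 10 / 25, true_scale)
v_bad = estimate_speed_kmh(120, 10 / 25, miscalibrated)
print(f"{v_true:.1f} km/h vs {v_bad:.1f} km/h")
```

Because speed is linear in the scale factor under these assumptions, a 10% calibration error yields a 10% speed error (about 54 km/h reported as about 59.4 km/h), regardless of how precisely the object is tracked in pixels.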