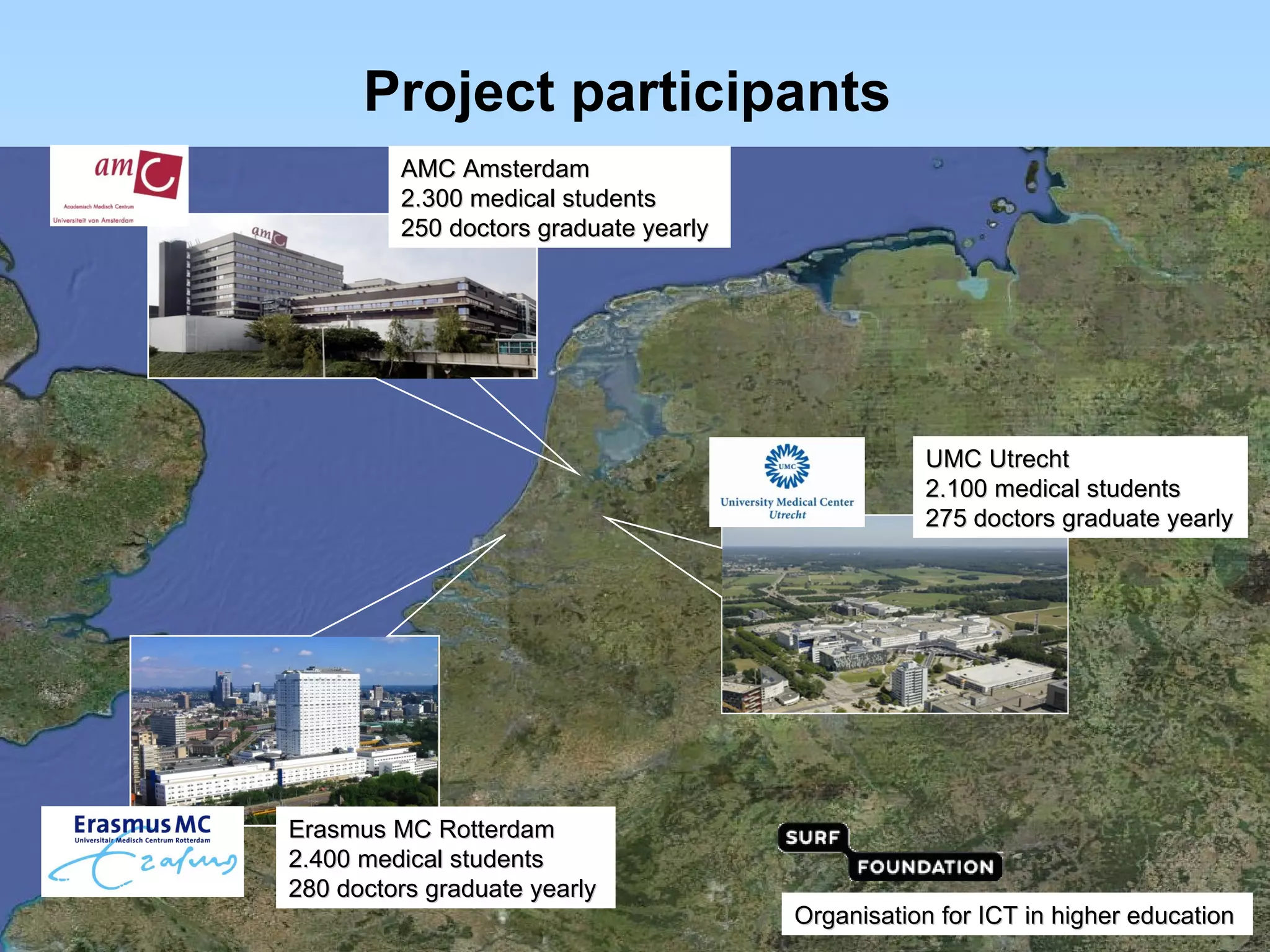

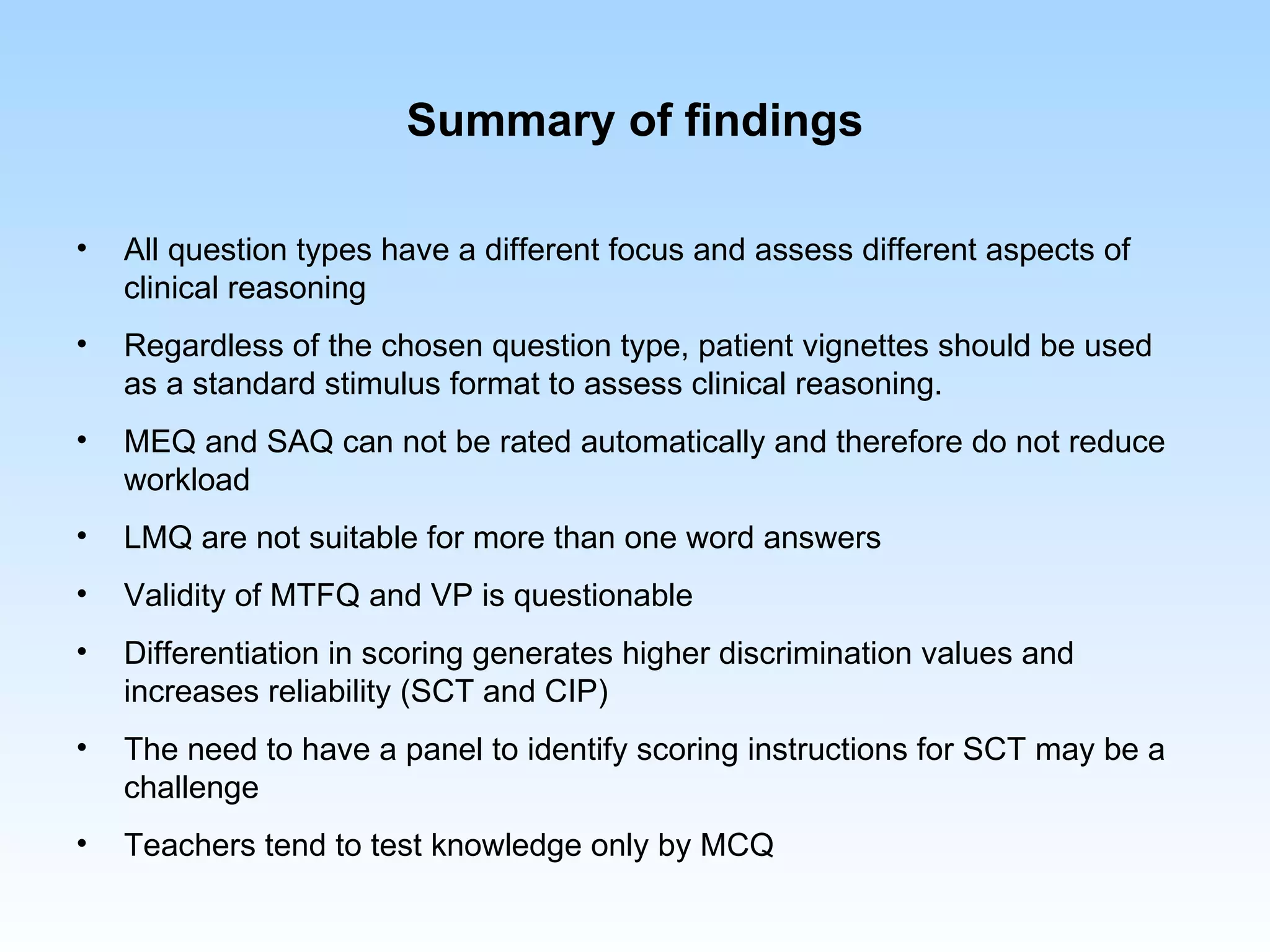

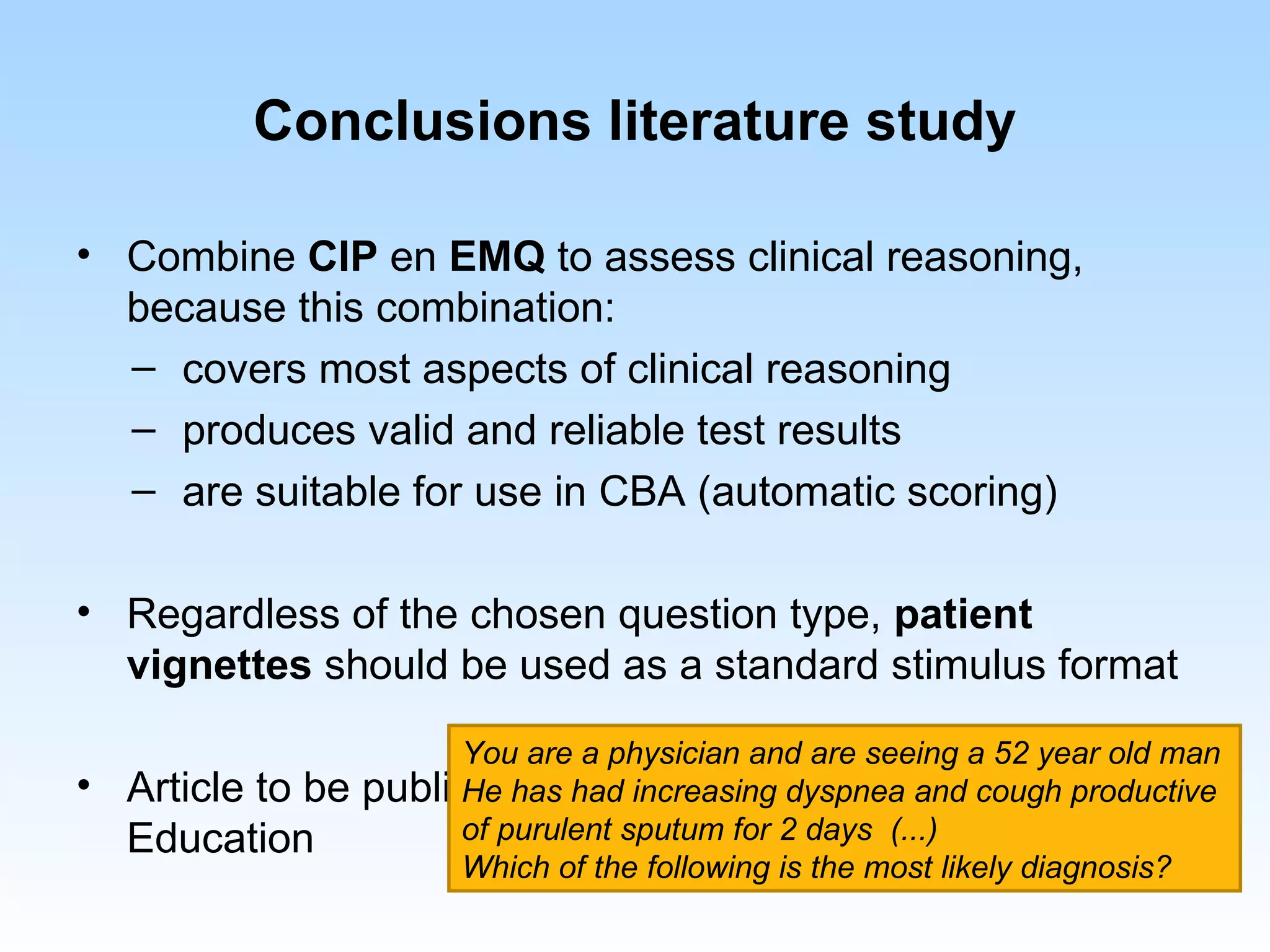

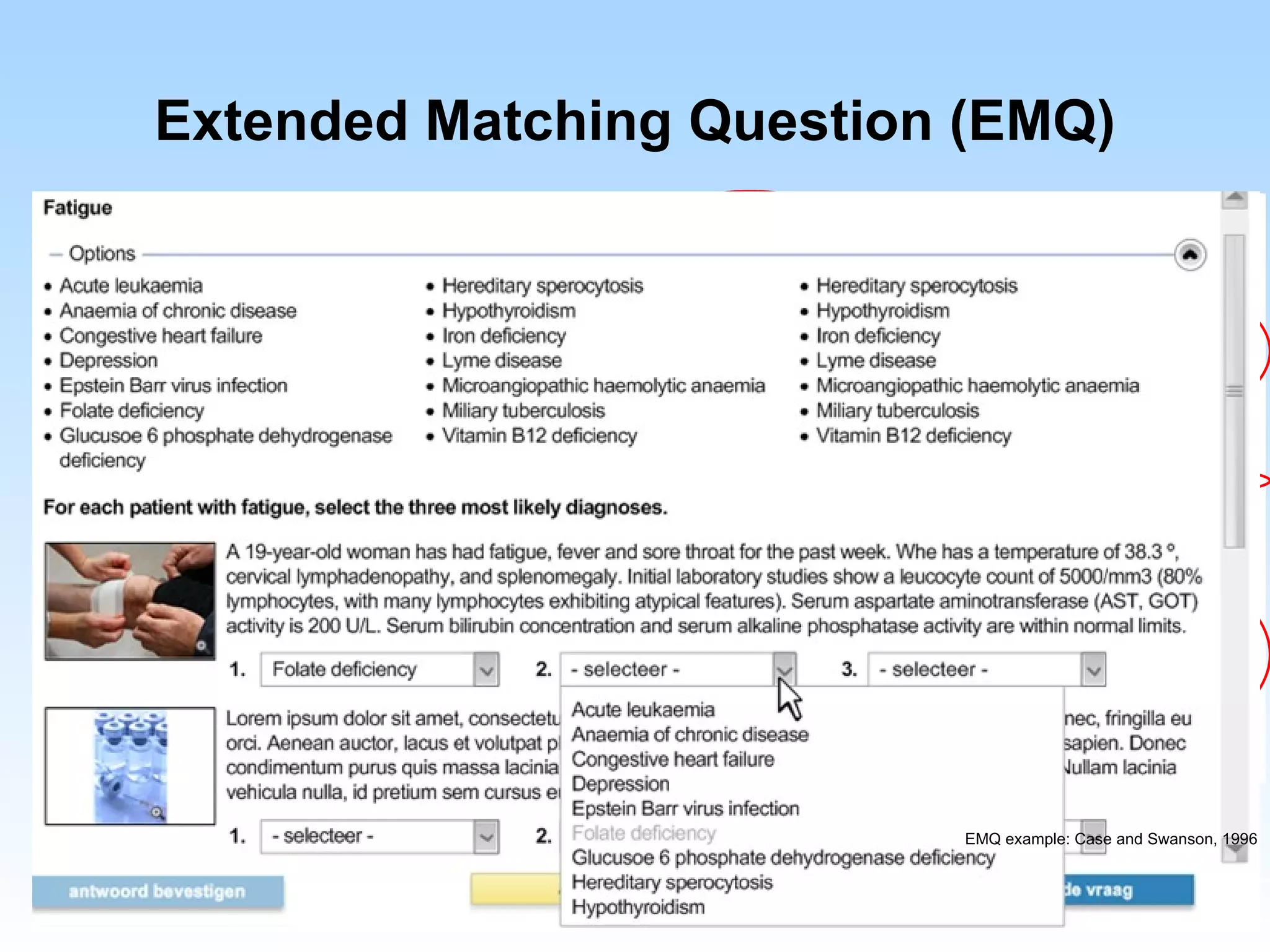

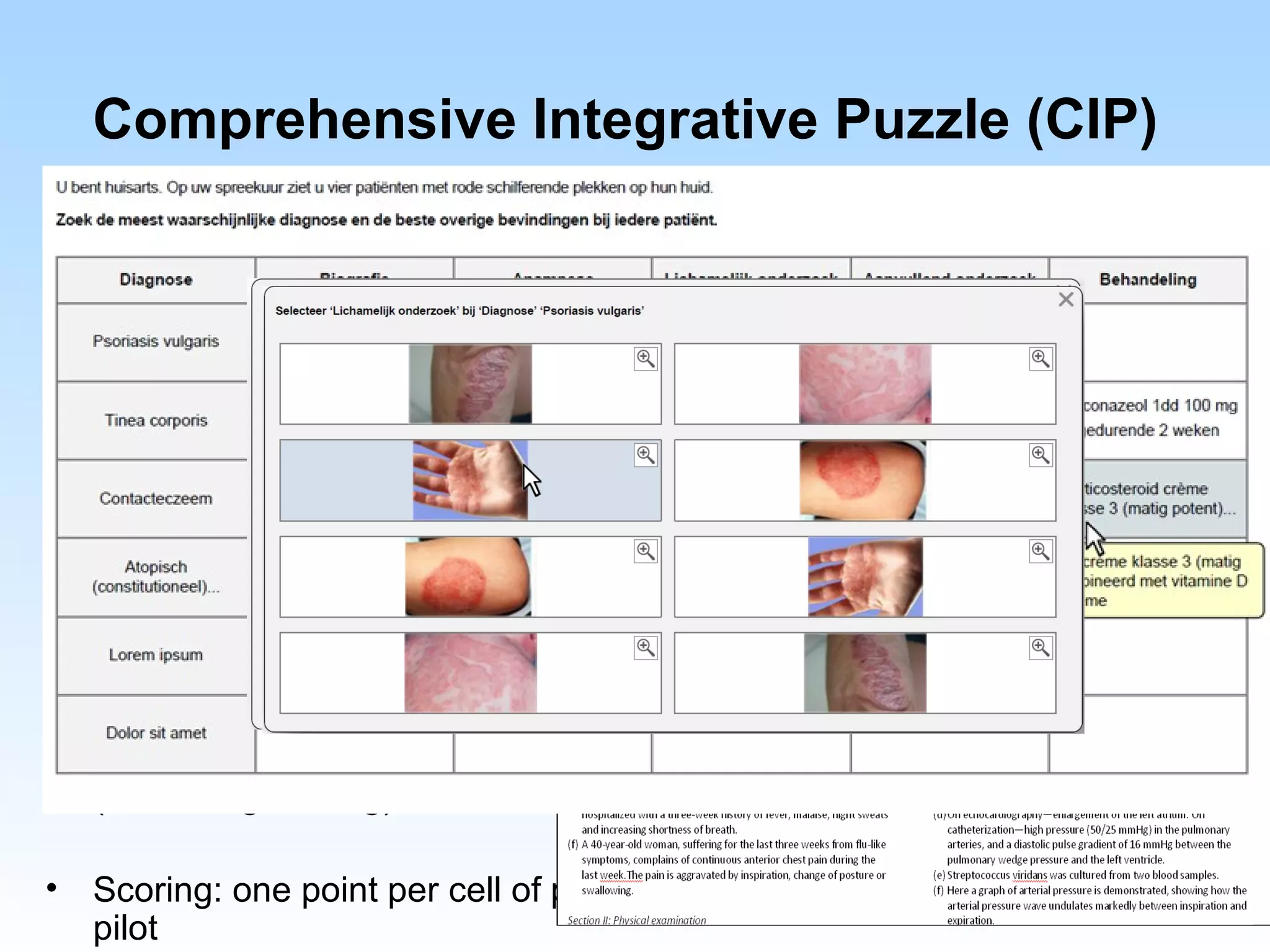

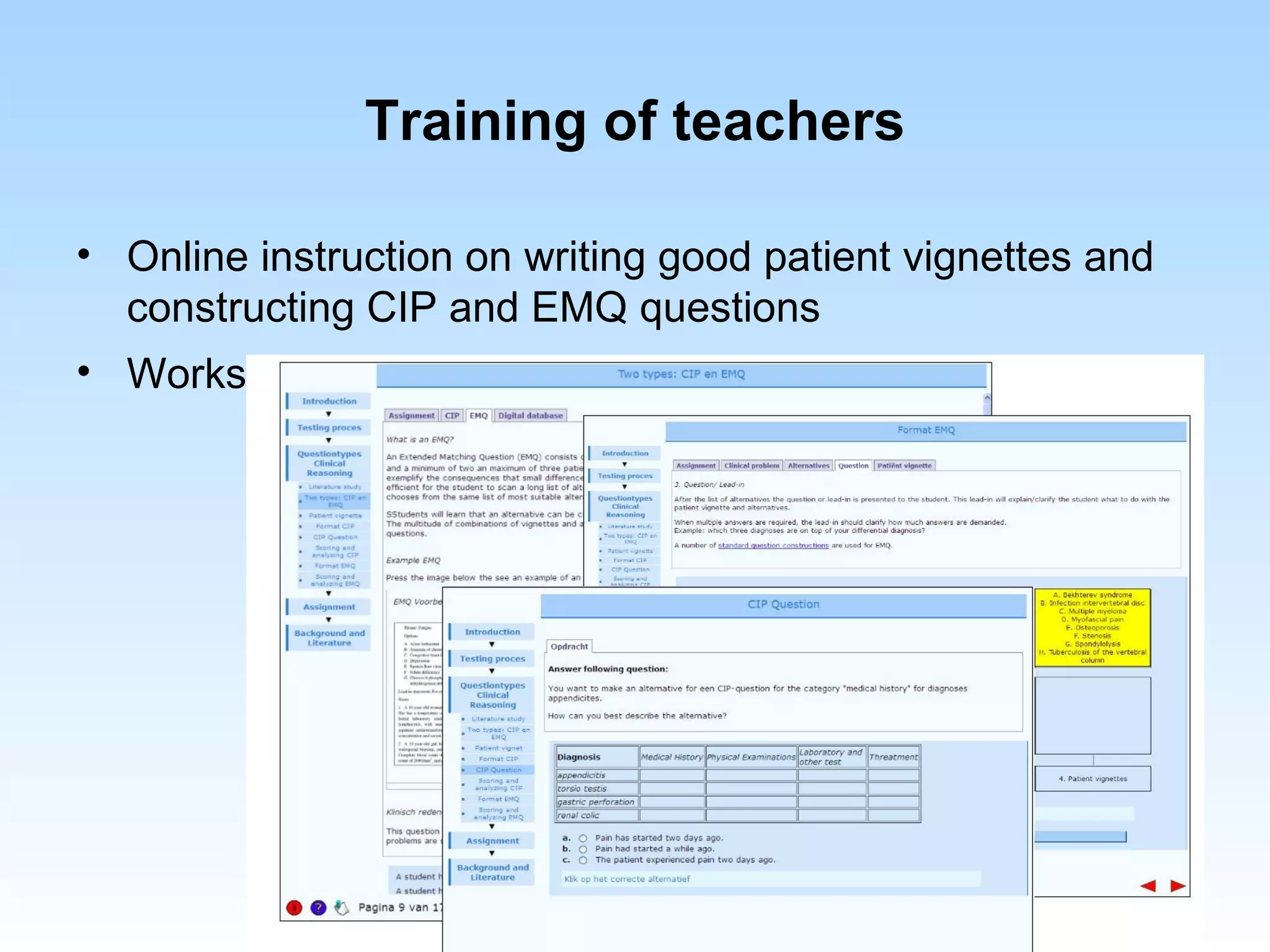

This document summarizes a collaborative project among three Dutch medical schools to develop computer-based assessments of clinical reasoning. The project aims to reduce teachers' workload while improving assessment quality. The literature pointed to Extended Matching Questions and Comprehensive Integrative Puzzles as suitable question formats. Teachers were trained in question development, and a prototype system was piloted and received positive feedback. Future work includes developing more questions and implementing the assessments in the curricula.