The document outlines key computer architecture formulas and principles, including performance metrics such as CPU time, Amdahl's Law, and memory access times. It discusses the importance of domain-specific architectures in leveraging the slowdown of Moore's Law and the evolving landscape of computer architecture. Additionally, it highlights updates in the sixth edition of 'Computer Architecture: A Quantitative Approach,' emphasizing newly incorporated technology advancements and real-world applications.

![[10] Less than 5 min (to read and understand)

[15] 5–15 min for a full answer

[20] 15–20 min for a full answer

[25] 1 h for a full written answer

[30] Short programming project: less than 1 full day of

programming

[40] Significant programming project: 2 weeks of elapsed time

[Discussion] Topic for discussion with others

Solution

s to the case studies and exercises are available for instructors

who

register at textbooks.elsevier.com.

Supplemental Materials

A variety of resources are available online at

https://www.elsevier.com/books/

computer-architecture/hennessy/978-0-12-811905-1, including

the following:](https://image.slidesharecdn.com/computerarchitectureformulas1-221225184204-817ac276/85/Computer-Architecture-Formulas1-CPU-time-Instru-docx-37-320.jpg)

![New materials and links to other resources available on the Web

will be added

on a regular basis.

Helping Improve This Book

Finally, it is possible to make money while reading this book.

(Talk about cost per-

formance!) If you read the Acknowledgments that follow, you

will see that we

went to great lengths to correct mistakes. Since a book goes

through many print-

ings, we have the opportunity to make even more corrections. If

you uncover any

remaining resilient bugs, please contact the publisher by

electronic mail

([email protected]).

We welcome general comments to the text and invite you to

send them to a

separate email address at [email protected]

Concluding Remarks

Once again, this book is a true co-authorship, with each of us

writing half the chap-](https://image.slidesharecdn.com/computerarchitectureformulas1-221225184204-817ac276/85/Computer-Architecture-Formulas1-CPU-time-Instru-docx-39-320.jpg)

![ters and an equal share of the appendices.We can’t imagine how

long it would have

taken without someone else doing half the work, offering

inspiration when the task

seemed hopeless, providing the key insight to explain a difficult

concept, supply-

ing over-the-weekend reviews of chapters, and commiserating

when the weight of

our other obligations made it hard to pick up the pen.

Thus, once again, we share equally the blame for what you are

about to read.

John Hennessy ■ David Patterson

Preface ■ xxiii

mailto:[email protected]

mailto:[email protected]

mailto:[email protected]

mailto:[email protected]

This page intentionally left blank](https://image.slidesharecdn.com/computerarchitectureformulas1-221225184204-817ac276/85/Computer-Architecture-Formulas1-CPU-time-Instru-docx-40-320.jpg)

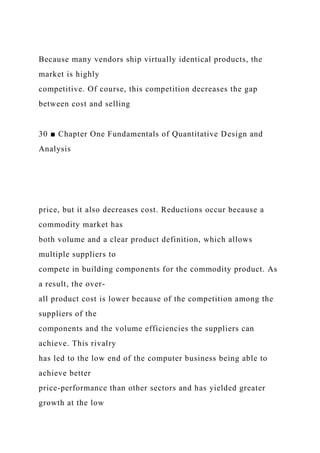

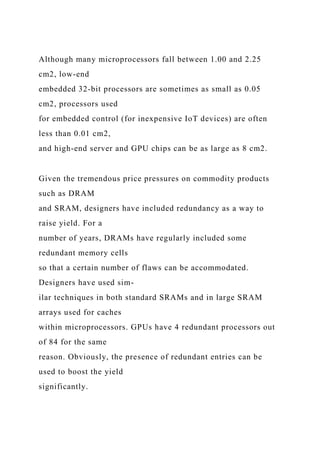

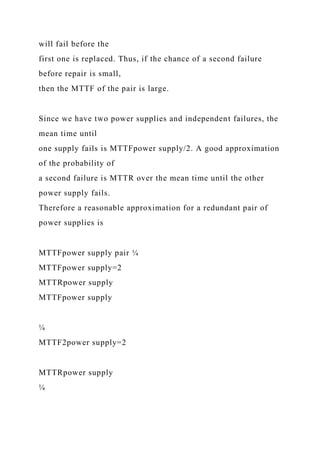

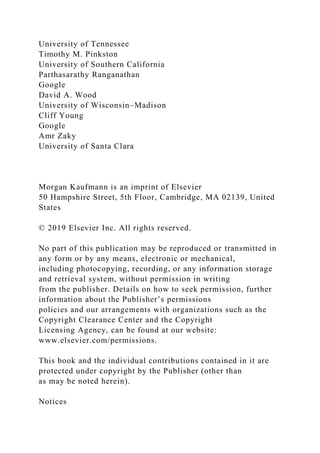

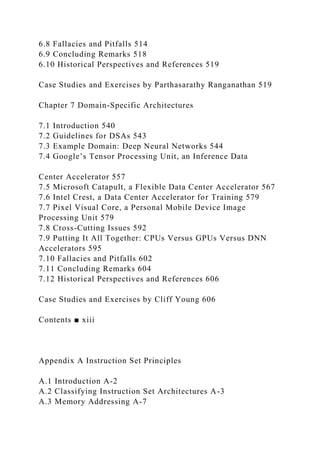

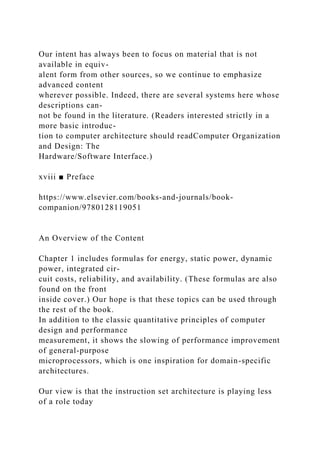

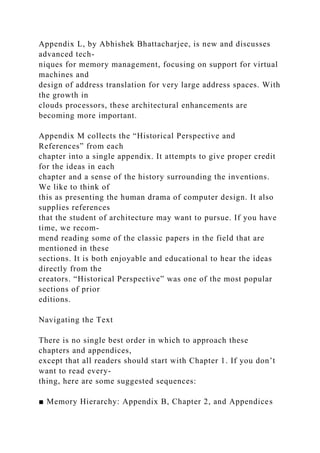

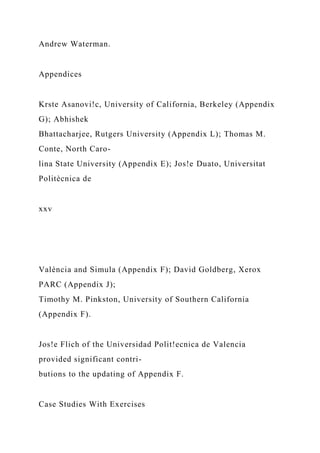

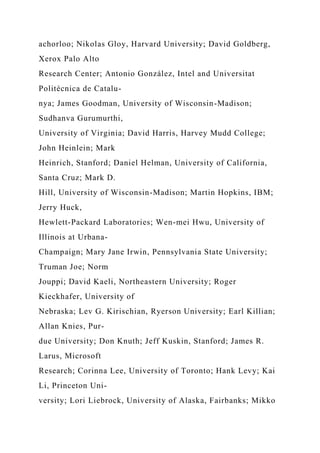

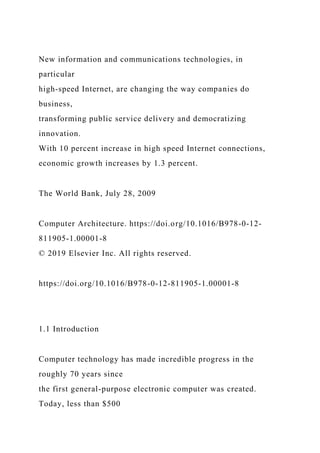

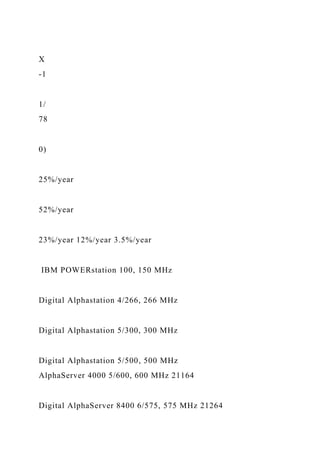

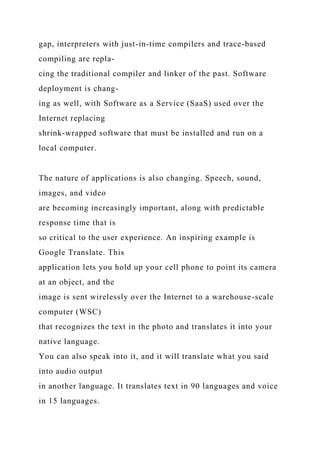

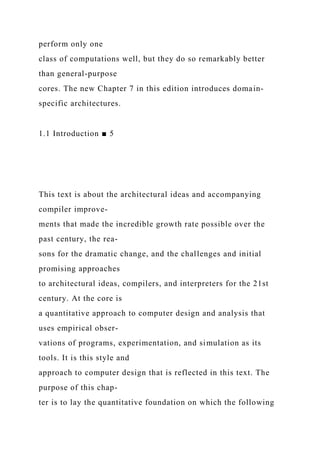

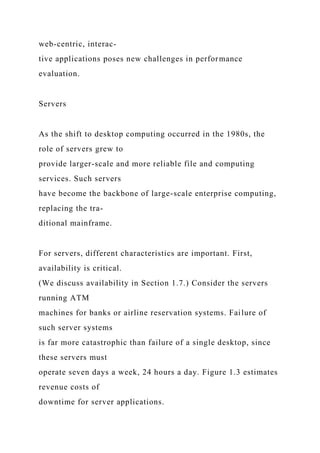

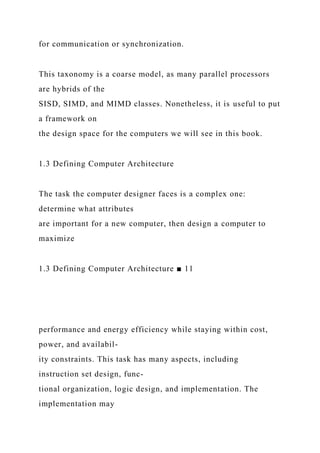

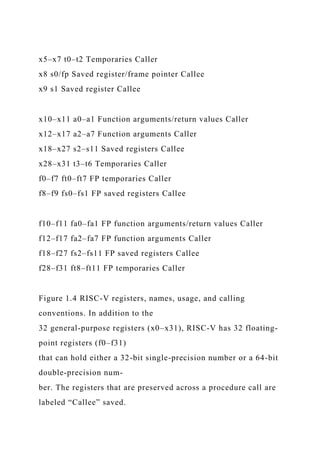

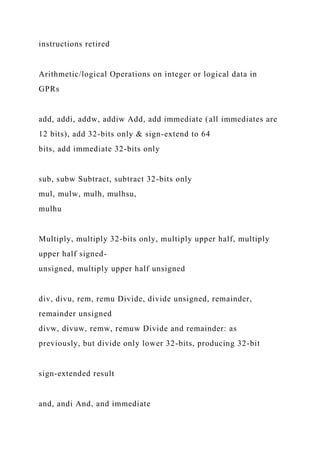

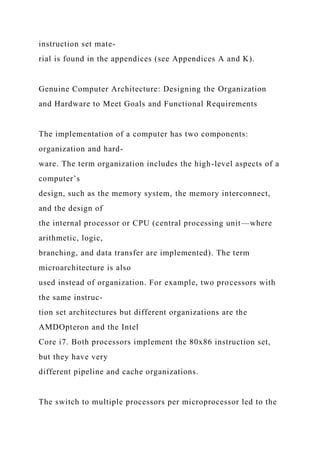

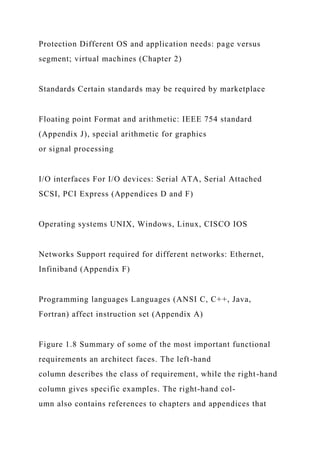

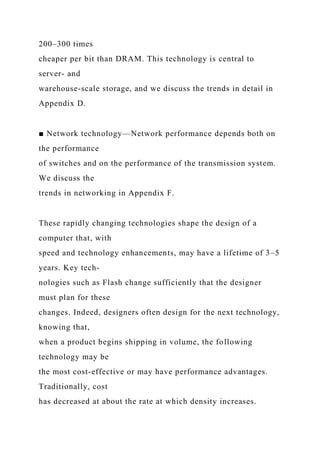

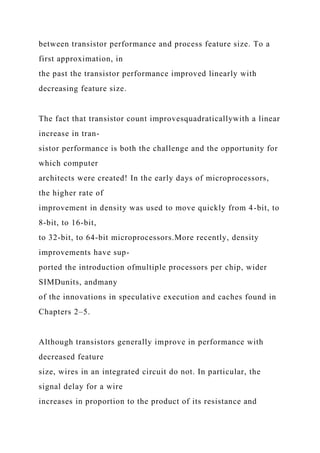

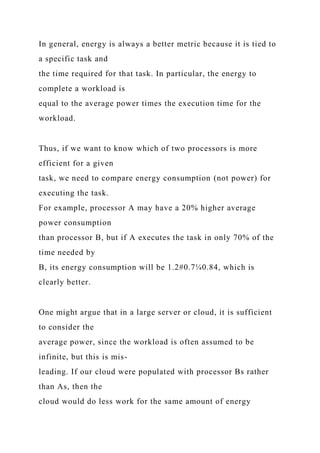

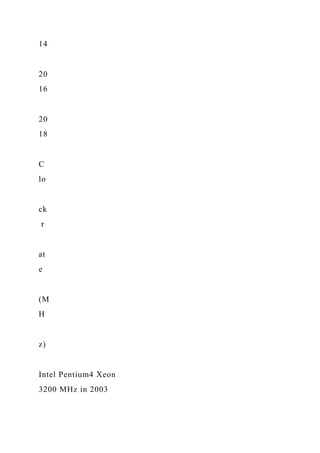

![opcodeimm [4:0]

imm [4:1|11]

rs1

rs1

rs2

rs2

funct3

funct3

imm [11:5]

opcoderdrs1 funct3imm [11:0]

opcoderdimm [31:12]

J-typeopcoderdimm [20|10:1|11|19:12]](https://image.slidesharecdn.com/computerarchitectureformulas1-221225184204-817ac276/85/Computer-Architecture-Formulas1-CPU-time-Instru-docx-118-320.jpg)

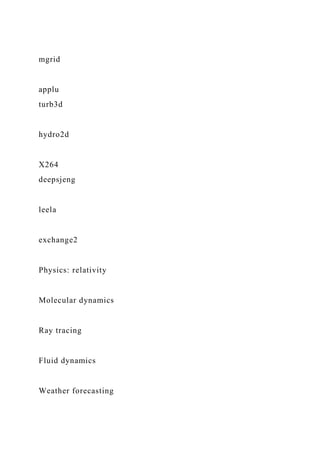

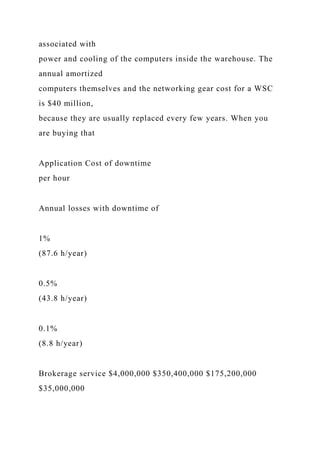

![B-typeopcodeimm [10:5]imm [12]

Figure 1.7 The base RISC-V instruction set architecture

formats. All instructions are 32 bits long. The R format is for

integer register-to-register operations, such as ADD, SUB, and

so on. The I format is for loads and immediate oper-

ations, such as LD and ADDI. The B format is for branches and

the J format is for jumps and link. The S format is for

stores. Having a separate format for stores allows the three

register specifiers (rd, rs1, rs2) to always be in the same

location in all formats. The U format is for the wide immediate

instructions (LUI, AUIPC).

16 ■ Chapter One Fundamentals of Quantitative Design and

Analysis

The other challenges facing the computer architect beyond ISA

design are par-

ticularly acute at the present, when the differences among

instruction sets are small

and when there are distinct application areas. Therefore,

starting with the fourth

edition of this book, beyond this quick review, the bulk of the](https://image.slidesharecdn.com/computerarchitectureformulas1-221225184204-817ac276/85/Computer-Architecture-Formulas1-CPU-time-Instru-docx-119-320.jpg)

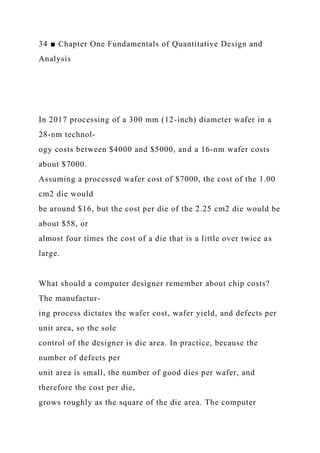

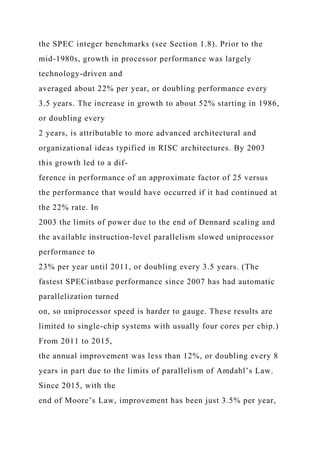

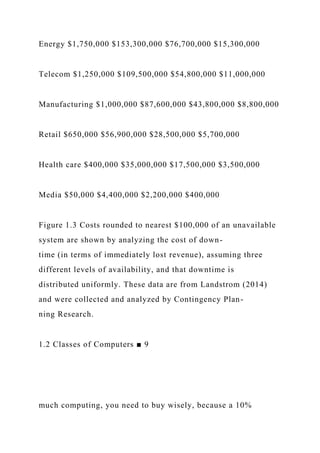

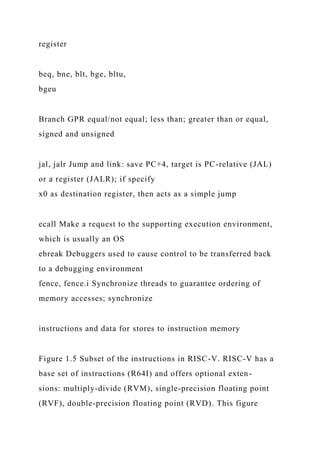

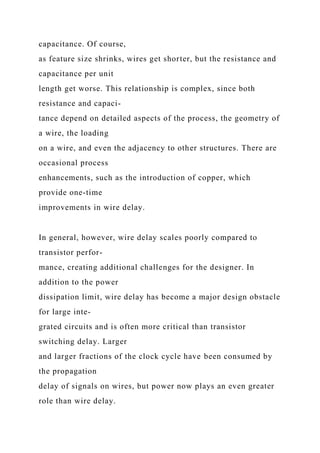

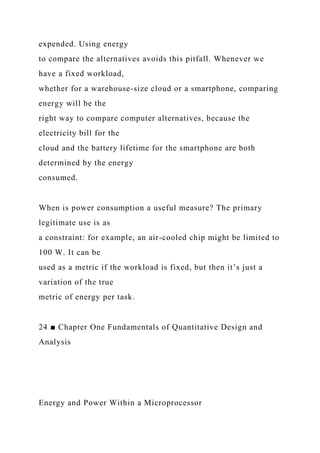

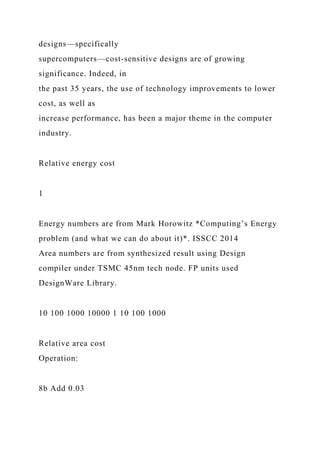

![3495

1640

7700

N/A

N/A

Figure 1.13 Comparison of the energy and die area of arithmetic

operations and energy cost of accesses to SRAM

and DRAM. [Azizi][Dally]. Area is for TSMC 45 nm technology

node.

1.6 Trends in Cost ■ 29

Textbooks often ignore the cost half of cost-performance

because costs change,

thereby dating books, and because the issues are subtle and

differ across industry

segments. Nevertheless, it’s essential for computer architects to](https://image.slidesharecdn.com/computerarchitectureformulas1-221225184204-817ac276/85/Computer-Architecture-Formulas1-CPU-time-Instru-docx-174-320.jpg)