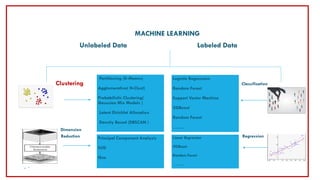

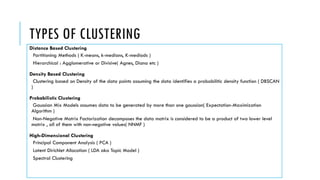

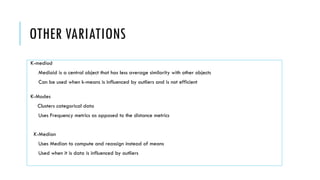

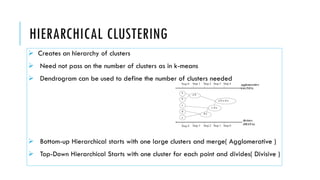

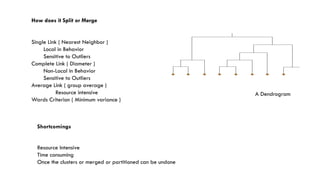

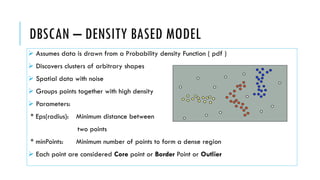

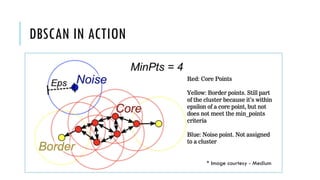

1. Clustering is an unsupervised machine learning technique that groups unlabeled data points into clusters based on similarity. The main families are partitioning methods such as k-means, hierarchical clustering, density-based clustering, and probabilistic clustering.
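To make the idea of grouping unlabeled points by similarity concrete, here is a minimal sketch of a partitioning method (k-means, Lloyd's algorithm) in plain Python. The function name `kmeans`, the toy points, and the choice to seed the centroids with the first `k` points are assumptions made for illustration; they are not taken from the slides, and practical implementations usually use a smarter initialization.

```python
import math

def kmeans(points, k, iterations=20):
    """Illustrative Lloyd's algorithm on a list of 2-D (x, y) tuples."""
    # Assumption: the first k points are the initial centroids. This keeps the
    # sketch deterministic; it is not a recommended initialization in general.
    centroids = [points[i] for i in range(k)]
    labels = [0] * len(points)
    for _ in range(iterations):
        # Assignment step: each point joins its nearest centroid (Euclidean distance).
        labels = [min(range(k), key=lambda c: math.dist(p, centroids[c]))
                  for p in points]
        # Update step: each centroid moves to the mean of its assigned points.
        new_centroids = []
        for c in range(k):
            members = [p for p, lab in zip(points, labels) if lab == c]
            if members:
                new_centroids.append(tuple(sum(v) / len(members) for v in zip(*members)))
            else:
                new_centroids.append(centroids[c])  # keep an empty cluster's centroid
        if new_centroids == centroids:
            break  # converged: no centroid moved
        centroids = new_centroids
    return labels, centroids

# Hypothetical toy data: two visibly separated groups, no labels given.
points = [(1.0, 1.0), (8.0, 8.0), (1.2, 0.8), (0.9, 1.1), (8.2, 7.9), (7.8, 8.1)]
labels, centroids = kmeans(points, k=2)
print(labels)
```

Note that `k` must be supplied up front, which is one of the main practical limitations of partitioning methods.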

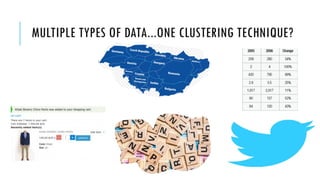

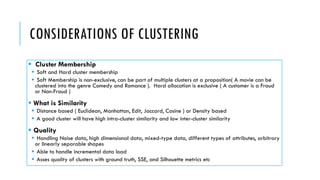

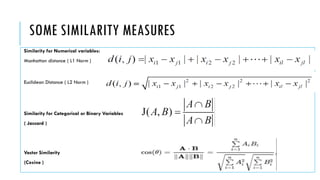

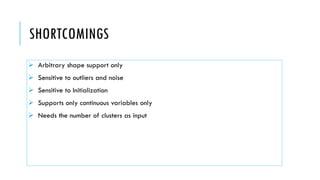

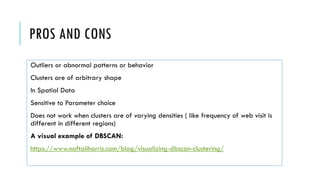

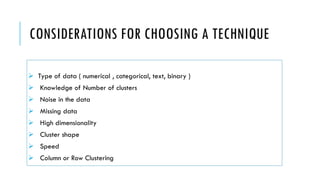

2. When choosing a clustering technique, factors to consider include the type of data, whether the number of clusters is known, the presence of noise or missing data, dimensionality of the data, shape of the clusters, and speed/scalability needs.
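Two of these factors, an unknown number of clusters and the presence of noise, are where density-based methods tend to help. The following is a minimal sketch of DBSCAN in plain Python, under the assumption of small 2-D data and a brute-force neighbor search. The names `dbscan`, `eps`, and `min_pts`, and the toy points, are illustrative choices rather than anything specified in the slides.

```python
import math

NOISE = -1  # label used for points that belong to no cluster

def dbscan(points, eps, min_pts):
    """Illustrative DBSCAN: returns one label per point, NOISE for outliers."""
    labels = [None] * len(points)  # None means "not visited yet"

    def neighbors(i):
        # Brute-force search: every point within eps of point i (including i itself).
        return [j for j, q in enumerate(points) if math.dist(points[i], q) <= eps]

    cluster = -1
    for i in range(len(points)):
        if labels[i] is not None:
            continue
        seeds = neighbors(i)
        if len(seeds) < min_pts:
            labels[i] = NOISE  # not a core point; may later become a border point
            continue
        cluster += 1  # point i is a core point, so it starts a new cluster
        labels[i] = cluster
        queue = [j for j in seeds if j != i]
        while queue:
            j = queue.pop()
            if labels[j] == NOISE:
                labels[j] = cluster  # border point: joins the cluster, is not expanded
            if labels[j] is not None:
                continue
            labels[j] = cluster
            nbrs = neighbors(j)
            if len(nbrs) >= min_pts:
                queue.extend(nbrs)  # j is also a core point, so keep growing
    return labels

# Hypothetical toy data: two dense squares plus one isolated point.
points = [(0, 0), (0, 1), (1, 0), (1, 1),
          (10, 10), (10, 11), (11, 10), (11, 11),
          (5, 5)]
labels = dbscan(points, eps=1.5, min_pts=3)
print(labels)
```

The number of clusters is never passed in; it falls out of `eps` and `min_pts`, and the isolated point is reported as noise instead of being forced into a cluster. The trade-off is that results depend on those two parameters, and the brute-force search here scales quadratically with the number of points.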

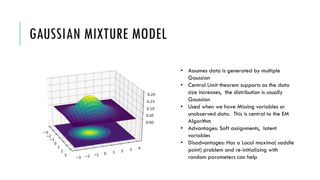

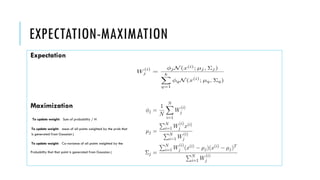

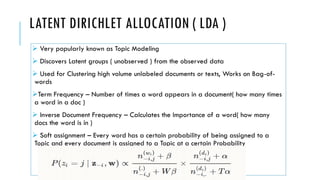

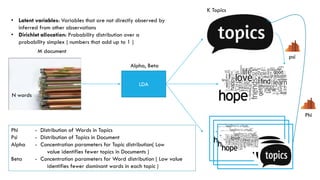

3. Popular clustering algorithms include k-means, hierarchical clustering, DBSCAN, Gaussian mixture models, and Latent Dirichlet Allocation (LDA) for text clustering. Each has its own pros and cons depending