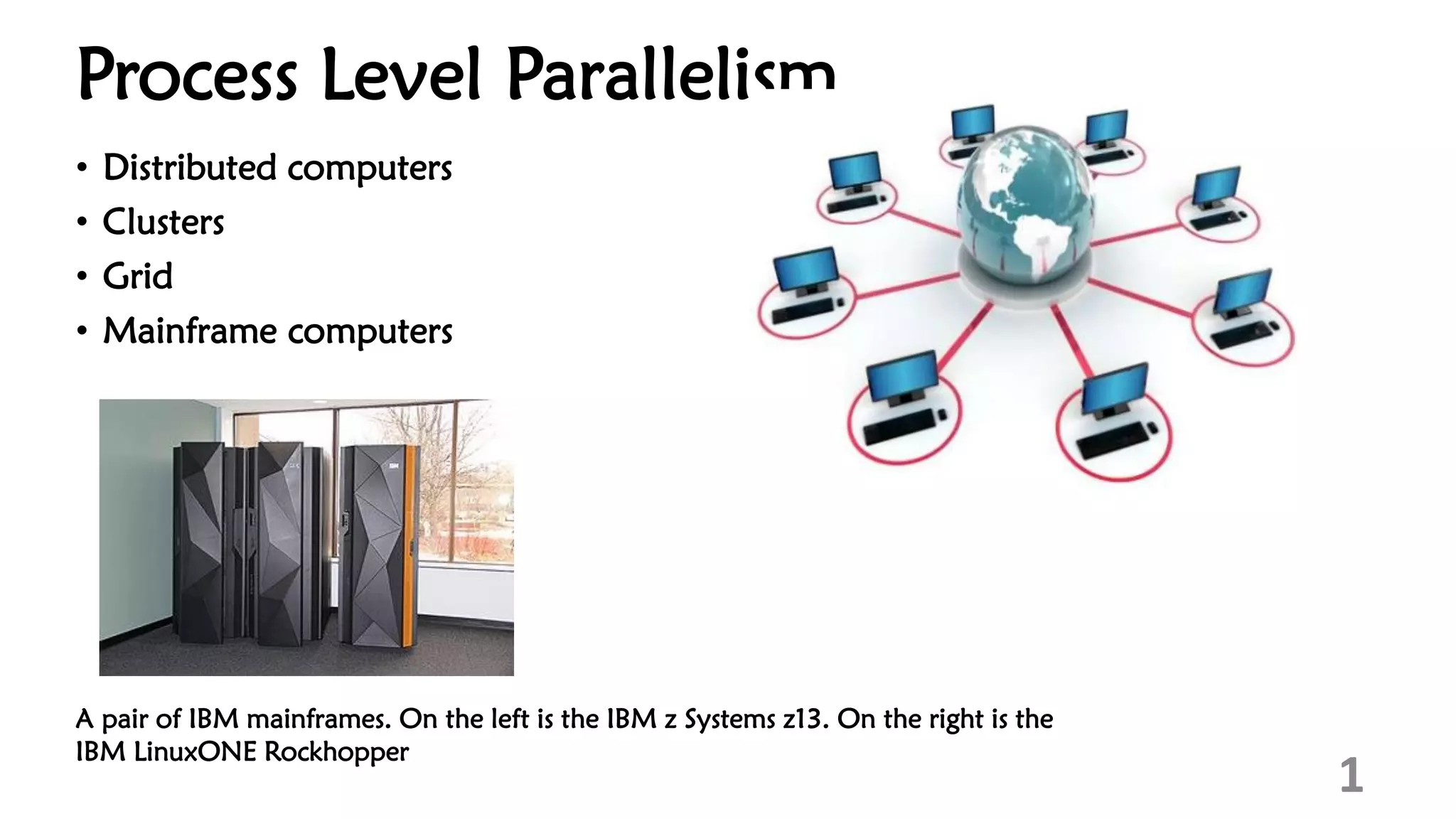

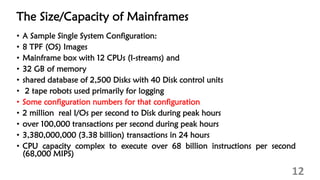

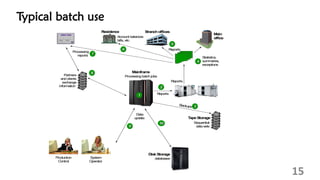

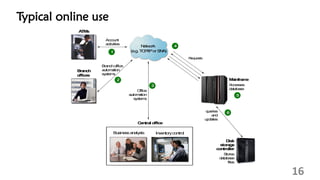

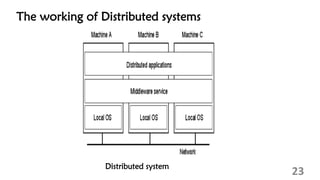

The document surveys process-level parallelism as delivered by distributed computers: clusters, grids, and mainframes. In a distributed computing system, the processing elements are connected by a network, which makes the system highly scalable; computer networks and network applications are given as examples. The document then summarizes three approaches: cluster computing, which groups connected computers; massively parallel computing, which relies on specialized interconnect networks; and grid computing, which draws on spare computing resources across administrative domains. Mainframe computers are described as the platform for critical enterprise applications that require high availability, security, and bulk data processing.
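As a rough illustration of process-level parallelism, the sketch below splits a job into independent chunks and hands each chunk to a separate operating-system process. It is a single-machine stand-in, not a cluster or grid setup: on a real distributed system the workers would sit on different nodes and exchange data over the network. The function names (`split_into_chunks`, `partial_sum_of_squares`, `parallel_sum_of_squares`) and the sum-of-squares workload are assumptions made for this example and do not come from the summarized document.

```python
from concurrent.futures import ProcessPoolExecutor


def split_into_chunks(data, n_chunks):
    """Split data into n_chunks contiguous slices of nearly equal size."""
    size, extra = divmod(len(data), n_chunks)
    chunks, start = [], 0
    for i in range(n_chunks):
        # The first `extra` chunks receive one additional element.
        end = start + size + (1 if i < extra else 0)
        chunks.append(data[start:end])
        start = end
    return chunks


def partial_sum_of_squares(chunk):
    """Work done independently by one process (hypothetical workload)."""
    return sum(x * x for x in chunk)


def parallel_sum_of_squares(data, n_workers=4):
    """Distribute the chunks over worker processes and combine the results."""
    chunks = split_into_chunks(data, n_workers)
    with ProcessPoolExecutor(max_workers=n_workers) as pool:
        # Each chunk is sent to a worker process; partial results come back
        # to the parent, which performs the final reduction.
        return sum(pool.map(partial_sum_of_squares, chunks))


if __name__ == "__main__":
    numbers = list(range(1_000))
    print(parallel_sum_of_squares(numbers))
```

The same split, compute, and combine pattern is what scales out when the worker pool is replaced by networked machines; the cost that this local sketch hides is the communication between nodes.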