This document discusses distributed system models and enabling technologies for scalable computing over the Internet. It covers the evolution of computing from centralized to distributed models utilizing multiple computers over the Internet. Key topics include high-performance computing, high-throughput computing, different levels of parallelism, innovative applications, and new paradigms like the Internet of Things. Computer clusters, virtualization, and their design are also summarized.

![8

Computing delivered as a utility can be defined as “on demand delivery of infrastructure,

applications, and business processes in a security-rich, shared, scalable, and based computer

environment over the Internet for a fee ”

Hardw are

Distributed Computing

Systems Management

2.1.4 SOA, Web Services, Web 2.0, and Mashups

The emergence of Web services (WS) open standards has significantly contributed

to advances in the domain of software integration [12]. Web services

can glue together applications running on different messaging product platforms,

enabling information from one application to be made available to

others, and enabling internal applications to be made available over the

Internet.

WS standards have been created on top of existing ubiquitous technologies such as HTTP and

XML, thus providing a common mechanism for delivering services, making them ideal for

implementing a service-oriented architecture(SOA). The purpose of a SOA is to address

requirements of loosely coupled, standards-based, and protocol-independent distributed

computing. In a SOA, software resources are packaged as “services,” which are well-defined,

selfcontained modules that provide standard business functionality and are independent of the

state or context of other services.

2.1.4 Grid Computing

Grid computing enables aggregation of distributed resources and transparently access to them.

Most production grids such as TeraGrid and EGEE seek to share compute and storage resources

distributed across different administrative domains, with their main focus being speeding up a

broad range of scientific applications, such as climate modeling, drug design, and protein

analysis.

2.1.5 Utility Computing

large grid installations have faced new problems, such as excessive spikes in demand for

resources coupled with strategic and adversarial behavior by users. Initially, grid resource

management techniques did not ensure fair and equitable access to resources in many systems.

Utility &

Grid

Computing

SOA

Web 2.0

Web Services

Mashups

Autonomic

Computing

Data Center

Automation

Hardw are

Virtualizatio

n

Multi-core

chips

Cloud

Computi

ng](https://image.slidesharecdn.com/cloudcomputing-140704125117-phpapp02/85/Cloud-computing-8-320.jpg)

![9

Traditional metrics (throughput, waiting time, and slowdown) failed to capture the more subtle

requirements of users. There were no real incentives for users to be flexible about resource

requirements or job deadlines, nor provisions to accommodate users with urgent work .

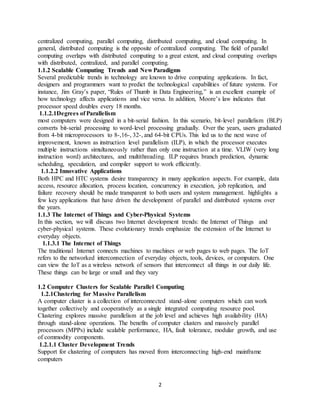

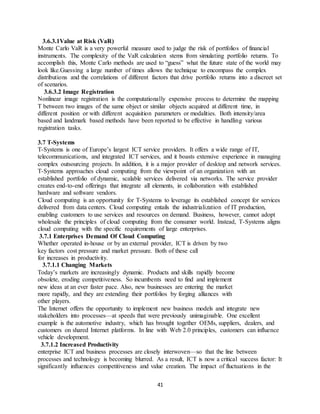

2.1.6 Hardware Virtualization

Cloud computing services are usually backed by large-scale data centers composed of thousands

of computers. Such data centers are built to serve many users and host many disparate

applications. For this purpose, hardware virtualization can be considered as a perfect fit to

overcome most operational issues of data center building and maintenance.

.

. Virtual Machine Monitor (Hypeor)

VMWare ESXi. VMware is a pioneer in the virtualization market. Its ecosystem

of tools ranges from server and desktop virtualization to high-level

management tools [24]. ESXi is a VMM from VMWare. It is a bare-metal

hypervisor, meaning that it installs directly on the physical server, whereas

others may require a host operating system.

Xen. The Xen hypervisor started as an open-source project and has served as a

base to other virtualization products, both commercial and open-source. It has

pioneered the para-virtualization concept, on which the guest operating system,

by means of a specialized kernel, can interact with the hypervisor, thus

significantly improving performance.

KVM. The kernel-based virtual machine (KVM) is a Linux virtualization

subsystem. Is has been part of the mainline Linux kernel since version 2.6.20,

thus being natively supported by several distributions. In addition, activities

such as memory management and scheduling are carried out by existing kernel .

2.1.7 Virtual Appliances and the Open Virtualization Format

An application combined with the environment needed to run it (operating

system, libraries, compilers, databases, application containers, and so forth) is

referred to as a “virtual appliance.” Packaging application environments in the

Virtual Machine NVirtual Machine 2Virtual Machine 1

User software User software

Data

Base

Web

Server

Email Server Facebook

App

Java Ruby

on

Rails

User software

App A App X

App B App Y

Windows Linux Guest OS

Virtual Machine Monitor (Hypervisor)

Hardware](https://image.slidesharecdn.com/cloudcomputing-140704125117-phpapp02/85/Cloud-computing-9-320.jpg)

![10

shape of virtual appliances eases software customization, configuration, and

patching and improves portability. Most commonly, an appliance is shaped as

a VM disk image associated with hardware requirements, and it can be readily

deployed in a hypervisor.

2.1.8 Autonomic Computing

The increasing complexity of computing systems has motivated research onautonomic

computing, which seeks to improve systems by decreasing humaninvolvement in their operation.

In other words, systems should managethemselves, with high-level guidance from humans.

2.2 Migrating Into A Cloud

Cloud computing has been a hotly debated and discussed topic amongst IT professionals and

researchers both in the industry and in academia. There are intense discussions on several blogs,

in Web sites, and in several research efforts. This also resulted in several entrepreneurial efforts

to help leverage and migrate into the cloud given the myriad issues, challenges, benefits, and

limitations and lack of comprehensive understanding of what cloud computing can do. On the

one hand, there were these large cloud computing IT vendors like Google, Amazon, and

Microsoft, who had started offering cloud computing services on what seemed like a

demonstration and trial basis though not explicitly mentioned.

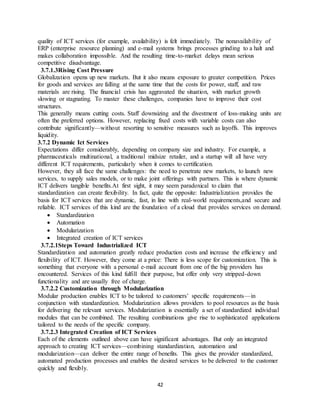

2.2.1 The Promise of the Cloud

Most users of cloud computing services offered by some of the large-scale data centers are least

bothered about the complexities of the underlying systems or their functioning. More so given

the heterogeneity of either the systems or the software running on them. They were most

impressed by the simplicity,uniformity, and ease of use of the Cloud Computing Service

abstractions. In small and medium enterprises, cloud computing usage for all additional cyclical

IT needs has yielded substantial and significant economic savings. Many such success stories

have been documented and discussed on the Internet. This economics and the associated trade-

offs, of leveraging the cloud computing services, now popularly called “cloud economics,” for

satisfying enterprise’s

2.2.2 The Cloud Service Offerings and Deployment Models

Cloud computing has been an attractive proposition both for the CFO and the CTO of an

enterprise primarily due its ease of usage. This has been achieved by large data center service

vendors or now better known as cloud service vendors again primarily due to their scale of

Cloudonomics

Technology

• ‘Pay per use’ – Low er Cost Barriers

• On Demand Resources –Autoscaling

• Capex vs OPEX – No capital expenses (CAPEX) and only operational expenses

OPEX.

• SLA driven operations – Much Low er TCO

• Attractive NFR support: Availability, Reliability

• ‘Infinite’ Elastic availability – Compute/Storage/Bandw idth

• Automatic Usage Monitoring and Metering

• Jobs /Tasks Virtualized and Transparently ‘Movable’

• Integration and interoperability ‘support’ for hybrid ops

• Transparently encapsulated & abstracted IT features.

IaaS

• Abstract Compute/Storage/Bandwidth Resources

• Amazon Web Services[10,9] – EC2, S3, SDB, CDN, CloudWatch](https://image.slidesharecdn.com/cloudcomputing-140704125117-phpapp02/85/Cloud-computing-10-320.jpg)

![17

integration, OpSource helps its customers grow their SaaS application and increase customer

retention.

The Platform Architecture. OpSource Connect is made up of key features

including

OpSource Services Bus

OpSource Service Connectors

OpSource Connect Certified Integrator Program

OpSource Connect ServiceXchange

OpSource Web Services Enablement Program.

2.3.8. SnapLogic

SnapLogic is a capable, clean, and uncluttered solution for data integration that can be deployed

in enterprise as well as in cloud landscapes. The free community edition can be used for the most

common point-to-point data integration tasks, giving a huge productivity boost beyond custom

code. SnapLogic professional edition is a seamless upgrade that extends the power of this

solution with production management, increased capacity, and multi-user features at a price that

won’t drain the budget, which is getting shrunk due to the economic slump across the globe.

Changing data sources. SaaS and on-premise applications, Web APIs, and

RSS feeds

Changing deployment options. On-premise, hosted, private and public

cloud platforms

Changing delivery needs. Databases, files, and data services

2.4 The Enterprise Cloud Computing Paradigm

Cloud computing is still in its early stages and constantly undergoing changes as new vendors,

offers, services appear in the cloud market. This evolution of the cloud computing model is

driven by cloud providers bringing new services to the ecosystem or revamped and efficient

exiting services primarily triggered by the ever changing requirements by the consumers.

However, cloud computing is predominantly adopted by start-ups or SMEs so far, and wide-

scale enterprise adoption of cloud computing model is still in its infancy. Enterprises are still

carefully contemplating the various usage models where cloud computing can be employed to

support their business operations.

2.4.1 Background

According to NIST [1], cloud computing is composed of five essential characteristics: on

demand self-service, broad network access, resource pooling, rapid elasticity, and measured

service. The ways in which these characteristics are manifested in an enterprise context vary

according to the deployment model employed.

Relevant Deployment Models for Enterprise Cloud Computing

There are some general cloud deployment models that are accepted by the majority of cloud

stakeholders today, as suggested by the references and and discussed in the following:

Public clouds are provided by a designated service provider for general public under a

utility based pay-per-use consumption model. The cloud resources are hosted generally

on the service provider’s premises. Popular examples of public clouds are Amazon’s

AWS (EC2, S3 etc.), Rackspace Cloud Suite, and Microsoft’s Azure Service Platform.

Private clouds are built, operated, and managed by an organization for its internal use

only to support its business operations exclusively. Public, private, and government](https://image.slidesharecdn.com/cloudcomputing-140704125117-phpapp02/85/Cloud-computing-17-320.jpg)

![18

organizations worldwide are adopting this model to exploit the cloud benefits like

flexibility, cost reduction, agility and so on.

Virtual private clouds are a derivative of the private cloud deployment model but are

further characterized by an isolated and secure segment of resources, created as an

overlay on top of public cloud infrastructure using advanced network virtualization

capabilities. Some of the public cloud vendors that offer this capability include Amazon

Virtual Private Cloud, OpSource Cloud, and Skytap Virtual Lab .

Community clouds are shared by several organizations and support a specific

community that has shared concerns (e.g., mission, security requirements, policy, and

compliance considerations). They may be managed by the organizations or a third party

and may exist on premise or off premise . One example of this is OpenCirrus [6] formed

by HP, Intel, Yahoo, and others.

Managed clouds arise when the physical infrastructure is owned by and/or physically

located in the organization’s data centers with an extension of management and security

control plane controlled by the managed service provider . This deployment model isn’t

widely agreed upon, however, some vendors like ENKI and NaviSite’s NaviCloud offers

claim to be managed cloud offerings.

Hybrid clouds are a composition of two or more clouds (private, community, or public)

that remain unique entities but are bound together by standardized or proprietary

technology that enables data and application

Adoption and Consumption Strategies

The selection of strategies for enterprise cloud computing is critical for IT

capability as well as for the earnings and costs the organization experiences,

motivating efforts toward convergence of business strategies and IT. Some

critical questions toward this convergence in the enterprise cloud paradigm are

as follows:

Will an enterprise cloud strategy increase overall business value?

Are the effort and risks associated with transitioning to an enterprise

cloud strategy worth it?

Which areas of business and IT capability should be considered for the

enterprise cloud?

Which cloud offerings are relevant for the purposes of an organization?

How can the process of transitioning to an enterprise cloud strategy be

piloted and systematically executed?](https://image.slidesharecdn.com/cloudcomputing-140704125117-phpapp02/85/Cloud-computing-18-320.jpg)

![21

physical machines is traditionally a time consuming process with low assurance on deployment’s

time and cost.

3.1.1 Background And RelatedWork

In this section, we will have a quick look at previous work, give an overview about virtualization

technology, public cloud, private cloud, standardization efforts, high availability through the

migration, and provisioning of virtual machines, and shed some lights on distributed

management’s tools.

3.1.1.1 Virtualization Technology Overview

Virtualization has revolutionized data center’s technology through a set of techniques and tools

that facilitate the providing and management of the dynamic data center’s infrastructure. It has

become an essential and enabling technology of cloud computing environments. Virtualization

can be defined as the abstraction of the four computing resources (storage, processing power,

memory, and network or I/O). It is conceptually similar to emulation, where a system pretends to

be another system, whereas virtualization is a system pretending to be two or more of the same

system

3.1.1.2 Public Cloud and Infrastructure Services

Public cloud or external cloud describes cloud computing in a traditional mainstream sense,

whereby resources are dynamically provisioned via publicly accessible Web applications/Web

services (SOAP or RESTful interfaces)from an off-site third-party provider, who shares

resources and bills on a fine-grained utility computing basis [3], the user pays only for the

capacity of the provisioned resources at a particular time.

3.1.1.3 Private Cloud and Infrastructure Services

A private cloud aims at providing public cloud functionality, but on private resources, while

maintaining control over an organization’s data and resources to meet security and governance’s

requirements in an organization. Private cloud exhibits a highly virtualized cloud data center

Virtual Machine Virtual Machine

Virtual Machine

Virtual Machine Virtual Machine

Virtualization Layer (VMM or Hypervisor)

Physical Server Layer

Workload

1

Workload

2

Workload

3

Workload

n

Guest OS Guest OS Guest OS Guest OS](https://image.slidesharecdn.com/cloudcomputing-140704125117-phpapp02/85/Cloud-computing-21-320.jpg)

![23

migration, hot/life migration, cold/regular migration, and live storage migration of a virtual

machine [20]. In this process, all key machines’ components, such as CPU, storage disks,

networking, and memory, are completely virtualized, thereby facilitating the entire state of a

virtual machine to be captured by a set of easily moved data files. We will cover some of the

migration’s techniques that most virtualization tools provide as a feature.

Migrations Techniques

Live Migration and High Availability. Live migration (which is also called hot or real-time

migration) can be defined as the movement of a virtual machine from one physical host to

another while being powered on. When it is properly carried out, this process takes place without

any noticeable effect from the end user’s point of view (a matter of milliseconds). One of the

most significant advantages of live migration is the fact that it facilitates proactive maintenance

in case of failure, because the potential problem can be resolved before the disruption of service

occurs.

Live Migration Anatomy, Xen Hypervisor Algorithm. In this section we will explain live

migration’s mechanism and how memory and virtual machine states are being transferred,

through the network, from one host A to another host B the Xen hypervisor is an example for

this mechanism.

3.1.3 Vm Provisioning And Migration In Action

Now, it is time to get into business with a real example of how we can manage the life cycle,

provision, and migrate a virtual machine by the help of one of the open source frameworks used

to manage virtualized infrastructure. Here, we will use ConVirt (open source framework for the

management of open source virtualization like Xen and KVM , known previously as XenMan).

Deployment Scenario. ConVirt deployment consists of at least one ConVirt workstation, where

ConVirt is installed and ran, which provides the main console for managing the VM life cycle,

managing images, provisioning new VMs, monitoring machine resources, and so on. There are

two essential deployment scenarios for ConVirt: A, basic configuration in which the Xen or

KVM virtualization platform is on the local machine, where ConVirt is already installed; B, an

advanced configuration in which the Xen or KVM is on one or more remote servers. The

scenario in use here is the advanced one.](https://image.slidesharecdn.com/cloudcomputing-140704125117-phpapp02/85/Cloud-computing-23-320.jpg)

![48

Unit-IV

Monitoring ,Management and Applications

4.1 An Architecture For Federated Cloud Computing

Cloud computing enables companies and individuals to lease resources on demand from a

virtually unlimited pool. The “pay as you go” billing model applies charges for the actually used

resources per unit time. This way, a business can optimize its IT investment and improve

availability and scalability.

4.1.1 A Typical Use Case

As a representative of an enterprise-grade application, we have chosen to analyze SAPt systems

and to derive from them general requirements that such application might have from a cloud

computing provider.

4.1.2 SAP Systems

SAP systems are used for a variety of business applications that differ by version and

functionality [such as customer relationship management (CRM) and enterprise resource

planning (ERP)]. For a given application type, the SAP system components consist of generic

parts customized by configuration and parts custom-coded for a specific installation. Certain

SAP applications are composed of several loosely coupled systems.

4.1.3The Virtualized Data Center Use Case

Consider a data center that consolidates the operation of different types of SAP applications and

all their respective environments (e.g., test, production) using virtualization technology. The

applications are offered as a service to external customers, or, alternatively, the data center is

operated by the IT department of an enterprise for internal users (i.e., enterprise employees). A

special variation that deserves mentioning is when the data center serves an on-demand,

Software as a Service (SaaS) setup, where customers are external and where each customer

(tenant) gets the same base version of the application. However, each tenant configures and

customizes the application to suit his specific needs. It is reasonable to assume that a tenant in

this case is a small or medium business (SMB) tenant.

4.1.4 Independence

Just as in other utilities, where we get service without knowing the internals

of the utility provider and with standard equipment not specific to any provider

(e.g., telephones), for cloud computing services to really fulfill the computing as

a utility vision, we need to offer cloud computing users full independence. Users

should be able to use the services of the cloud without relying on any providerspecific

tool, and cloud computing providers should be able to manage their

infrastructure without exposing internal details to their customers or partners.

4.1.5 Isolation

Cloud computing services are, by definition, hosted by a provider that will simultaneously host

applications from many different users. For these users to move their computing into the cloud,

they need warranties from the cloud computing provider that their stuff is completely isolated

from others. Users must be ensured that their resources cannot be accessed by others sharing the

same cloud and that adequate performance isolation is in place to ensure that no other user may

possess the power to directly effect the service granted to their application. Elasticity One of the

main advantages of cloud computing is the capability to provide, or release, resources on

demand. These “elasticity” capabilities should be enacted automatically by cloud computing

providers to meet demand variations, just as electrical companies are able (under normal

operational circumstances) to automatically deal with variances in electricity consumption levels.](https://image.slidesharecdn.com/cloudcomputing-140704125117-phpapp02/85/Cloud-computing-48-320.jpg)

![52

articles in on-line forums and magazines, where many HPC users express their own opinion on

the relationship between the two paradigms [11, 28, 29, 40].

Cloud is simply presented, by its supporters, as an evolution of the grid. Some

consider grids and clouds as alternative options to do the same thing in a different

way. However, there are very few clouds on which one can build, test, or run

compute-intensive applications.

4.3.1.1 Grid and Cloud as Alternatives

Both grid and cloud are technologies that have been conceived to provide users with handy

computing resources according to their specific requirements .Grid was designed with a bottom-

up approach. Its goal is to share a hardware or a software among different organizations by

means of common protocols and policies. The idea is to deploy interoperable services in order to

allow the access to physical resources (CPU, memory, mass storage ,etc.) and to available

software utilities. Users get access to a real machine. Grid resources are administrated by their

owners. Authorized users can invoke grid services on remote machines without paying and

without service level guarantees.

4.3.1.2Grid and Cloud Integration

To understand why grids and clouds should be integrated, we have to start by considering what

the users want and what these two technologies can provide .Then we can try to understand how

cloud and grid can complement each other and why their integration is the goal of intensive

research activities . We know that a supercomputer runs faster than a virtualized resource. For

example, a LU benchmark on EC2 (the cloud platform provided by Amazon) runs slower, and

some overhead is added to start VMs . On the other hand, the probability to execute an

application in fixed time on a grid resource depends on many parameters and cannot be

guaranteed.

Integration of virtualization into existing e-infrastructures

Deployment of grid services on top of virtual infrastructures

Integration of cloud-base services in e-infrastructures

Promotion of open-source components to build clouds

Grid technology for cloud federation

4.3.2 Hpc In The Cloud: Performance-Related Issue

This section will discuss the issues linked to the adoption of the cloud paradigm in the HPC

context. In particular, we will focus on three different issues:

1. The difference between typical HPC paradigms and those of current cloud environments,

especially in terms of performance evaluation.

2. A comparison of the two approaches in order to point out their advantages and drawbacks, as

far as performance is concerned.

3. New performance evaluation techniques and tools to support HPC in cloud systems.

In light of the above, it is clear that the typical HPC user would like to know how long his

application will run on the target cluster and which configuration has the highest

performance/cost ratio. The advanced user, on the other hand,would also know if there is a way

to optimize its application so as to reduce the cost of its run without sacrificing performance. The

high-end user, who cares more for performance than for the cost to be sustained, would like

instead to know how to choose the best configuration to maximize the performance of his

application.

4.3.2.1HPC Systems and HPC on Clouds: A Performance Comparison](https://image.slidesharecdn.com/cloudcomputing-140704125117-phpapp02/85/Cloud-computing-52-320.jpg)