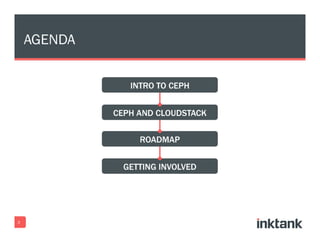

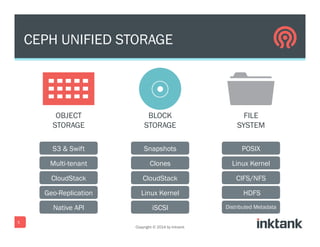

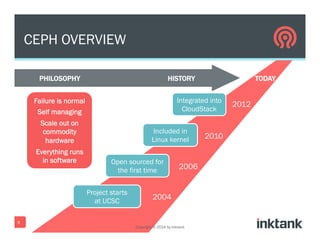

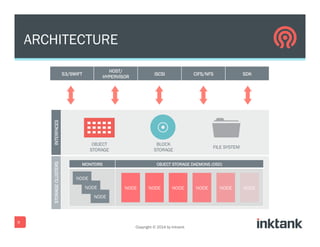

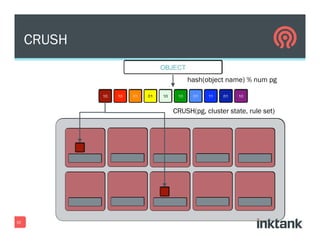

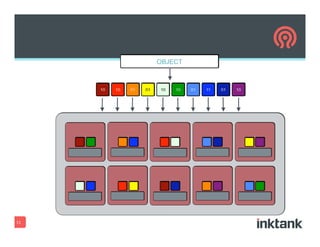

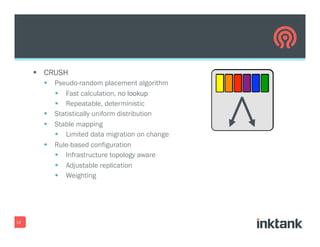

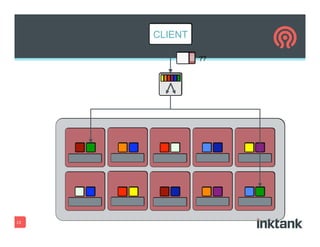

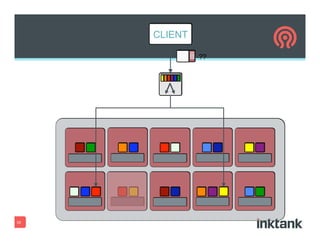

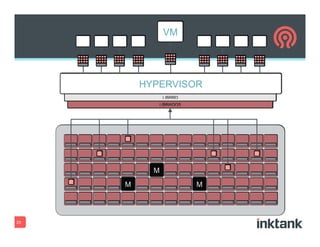

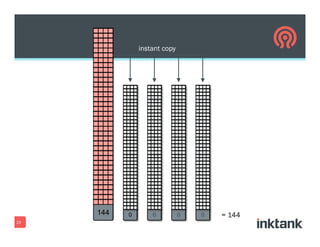

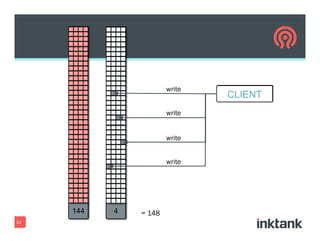

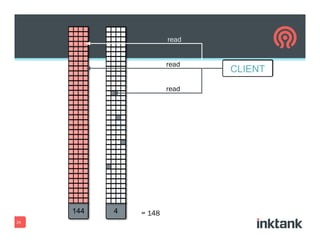

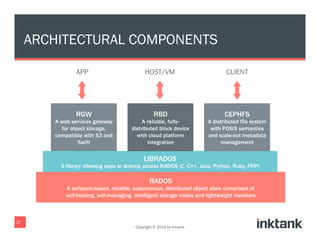

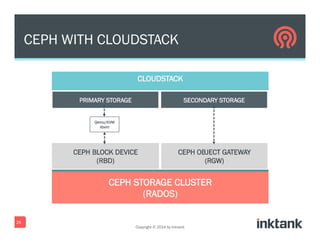

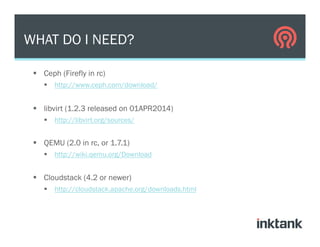

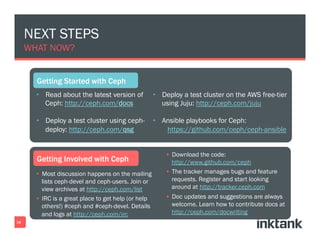

This document provides the agenda and an overview for a presentation on Ceph. It discusses Ceph's philosophy of being self-managing and scaling out on commodity hardware. The architecture uses CRUSH for pseudo-random data placement and supports file, block, and object storage. It also covers Ceph's integration with CloudStack for efficient, instant provisioning of hundreds of VMs. The next steps discussed include learning more about the latest Ceph version, deploying a test cluster, and contributing to the open source project.
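
To make the idea of pseudo-random placement concrete, here is a minimal sketch in Python. It is not CRUSH itself: it uses rendezvous (highest-random-weight) hashing as a much simpler stand-in that shares the key property, namely that any client can compute where an object lives from its name and the device list alone, with no central lookup table. The device names (`osd.0` and so on), the object name, the `place` function, and the replica count of 3 are all assumptions made for illustration.

```python
import hashlib


def _score(object_name: str, device: str) -> int:
    """Deterministic pseudo-random score for an (object, device) pair."""
    digest = hashlib.sha256(f"{object_name}:{device}".encode()).digest()
    return int.from_bytes(digest[:8], "big")


def place(object_name: str, devices: list[str], replicas: int = 3) -> list[str]:
    """Pick `replicas` distinct devices for an object.

    Rendezvous hashing: rank every device by its score for this object
    and keep the top `replicas`. This is a stand-in for CRUSH, which
    additionally accounts for device weights and failure-domain hierarchy.
    """
    ranked = sorted(devices, key=lambda d: _score(object_name, d), reverse=True)
    return ranked[:replicas]


# Hypothetical six-device cluster.
devices = [f"osd.{i}" for i in range(6)]
print(place("vm-image-0001", devices))
```

Because the placement is a pure function of the object name and the device list, every client that runs it gets the same answer, and removing a device that does not hold a given object leaves that object's placement unchanged. Real CRUSH goes further by honoring device weights and placement rules across hosts, racks, and rooms.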