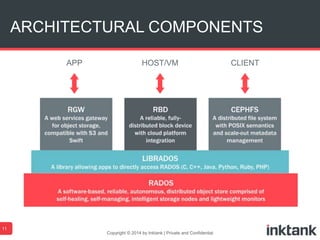

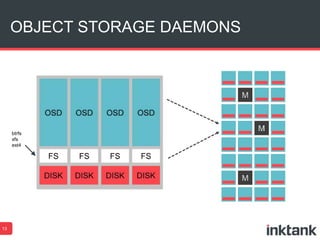

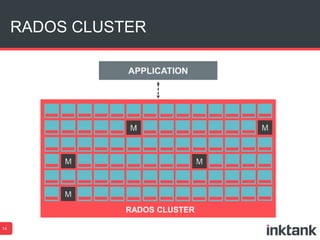

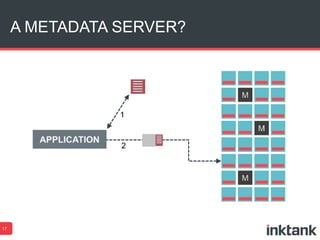

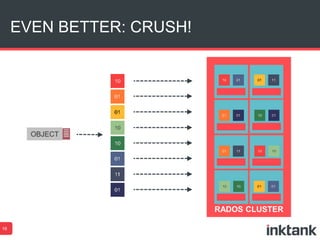

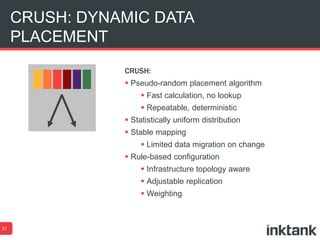

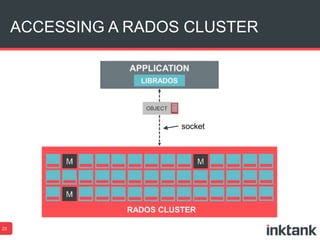

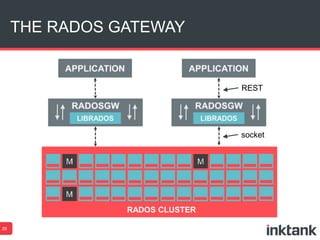

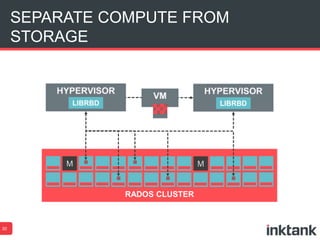

The document discusses the projected growth of stored data: by 2020, more than 15 zettabytes (ZB) will be stored, which calls for new scalable storage solutions such as Ceph. It outlines Ceph's architectural components and capabilities, including object storage daemons (OSDs) and the CRUSH algorithm for data placement. It also highlights community efforts and events around Ceph development and collaboration.
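
To make the idea of algorithmic data placement concrete, here is a minimal sketch in Python. It is not Ceph's CRUSH implementation: it uses rendezvous (highest-random-weight) hashing as a simplified stand-in, and it ignores the device weights and failure-domain hierarchy that CRUSH takes into account. The function names `place` and `_score` and the OSD labels are hypothetical, chosen only for illustration. What it shares with the approach described above is that any client can compute where an object lives from the object name and the list of storage daemons alone, without consulting a central lookup table.

```python
import hashlib


def _score(object_name: str, osd_id: str) -> int:
    """Deterministic pseudo-random score for an (object, OSD) pair."""
    digest = hashlib.sha256(f"{object_name}:{osd_id}".encode()).digest()
    return int.from_bytes(digest[:8], "big")


def place(object_name: str, osds: list[str], replicas: int = 3) -> list[str]:
    """Pick `replicas` distinct OSDs for an object via rendezvous hashing.

    Simplified stand-in for CRUSH (an assumption of this sketch):
    no weights, no hierarchy, no placement groups.
    """
    if replicas > len(osds):
        raise ValueError("not enough OSDs for the requested replica count")
    # Rank every OSD by its score for this object and keep the top ones.
    ranked = sorted(osds, key=lambda osd: _score(object_name, osd), reverse=True)
    return ranked[:replicas]


if __name__ == "__main__":
    cluster = [f"osd.{i}" for i in range(6)]  # hypothetical OSD names
    print(place("my-object", cluster))
```

Because each OSD is scored independently, removing an OSD that does not hold a given object leaves that object's placement unchanged, so only the data on the removed device needs to move. Limiting data movement when the cluster changes is also a stated goal of CRUSH, although CRUSH achieves it by different means.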