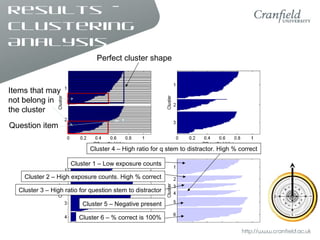

The document summarizes a pilot study analyzing 115 question items from an online export controls training. Various techniques were used to analyze the data, including exploratory data analysis, principle component analysis, and clustering analysis. The analysis identified questions with deficiencies or anomalies, indicating issues with question design. It was found that exploratory data analysis helped highlight both strong and weak question items, identifying areas for improvement. Recommendations include developing a visual dashboard to monitor question performance, applying validation rules to the authoring tool, and taking a more systematic approach to question design and alignment with learning objectives.