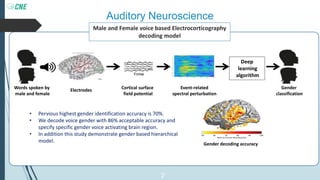

This document summarizes a study that developed a brain-robot interaction system combining human and machine intelligence. The system uses electrocorticography (ECoG) to decode voice gender with 86% accuracy and identifies the brain regions activated by gender. It also presents a hierarchical gender decoding model. The study integrated brain-computer interface (BCI) paradigms such as P300 and steady-state visual evoked potentials (SSVEP) with machine learning so that a human supervises the robot at a high level, while the robot uses its sensors and algorithms to complete tasks autonomously when possible. An experiment tested how efficiently the system could control a robot to navigate an environment and pick up objects.
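The division of labor described above, where the human sets high-level goals and the robot handles low-level execution, can be illustrated with a minimal sketch. This is not the study's implementation: the `BciCommand` type, the `choose_action` function, the goal names, and the 0.8 confidence threshold are all hypothetical, chosen only to show one plausible form of shared control.

```python
from dataclasses import dataclass
from typing import Optional, Tuple


@dataclass
class BciCommand:
    """Hypothetical decoded BCI output (e.g. from a P300 or SSVEP classifier)."""
    goal: str          # high-level goal such as "pick_up_cup"
    confidence: float  # classifier confidence in [0, 1]


def choose_action(
    command: Optional[BciCommand],
    obstacle_ahead: bool,
    current_goal: Optional[str],
    threshold: float = 0.8,  # assumed value, not taken from the study
) -> Tuple[str, Optional[str]]:
    """Return (low-level action, active goal) for one control step."""
    # Human supervision: accept a new goal only if it was decoded confidently.
    if command is not None and command.confidence >= threshold:
        current_goal = command.goal

    # Without any goal the robot waits for the human.
    if current_goal is None:
        return "idle", None

    # Robot autonomy: local sensing handles obstacles without human input.
    if obstacle_ahead:
        return "avoid_obstacle", current_goal

    return f"pursue:{current_goal}", current_goal
```

In this sketch a low-confidence decode leaves the current goal unchanged, so a noisy brain signal does not interrupt a task the robot is already carrying out on its own.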