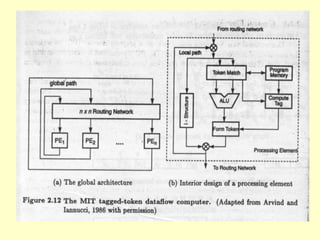

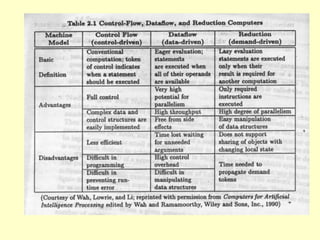

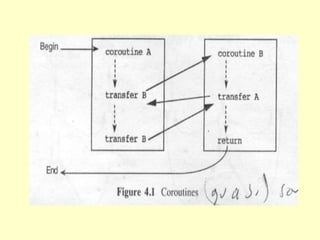

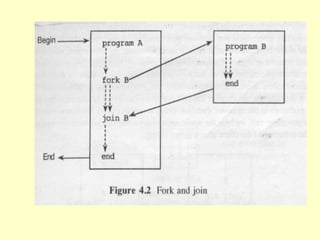

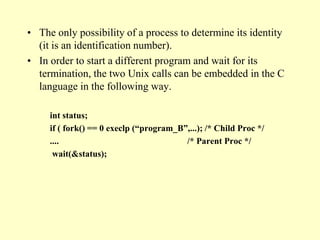

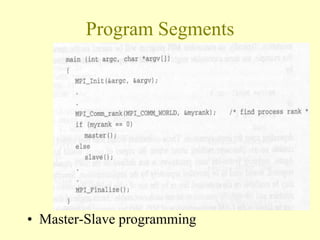

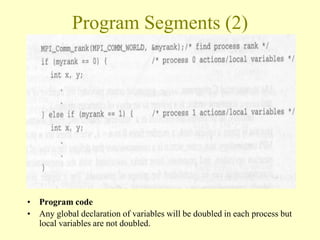

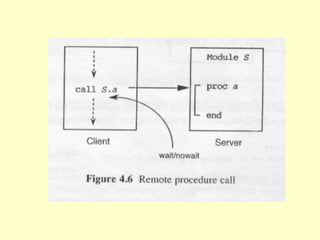

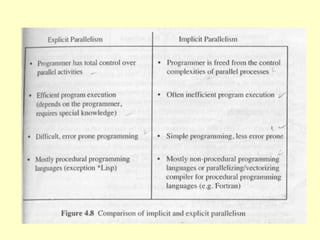

This chapter discusses three program flow mechanisms for parallel processing: control flow, data flow, and demand-driven. Control flow executes instructions sequentially under the guidance of a program counter. Data flow and demand-driven approaches expose more parallelism: a data flow instruction executes as soon as its operands are available, while a demand-driven operation is initiated only when another computation needs its result. Data flow architectures emphasize fine-grained parallelism at the instruction level and do not rely on shared memory. The chapter compares these flow mechanisms and discusses parallel programming concepts such as coroutines, fork/join, and remote procedure calls.
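The difference between data-driven and demand-driven execution can be illustrated with a small sketch. The expression `a = (b + 1) * c - d / e`, the `GRAPH` table, and the function names `data_driven` and `demand_driven` are assumptions made for illustration and are not taken from the chapter. The code simulates the firing rules sequentially and does not model real hardware.

```python
# Hypothetical dependency graph for a = (b + 1) * c - d / e.
# Each node maps to (operation, names of its operands).
GRAPH = {
    "t1": (lambda b: b + 1, ["b"]),
    "t2": (lambda t1, c: t1 * c, ["t1", "c"]),
    "t3": (lambda d, e: d / e, ["d", "e"]),
    "a": (lambda t2, t3: t2 - t3, ["t2", "t3"]),
}


def data_driven(graph, inputs):
    """Data-driven: fire every node whose operands are all available.

    Nodes that fire in the same round do not depend on each other,
    so a data flow machine could execute them in parallel.
    """
    values = dict(inputs)
    pending = set(graph)
    rounds = []
    while pending:
        ready = sorted(
            n for n in pending if all(arg in values for arg in graph[n][1])
        )
        if not ready:
            raise ValueError("some operands can never become available")
        for name in ready:
            op, args = graph[name]
            values[name] = op(*(values[arg] for arg in args))
        pending -= set(ready)
        rounds.append(ready)
    return values, rounds


def demand_driven(graph, inputs, target):
    """Demand-driven: evaluate a node only when its result is requested."""
    values = dict(inputs)
    evaluated = []

    def need(name):
        if name not in values:
            op, args = graph[name]
            values[name] = op(*[need(arg) for arg in args])
            evaluated.append(name)
        return values[name]

    return need(target), evaluated


if __name__ == "__main__":
    inputs = {"b": 1, "c": 2, "d": 6, "e": 3}
    values, rounds = data_driven(GRAPH, inputs)
    print(values["a"], rounds)
    print(demand_driven(GRAPH, inputs, "t2"))
```

In this sketch the data-driven version computes every node and groups independent nodes (`t1` and `t3`) into the same round, whereas the demand-driven version asked only for `t2` never evaluates `t3` or `a`.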