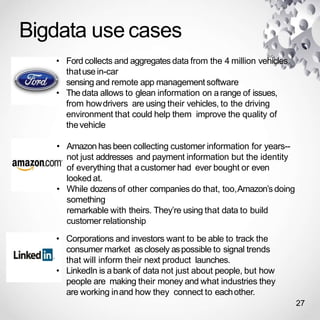

This document introduces big data, the challenges it poses, and the technologies used to manage it, including Hadoop and MapReduce. It describes the types of data involved and explains why distributed computing is needed to process large datasets efficiently. It also highlights use cases that show the impact of big data in industries such as automotive and e-commerce.
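
To make the MapReduce idea mentioned above more concrete, here is a minimal single-process sketch of the map, shuffle, and reduce phases applied to a word count. It is an illustration only, not Hadoop's API: the function names (`map_phase`, `shuffle_phase`, `reduce_phase`, `word_count`) and the sample records are hypothetical, and a real cluster would run the map and reduce steps in parallel across many machines.

```python
from collections import defaultdict


def map_phase(record):
    # Map: emit a (word, 1) pair for every word in one input record.
    return [(word.lower(), 1) for word in record.split()]


def shuffle_phase(pairs):
    # Shuffle: group all emitted values by key, as the framework would
    # do between the map and reduce steps.
    grouped = defaultdict(list)
    for key, value in pairs:
        grouped[key].append(value)
    return grouped


def reduce_phase(key, values):
    # Reduce: combine all values for one key into a single result.
    return key, sum(values)


def word_count(records):
    # Run the three phases in sequence on an in-memory list of records.
    mapped = [pair for record in records for pair in map_phase(record)]
    grouped = shuffle_phase(mapped)
    return dict(reduce_phase(key, values) for key, values in grouped.items())


# Hypothetical sample input: each string stands in for one input split.
counts = word_count(["big data needs big clusters", "data moves to compute"])
print(counts)
```

Because each call to `map_phase` depends only on its own record, and each call to `reduce_phase` depends only on one key's values, both steps could in principle be distributed across machines without changing the result. That independence is what makes the model a good fit for large datasets.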