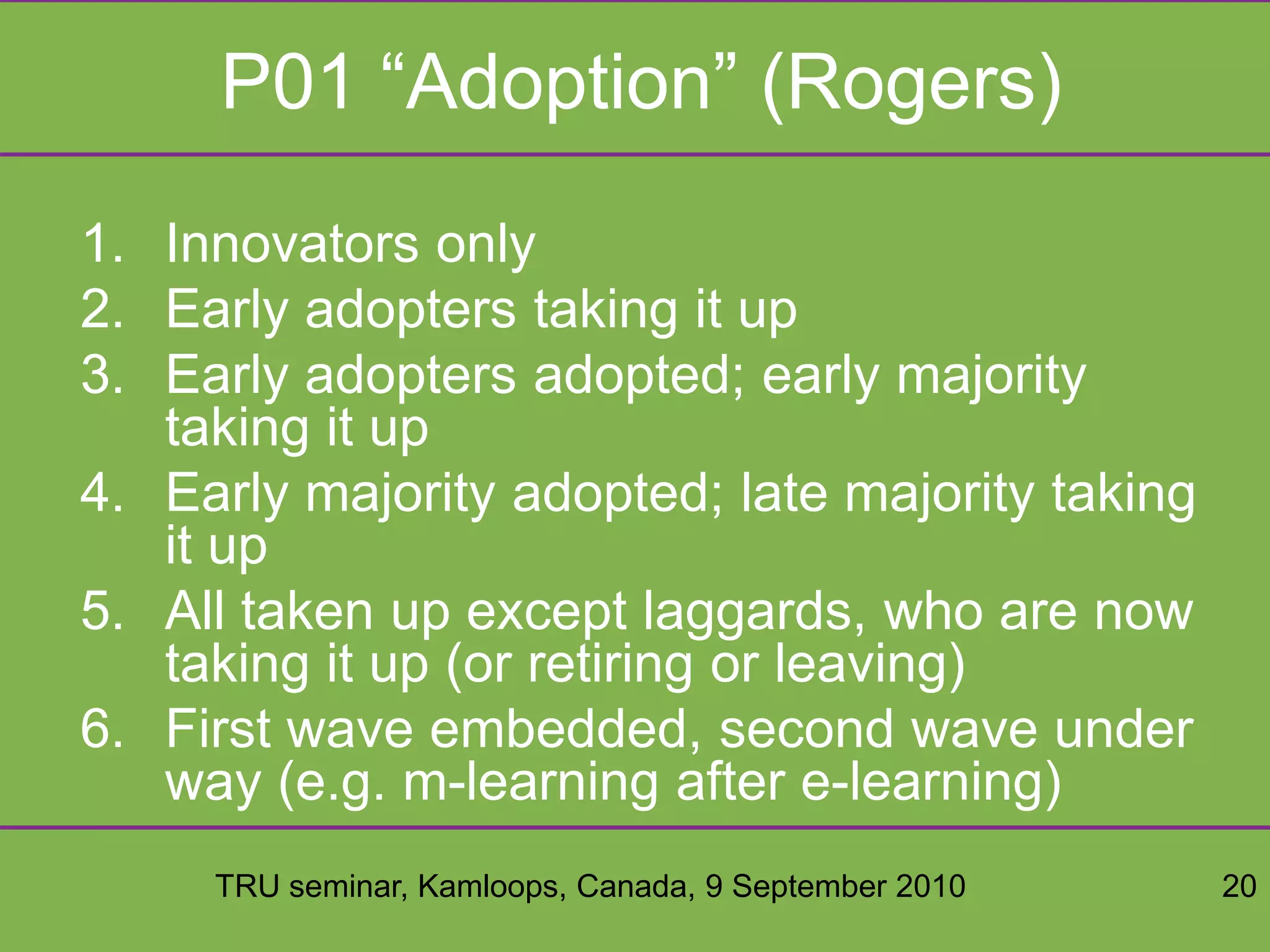

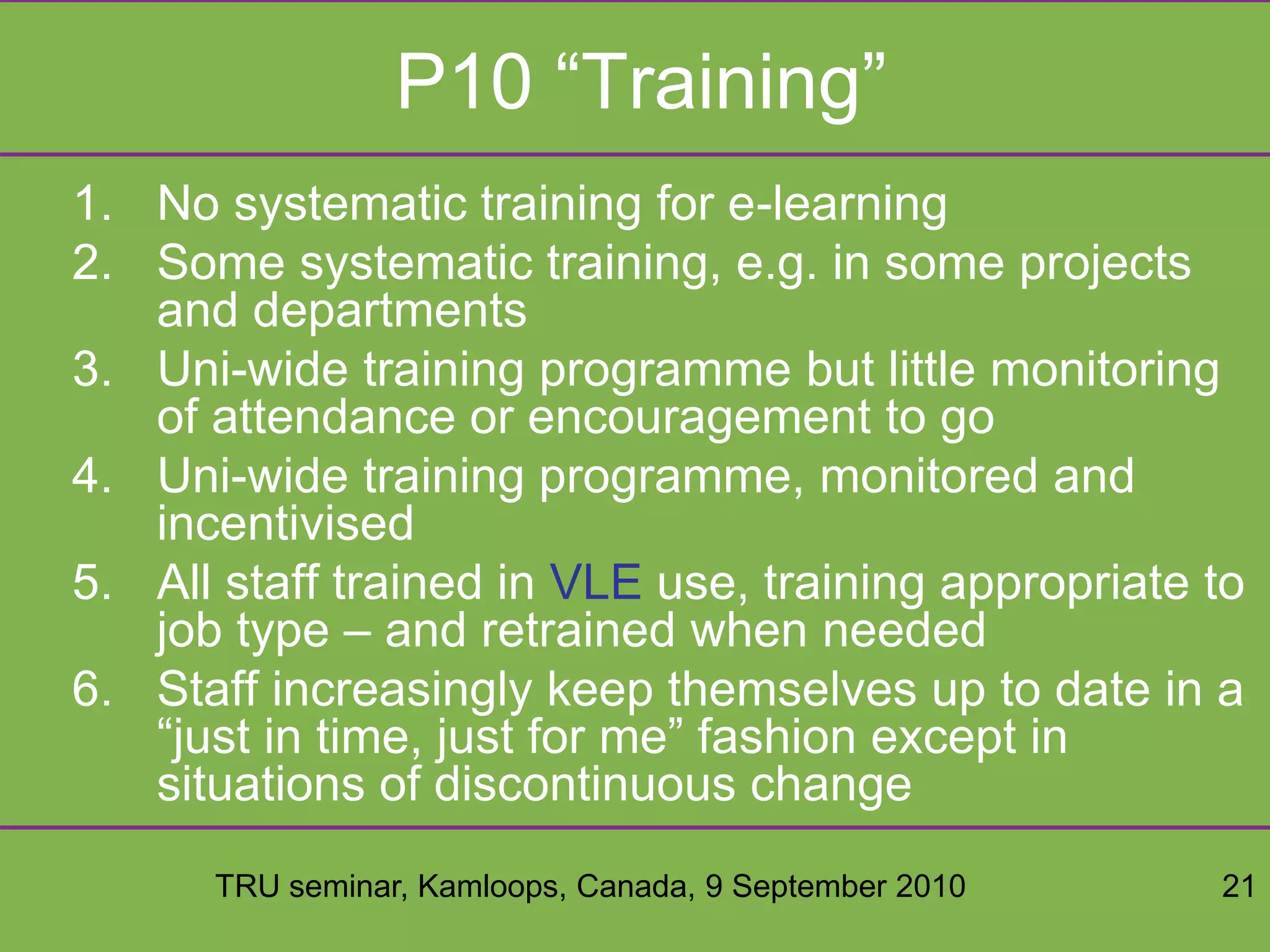

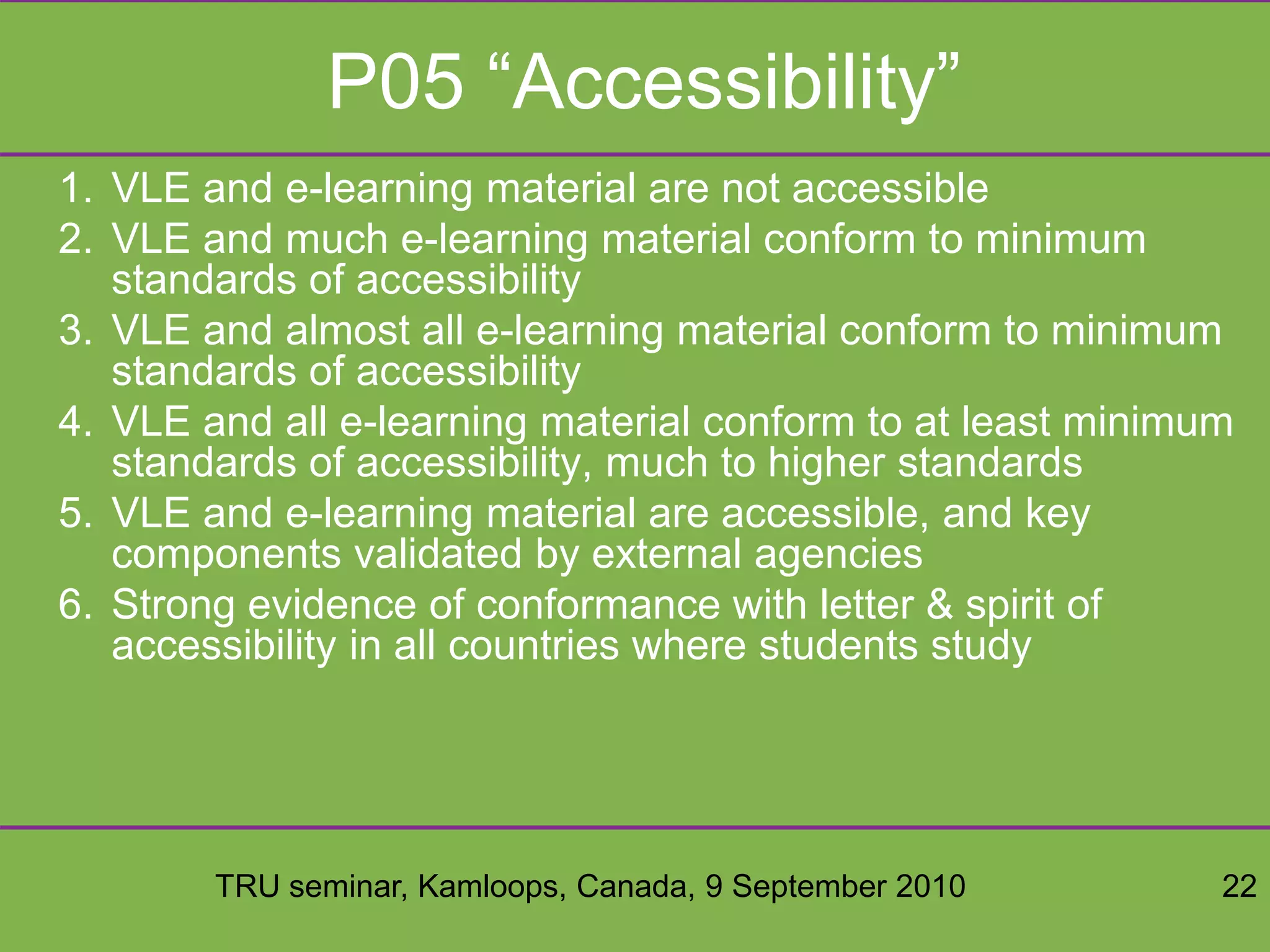

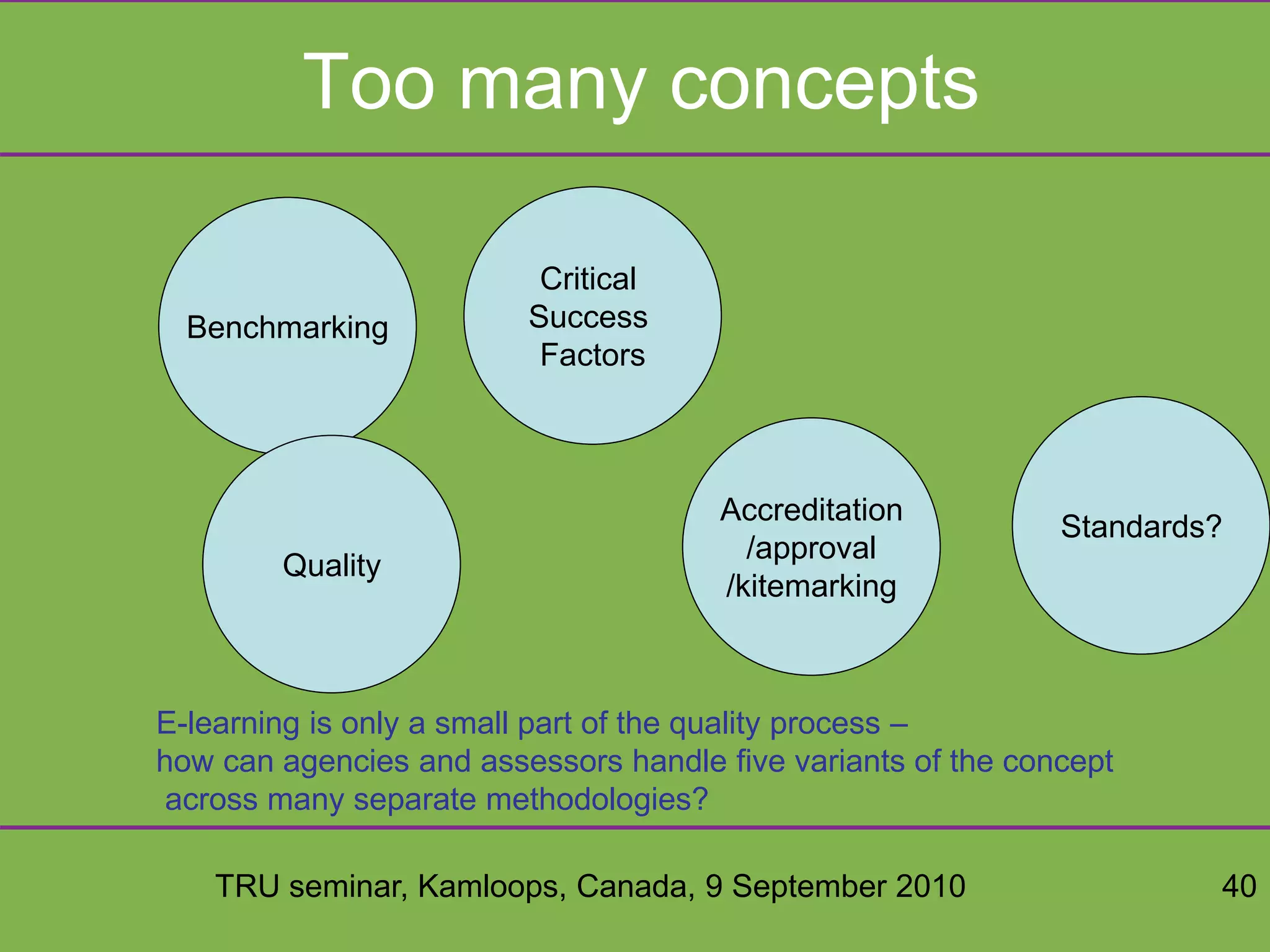

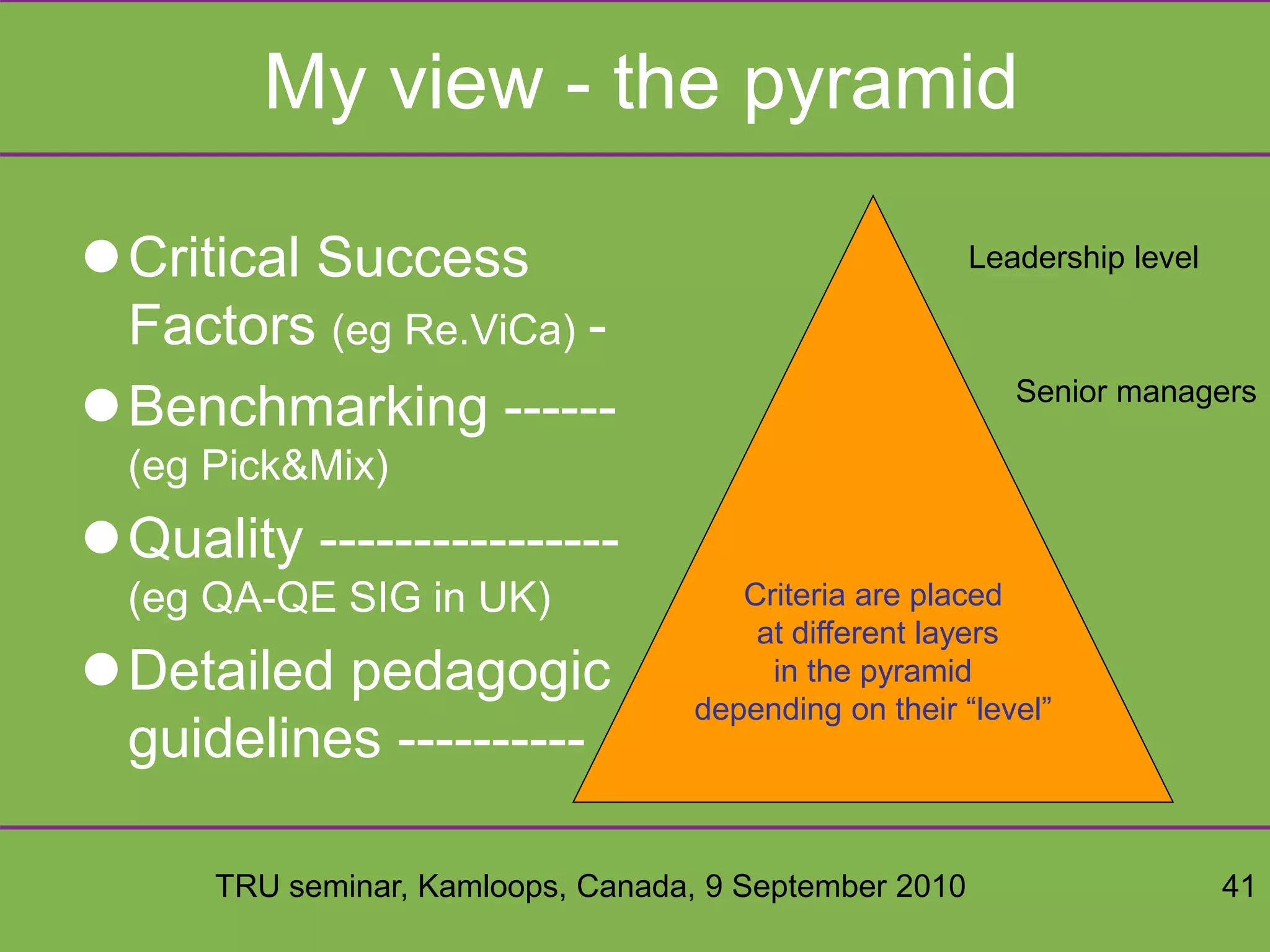

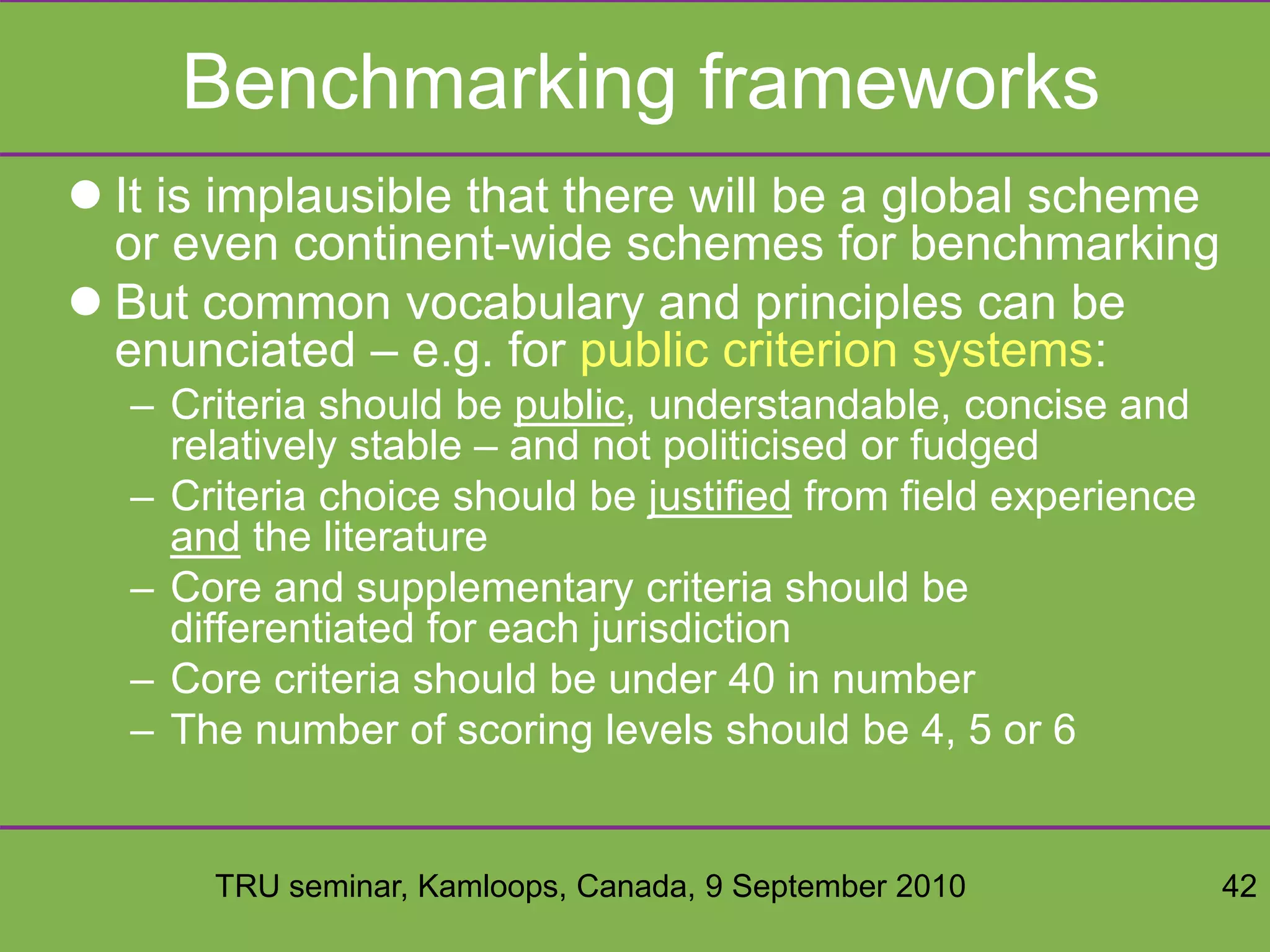

The document discusses UK approaches to quality in e-learning, particularly through benchmarking programs and the Re.ViCa project, with a focus on critical success factors for distance learning. It outlines the four phases of the UK higher education benchmarking program, the methodologies used, and the importance of self-review processes. It also emphasizes developing public criteria and maintaining a systematic approach to improving e-learning quality across institutions.

![TRU seminar, Kamloops, Canada, 9 September 2010. UK: benchmarking e-learning. “Possibly more important is for us [HEFCE] to help individual institutions understand their own positions on e-learning, to set their aspirations and goals for embedding e-learning – and then to benchmark themselves and their progress against institutions with similar goals, and across the sector”](https://image.slidesharecdn.com/bacsich-tru-sep-2010-101022122917-phpapp02/75/Benchmarking-derived-approaches-to-quality-in-e-learning-9-2048.jpg)