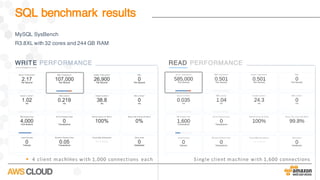

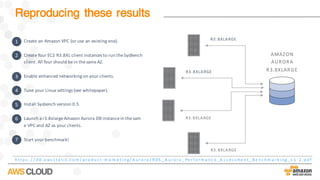

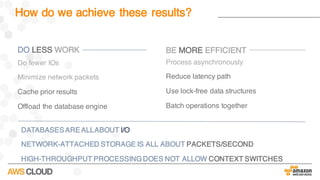

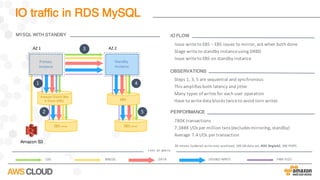

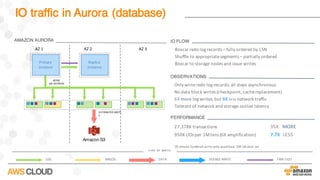

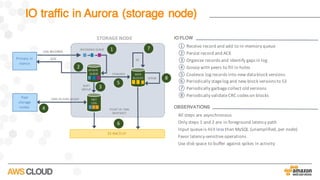

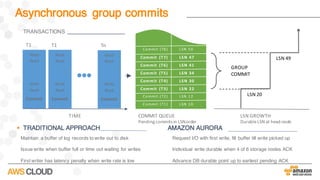

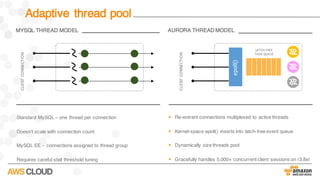

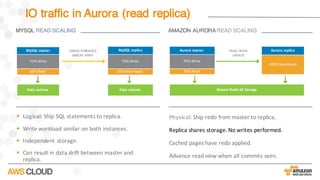

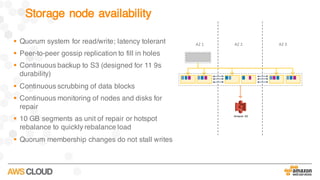

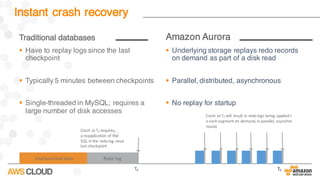

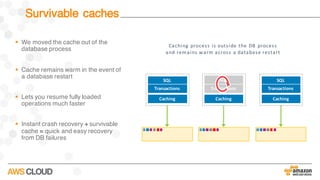

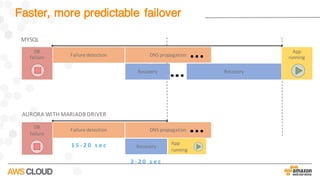

Amazon Aurora is a MySQL-compatible relational database that combines the performance and availability of commercial databases with the simplicity and cost-effectiveness of open-source databases. The document compares Aurora's performance with that of MySQL and describes how Aurora achieves high performance by doing fewer I/Os, caching results, processing asynchronously, and batching operations together. It also explains how Aurora achieves high availability through a quorum system, peer-to-peer replication across multiple Availability Zones, continuous backup to S3, and fast failover.
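To make the quorum idea concrete, here is a minimal sketch of how a quorum rule decides whether reads and writes can proceed when some storage copies are unavailable. The summary above does not give the numbers; the defaults used here (6 copies, a write quorum of 4, a read quorum of 3) are an assumption taken from AWS's public descriptions of Aurora storage, and the names `QuorumConfig` and `availability_after_failures` are hypothetical, chosen only for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class QuorumConfig:
    # Assumed values, not stated in the summary: 6 copies, write quorum 4, read quorum 3.
    copies: int = 6
    write_quorum: int = 4
    read_quorum: int = 3

    def is_consistent(self) -> bool:
        # A read must overlap the latest write (Vr + Vw > V), and
        # two writes must overlap each other (2 * Vw > V).
        return (
            self.read_quorum + self.write_quorum > self.copies
            and 2 * self.write_quorum > self.copies
        )


def availability_after_failures(failed: int, cfg: QuorumConfig) -> dict:
    """Report whether reads and writes can still reach a quorum."""
    alive = cfg.copies - failed
    return {
        "can_write": alive >= cfg.write_quorum,
        "can_read": alive >= cfg.read_quorum,
    }


if __name__ == "__main__":
    cfg = QuorumConfig()
    for failed in range(0, 5):
        print(failed, availability_after_failures(failed, cfg))
```

Under these assumed values, losing two copies leaves both reads and writes available, while losing three copies still allows reads but blocks writes until a copy is repaired.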