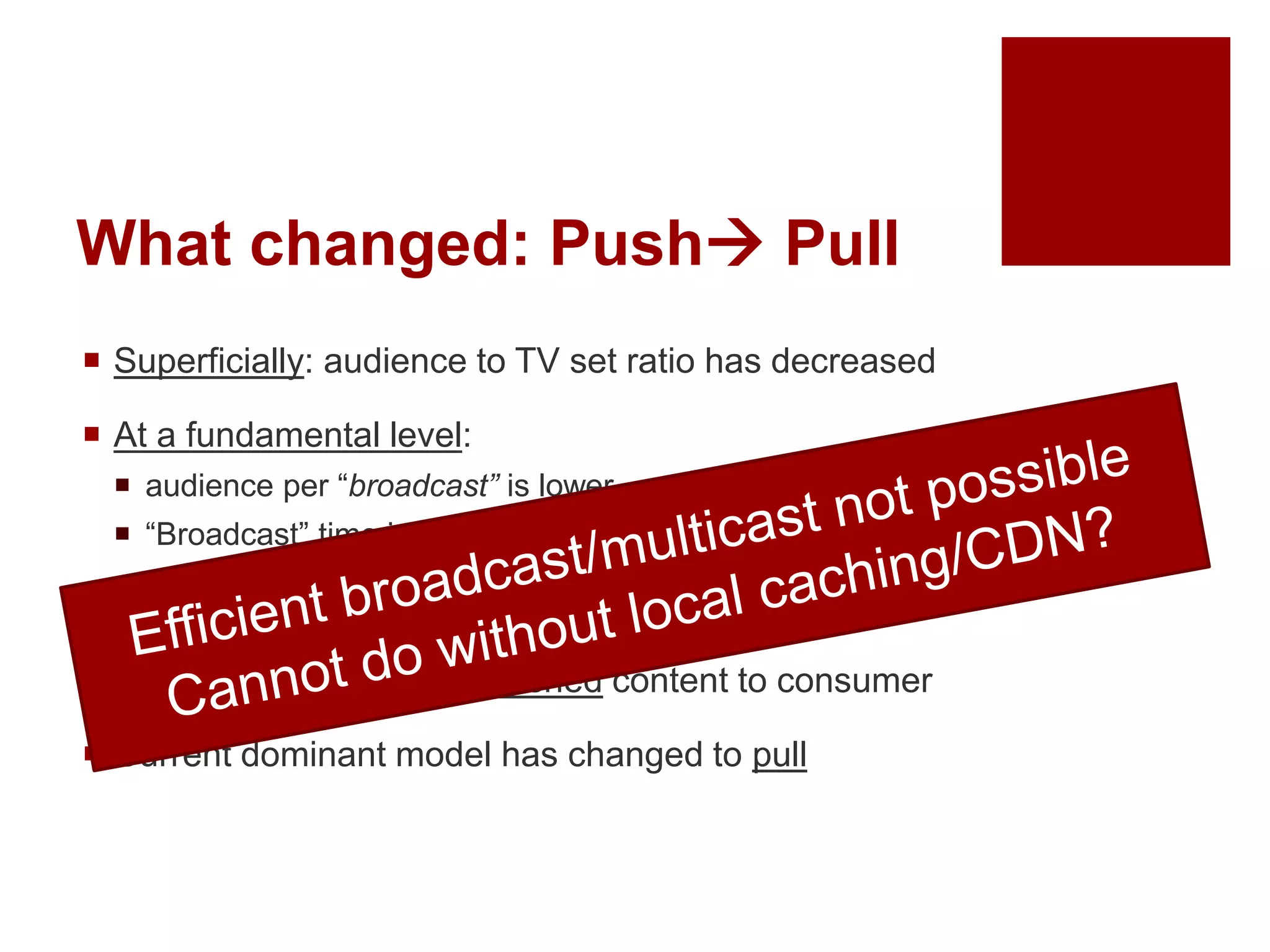

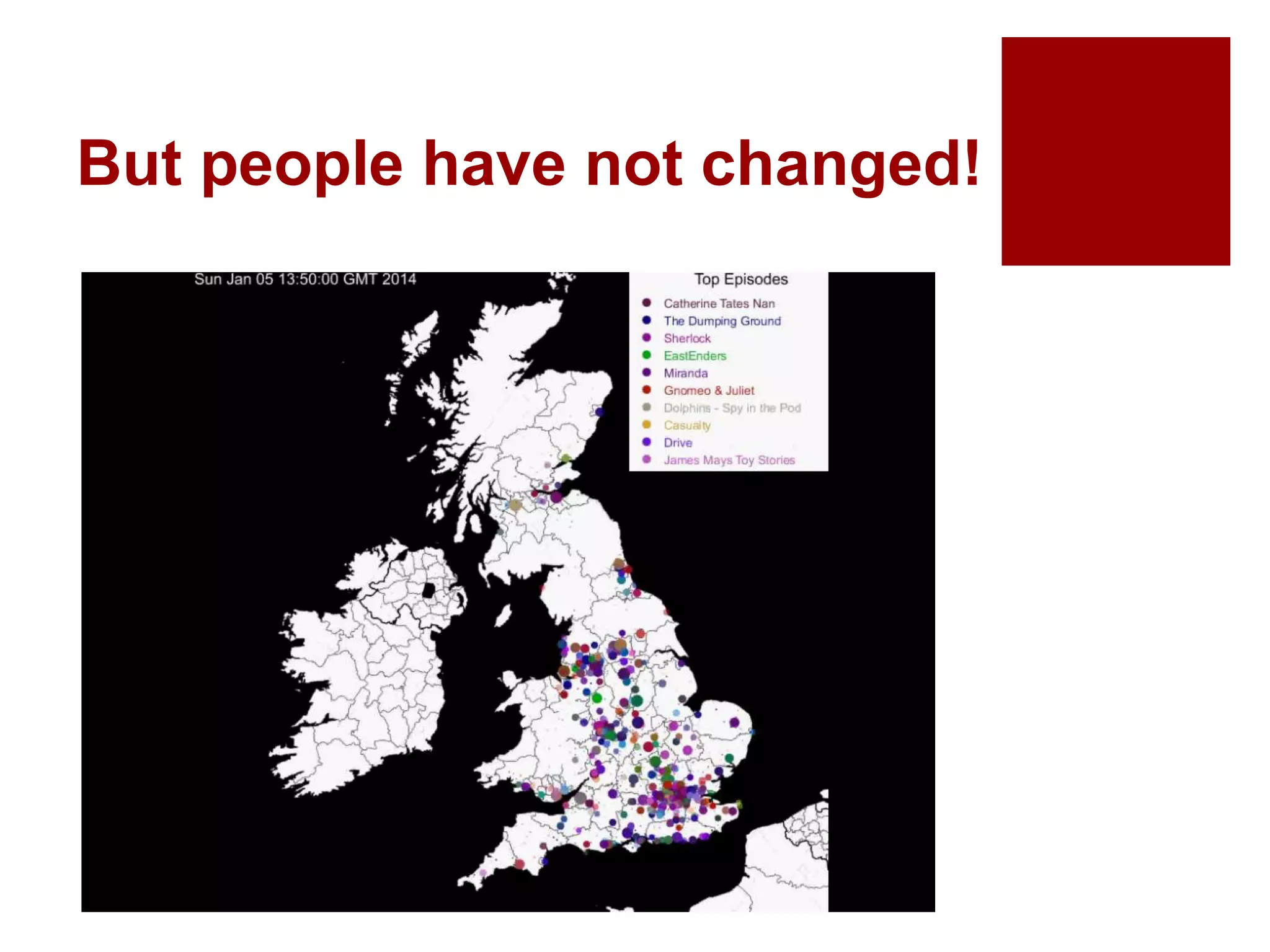

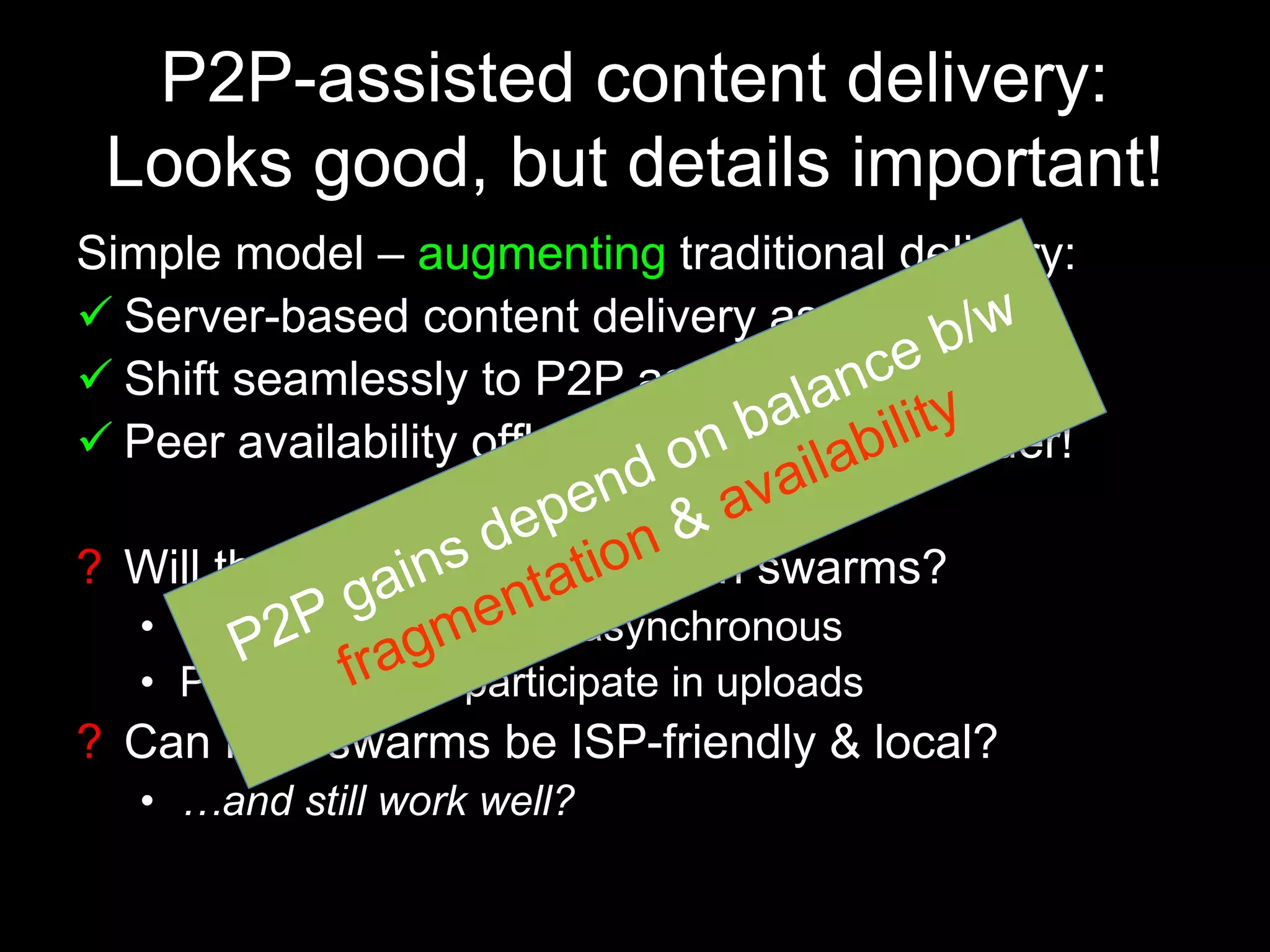

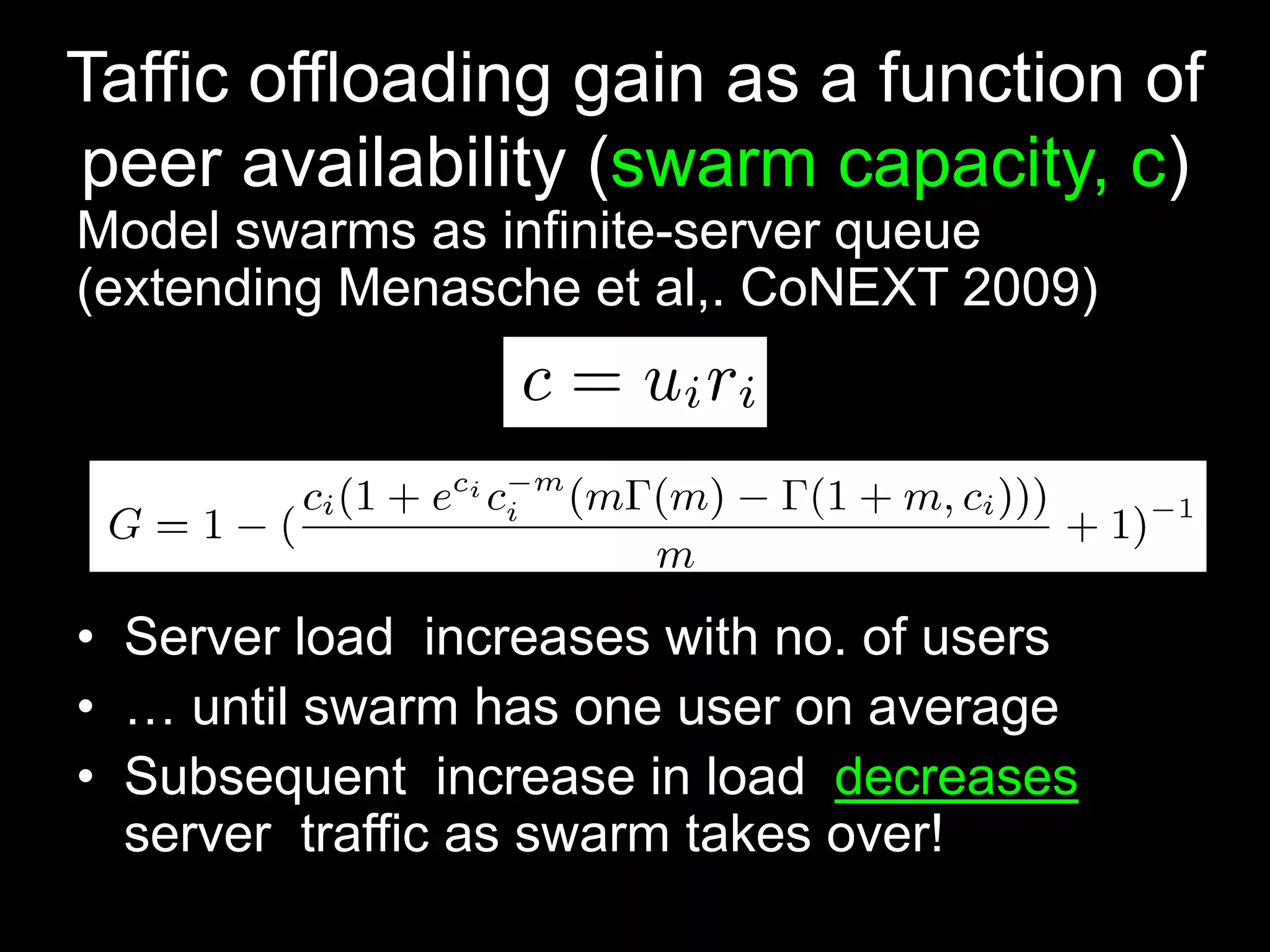

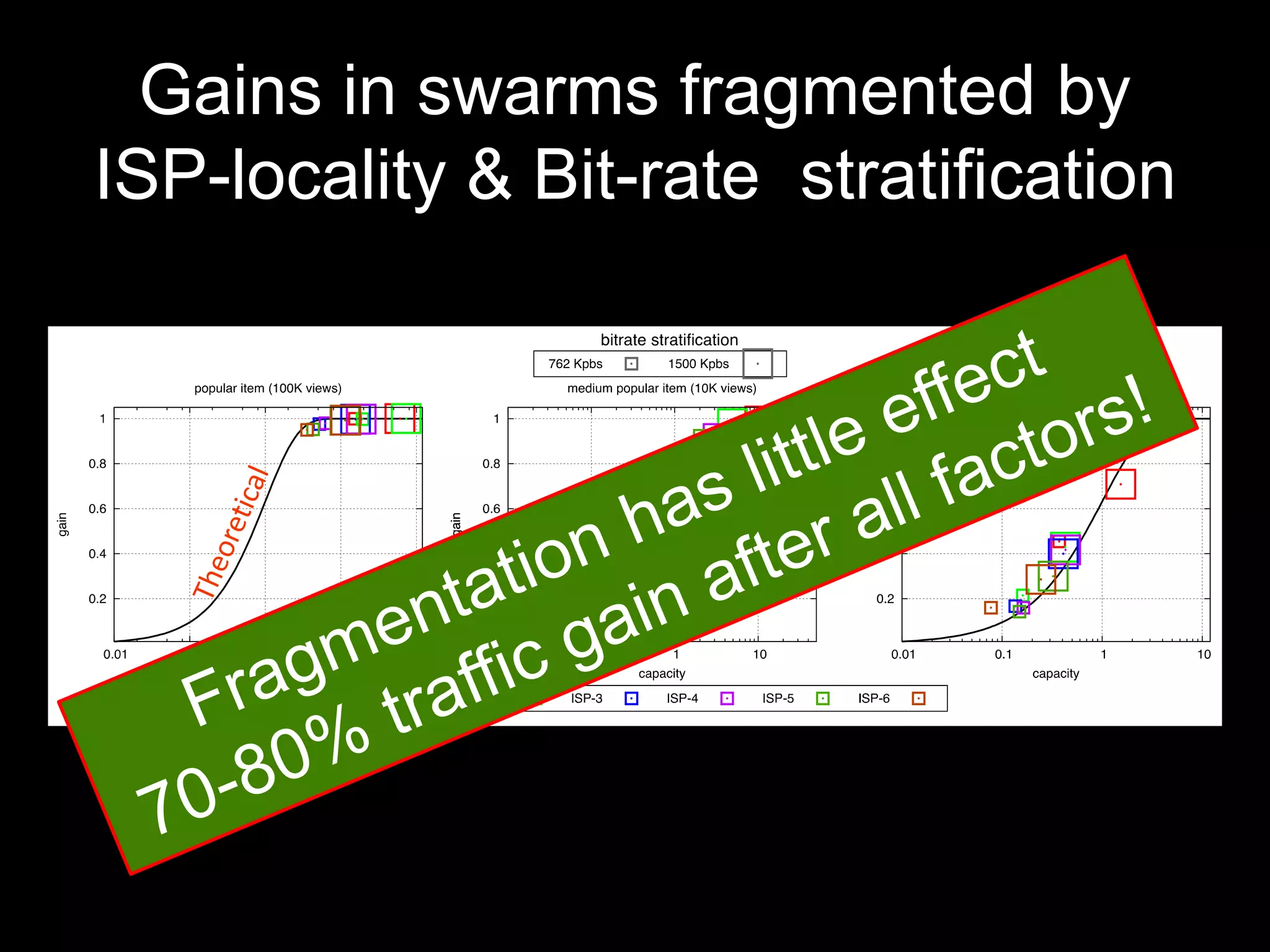

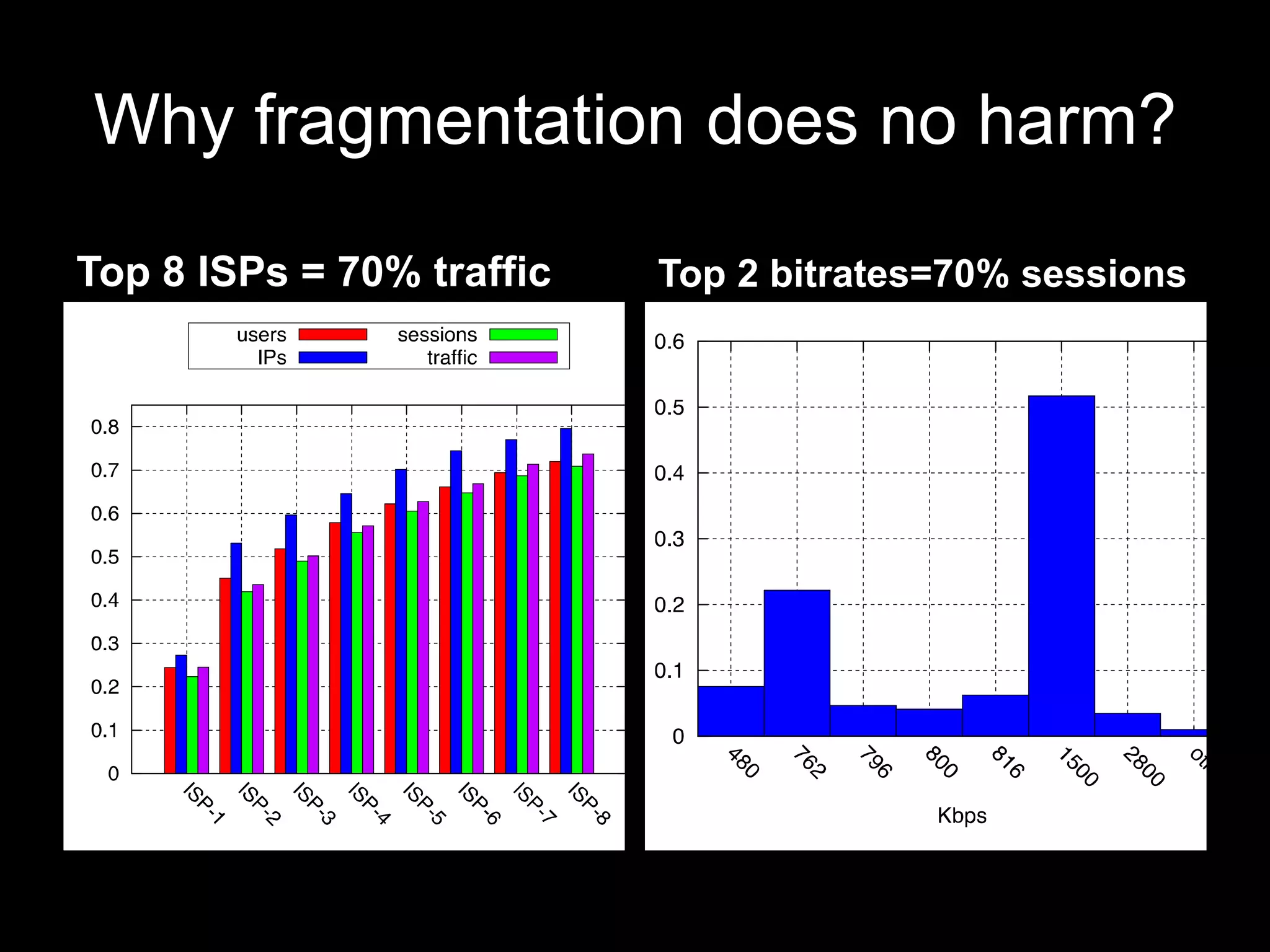

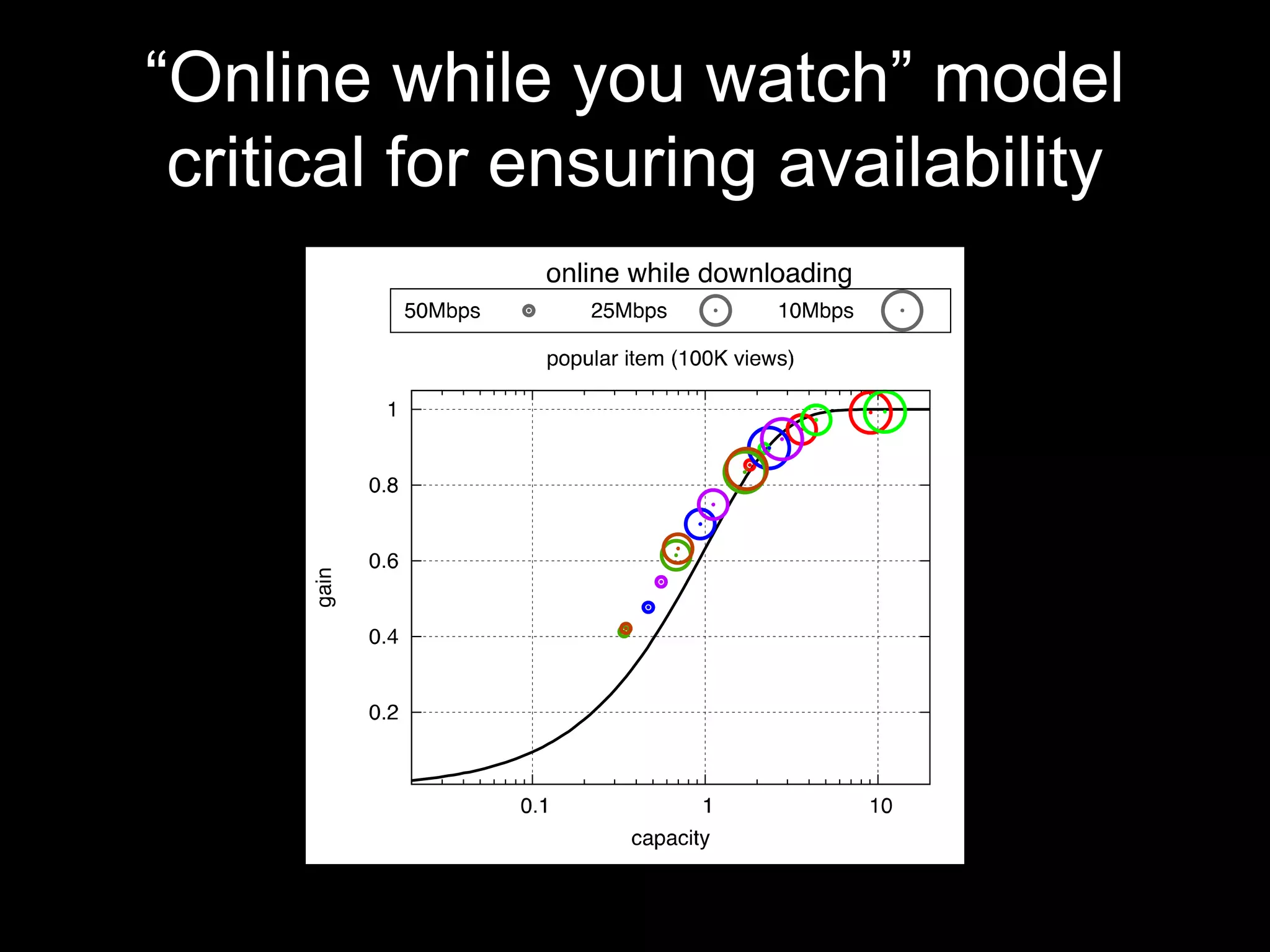

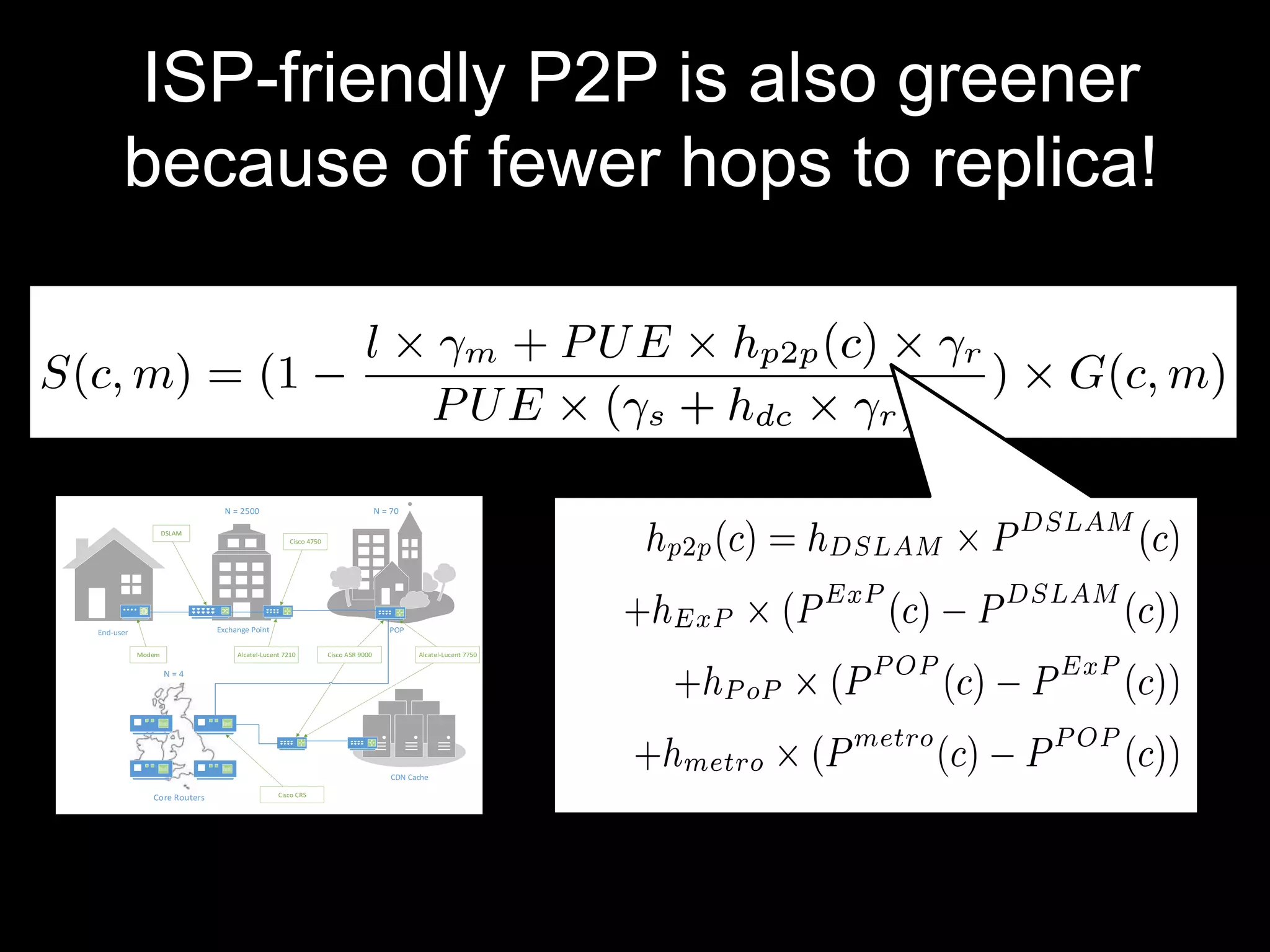

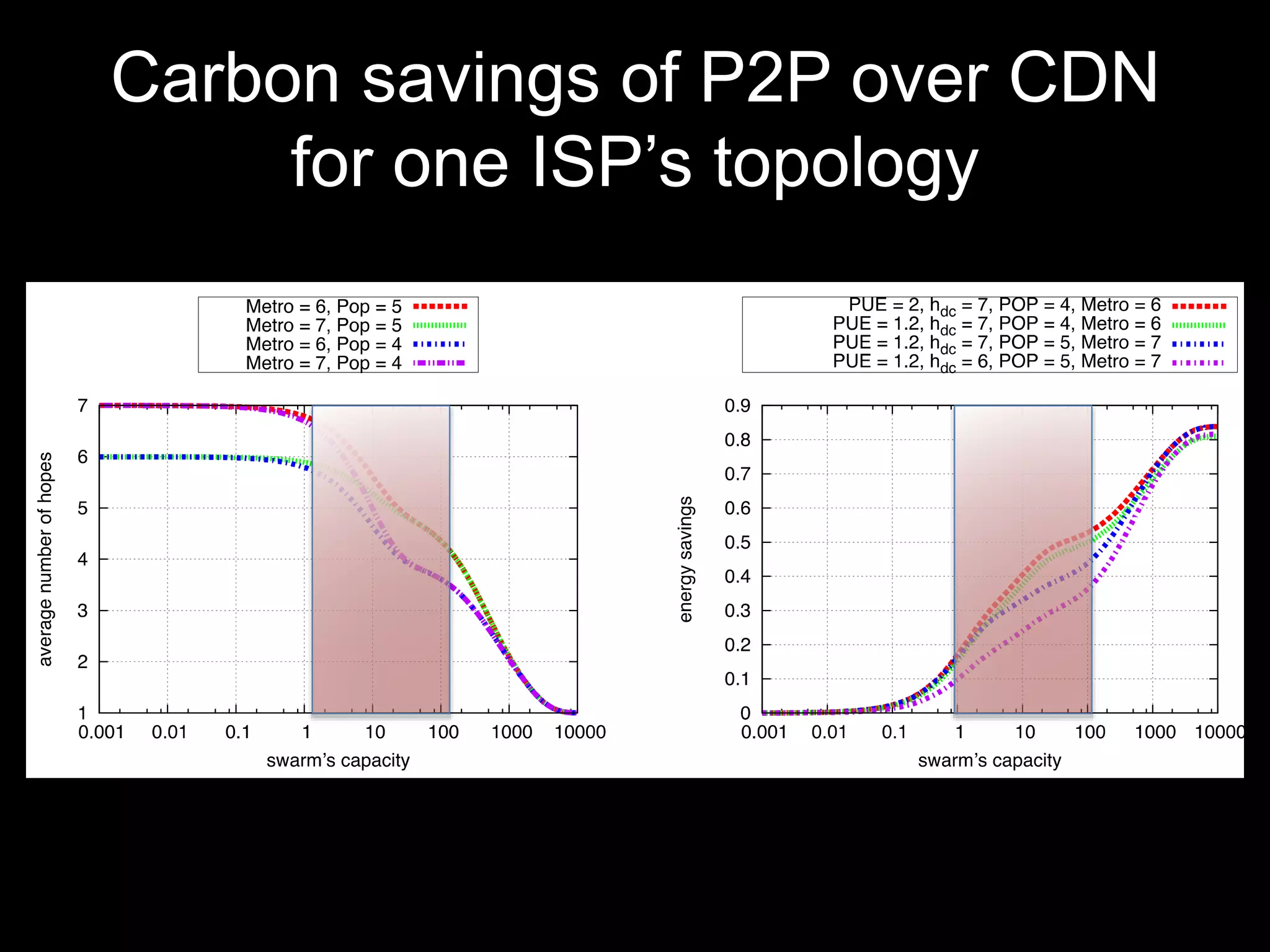

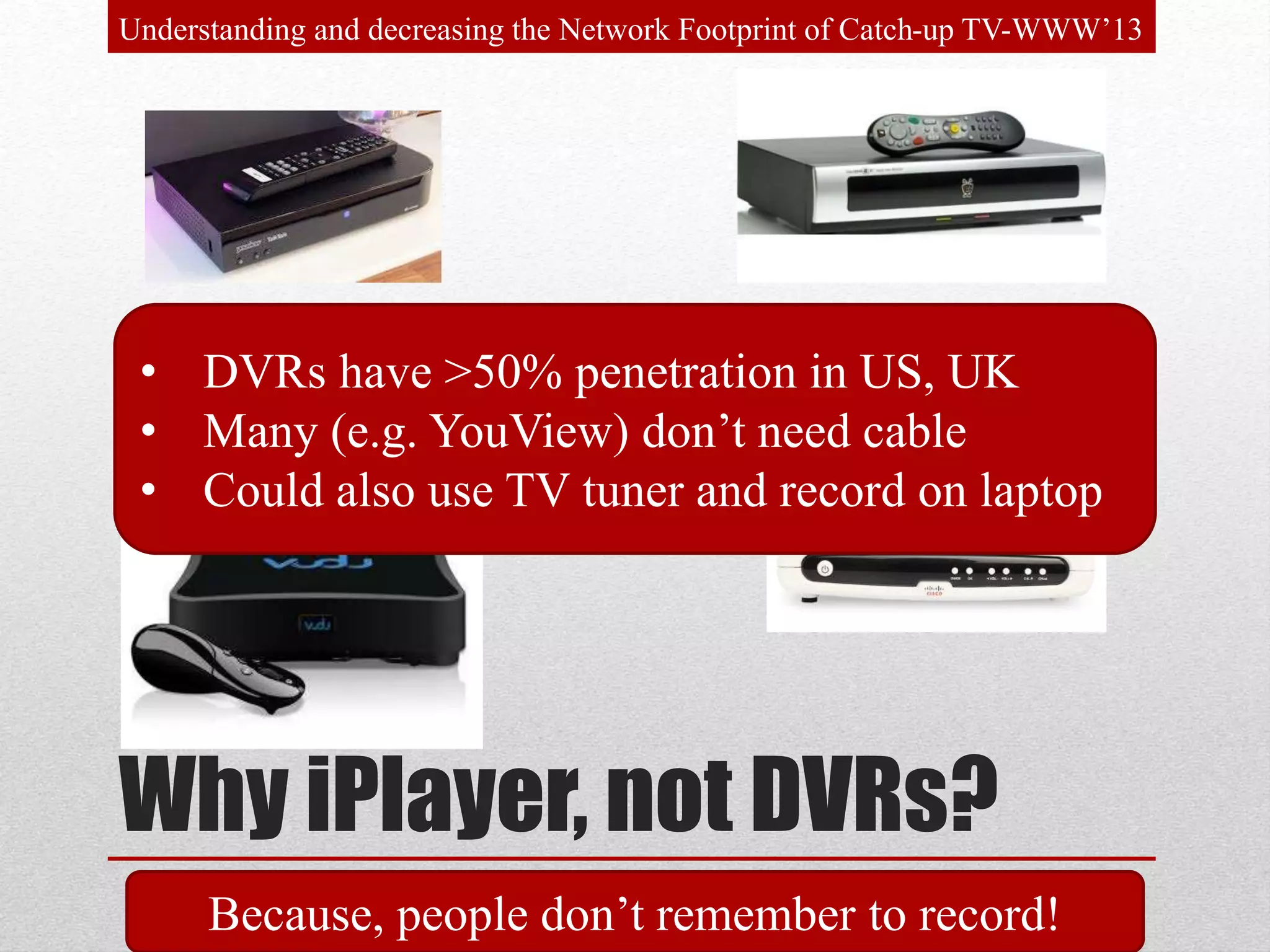

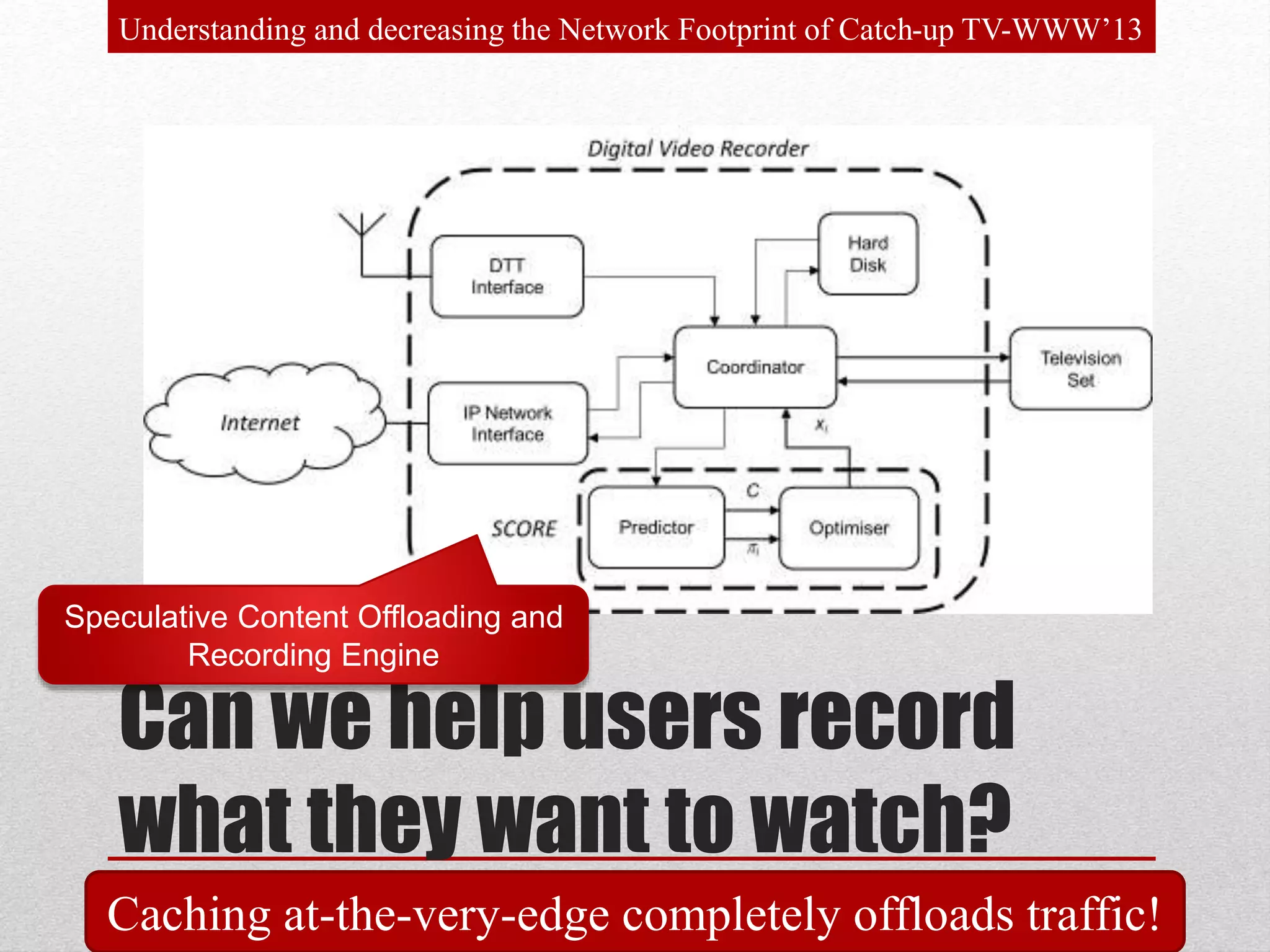

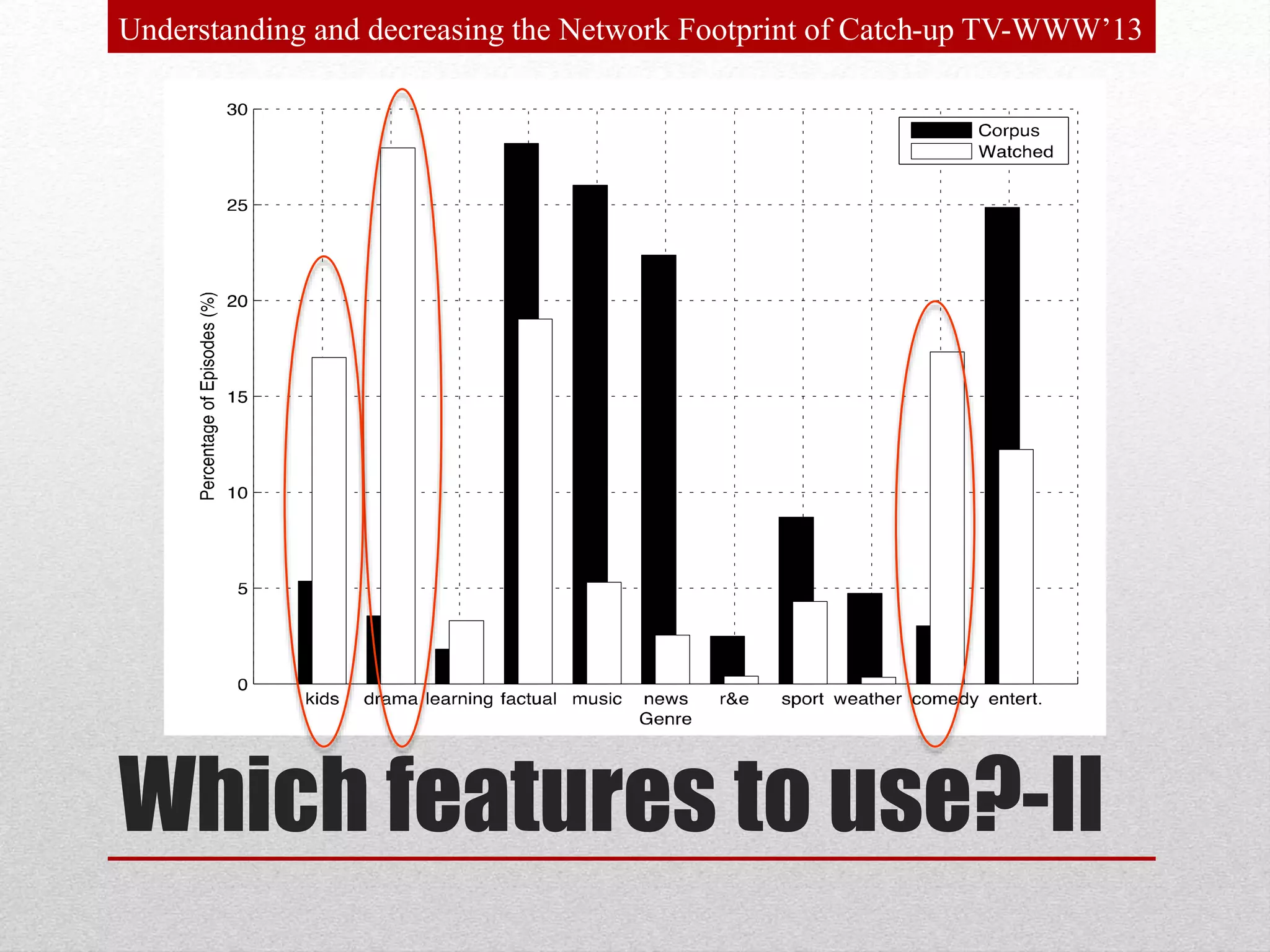

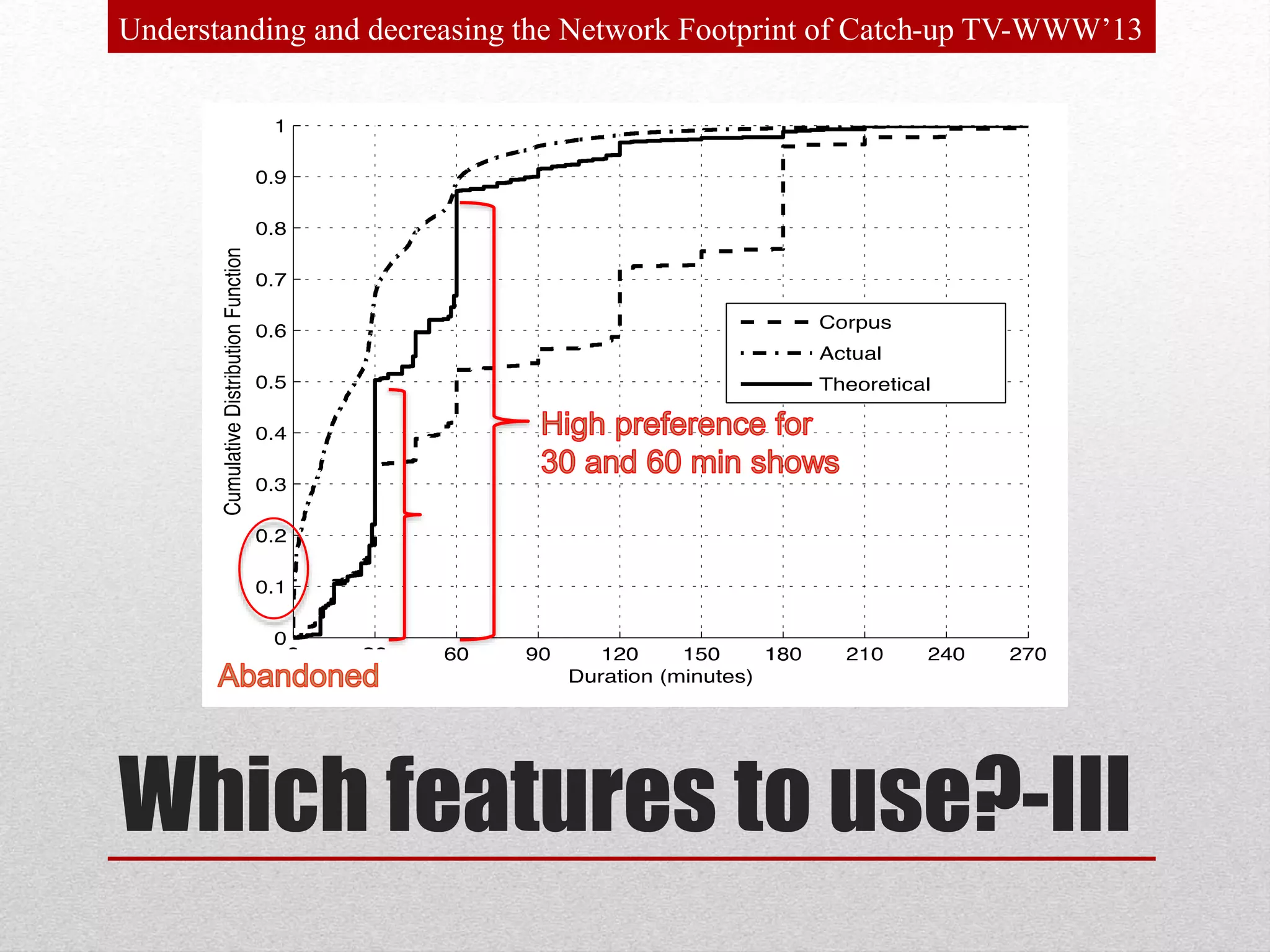

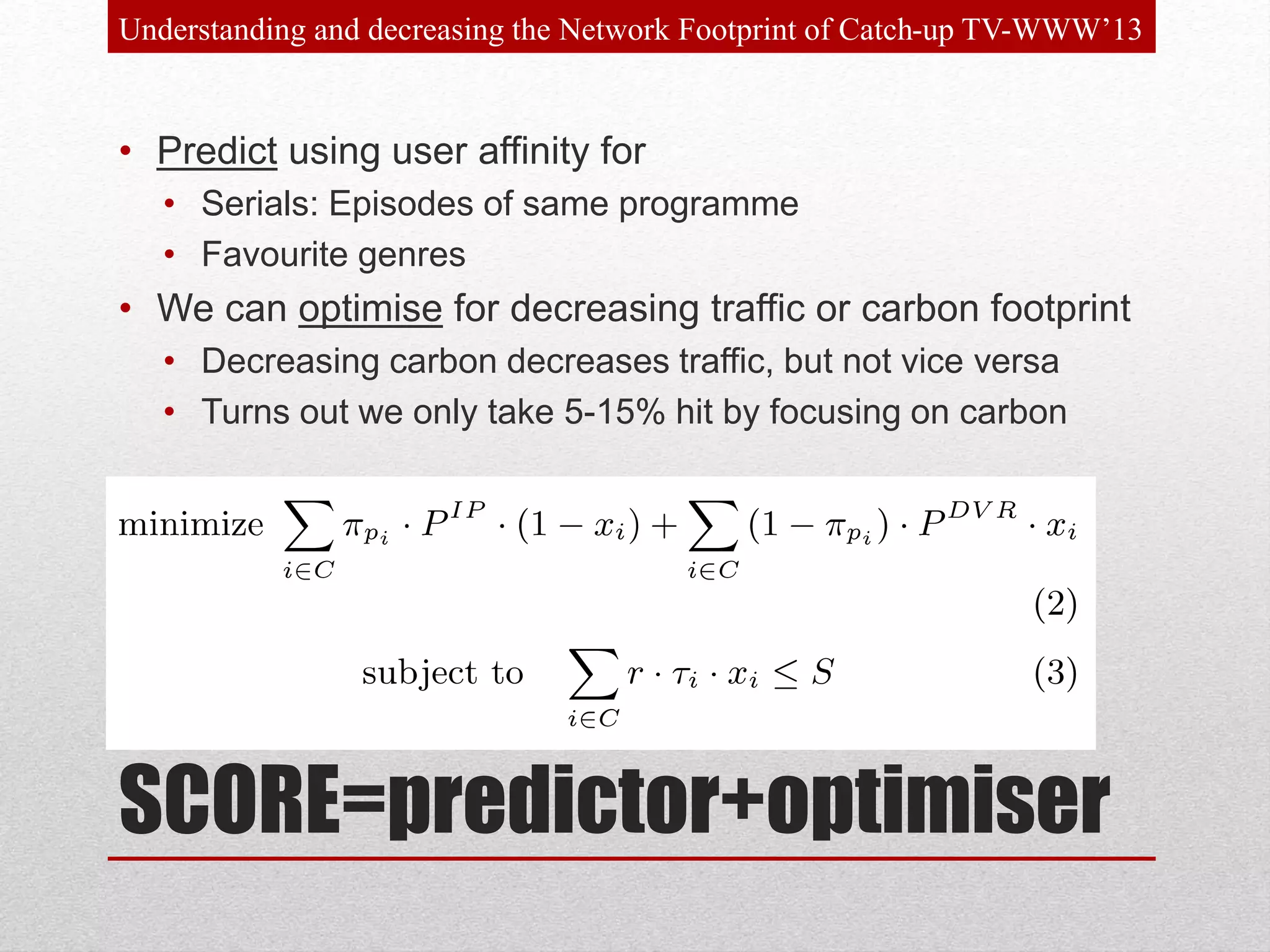

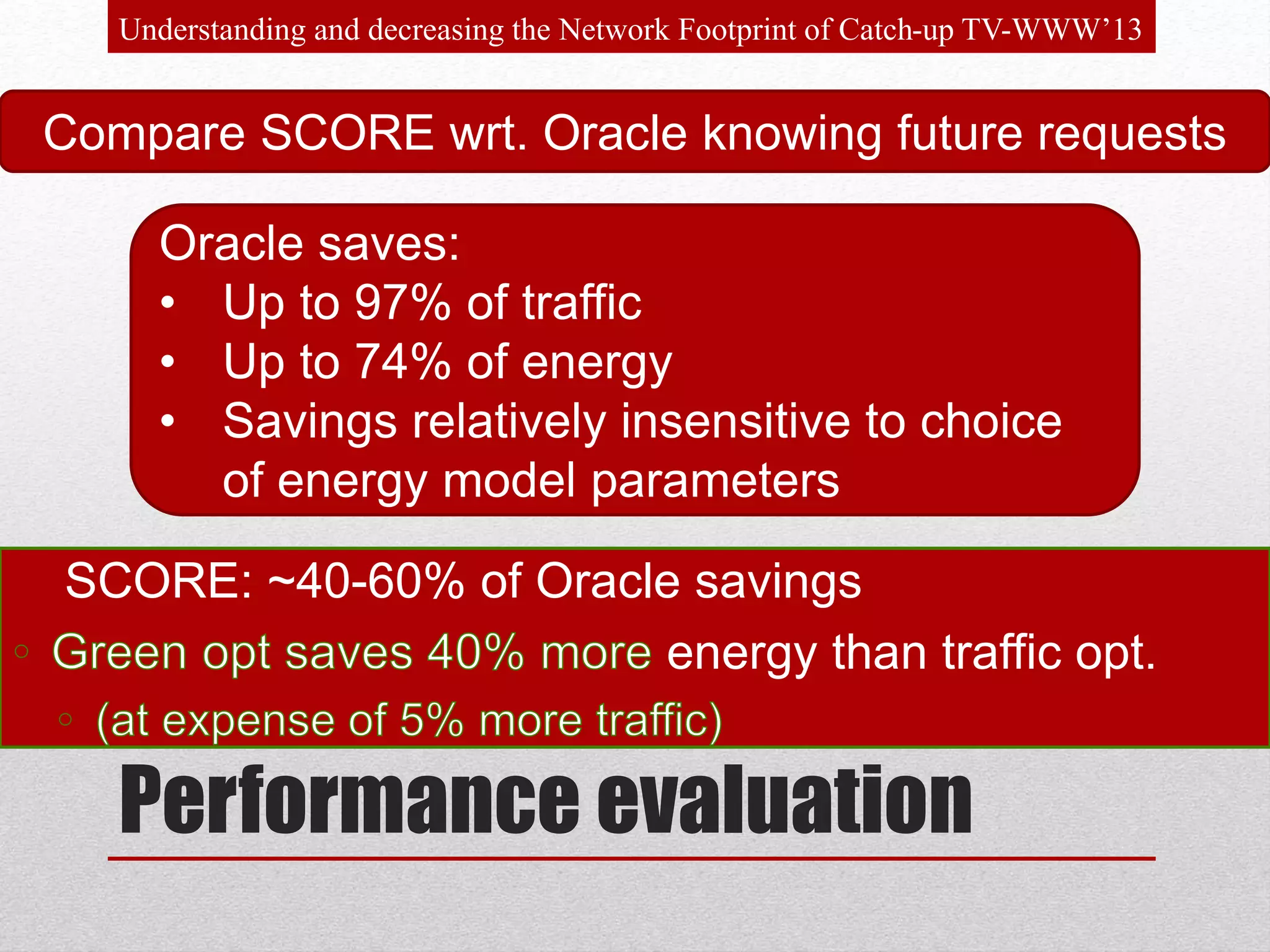

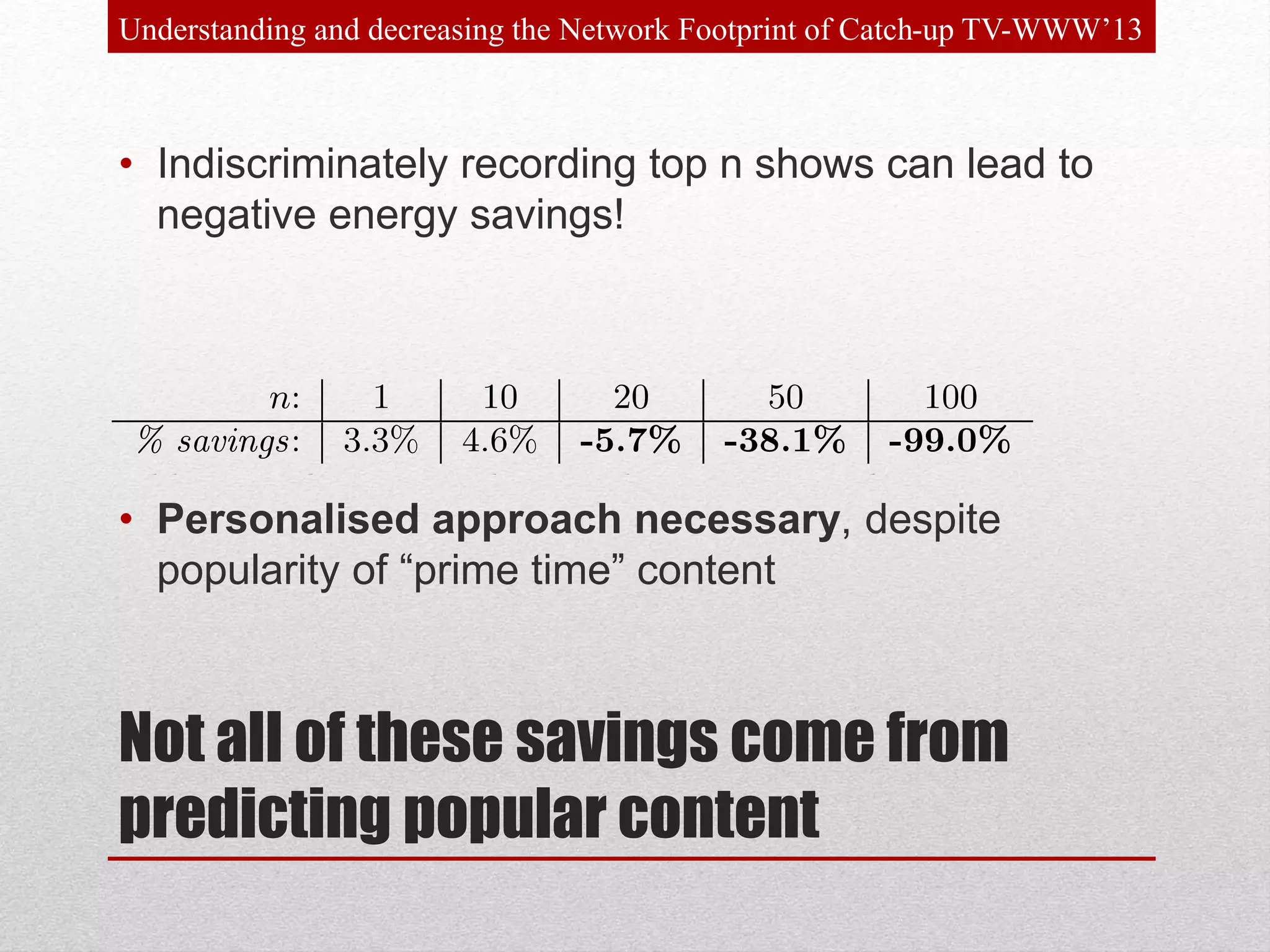

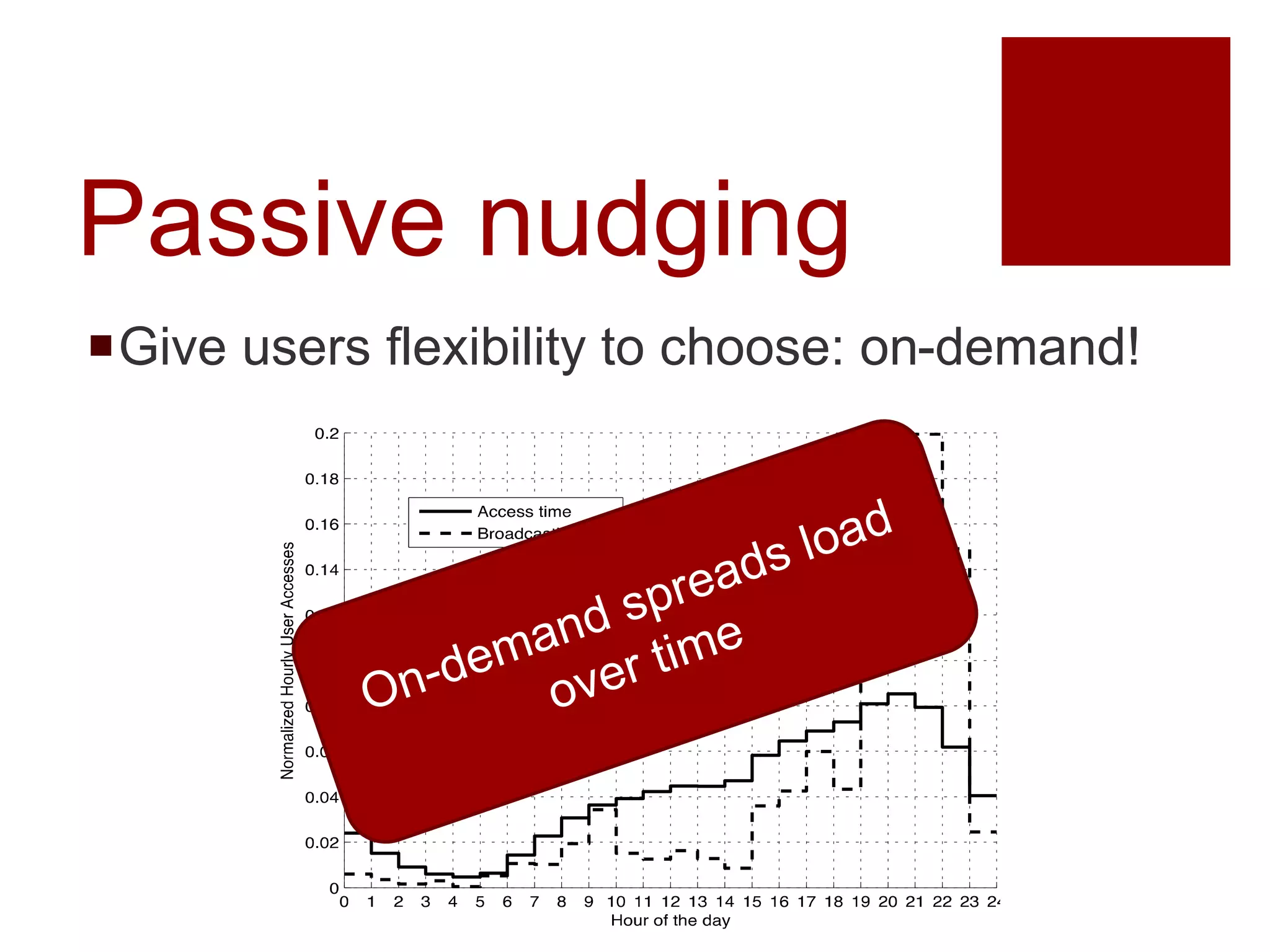

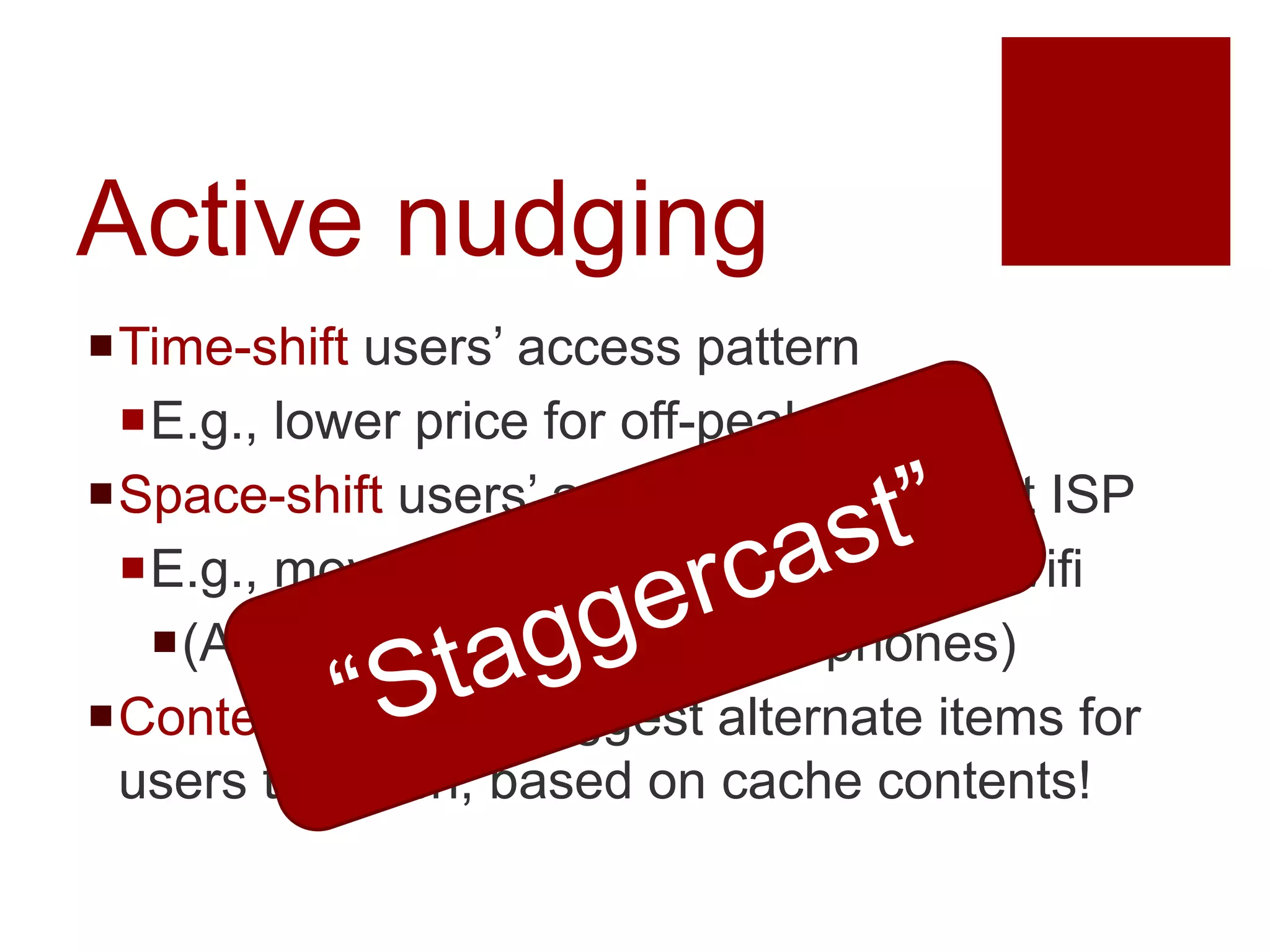

The document examines the challenges of, and recent innovations in, content delivery systems for on-demand video streaming. It highlights the role of peer-to-peer (P2P) technology, predictions of user behavior, and 'nudging' strategies in optimizing delivery and reducing network strain. Building on these findings, it proposes new methods to improve delivery efficiency while adapting to changing patterns of media consumption.