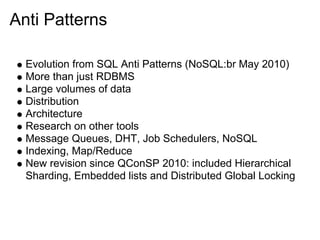

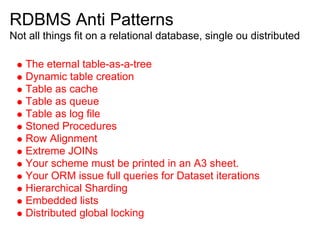

This document discusses architectural anti-patterns related to data distribution and handling failures. It provides examples of anti-patterns when using SQL and NoSQL databases, including using tables as queues, logs, or caches instead of the proper tools. Alternatives are suggested such as using message queues, document databases, and key-value stores instead of forcing data models. The document advises to simplify data schemes, avoid over-engineering, and think about how to best structure data and applications.

![The eternal tree

Problem: Most threaded discussion example uses something

like a table which contains all threads and answers, relating to

each other by an id. Usually the developer will come up with

his own binary-tree version to manage this mess.

id - parent_id -author - text

1 - 0 - gleicon - hello world

2 - 1 - elvis - shout !

Alternative: Document storage:

{ thread_id:1, title: 'the meeting', author: 'gleicon', replies:[

{

'author': elvis, text:'shout', replies:[{...}]

}

]

}](https://image.slidesharecdn.com/architecturalantipatternsfordatahandling1-101023060338-phpapp02/85/Architectural-anti-patterns-for-data-handling-6-320.jpg)

![Cycle of changes - Product A

1. There was the database model

2. Then, the cache was needed. Performance was no good.

3. Cache key: query, value: resultset

4. High or inexistent expiration time [w00t]

(Now there's a turning point. Data didn't need to change often.

Denormalization was a given with cache)

5. The cache needs to be warmed or the app wont work.

6. Key/Value storage was a natural choice. No data on MySQL

anymore.](https://image.slidesharecdn.com/architecturalantipatternsfordatahandling1-101023060338-phpapp02/85/Architectural-anti-patterns-for-data-handling-20-320.jpg)