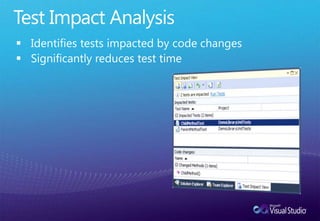

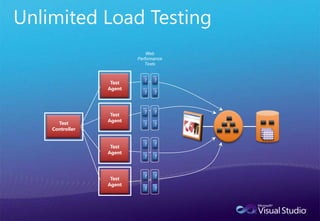

The document announces a Microsoft Cloud Day event on April 27, 2011, from 9 am to 5 pm at the Microsoft Auditorium in Singapore. The event covers application quality best practices using Visual Studio 2010: reducing unit testing time with test impact analysis and code coverage metrics, enforcing coding standards with code analysis and metrics, identifying performance bottlenecks with profiling tools, and identifying application limits with load testing. The document details each of these strategies and includes demos of the corresponding Visual Studio tools.