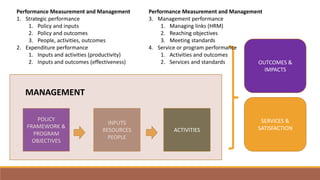

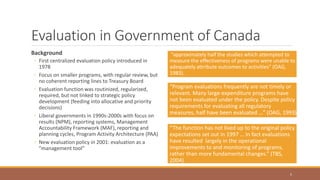

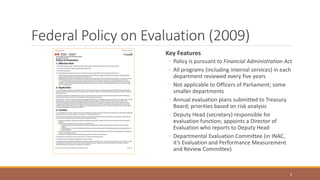

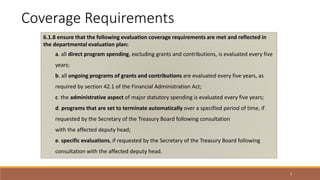

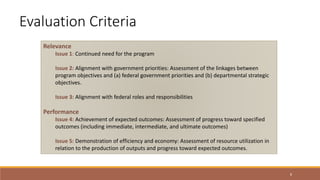

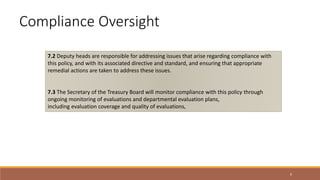

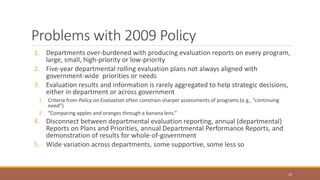

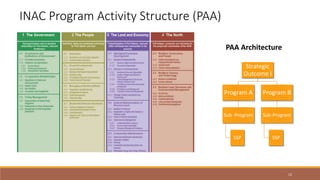

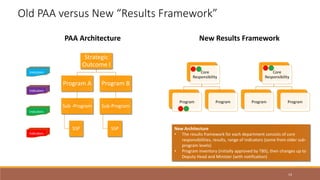

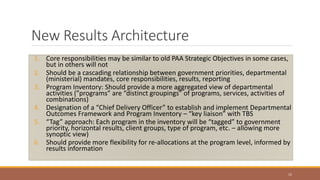

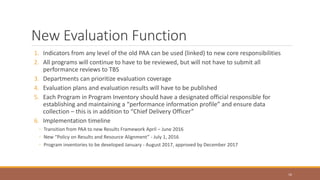

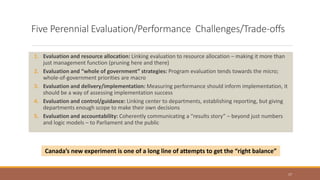

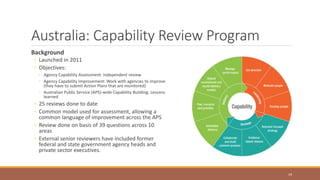

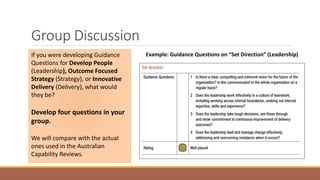

The document discusses best practices and recent initiatives in performance management and measurement. It covers four main topics: 1) strategic, expenditure, and management performance measurement; 2) evaluation in the Government of Canada, including background, policy features, problems with the policies, and the new evaluation function; 3) Australia's capability review program; and 4) challenges for evaluation and performance measurement, including links to resource allocation, whole-of-government strategies, delivery and implementation, control and guidance, and accountability.