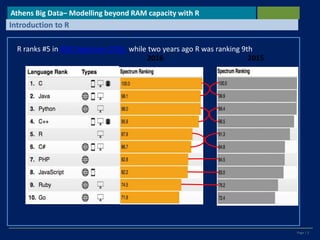

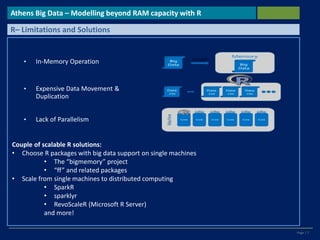

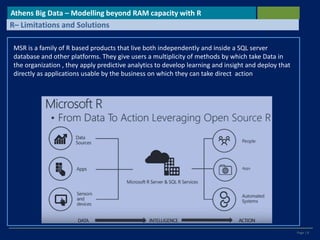

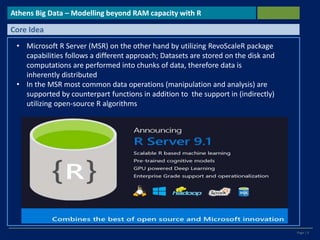

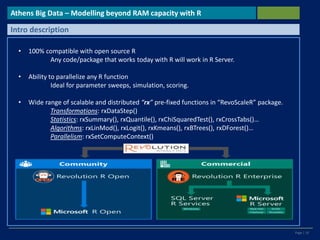

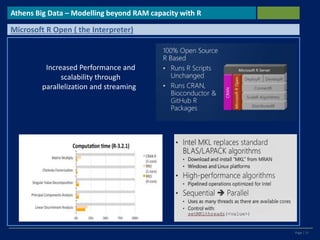

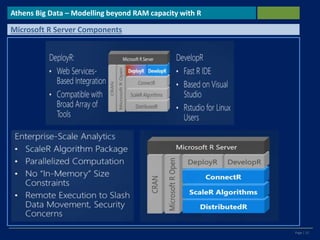

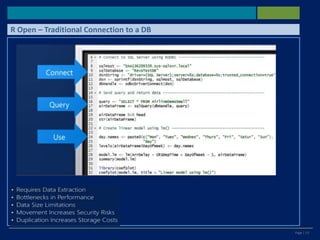

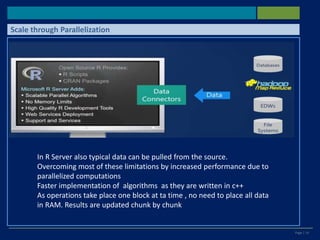

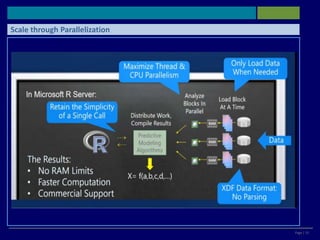

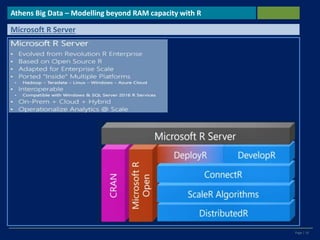

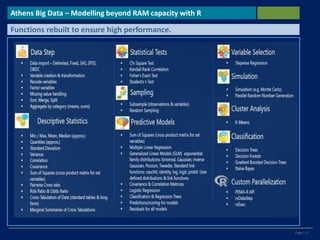

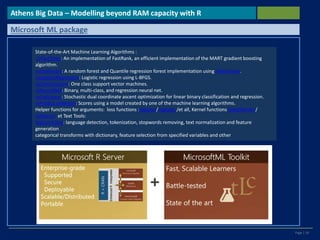

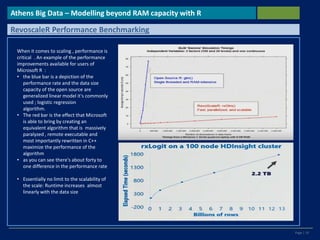

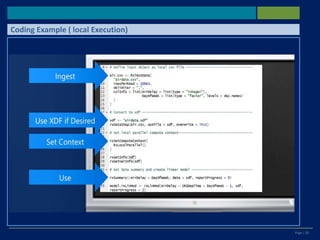

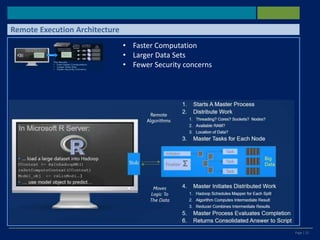

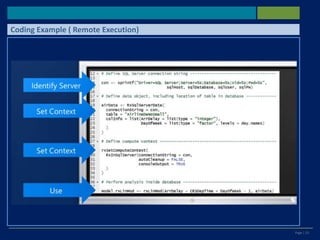

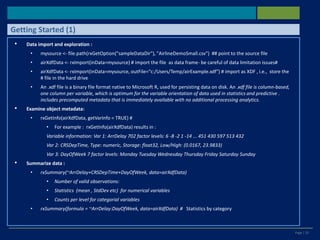

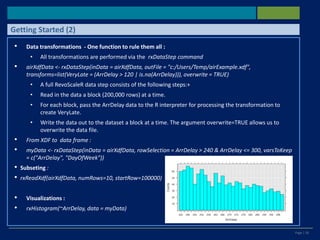

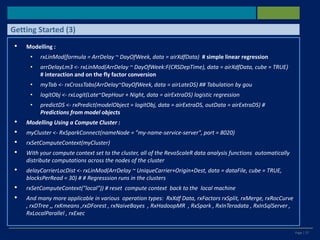

R is a popular open-source statistical programming language and software environment, widely used for predictive analytics. Its large community and package ecosystem let data scientists tackle a broad range of problems. Microsoft R Server is a scalable platform that extends R to datasets larger than available memory: it distributes computations across the nodes of a cluster and stores data on disk in an efficient column-based format. It achieves high performance by parallelizing computations and reimplementing algorithms in C++.
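
The idea of handling data that does not fit in memory can be illustrated with a small sketch. It is a language-neutral illustration written in Python, not Microsoft R Server's actual API or implementation. The names `PartialStats`, `read_chunks`, `summarize_chunk`, and `chunked_mean` are hypothetical. The sketch assumes each chunk can be reduced to a small summary (here a count and a sum) and that summaries from different chunks, or different nodes, can be merged afterwards.

```python
from dataclasses import dataclass
from typing import Iterable, Iterator, List


@dataclass
class PartialStats:
    """Summary of one chunk: just enough to recover the mean later."""
    count: int = 0
    total: float = 0.0

    def merge(self, other: "PartialStats") -> "PartialStats":
        # Merging is associative, so chunks can be combined in any order,
        # for example after being summarized on different nodes.
        return PartialStats(self.count + other.count, self.total + other.total)

    @property
    def mean(self) -> float:
        if self.count == 0:
            raise ValueError("no data was processed")
        return self.total / self.count


def read_chunks(values: Iterable[float], chunk_size: int) -> Iterator[List[float]]:
    """Yield fixed-size chunks so only one chunk is held in memory at a time."""
    chunk: List[float] = []
    for value in values:
        chunk.append(value)
        if len(chunk) == chunk_size:
            yield chunk
            chunk = []
    if chunk:  # final, possibly shorter chunk
        yield chunk


def summarize_chunk(chunk: List[float]) -> PartialStats:
    """Reduce one chunk to its summary (hypothetical per-node work)."""
    return PartialStats(len(chunk), float(sum(chunk)))


def chunked_mean(values: Iterable[float], chunk_size: int) -> float:
    """Compute the mean by merging per-chunk summaries."""
    result = PartialStats()
    for chunk in read_chunks(values, chunk_size):
        result = result.merge(summarize_chunk(chunk))
    return result.mean
```

In this sketch `values` could be any iterator, such as rows streamed from a file on disk, so memory use depends on `chunk_size` rather than on the size of the dataset. In a cluster setting, each node would summarize its own chunks and only the small summaries would be sent over the network to be merged.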