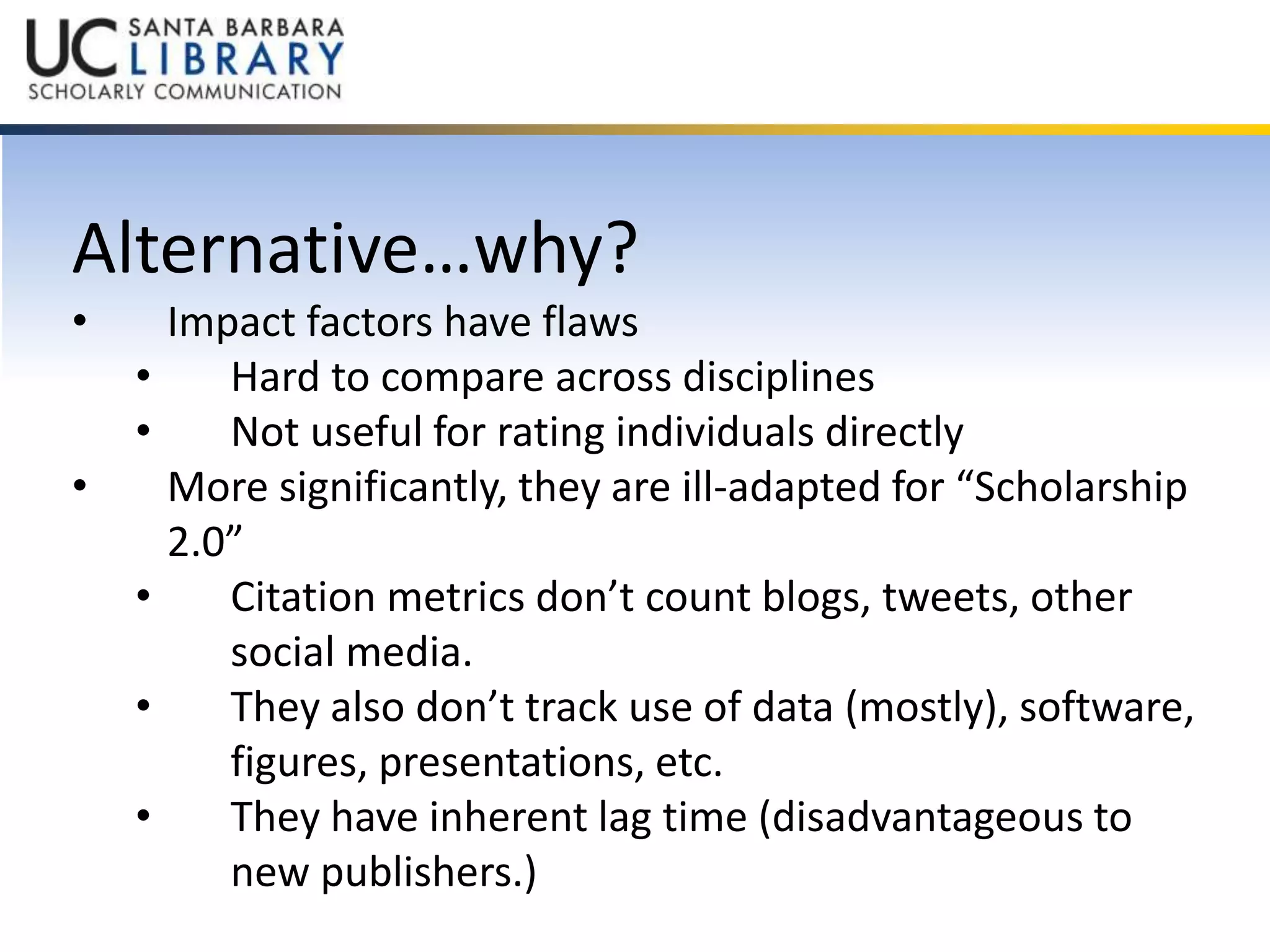

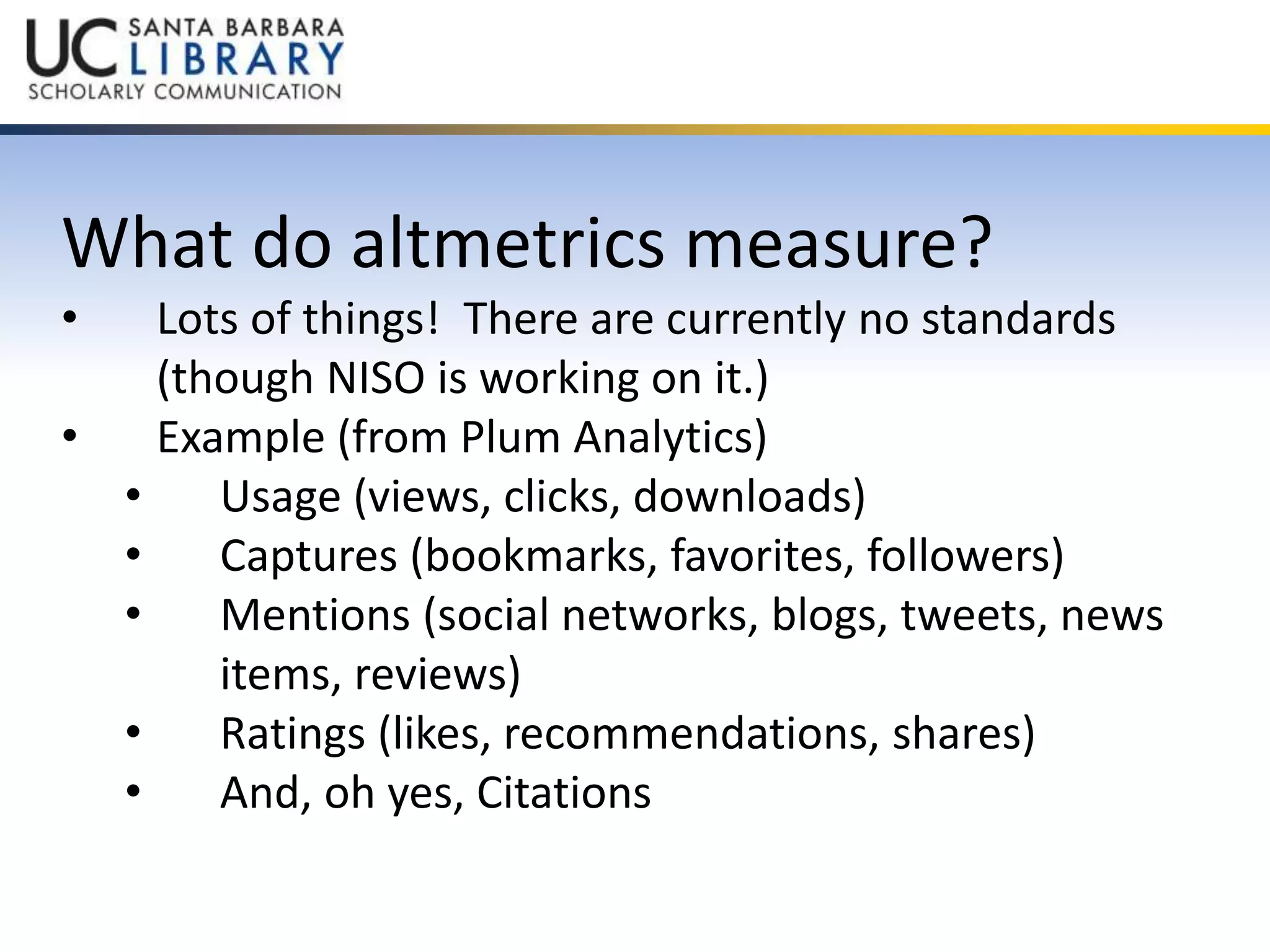

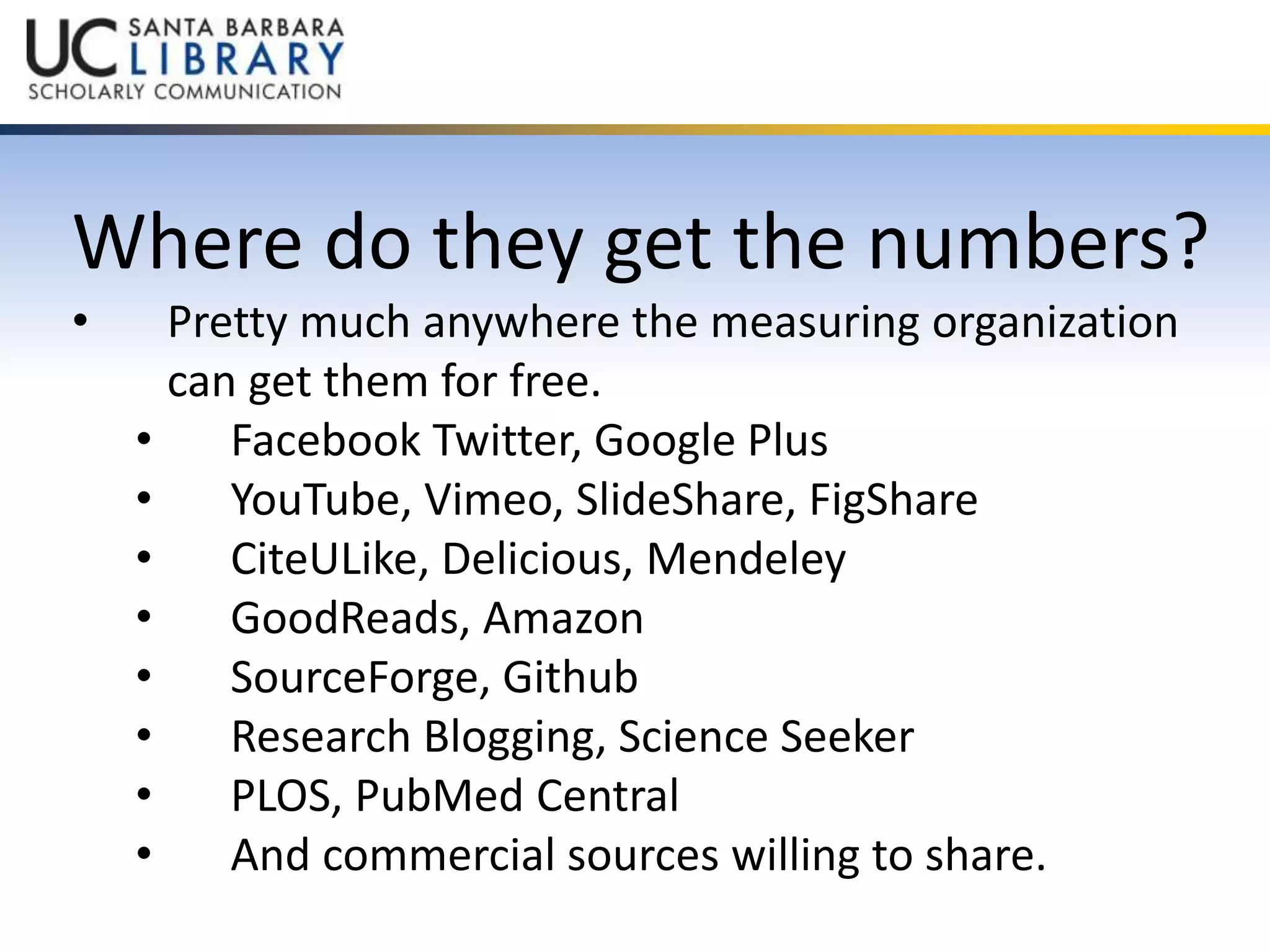

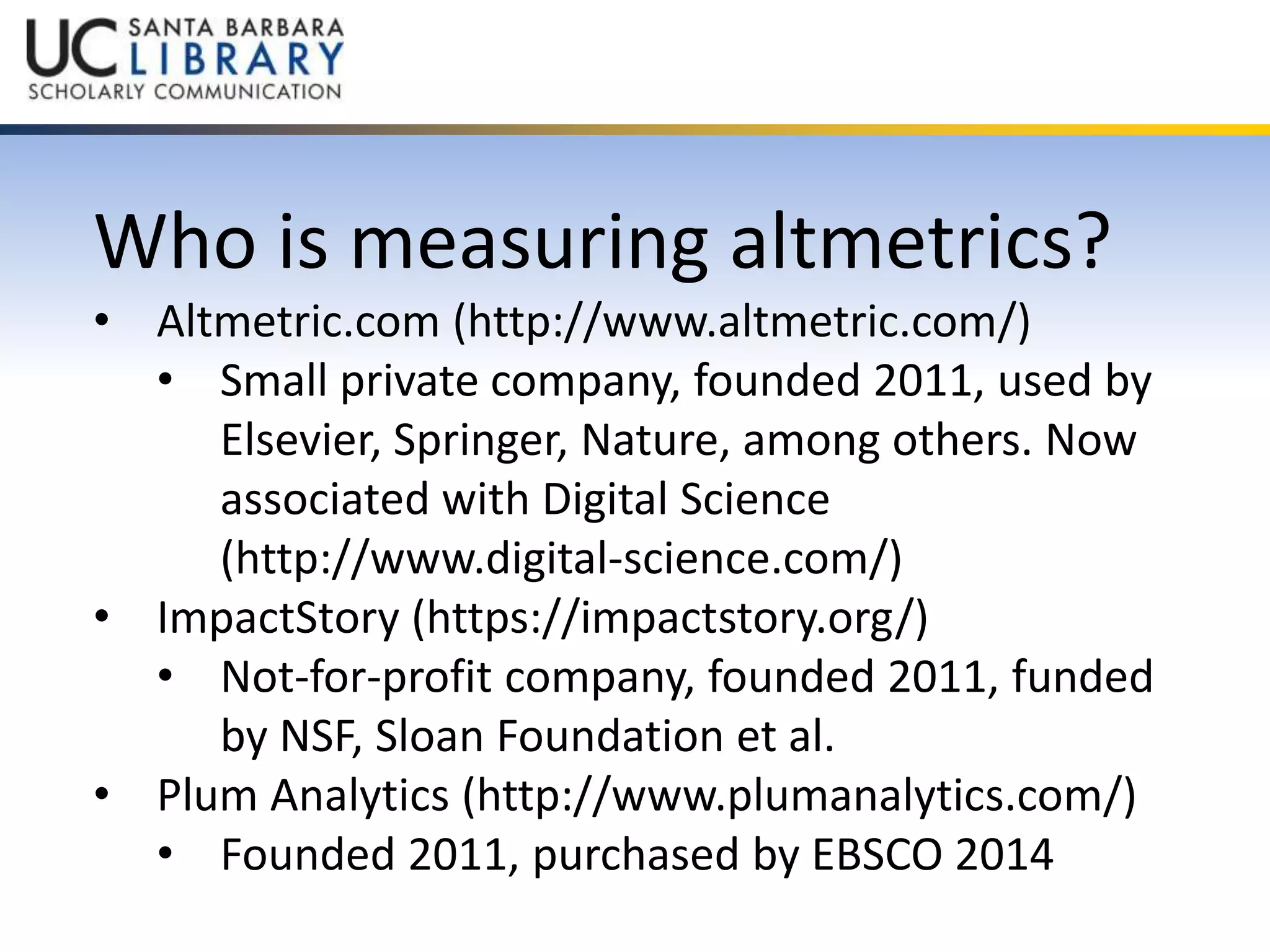

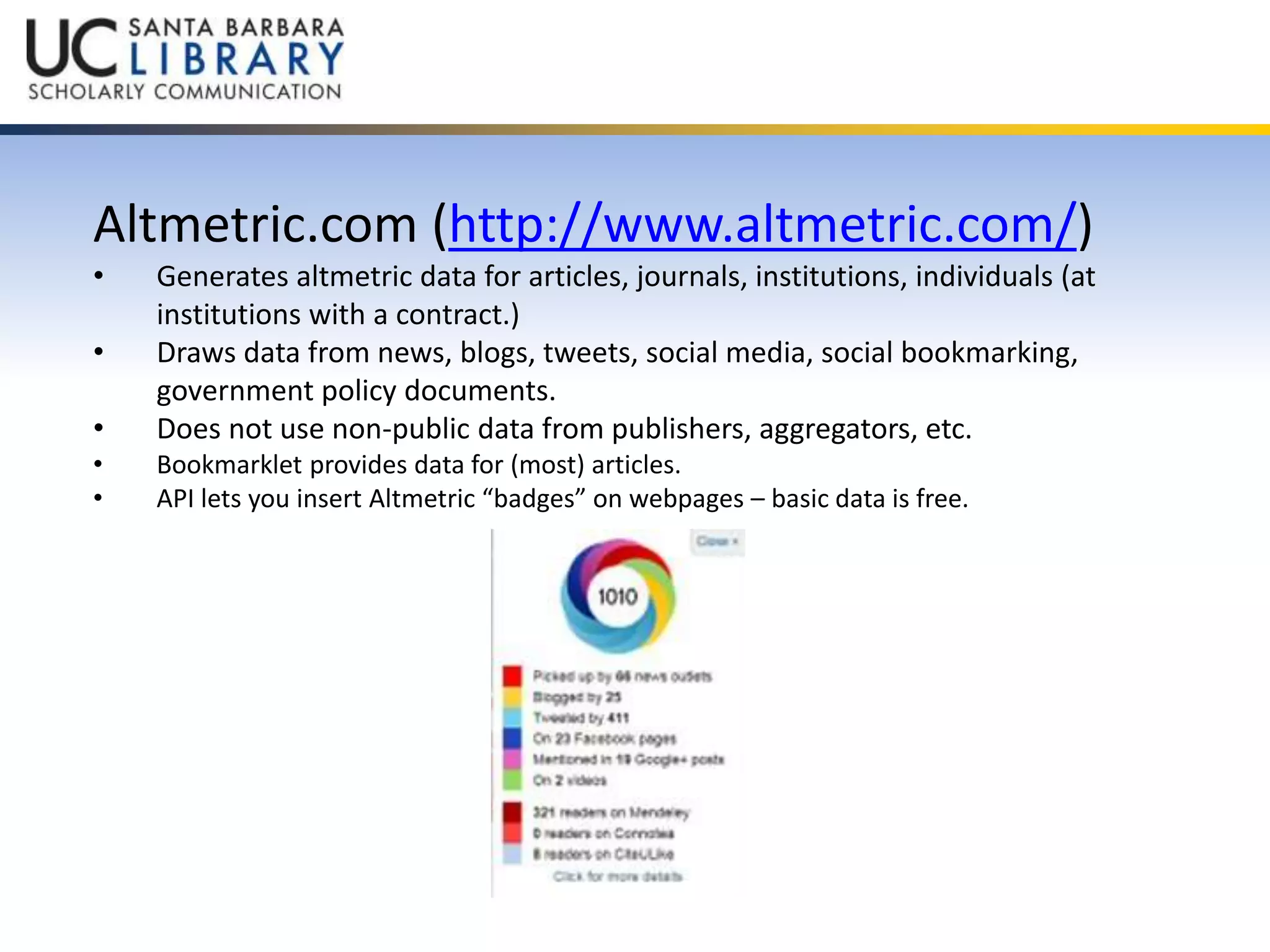

The document discusses altmetrics as alternative metrics for evaluating scholarly contributions in a new publishing environment, addressing the limitations of traditional impact factors. It explains what altmetrics measure and the sources from which they gather data, and highlights several organizations that track them, such as altmetric.com and Plum Analytics. The document also offers guidance for researchers on using altmetrics to increase the visibility of their work and engagement with it.