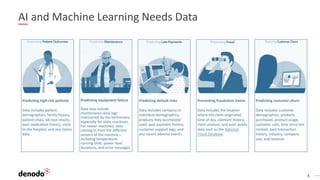

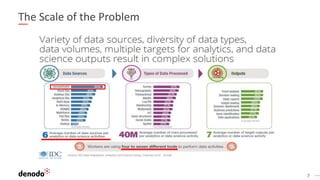

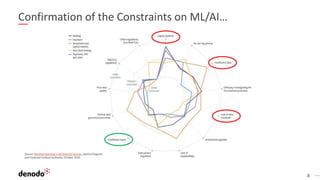

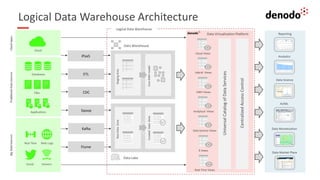

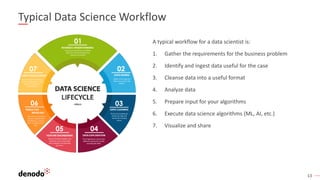

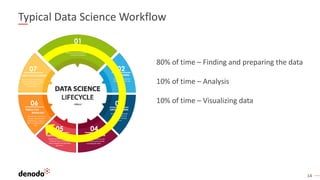

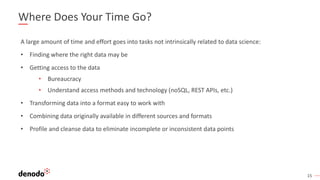

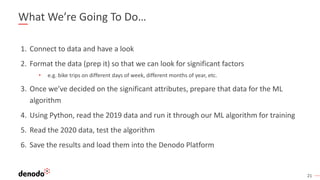

The document discusses the importance of advanced analytics and machine learning in data processing, emphasizing how data virtualization streamlines data pipelines and makes data more accessible. It outlines the challenges in data science workflows, noting that much of the time goes to data preparation rather than to analysis. The Denodo platform is presented as a solution that simplifies access to varied data sources, helping organizations use their data more efficiently for analytics and machine learning.