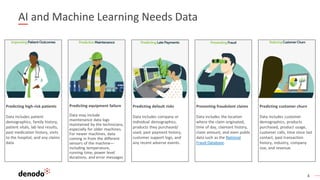

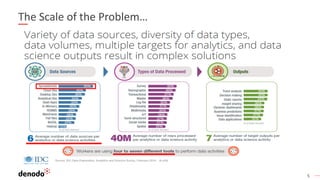

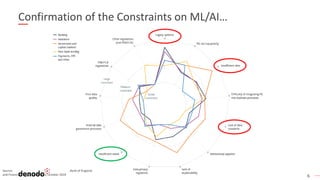

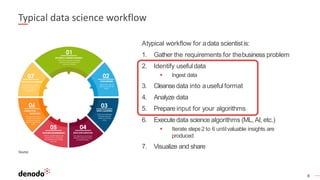

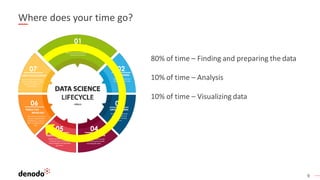

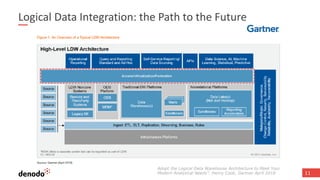

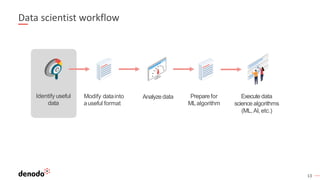

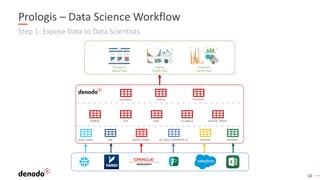

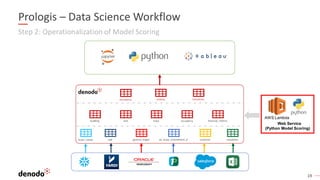

The document discusses the challenges of advanced analytics and machine learning and their solutions, emphasizing the role of data virtualization in streamlining the data pipeline. It highlights the difficulties data scientists face in data preparation and presents a customer story from Prologis, which used data virtualization to improve the efficiency and speed of its analytics projects. The content suggests that adopting a logical data architecture can significantly improve data access and operational capabilities for AI and machine learning initiatives.