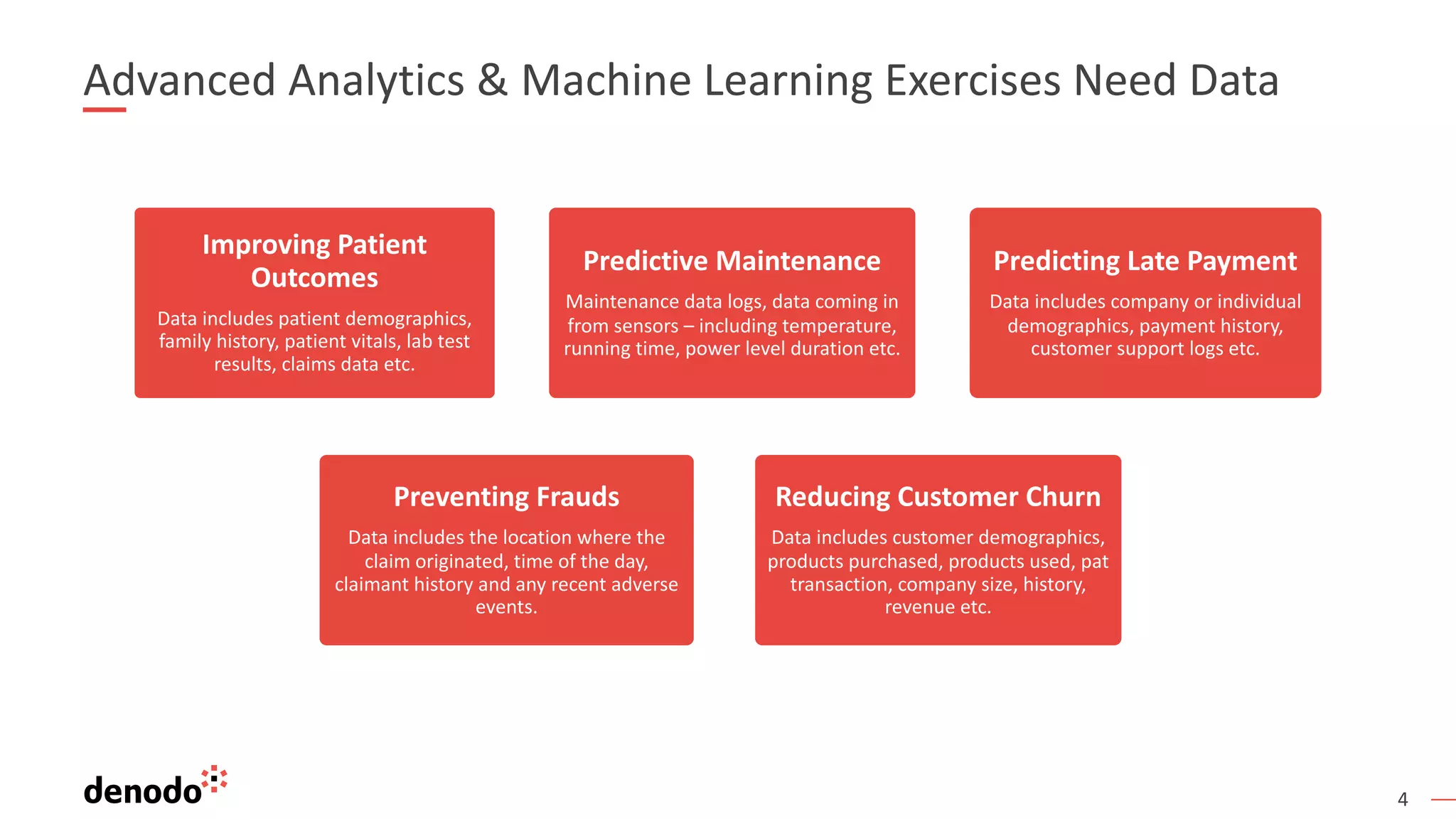

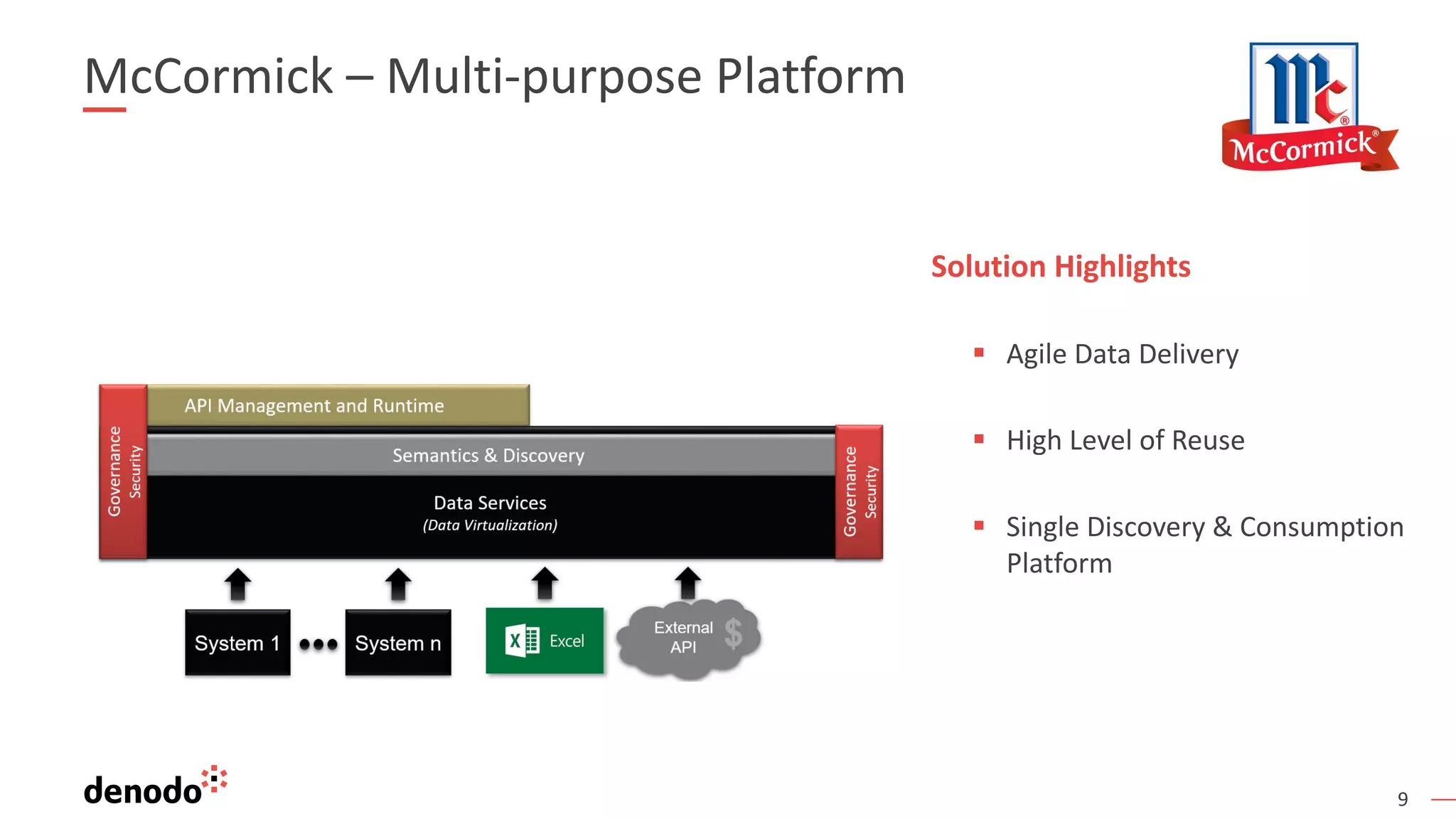

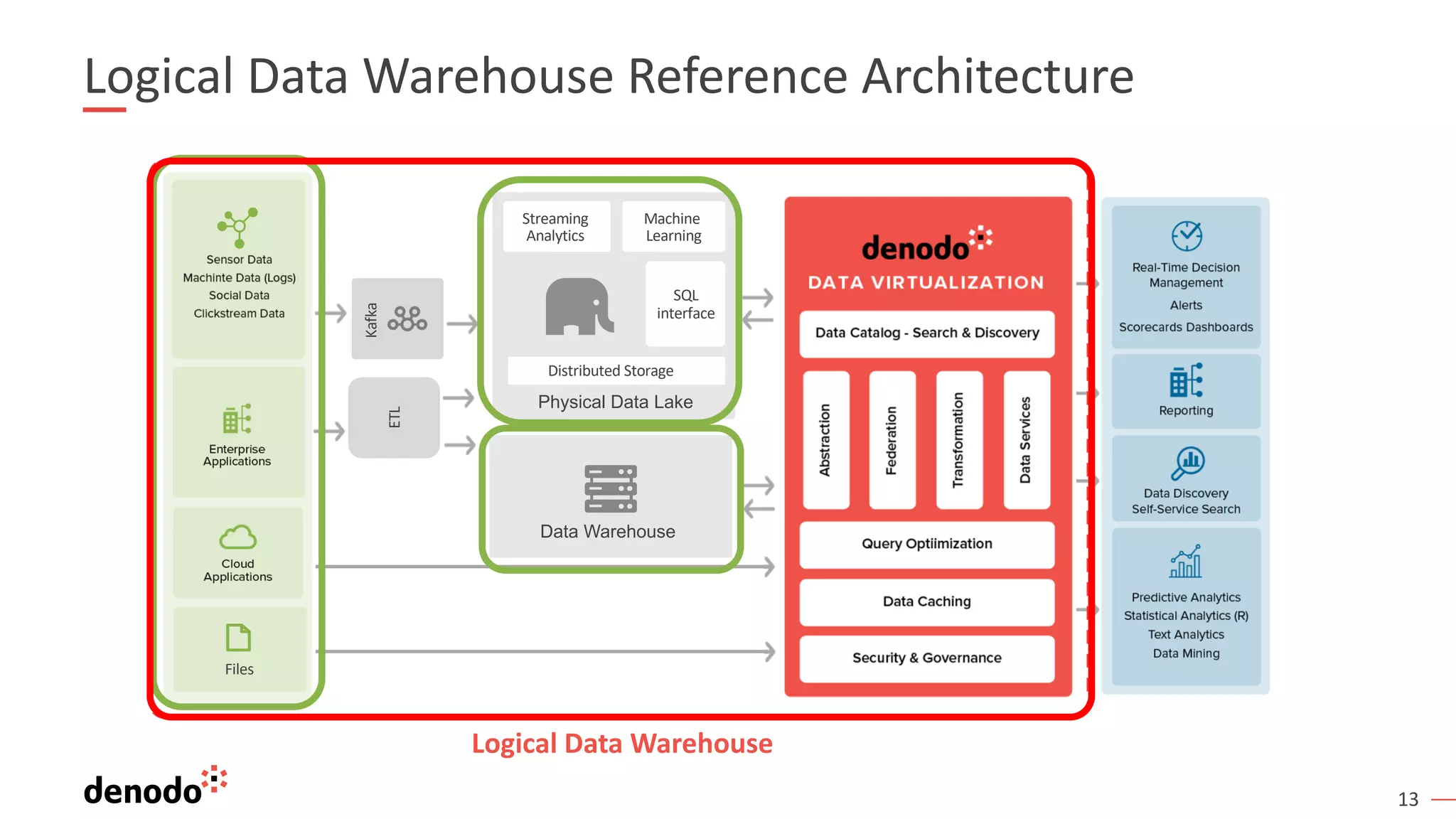

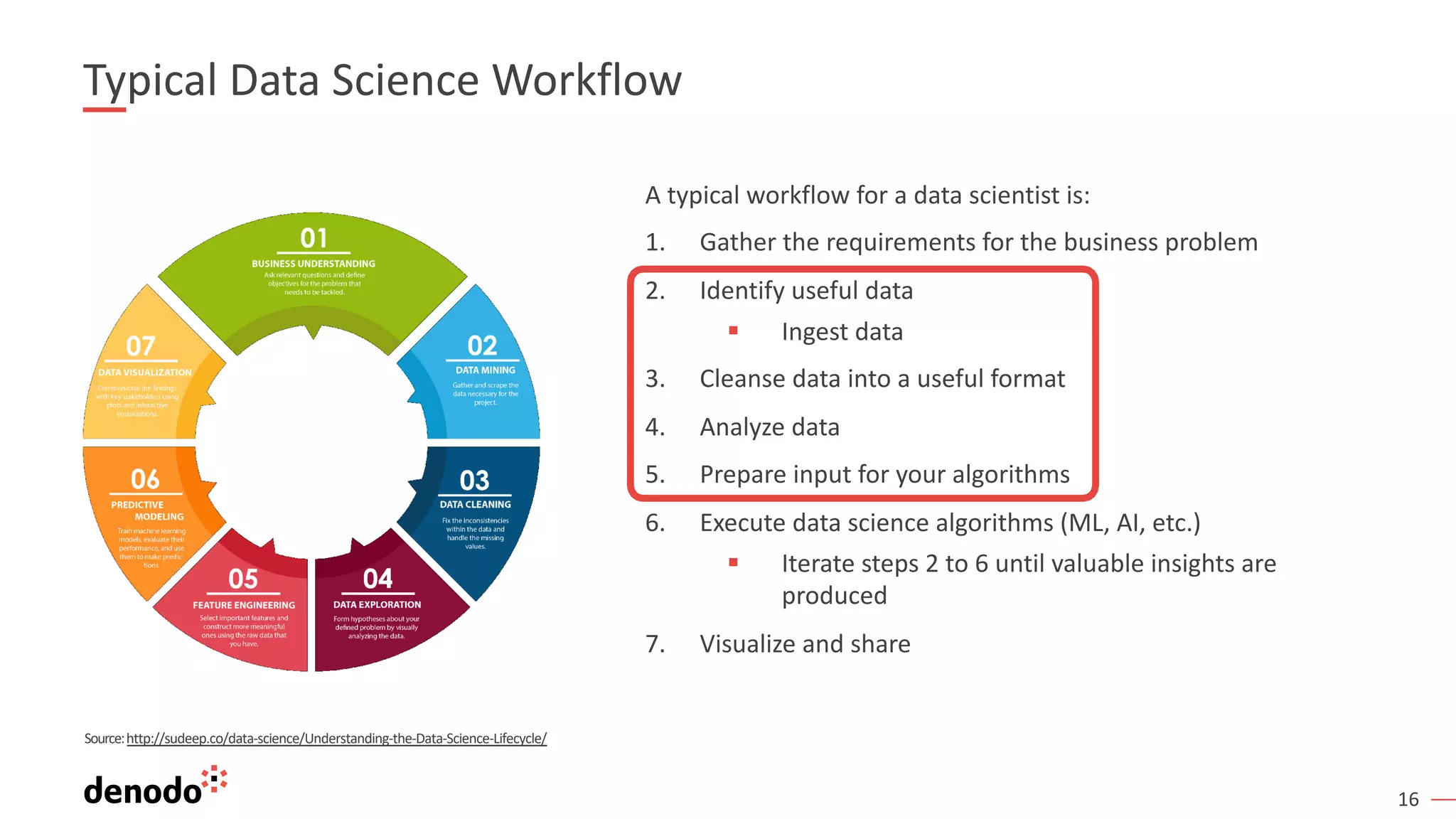

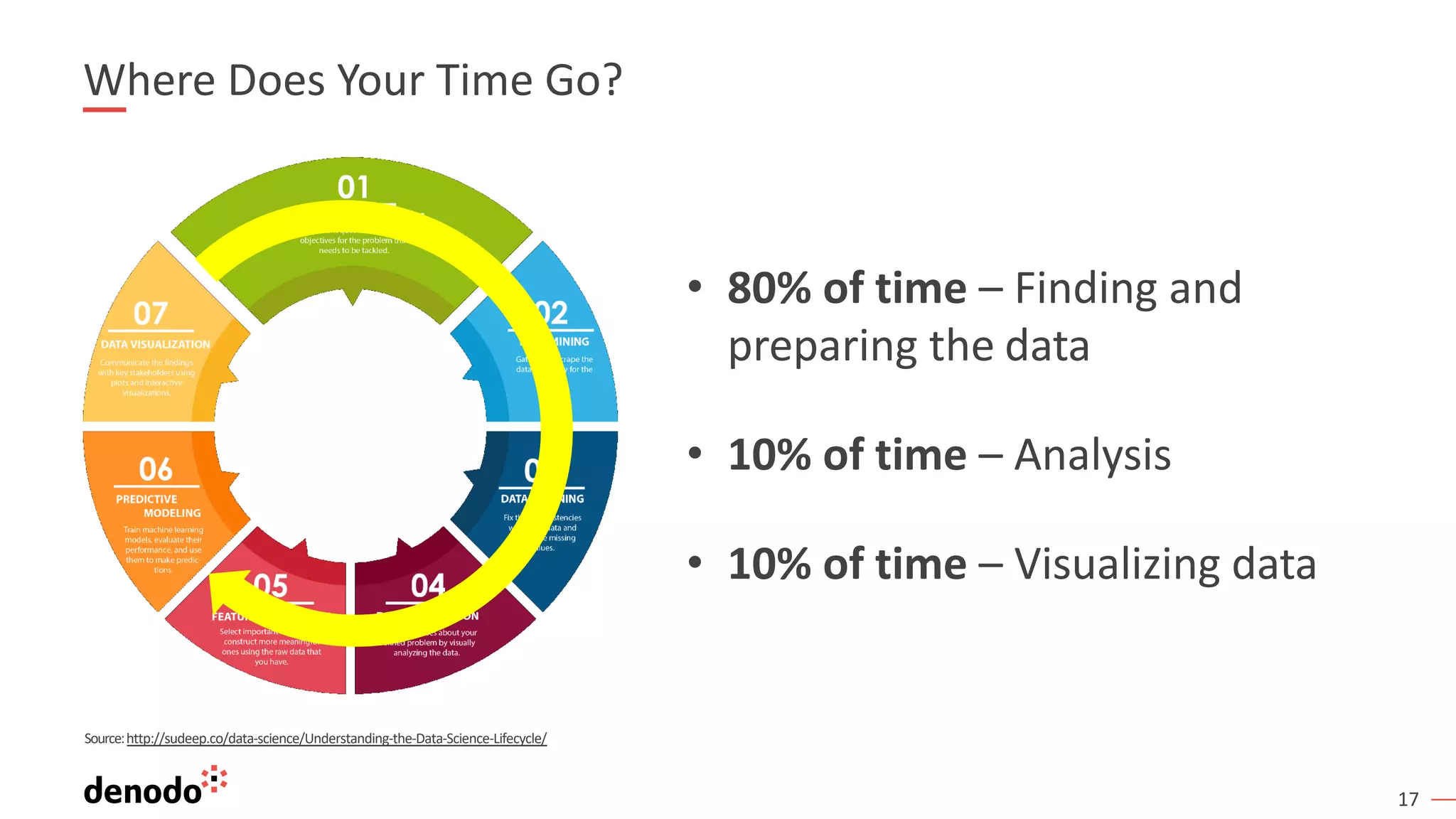

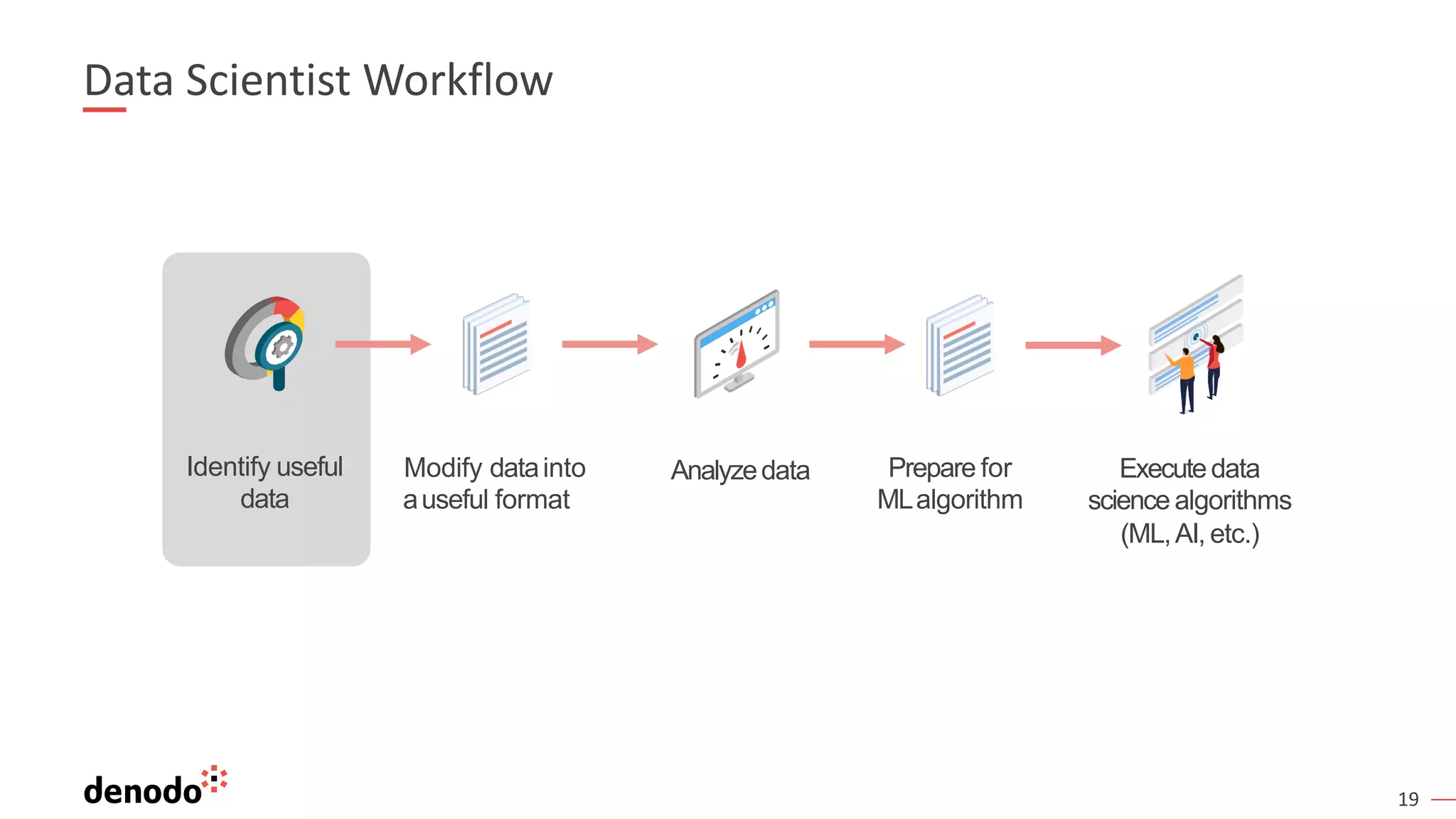

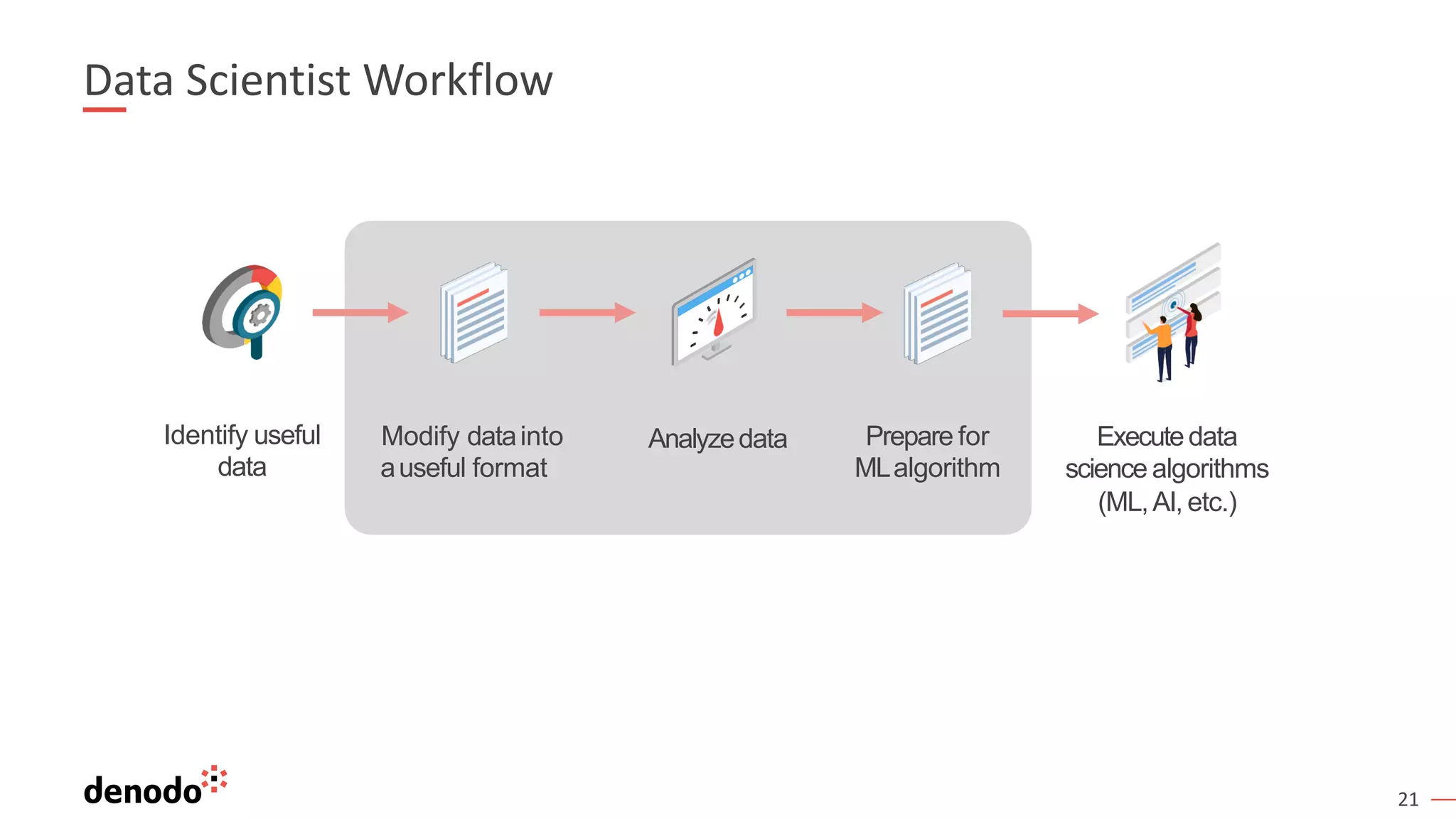

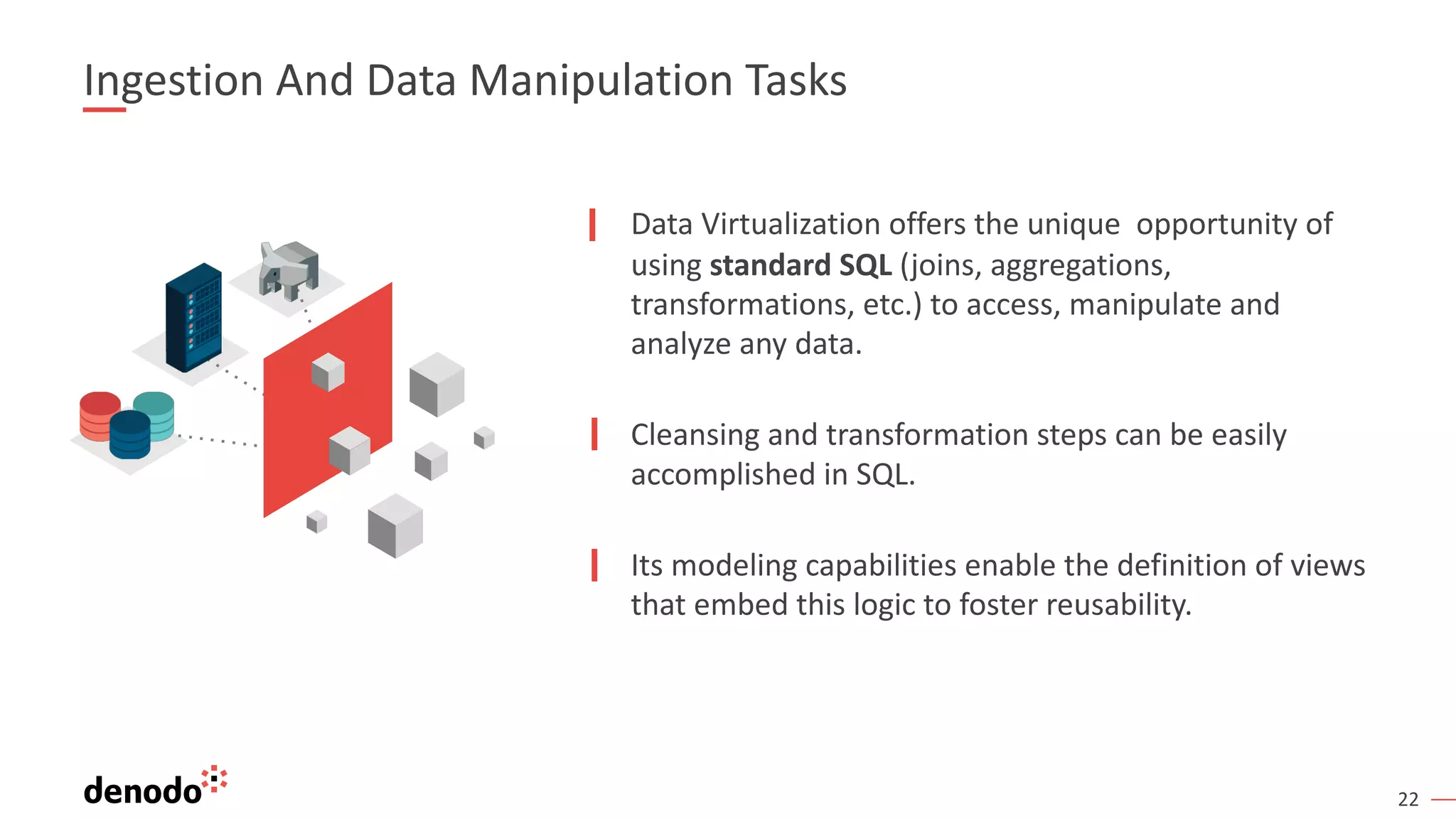

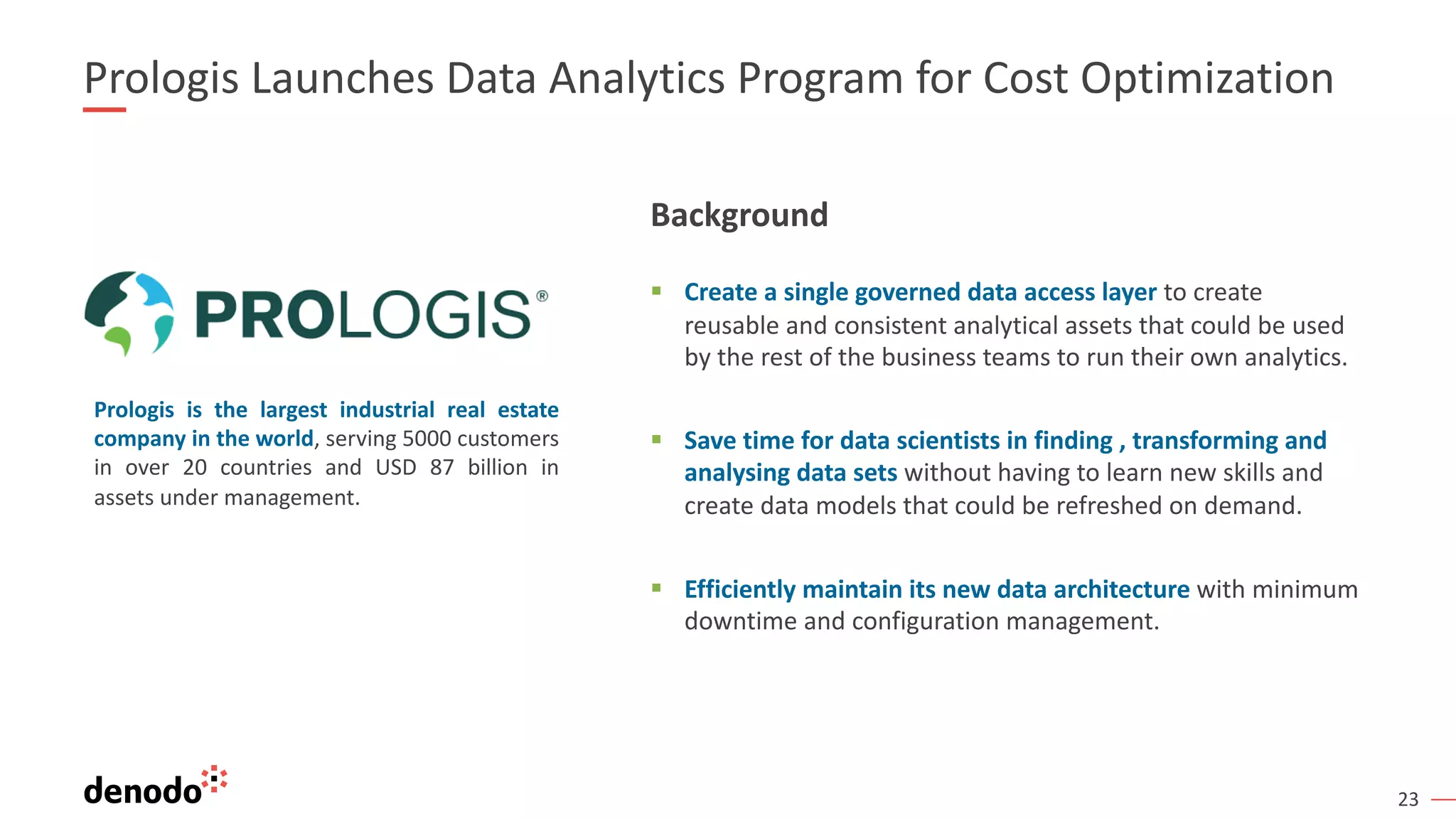

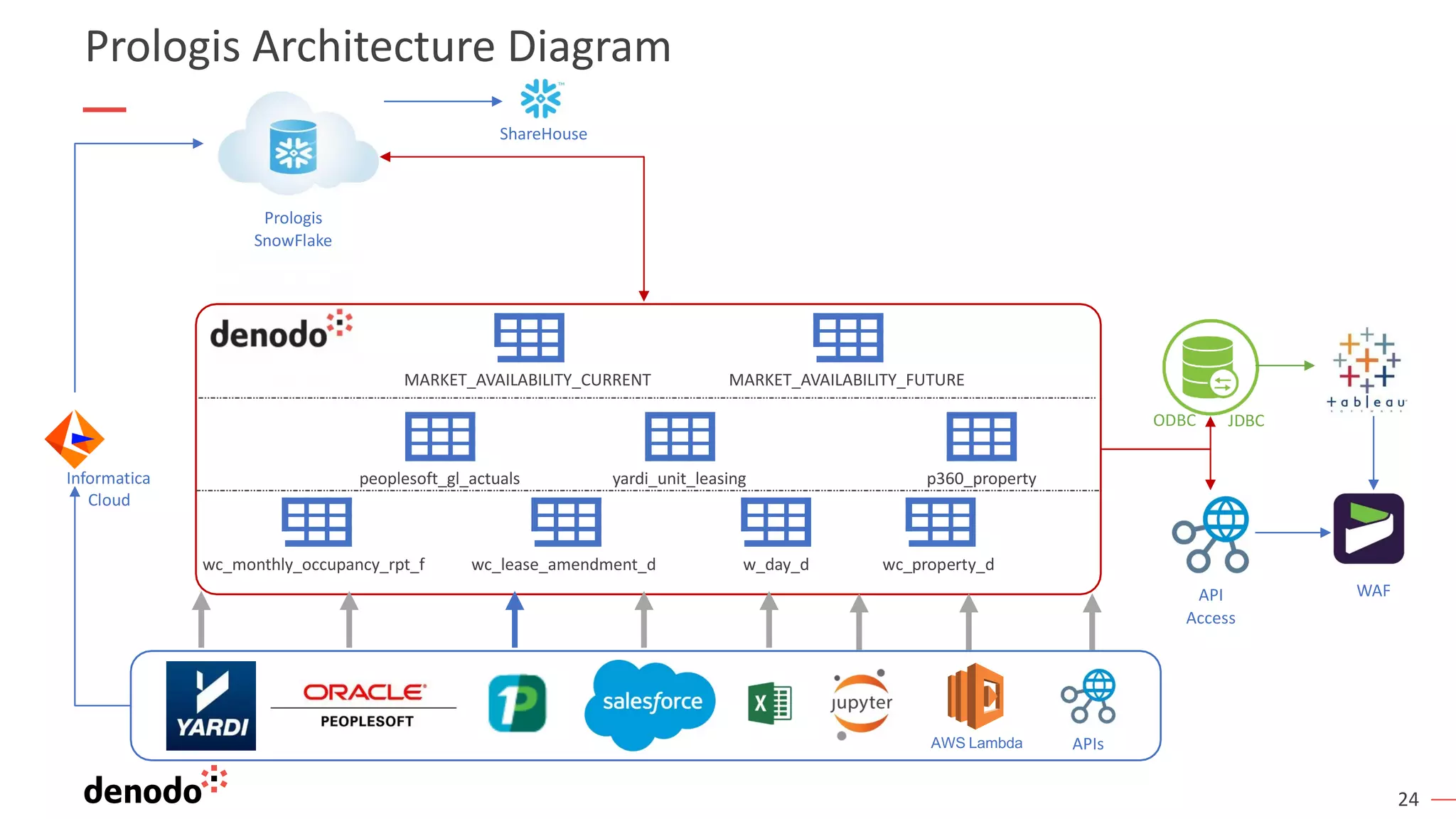

The document discusses the role of data virtualization in deploying enterprise machine learning programs, highlighting how it improves data access and integration. Examples from companies such as McCormick and Prologis show how data virtualization speeds up analytics and simplifies data management. The document emphasizes the importance of a logical data architecture for overcoming data pipeline challenges and supporting advanced analytics initiatives.