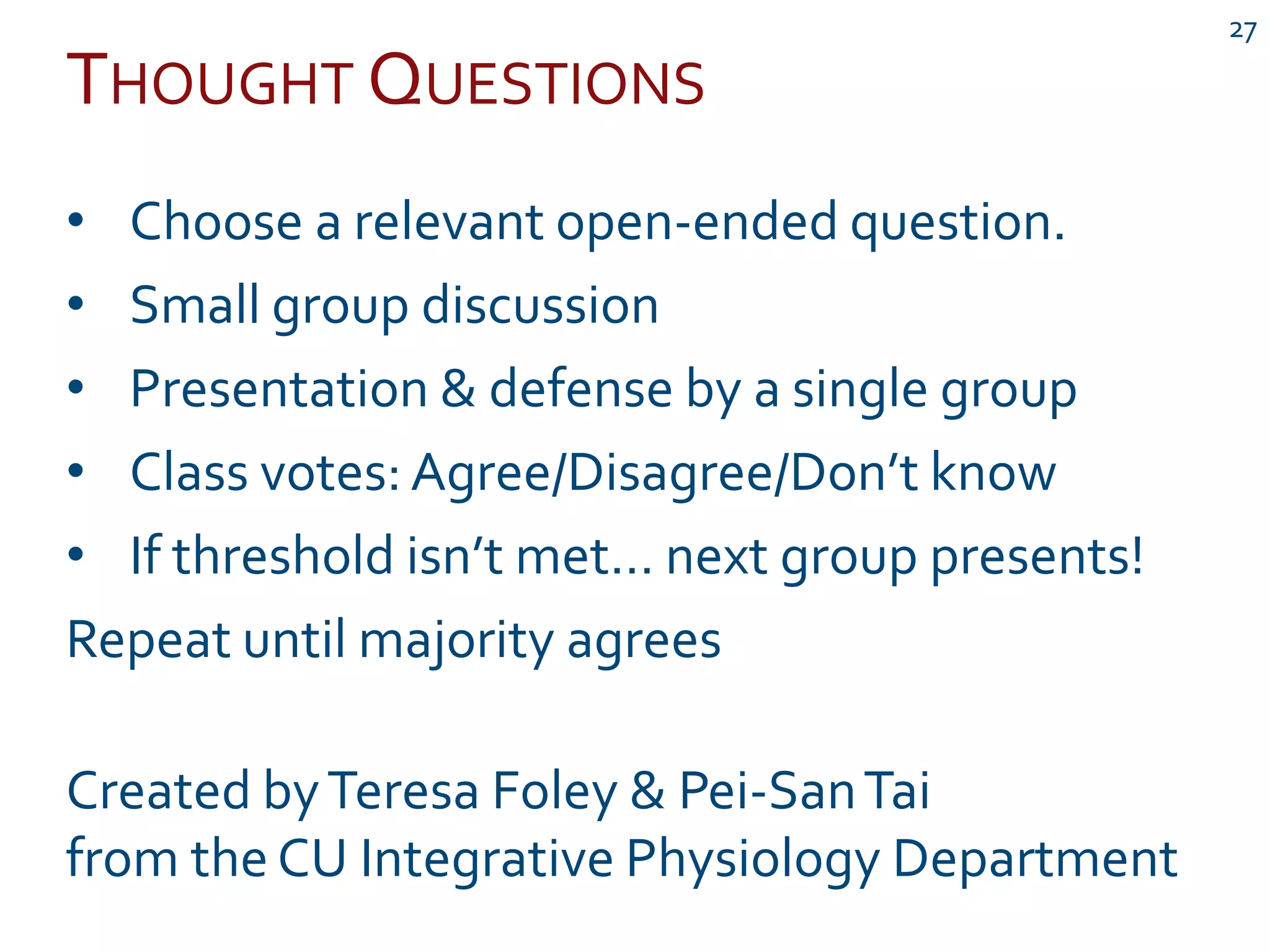

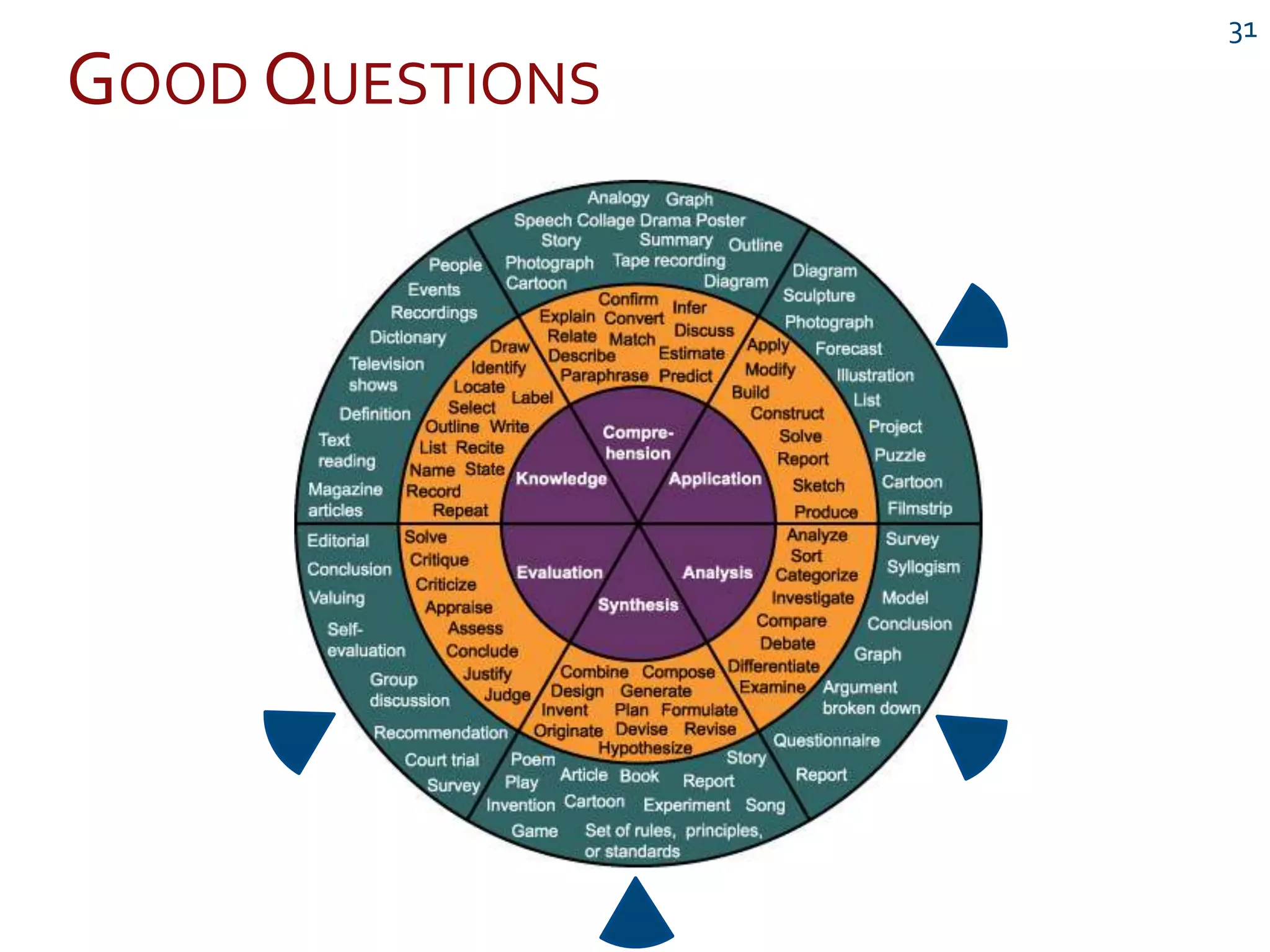

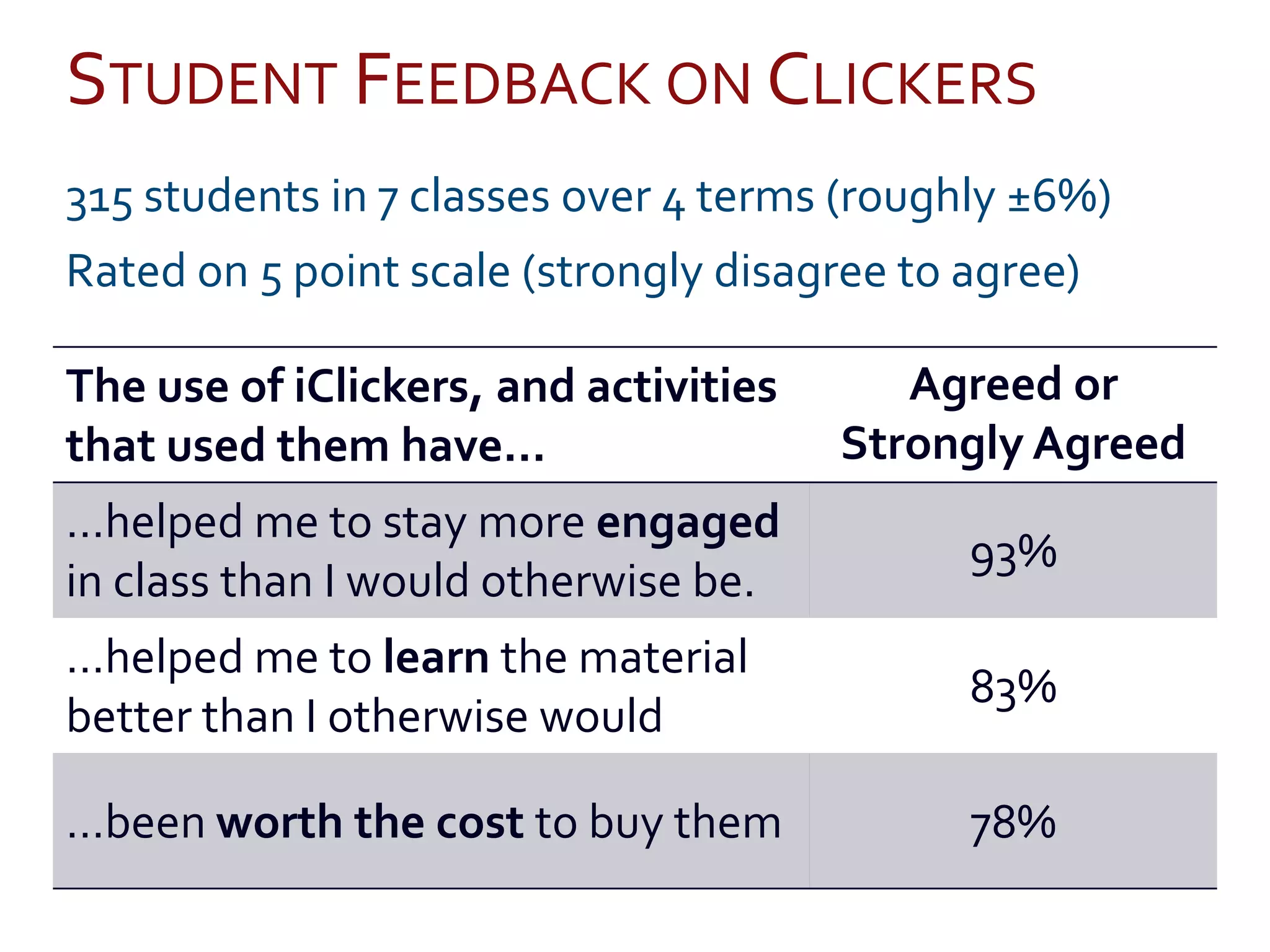

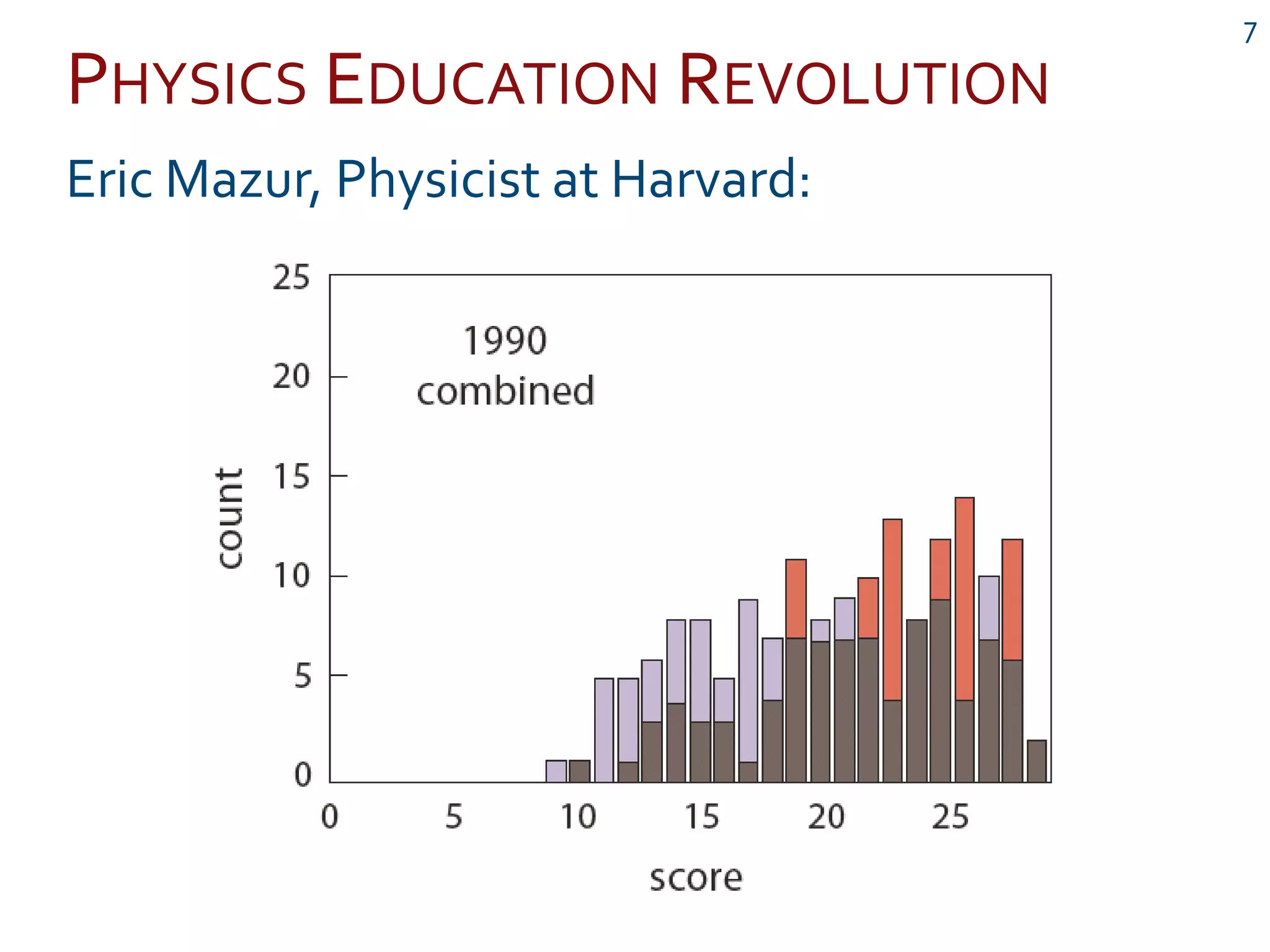

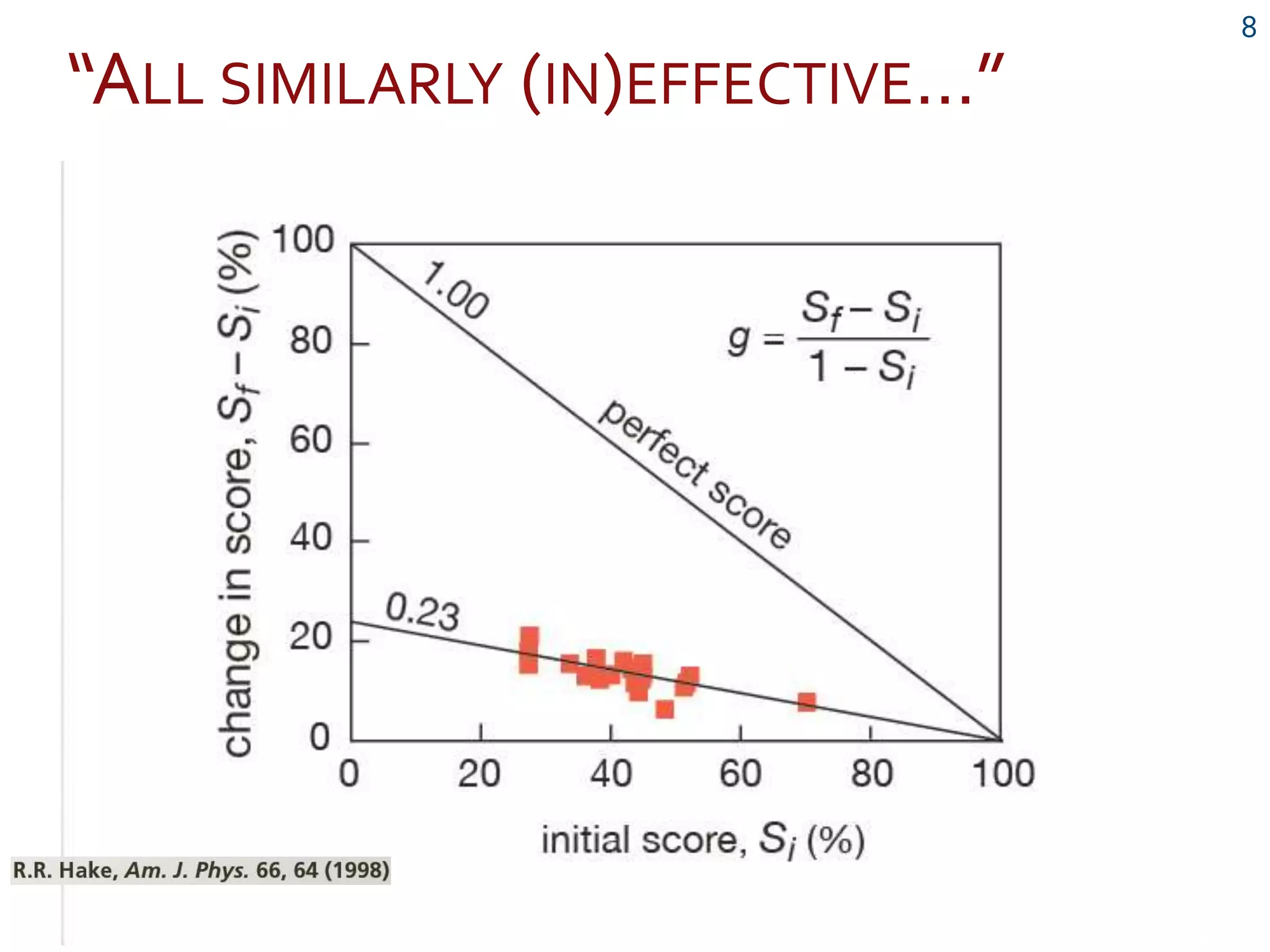

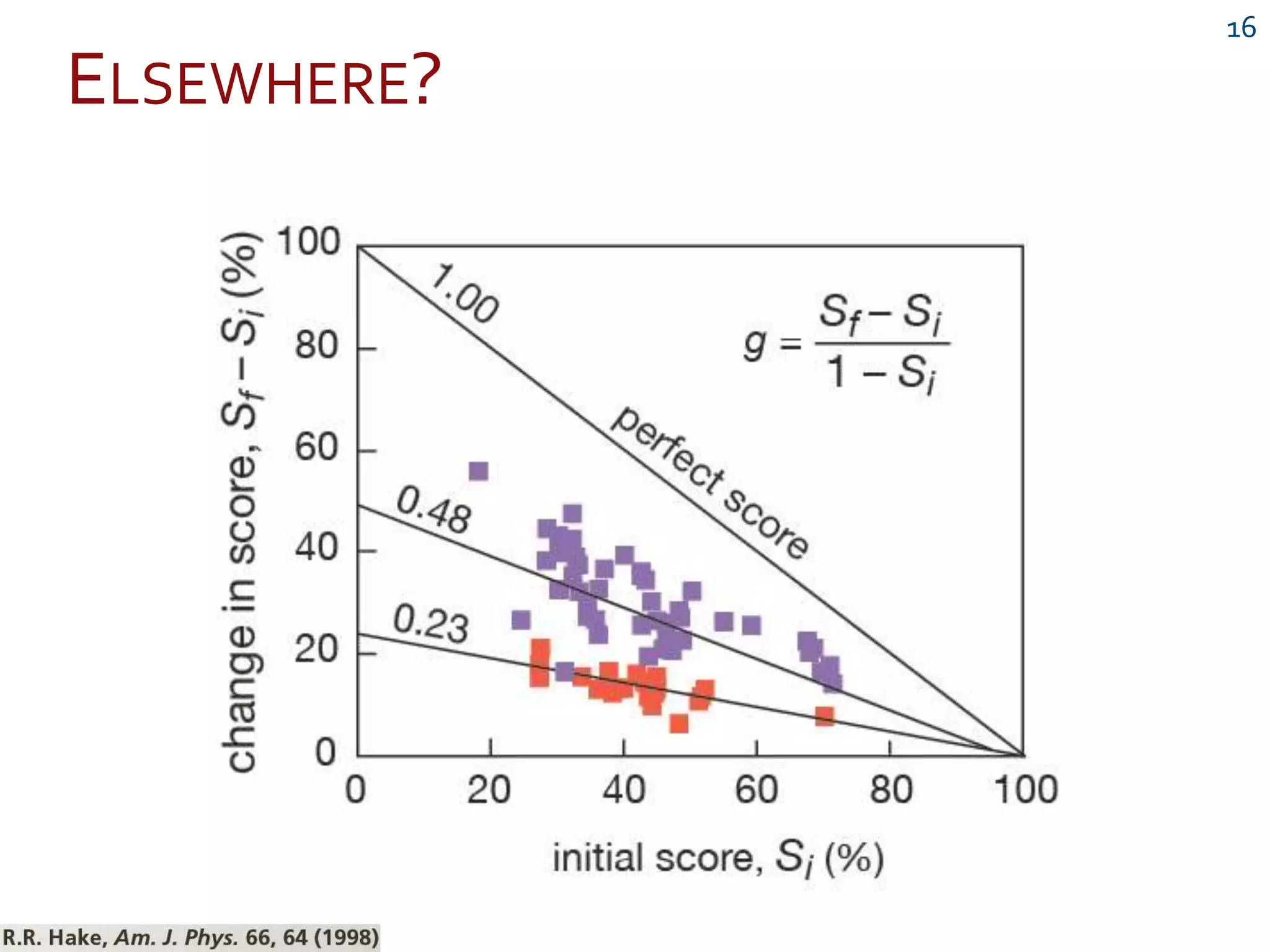

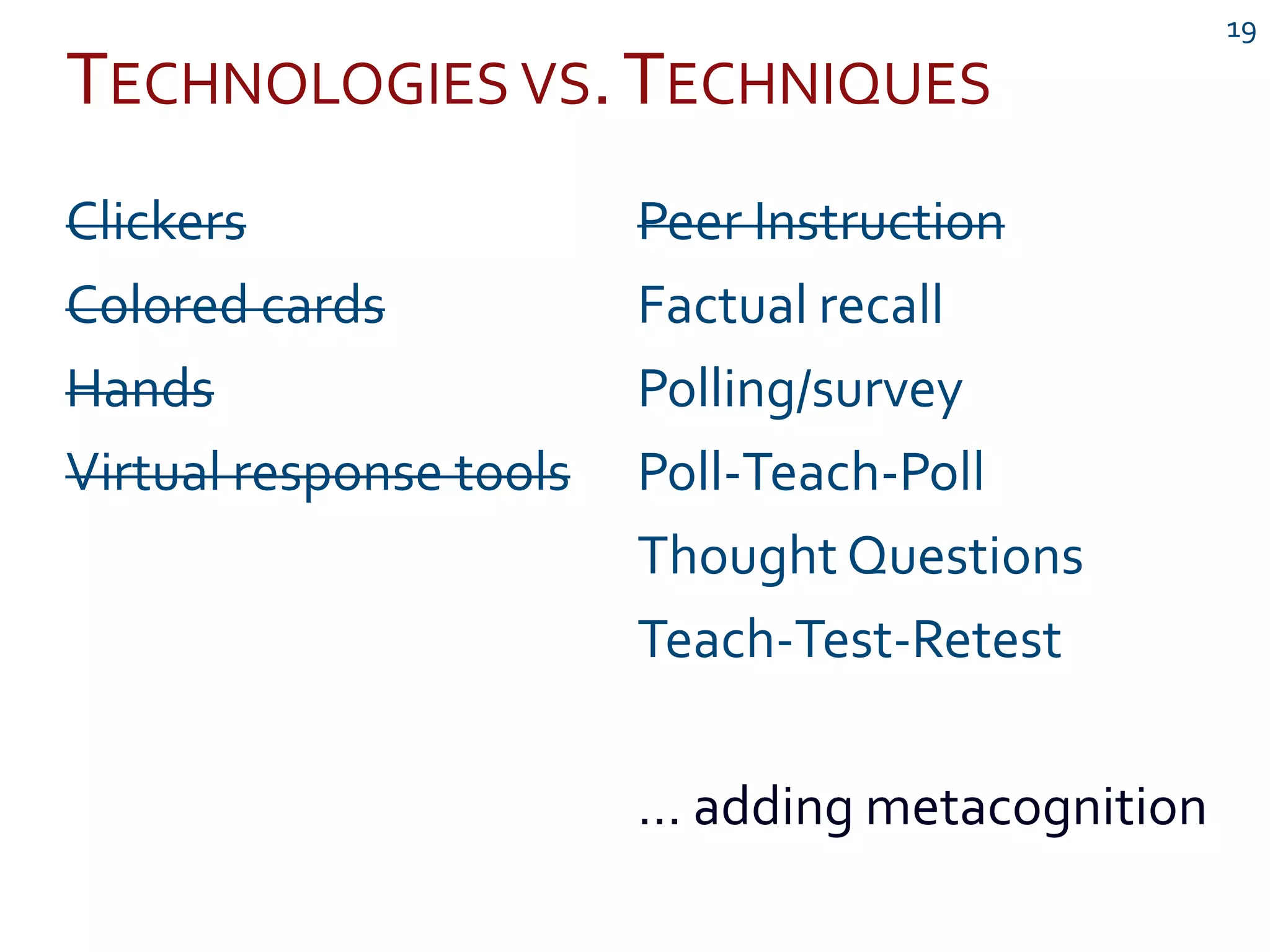

This document discusses the use of classroom response systems, also known as clickers, to promote active engagement in university courses. It provides an overview of techniques like peer instruction and thought questions that can be used with clickers. The evidence suggests that clickers help students learn and perform better on assessments by encouraging participation and concentration. While clickers are effective, the presenter emphasizes that pedagogical techniques are more important than the technology itself, and instructors should start small and focus on conceptual understanding over factual recall.

![Slide 24: clicker question on bias in the social sciences](https://image.slidesharecdn.com/activeengagementusingclassroomresponsesystems-csupueblo-jeffloats-130619155007-phpapp01/75/Active-Engagement-Using-Classroom-Response-Systems-CSU-Pueblo-Jeff-Loats-24-2048.jpg)

How large an effect does bias have in the social sciences? [Measurement was of faculty responsiveness to prospective student emails.]

A) Women/minorities do worse by ~11%

B) Women/minorities do worse by ~3%

C) No difference across gender/ethnicities

D) Caucasian males do worse by ~3%

E) Caucasian males do worse by ~11%