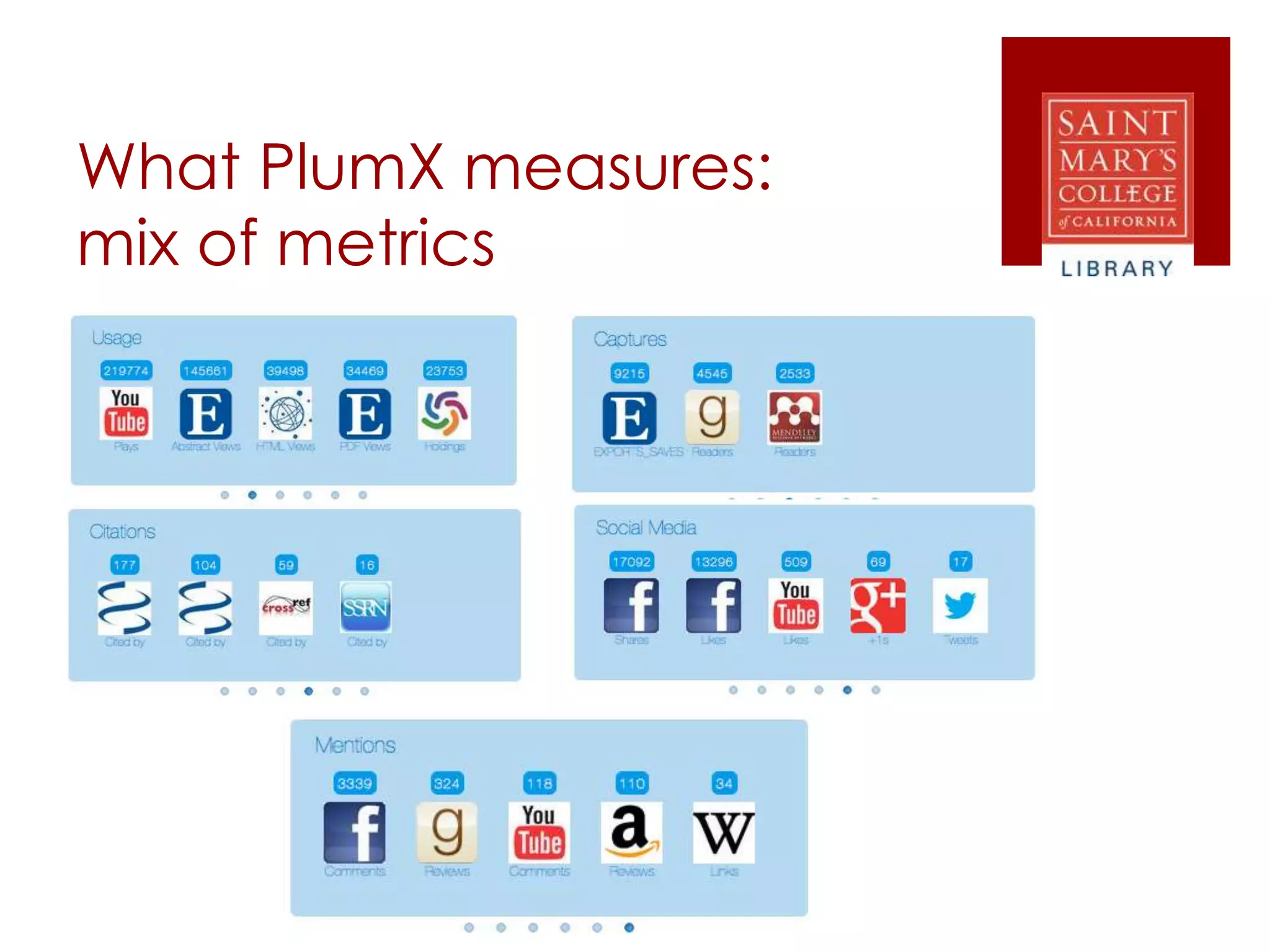

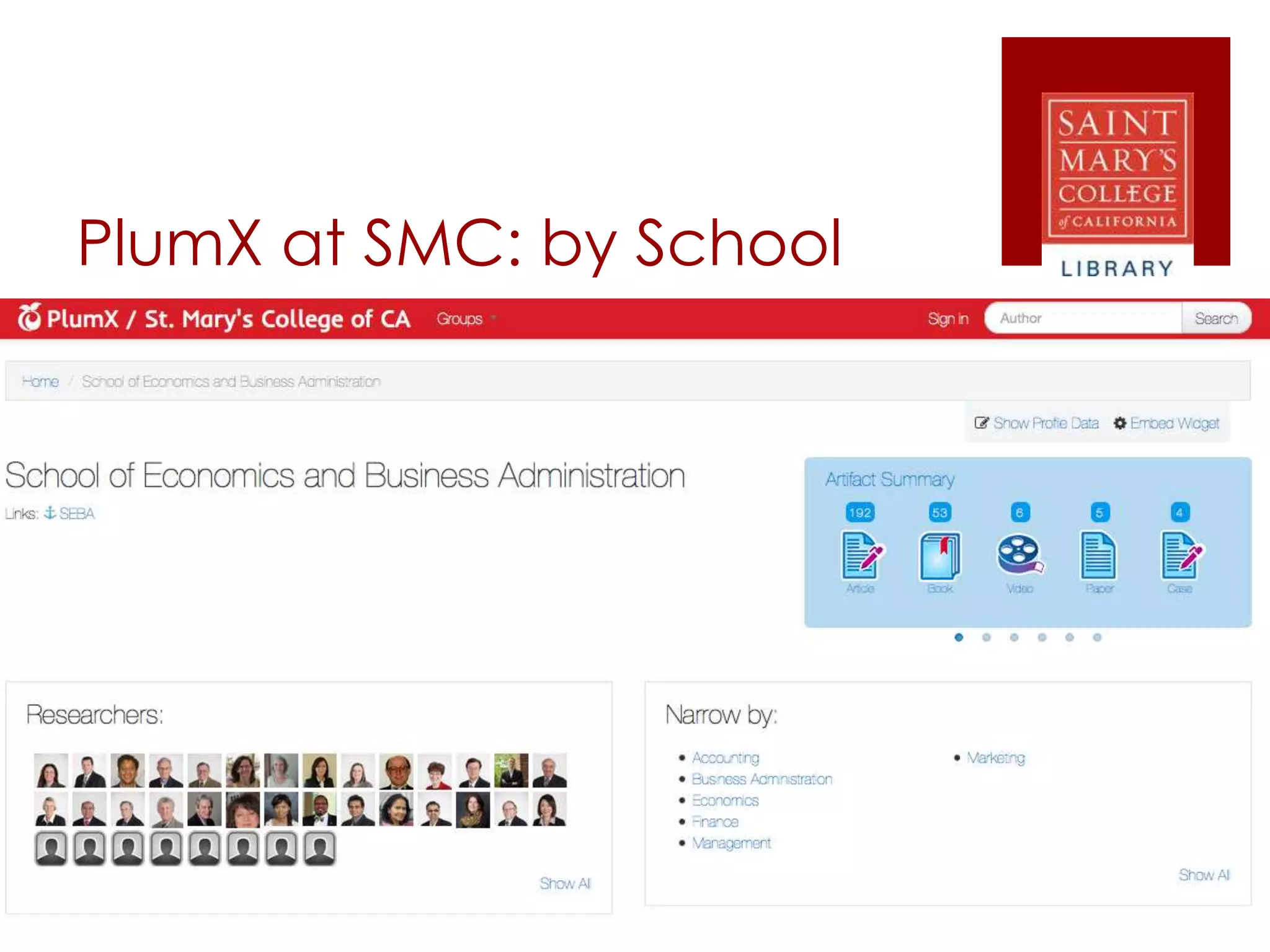

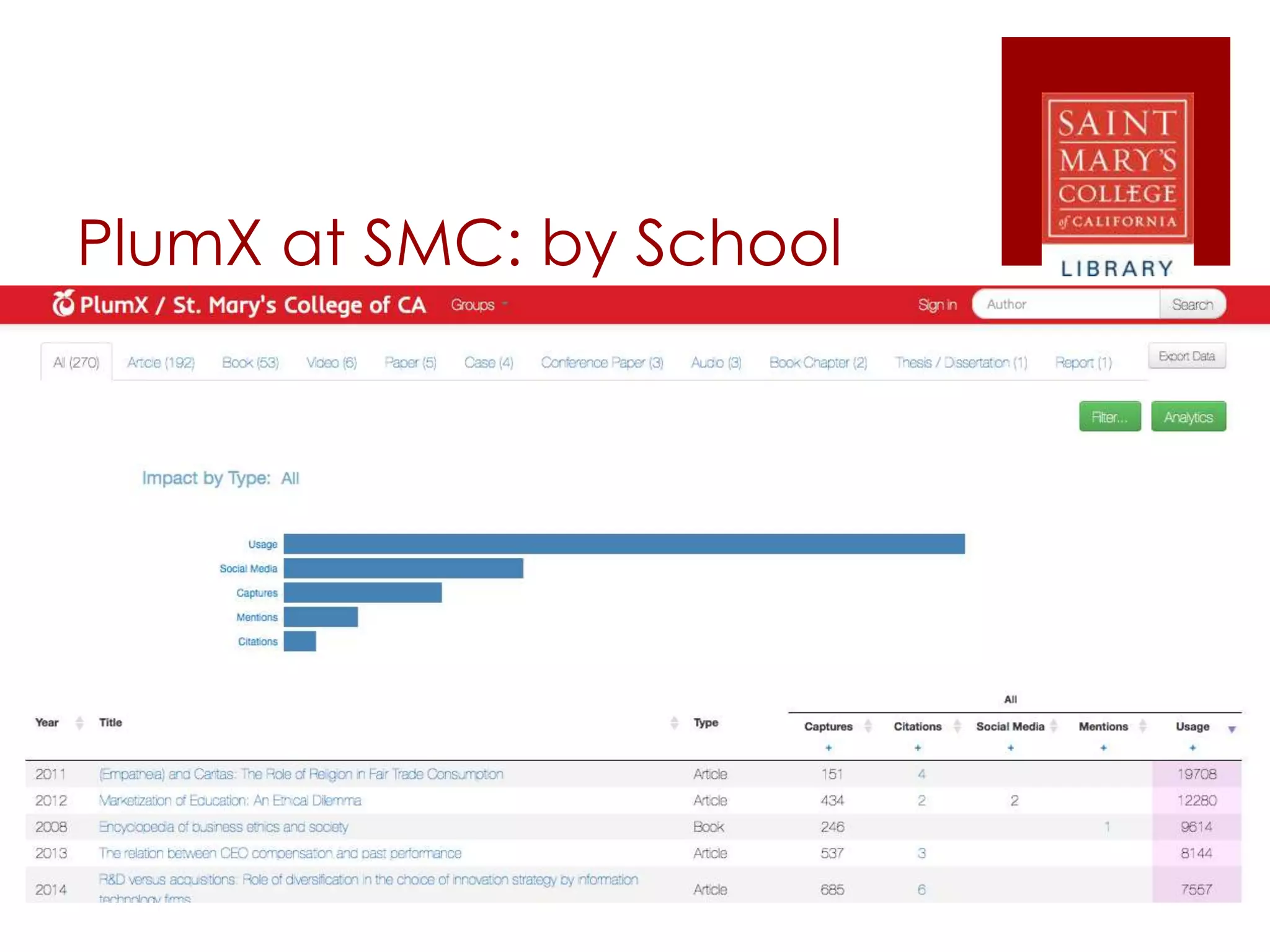

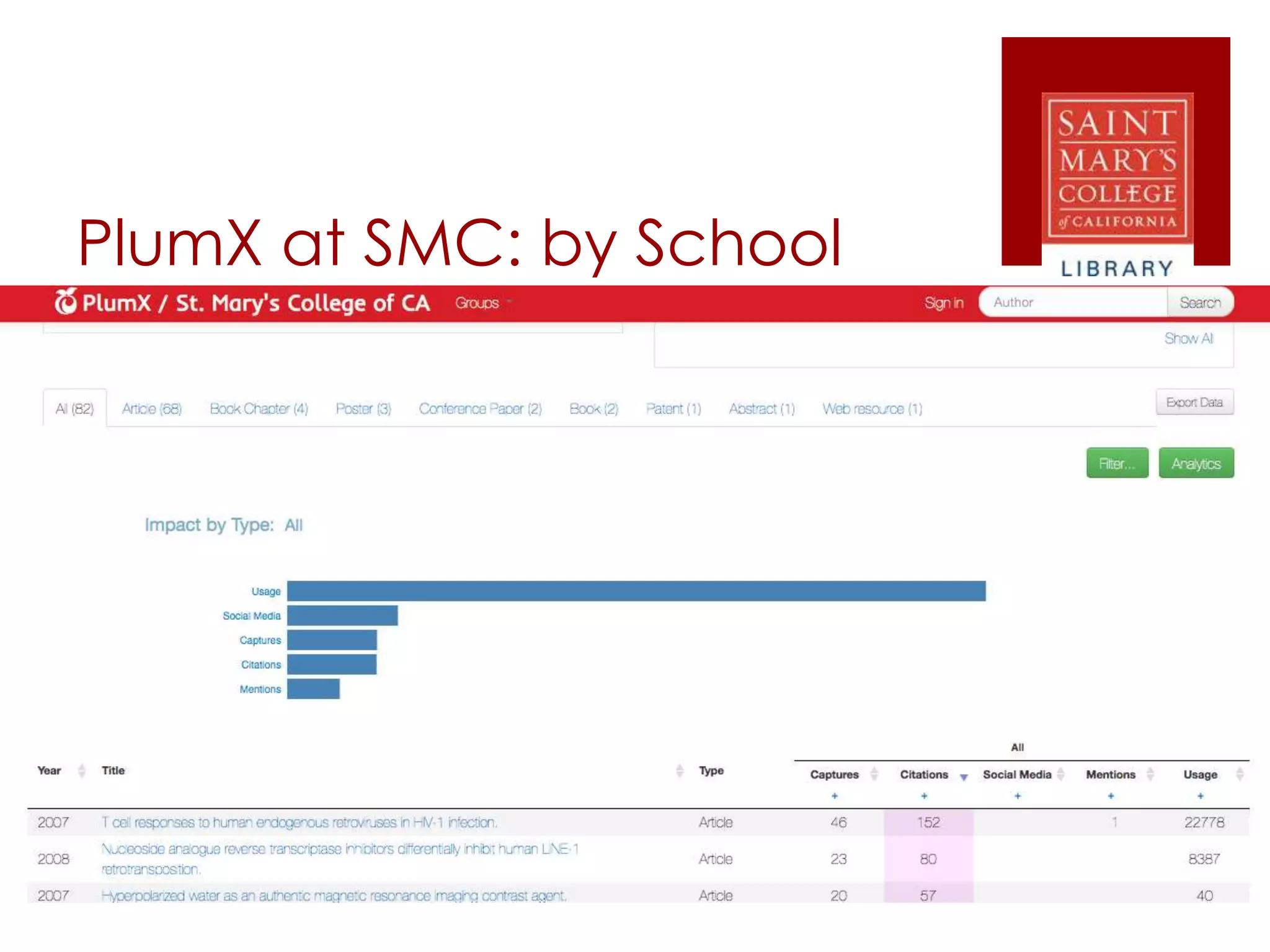

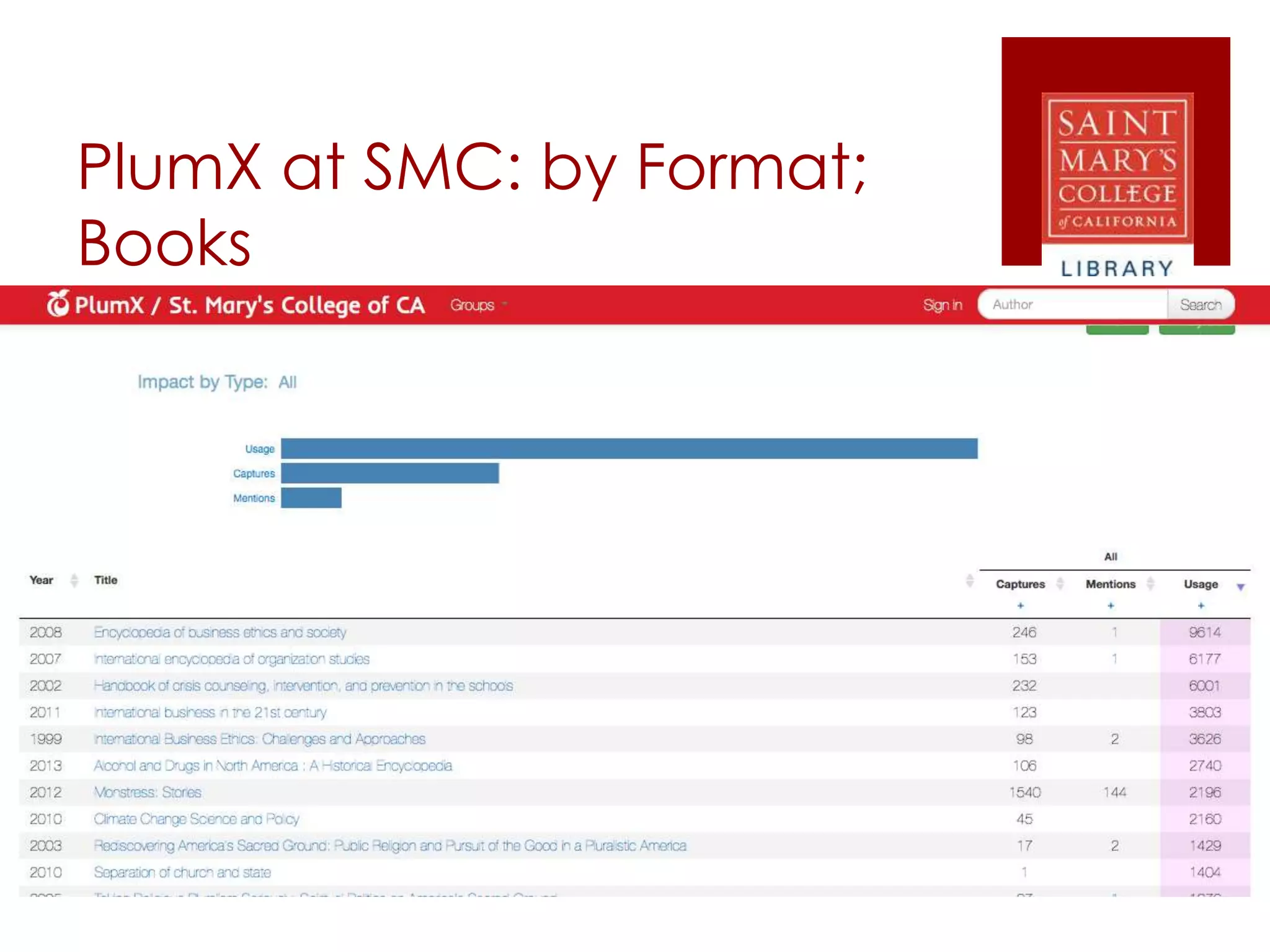

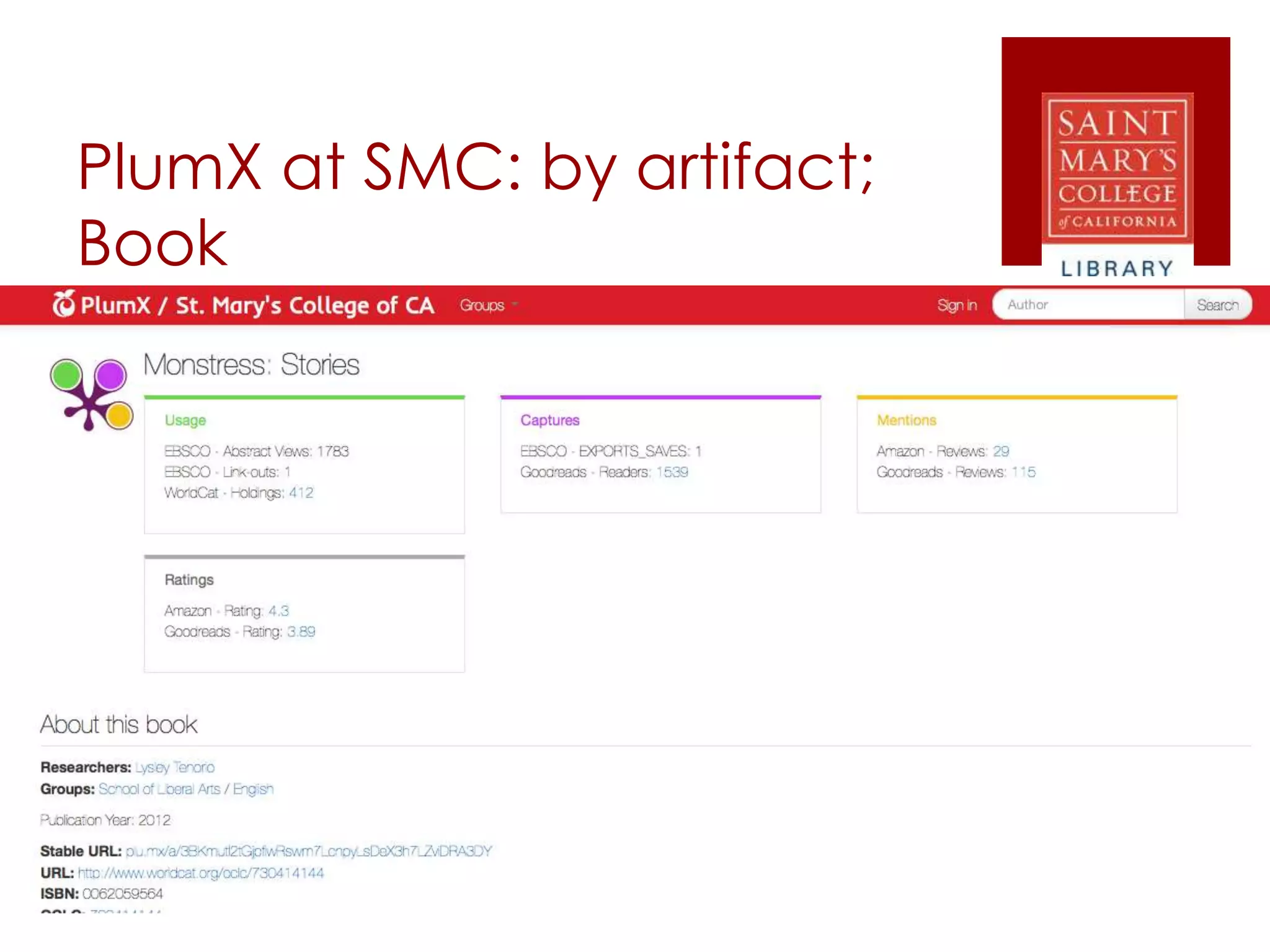

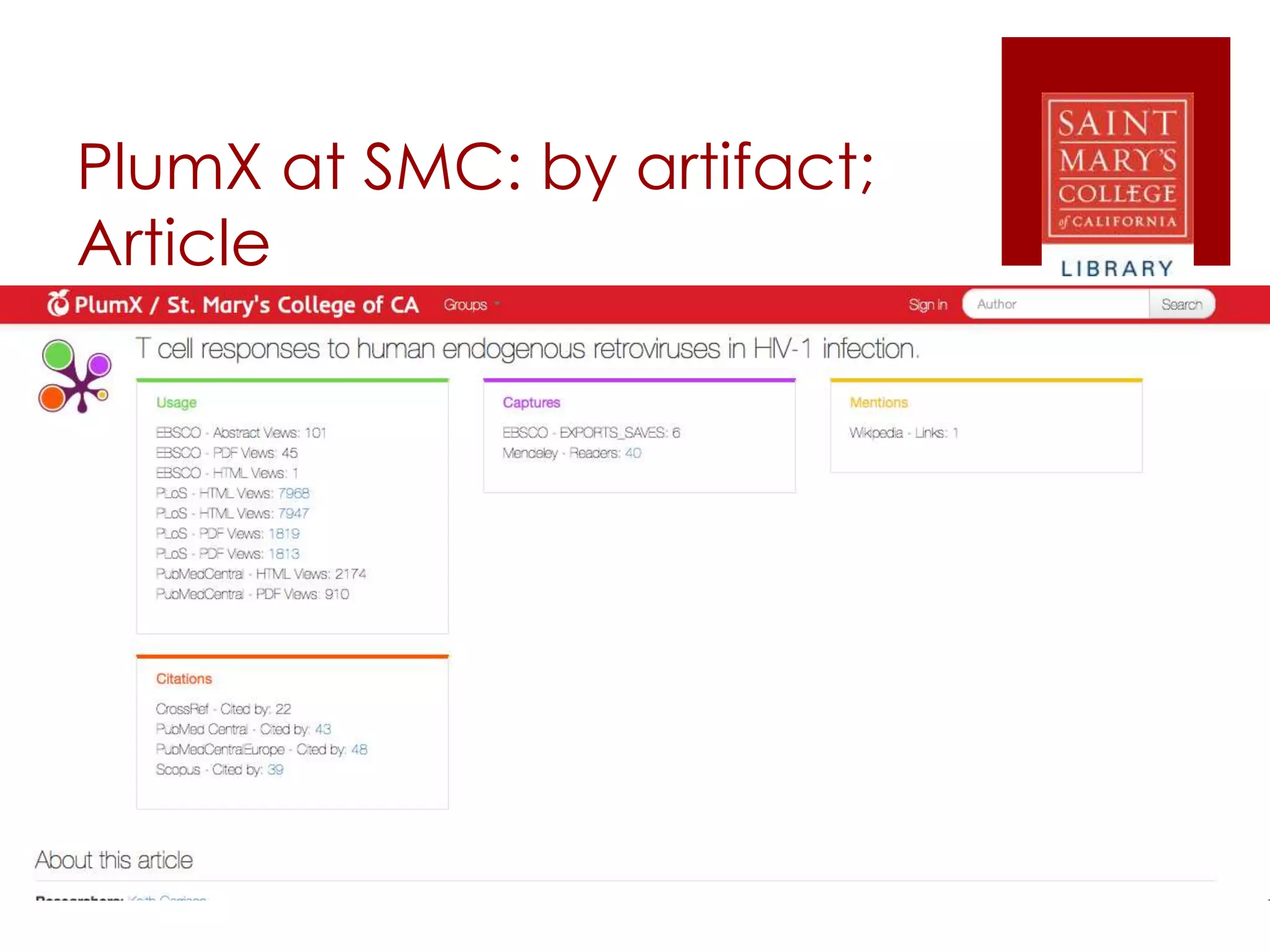

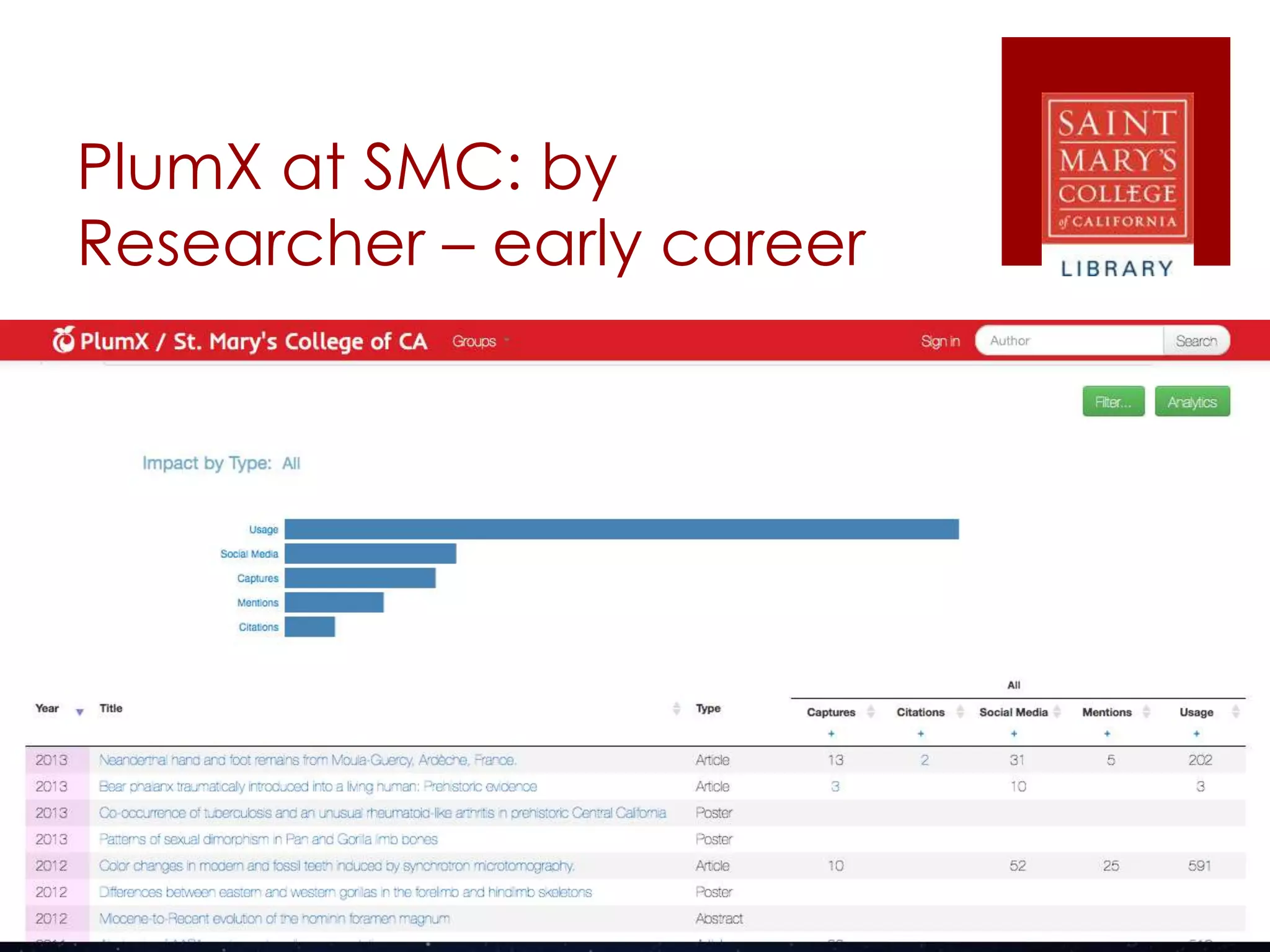

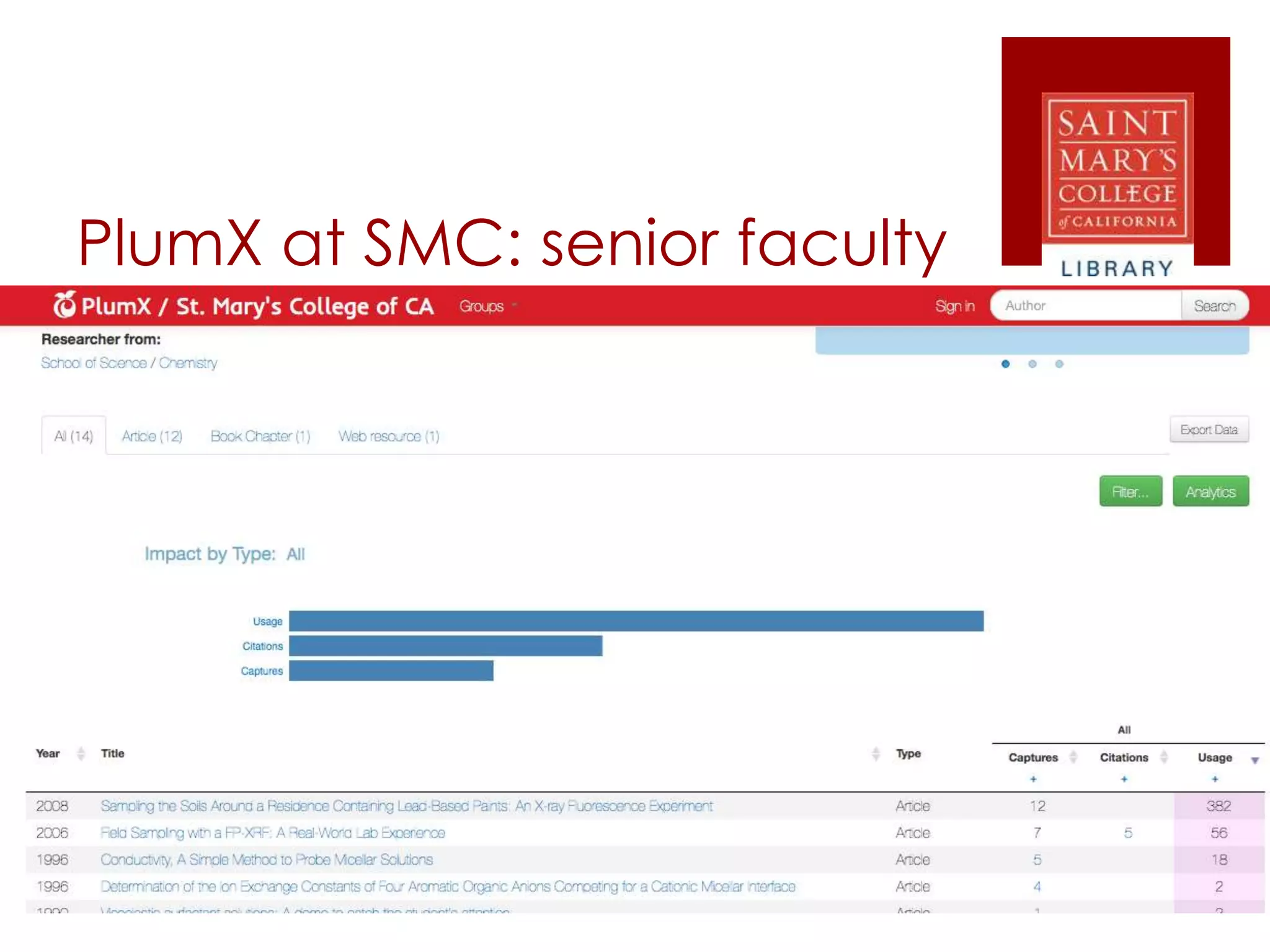

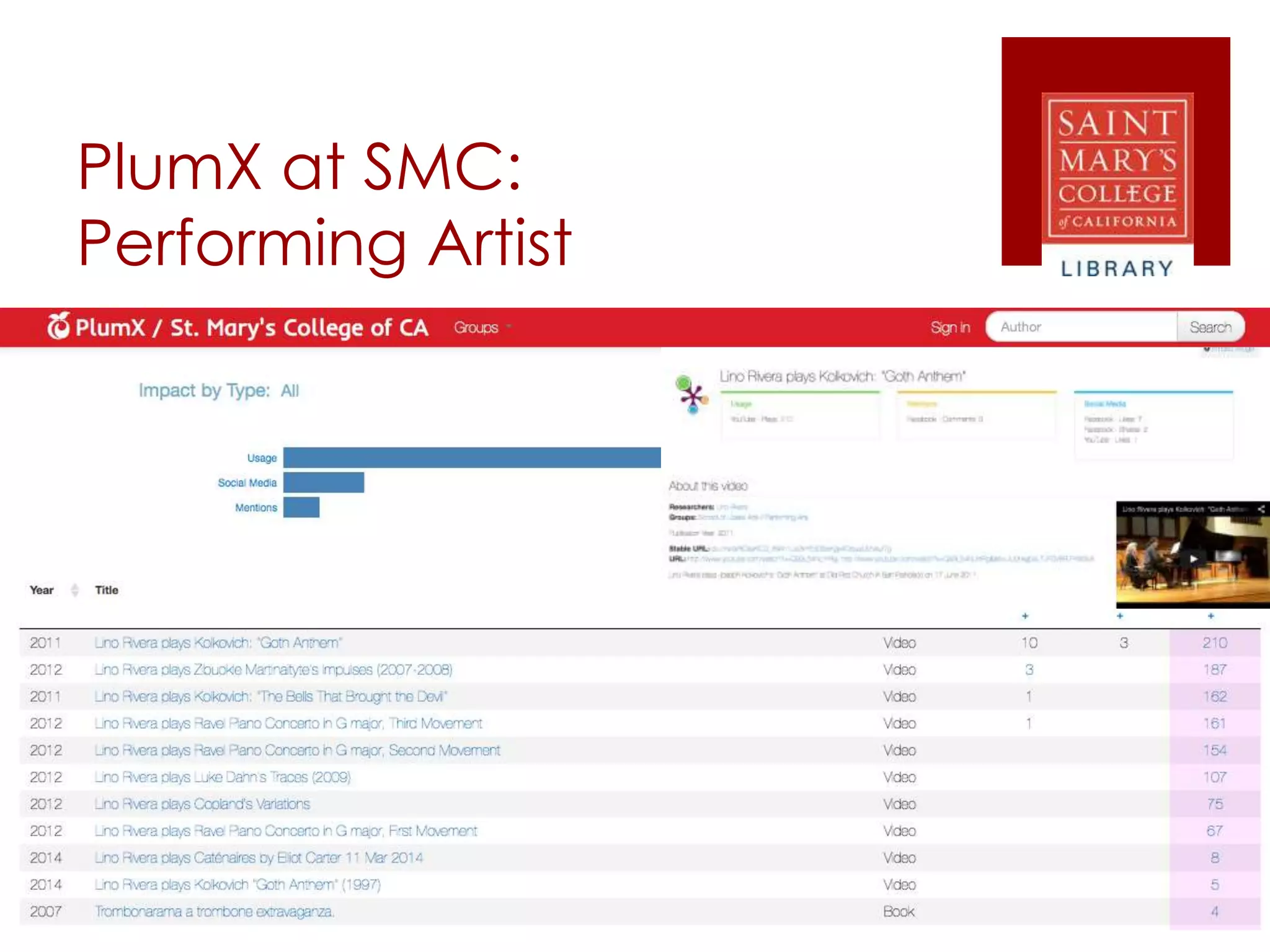

The document discusses the role of altmetrics, specifically PlumX, in evaluating research impact, particularly in non-traditional fields. It weighs the benefits of altmetrics, such as the insight they offer early-career researchers, against critiques of their reliability and validity. It concludes by raising questions about whether and how academic institutions and faculty will accept and implement these metrics.