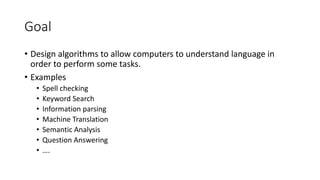

This document discusses natural language processing (NLP) and provides an overview of common NLP techniques. It describes the goal of NLP as allowing computers to understand human language to perform tasks like spell checking, search, translation, and question answering. The main approaches are rule-based methods, probabilistic modeling and machine learning, and deep learning. Text preprocessing steps like vectorization, normalization, and tokenization are explained. Methods for representing words like one-hot vectors and word embeddings are also covered, along with recurrent neural networks, LSTMs, GRUs, attention mechanisms, and transformer architectures.

![Text Pre-processing

Vectorize

Normalize

Tokenize

Text

[0.4566,0.6879],[0.6789,0.2345],[0.4201,0.3456]

nlp, discuss, interest

NLP, discussion, is, interesting

NLP discussion is interesting](https://image.slidesharecdn.com/naturallanguageprocessing-210531023145/85/Natural-Language-Processing-5-320.jpg)