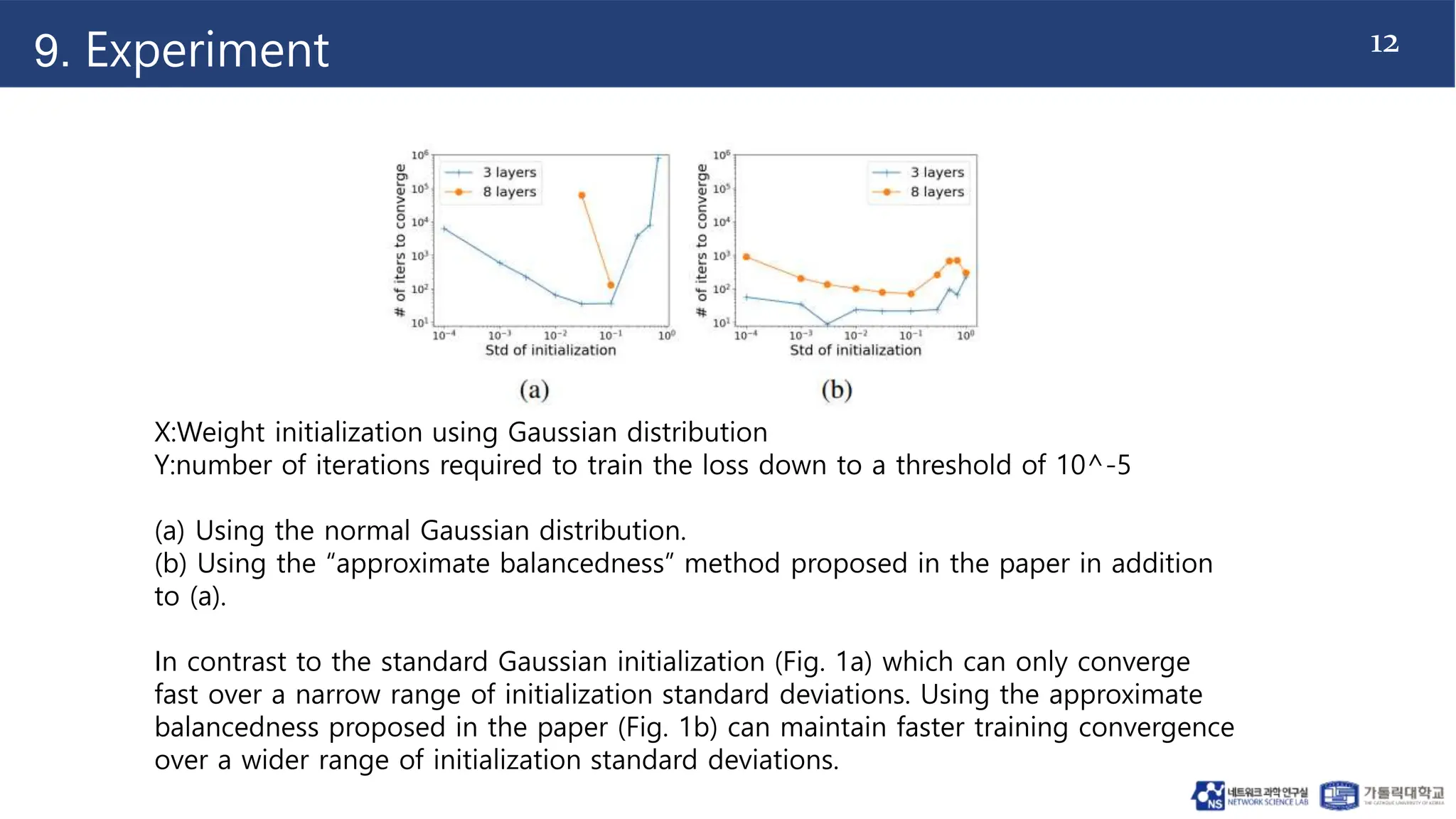

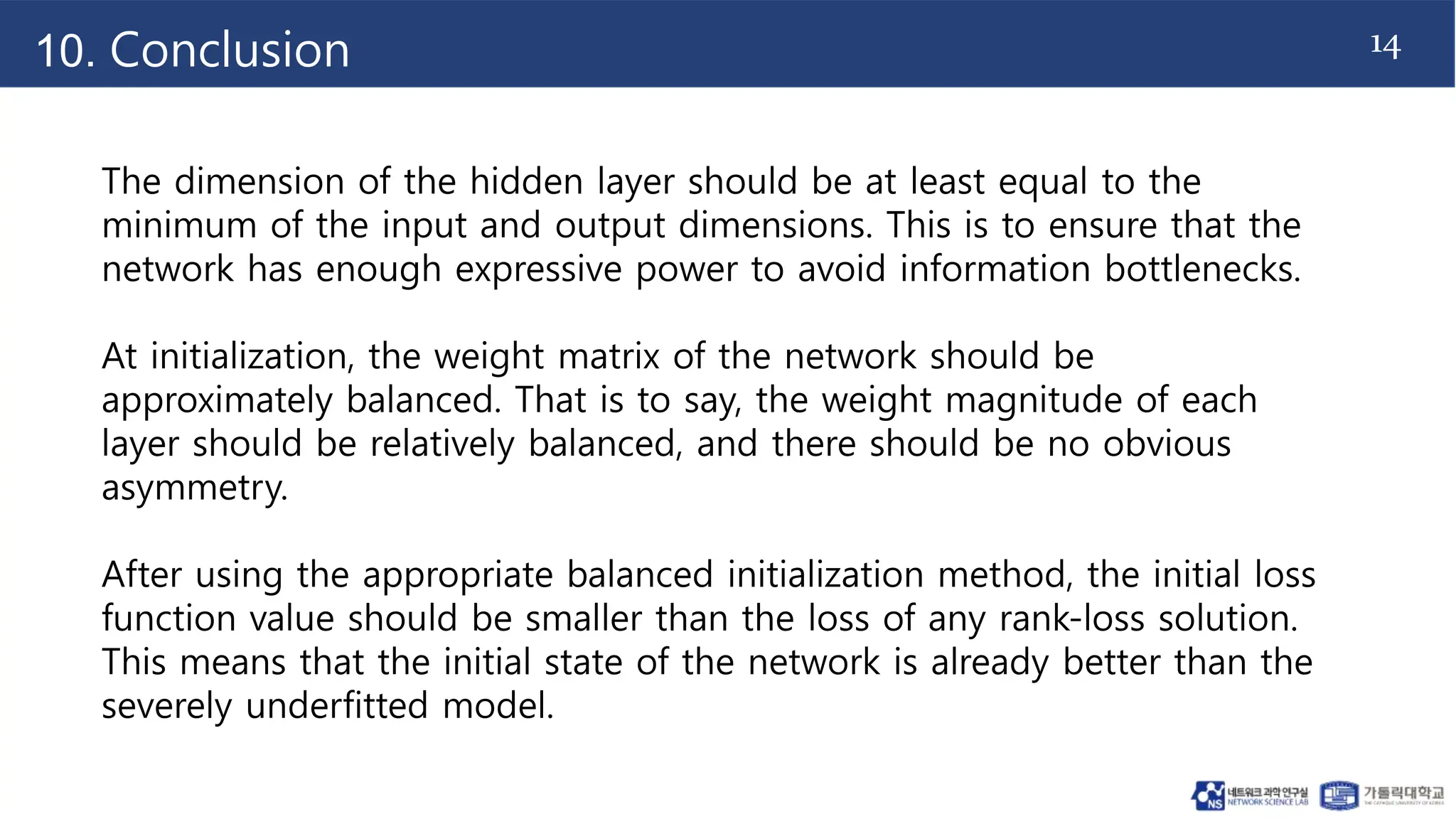

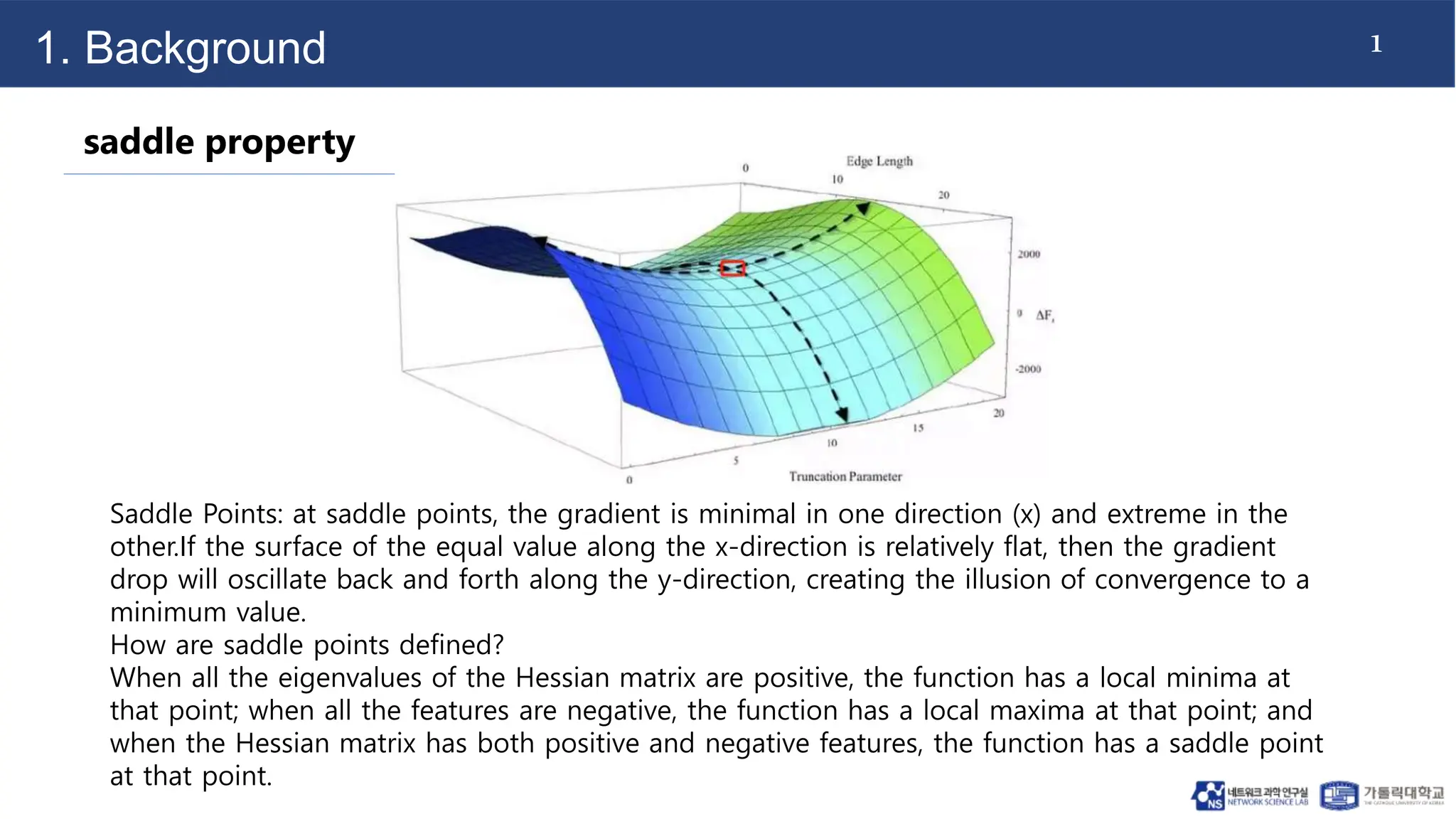

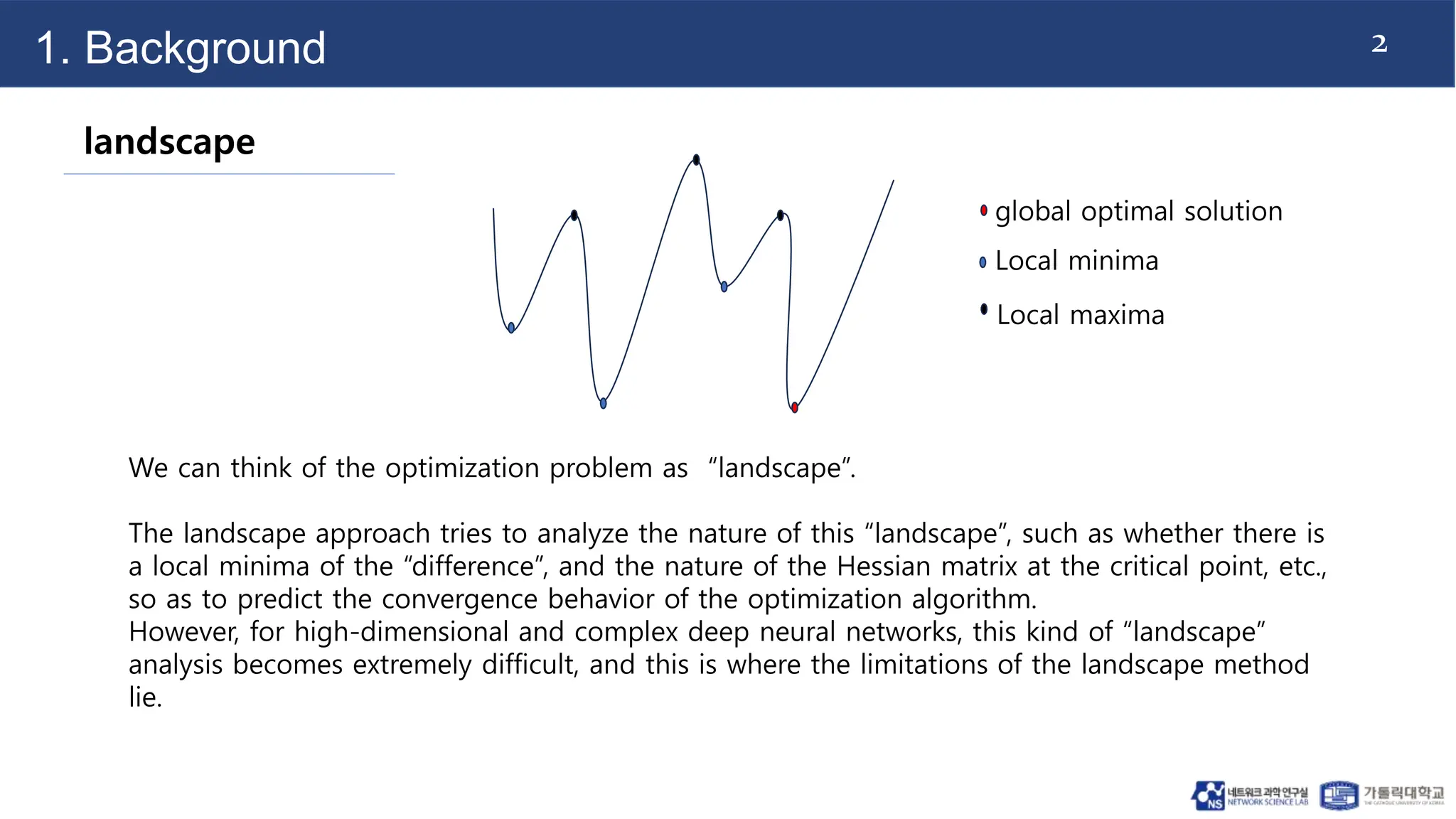

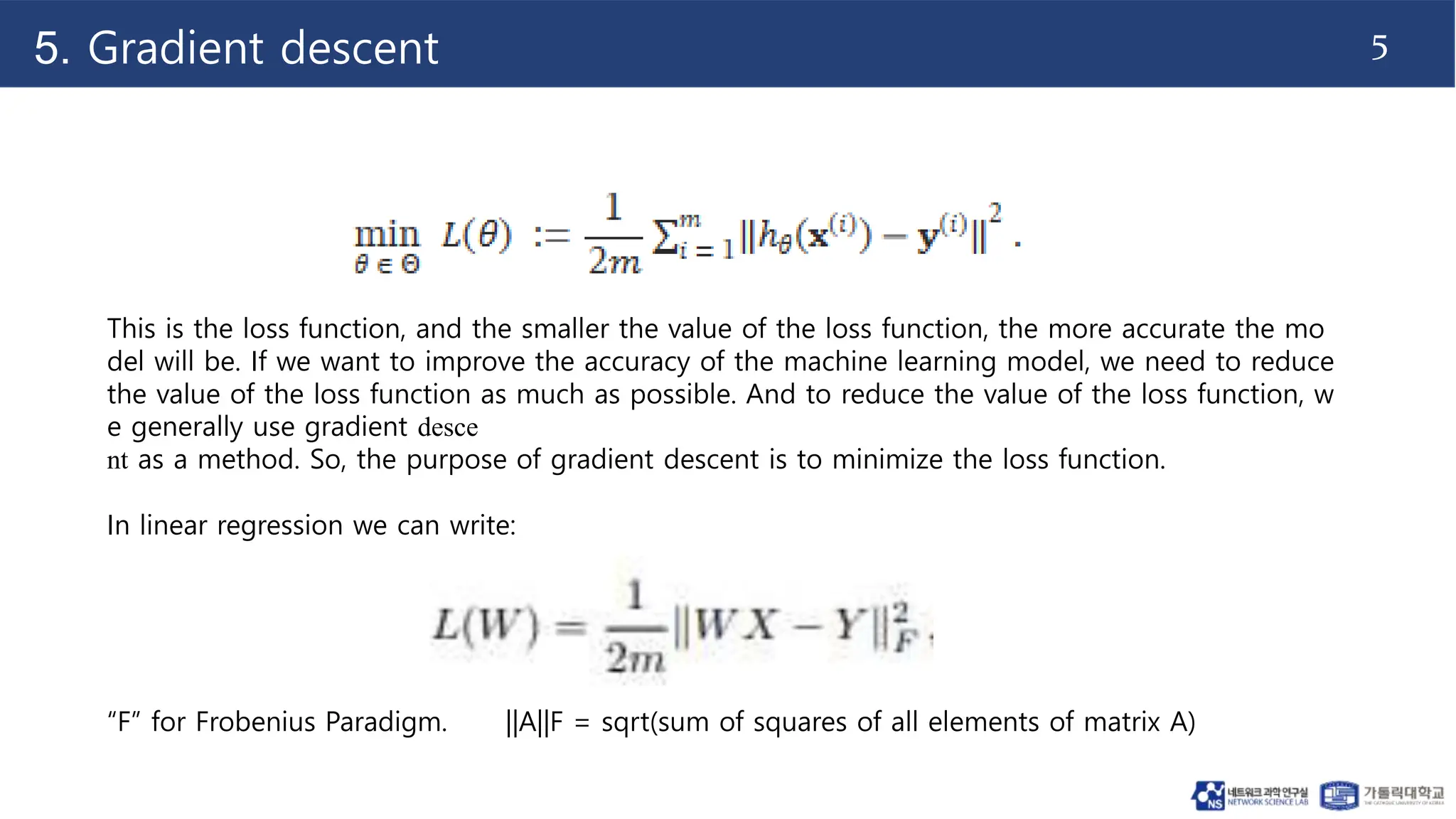

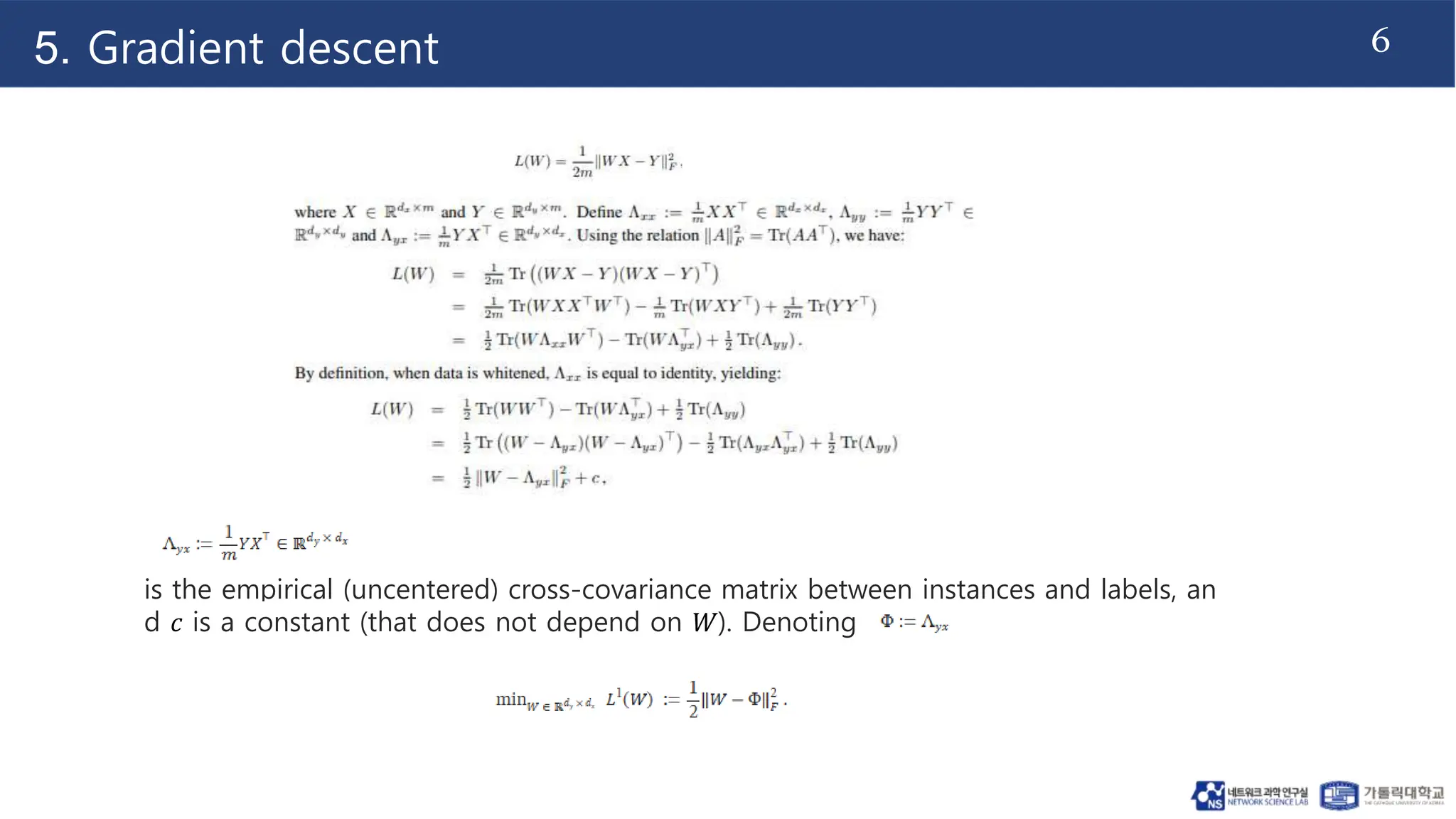

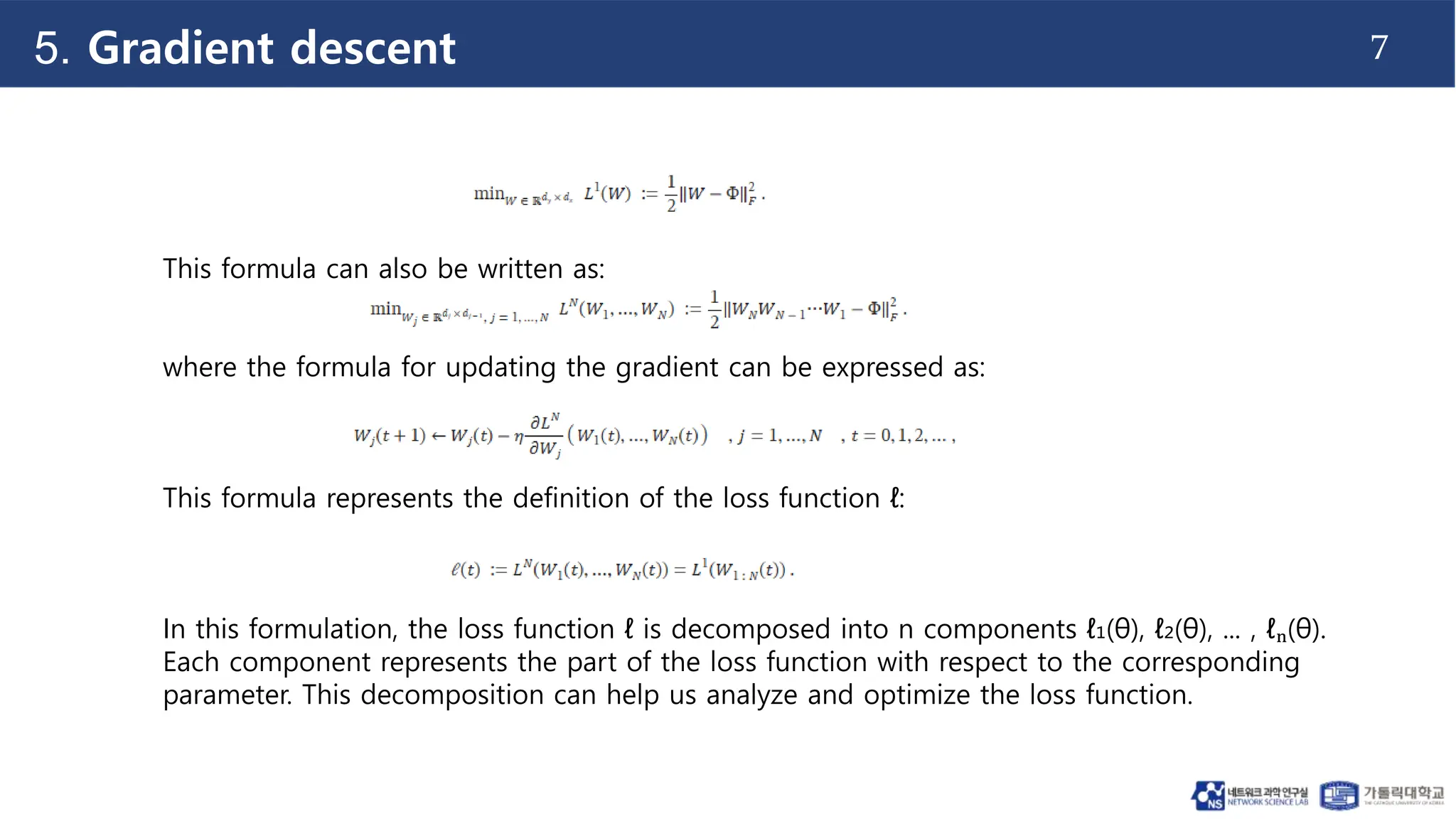

The document analyzes the convergence of gradient descent applied to deep linear neural networks, emphasizing the role of weight initialization and the conditions under which gradient descent converges to a global optimum. It addresses the limitations of conventional landscape approaches for deep networks and introduces the concepts of approximate balancedness and deficiency margin to support the convergence analysis. The findings suggest that keeping the weight matrices balanced at initialization speeds up convergence, especially in deeper networks.

![8

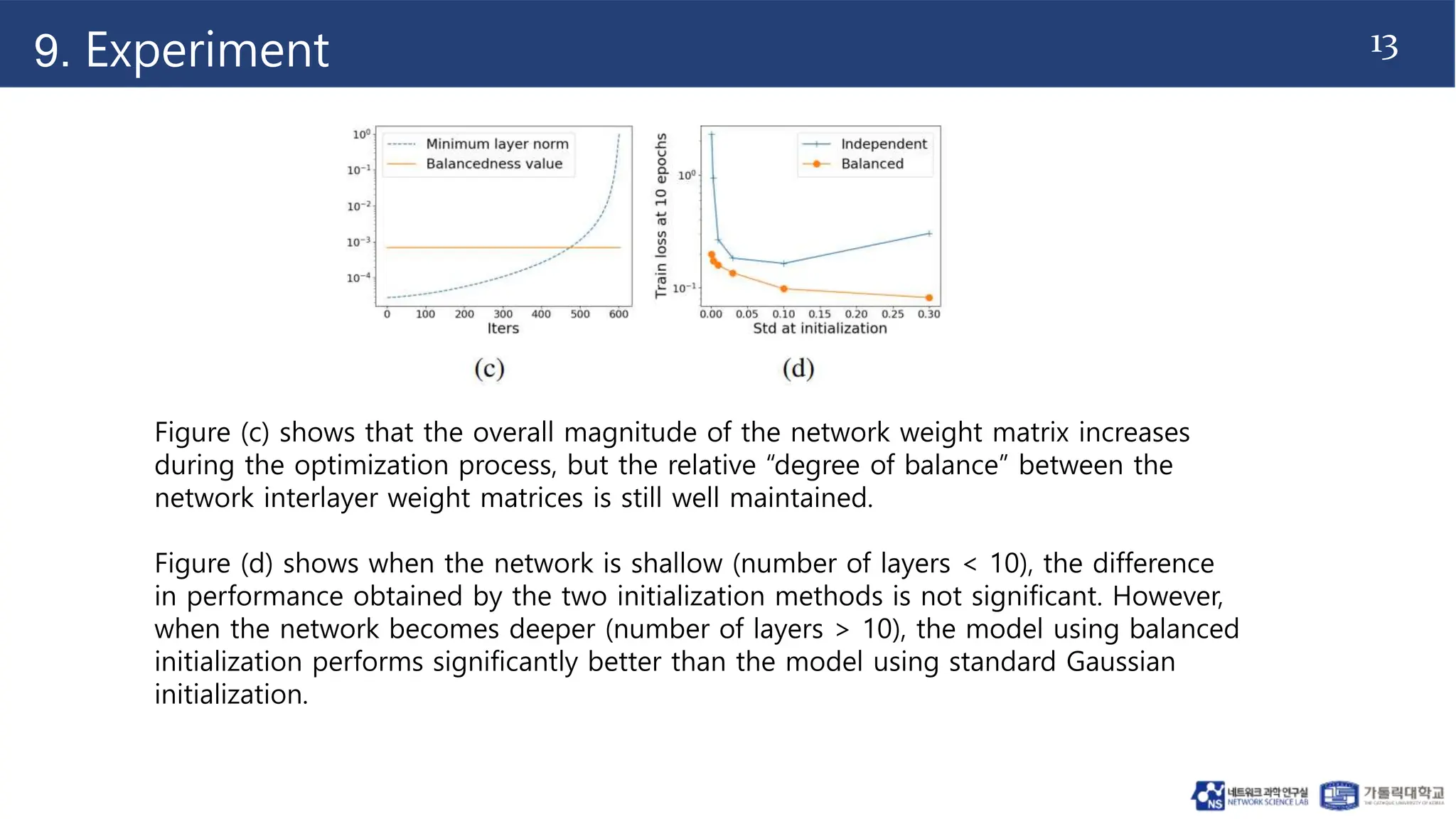

7. Convergence analysis

Definition 1 -- approximate balancedness

approximate balancedness means that the ratio of the singular values of the weight matrix

for each layer cannot be too large.

W1 shape: torch.Size([3, 5])

W2 shape: torch.Size([2, 3])

||[3,2]·[2,3]-[3,5]·[5,3]||F≤ δ

The smaller δ is, the more uniform the distribution of singular values of this weight matrix is,

and the closer to the " balance " state.

W1 W2](https://image.slidesharecdn.com/aconvergenceanalysisofgradient-240605072740-715c9793/75/A-CONVERGENCE-ANALYSIS-OF-GRADIENT_version1-9-2048.jpg)
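
The balancedness check on this slide can be sketched numerically. The snippet below is a minimal illustration, not the presenter's code: it computes the Frobenius norm of W2ᵀ·W2 − W1·W1ᵀ for matrices with the slide's shapes ([3, 5] and [2, 3]). The weight values, the helper names `transpose`, `matmul`, `balancedness_gap`, and `is_delta_balanced`, and the extension of the check to every adjacent pair of layers are assumptions made for illustration. Plain Python lists are used so the sketch has no dependencies, whereas the slide itself prints PyTorch tensor shapes.

```python
import math


def transpose(m):
    """Return the transpose of a matrix stored as a list of rows."""
    return [list(col) for col in zip(*m)]


def matmul(a, b):
    """Multiply two matrices stored as lists of rows."""
    b_cols = transpose(b)
    return [[sum(x * y for x, y in zip(row, col)) for col in b_cols] for row in a]


def balancedness_gap(w_prev, w_next):
    """Frobenius norm of w_next^T w_next - w_prev w_prev^T."""
    left = matmul(transpose(w_next), w_next)   # [3,2]·[2,3] -> 3x3
    right = matmul(w_prev, transpose(w_prev))  # [3,5]·[5,3] -> 3x3
    return math.sqrt(sum((l - r) ** 2
                         for l_row, r_row in zip(left, right)
                         for l, r in zip(l_row, r_row)))


def is_delta_balanced(weights, delta):
    """Assumed extension: check the gap for every pair of adjacent layers."""
    return all(balancedness_gap(a, b) <= delta
               for a, b in zip(weights, weights[1:]))


# Hypothetical weights with the shapes from the slide: W1 is 3x5, W2 is 2x3.
w1 = [[1.0, 0.0, 0.0, 0.0, 0.0],
      [0.0, 1.0, 0.0, 0.0, 0.0],
      [0.0, 0.0, 0.0, 0.0, 0.0]]
w2 = [[1.0, 0.0, 0.0],
      [0.0, 1.0, 0.0]]
w2_scaled = [[2.0 * x for x in row] for row in w2]  # breaks the balance

print(balancedness_gap(w1, w2))                   # 0.0 (exactly balanced)
print(round(balancedness_gap(w1, w2_scaled), 4))  # 4.2426
print(is_delta_balanced([w1, w2_scaled], 1.0))    # False
```

Scaling one layer by 2 moves the pair away from balance, so the gap grows from 0 to √18. With PyTorch tensors the same quantity could be computed as `torch.linalg.norm(W2.T @ W2 - W1 @ W1.T)`, which returns the Frobenius norm for a 2-D input.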