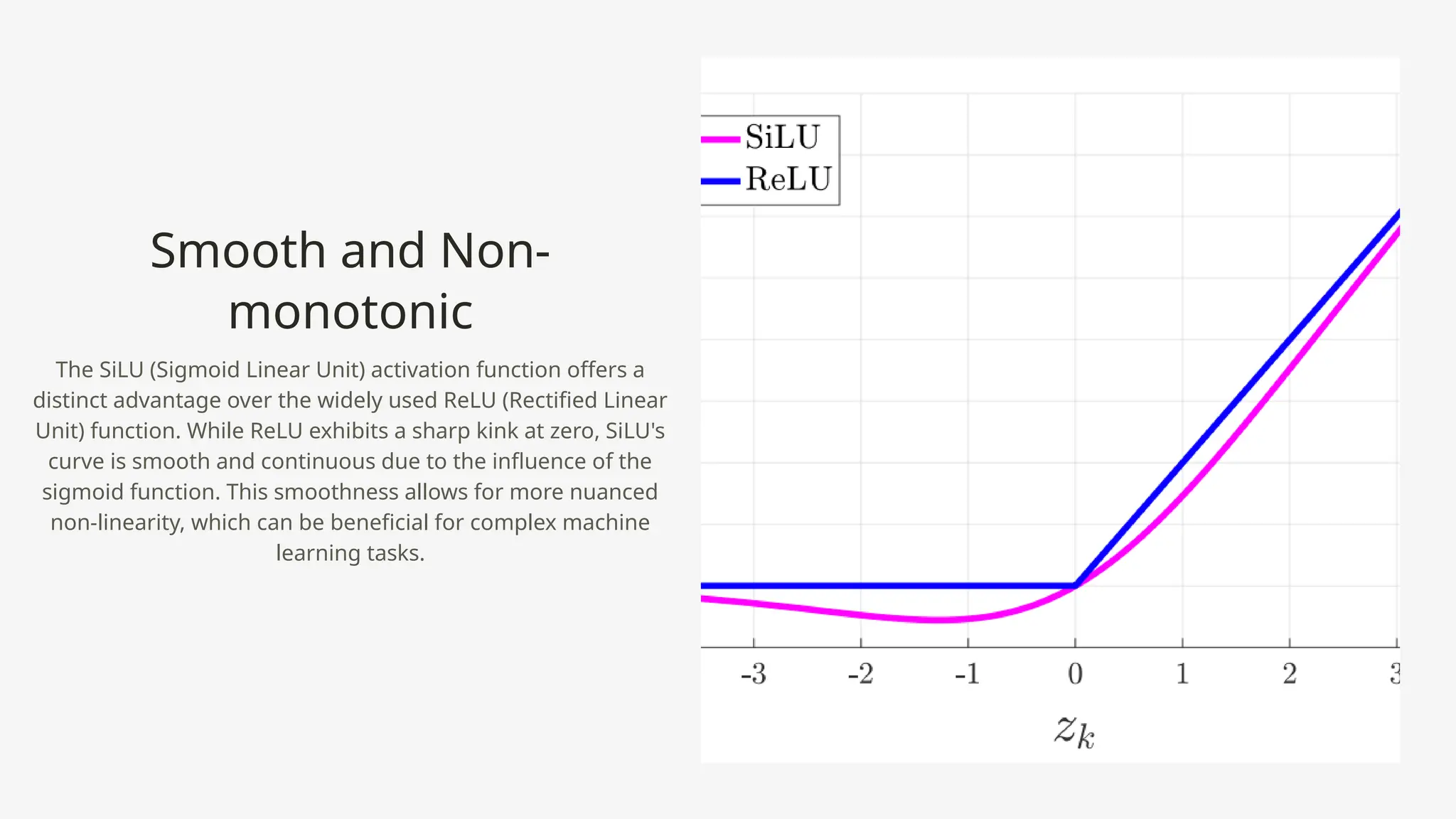

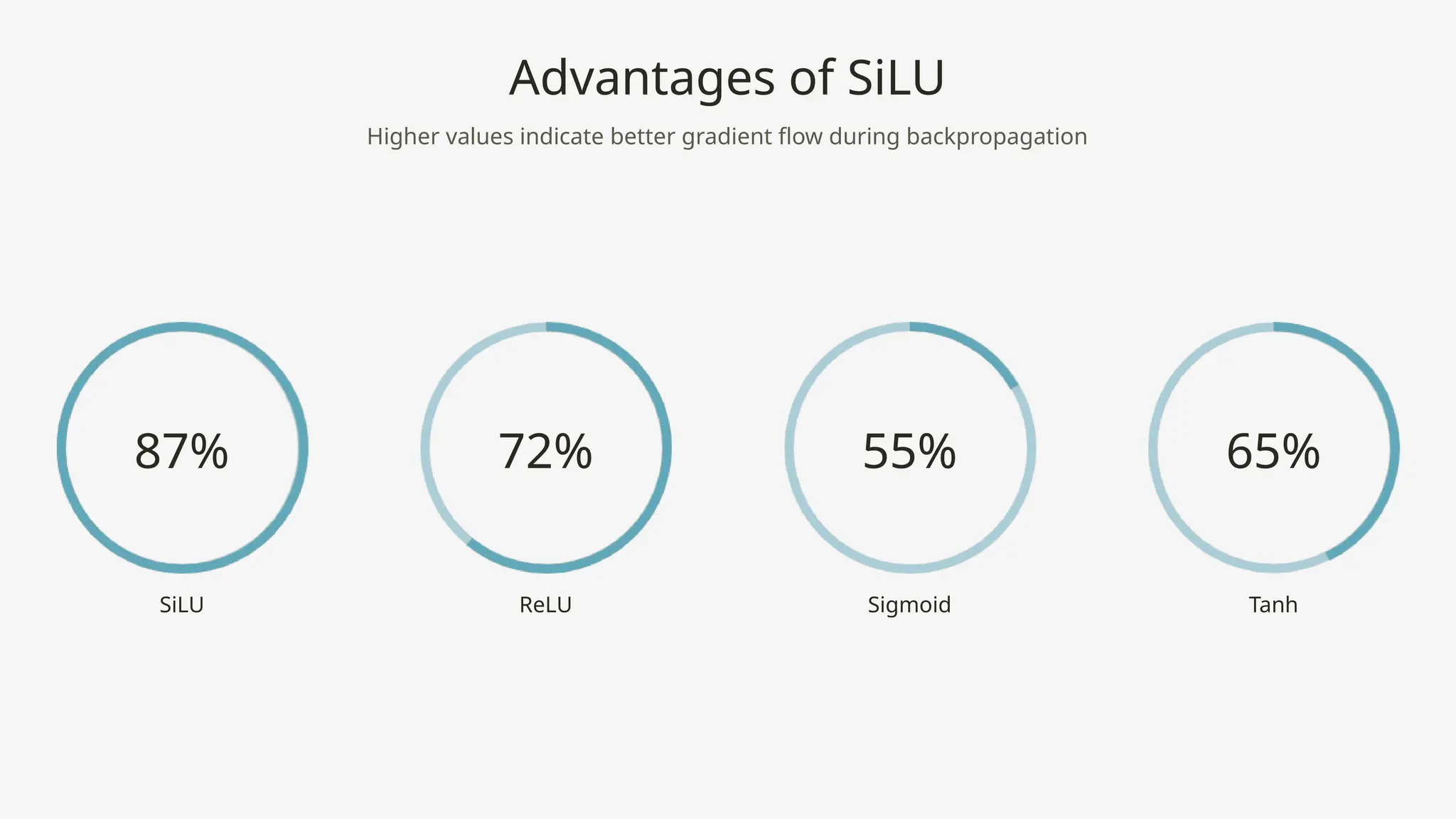

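The SiLU activation function (sigmoid linear unit) is defined as `SiLU(x) = x · σ(x)`, where `σ` is the logistic sigmoid. It multiplies the input by a sigmoid gate, so it combines a linear term with a sigmoid. The result is a smooth, non-monotonic function. It dips slightly below zero for negative inputs before rising again, and it approaches the identity for large positive inputs. This shape can ease optimization and add expressiveness. ReLU's gradient is exactly zero for every negative input. SiLU's gradient is non-zero for negative inputs, except at the single point where the function reaches its minimum. As a result, gradients keep flowing through units that ReLU would silence. SiLU has been adopted in practice, for example as the activation in YOLO object-detection models. These properties often lead to better training behavior than ReLU in deep networks, although the size of the gain depends on the architecture and task.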
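The following sketch makes the definition and the gradient claim concrete. It uses plain Python and a numerically stable sigmoid, and it compares SiLU with ReLU on a few inputs. The helper names (`sigmoid`, `silu`, `silu_grad`, `relu`, `relu_grad`) are illustrative, not any library's API. Frameworks such as PyTorch ship this activation as `torch.nn.SiLU`. The derivative follows from the product rule: `d/dx [x·σ(x)] = σ(x) · (1 + x · (1 − σ(x)))`.

```python
import math


def sigmoid(x: float) -> float:
    """Logistic sigmoid 1 / (1 + e^-x), computed without overflow for large |x|."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    e = math.exp(x)  # small for very negative x, so no overflow
    return e / (1.0 + e)


def silu(x: float) -> float:
    """SiLU(x) = x * sigmoid(x)."""
    return x * sigmoid(x)


def silu_grad(x: float) -> float:
    """Derivative of SiLU via the product rule: s * (1 + x * (1 - s))."""
    s = sigmoid(x)
    return s * (1.0 + x * (1.0 - s))


def relu(x: float) -> float:
    """ReLU(x) = max(0, x)."""
    return max(0.0, x)


def relu_grad(x: float) -> float:
    """ReLU derivative (taking 0 at x = 0 by convention)."""
    return 1.0 if x > 0 else 0.0


if __name__ == "__main__":
    # For negative x, ReLU's gradient is 0 while SiLU's is not.
    for x in [-3.0, -1.0, 0.0, 1.0, 3.0]:
        print(f"x={x:+.1f}  silu={silu(x):+.4f}  silu'={silu_grad(x):+.4f}  "
              f"relu={relu(x):+.1f}  relu'={relu_grad(x):.1f}")
```

Running the script prints a small table. In that table, SiLU is non-monotonic: `silu(-3.0)` is larger than `silu(-1.0)` even though -3 < -1. SiLU's gradient also stays non-zero on the negative side, where ReLU's is always 0.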