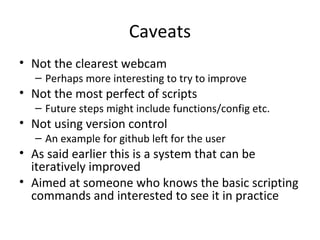

This document discusses applying shell scripting skills to build a time-lapse video from webcam still images of Snowdon, Wales. It describes downloading images from a webcam site over time, cleaning and renaming the image files, and using avconv to combine them into a video. The script is aimed at beginners but leaves room for improvement, such as reducing color noise and investigating better encoders. Further directions are provided to create the highest quality video possible from the still images.

![Building a dataset

• Script

#!/bin/bash
# fetch the current webcam image once a minute until interrupted
while true
do
    curl -o "sn_huge_$(date +%F-%T).jpg" http://www.fhc.co.uk/weather/images/sn_huge.jpg
    sleep 60
done

• Running as a background task

#get_data.sh
#Ctrl-z
#bg %1](https://image.slidesharecdn.com/5-130926111812-phpapp01/85/Applied-Shell-Scripting-stills-to-time-lapse-6-320.jpg)
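The capture loop above runs until it is interrupted and keeps whatever curl writes, even when a request fails. As a rough sketch of one variation (not the author's script), the version below stops after a fixed number of frames, uses a timestamp without colons, and discards failed downloads. The function names `snapshot_name`, `fetch_once` and `capture` are made up for illustration; the URL is the one from the slide.

```shell
#!/bin/bash
# Sketch: bounded webcam capture with colon-free timestamps.

URL="http://www.fhc.co.uk/weather/images/sn_huge.jpg"

# snapshot_name: print a name like sn_huge_2013-09-26_11-18-12.jpg
snapshot_name() {
    printf 'sn_huge_%s.jpg\n' "$(date +%F_%H-%M-%S)"
}

# fetch_once: download one frame. --fail makes curl return non-zero on
# HTTP errors, in which case any partial file is removed.
fetch_once() {
    local out
    out="$(snapshot_name)"
    curl --silent --fail --output "$out" "$URL" || rm -f "$out"
}

# capture FRAMES INTERVAL: fetch FRAMES images, sleeping INTERVAL seconds
# after each one.
capture() {
    local frames="$1" interval="$2" i=0
    while [ "$i" -lt "$frames" ]; do
        fetch_once
        sleep "$interval"
        i=$((i + 1))
    done
}
```

Calling `capture 1440 60 &` would collect roughly a day of frames and start the job in the background directly, as an alternative to the Ctrl-z and `bg %1` sequence.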

![Clean files 2 - duplicates

#!/bin/bash
# running this script twice should not produce any 'is a duplicate' output
declare -a SHA1BEFORE
count=0
current_sum_is_duplicate=0
# for all of the files that are pictures
for i in *.jpg
do
    # get the sha1 sum of the current file
    sum=$(sha1sum "$i" | cut -d" " -f1)
    echo "sha1sum of file $i is $sum"
    # if the sha1sum has been seen before, delete the file as it's a duplicate
    for j in "${SHA1BEFORE[@]}"
    do
        if [ "$sum" == "$j" ]
        then
            echo "$i is a duplicate and will be deleted"
            rm "$i"
            current_sum_is_duplicate=1
            break
        fi
    done
    # collect the sha1sum only if not seen before (collecting every sum would slow the comparisons down sooner)
    if [ "$current_sum_is_duplicate" == 0 ]
    then
        SHA1BEFORE[$count]=$sum
        count=$(( count + 1 ))
    fi
    # reset the sha1sum seen before flag
    current_sum_is_duplicate=0
done](https://image.slidesharecdn.com/5-130926111812-phpapp01/85/Applied-Shell-Scripting-stills-to-time-lapse-9-320.jpg)
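The nested loop above compares each new checksum with every checksum stored so far, so the work grows quickly as the image count rises. A possible alternative, sketched here rather than taken from the slides, hashes all files in one `sha1sum` call and lets awk remember which sums it has already seen. The function names `list_duplicates` and `remove_duplicates` are hypothetical, and the sketch assumes ordinary file names without backslashes or newlines.

```shell
#!/bin/bash
# Sketch: find duplicate stills with a single sha1sum pass.

# list_duplicates DIR: print every DIR/*.jpg whose SHA-1 sum matches an
# earlier file in glob (sorted) order. Each sha1sum line is a 40-character
# sum, two separator characters, then the file name, so the name starts
# at column 43.
list_duplicates() {
    sha1sum "$1"/*.jpg | awk 'seen[$1]++ { print substr($0, 43) }'
}

# remove_duplicates DIR: delete the files reported by list_duplicates.
remove_duplicates() {
    list_duplicates "$1" | while IFS= read -r f; do
        echo "$f is a duplicate and will be deleted"
        rm -- "$f"
    done
}
```

As with the original script, the first file carrying a given checksum is kept, and a second run should report nothing.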

![Making the video

#!/bin/bash
avconv -i filename_%d.jpg -q:v 1 -aspect:v 4:3 output.mp4

# some problems/solutions: default command actions are not always best
# video quality seems poor, blocky in parts [fixed with -q:v 1; :v is the stream specifier, which is the video in this case]
# image seems stretched, i.e. not the same aspect ratio as the images, seems widescreen [fixed with -aspect:v 4:3]
# not sure why frames are being dropped during encoding, and no matter what frame rate I choose the video seems to take the same length of time to play, which is weird](https://image.slidesharecdn.com/5-130926111812-phpapp01/85/Applied-Shell-Scripting-stills-to-time-lapse-11-320.jpg)
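The `filename_%d.jpg` pattern expects sequentially numbered stills, while the downloads carry timestamps, so a renaming step is needed in between. The sketch below shows one way that step might look, followed by an encode call that sets the frame rate on the input side. Placing `-r` before `-i` is an assumption about the dropped-frame problem noted above, not something the slides confirm: the idea is that a frame rate given only as an output option leaves the image sequence at its default input rate, so frames are dropped or repeated and the duration stays the same. It has not been checked against the author's avconv version. The function names `number_frames` and `encode` are illustrative.

```shell
#!/bin/bash
# Sketch: number the stills sequentially, then encode with an input frame rate.

# number_frames SRC DEST: copy SRC/*.jpg to DEST/filename_1.jpg,
# DEST/filename_2.jpg, ... and print how many files were copied.
# Glob expansion is sorted, so timestamped names come out in
# chronological order.
number_frames() {
    local src="$1" dest="$2" n=0 f
    mkdir -p "$dest"
    for f in "$src"/*.jpg; do
        [ -e "$f" ] || continue   # skip the literal pattern when nothing matches
        n=$((n + 1))
        cp -- "$f" "$dest/filename_$n.jpg"
    done
    echo "$n"
}

# encode DIR FPS: build DIR/output.mp4 from DIR/filename_%d.jpg.
# Assumption: -r before -i is treated as the input frame rate.
encode() {
    avconv -r "$2" -i "$1/filename_%d.jpg" -q:v 1 -aspect:v 4:3 "$1/output.mp4"
}
```

For example, `number_frames . frames` followed by `encode frames 10` would aim for ten stills per second of video, if the assumption about `-r` holds.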