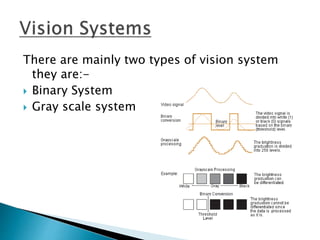

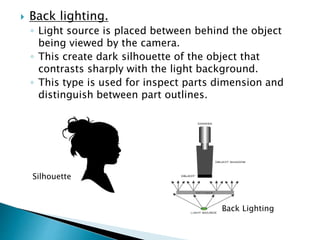

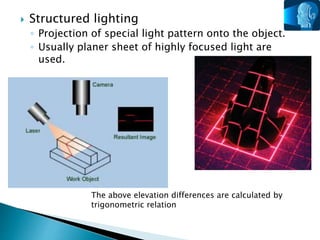

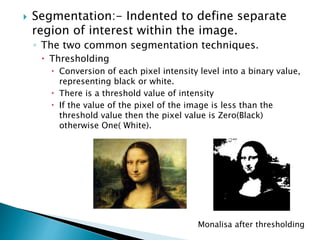

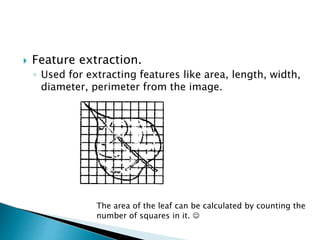

This document covers machine vision systems, their components, and their applications. It describes the basic processing chain: image acquisition, digitization, processing, analysis, and interpretation. It outlines the main types of vision systems and cameras, then discusses lighting techniques and image processing methods such as segmentation, feature extraction, and pattern recognition. Finally, it notes that machine vision is widely used in industrial inspection to automate tasks and improve efficiency.
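
As a rough illustration of the processing, analysis, and interpretation stages named above, the following minimal Python sketch runs a tiny digitized grayscale image through threshold-based segmentation, simple feature extraction (area and centroid), and a rule-based pass/reject decision. The function names, the threshold of 128, and the area limits are all hypothetical choices made for this example; they are not taken from the document, and a real inspection system would normally rely on a dedicated imaging library and calibrated parameters.

```python
from typing import Dict, List, Optional, Tuple

Image = List[List[int]]  # digitized grayscale image, pixel values 0..255


def segment(image: Image, threshold: int = 128) -> List[List[int]]:
    """Segmentation by global thresholding: 1 = object pixel, 0 = background.

    The threshold value is an assumption chosen for this example.
    """
    return [[1 if px >= threshold else 0 for px in row] for row in image]


def extract_features(mask: List[List[int]]) -> Dict[str, object]:
    """Feature extraction: object area (pixel count) and centroid (row, col)."""
    area = 0
    sum_r = 0
    sum_c = 0
    for r, row in enumerate(mask):
        for c, value in enumerate(row):
            if value:
                area += 1
                sum_r += r
                sum_c += c
    centroid: Optional[Tuple[float, float]] = None
    if area > 0:
        centroid = (sum_r / area, sum_c / area)
    return {"area": area, "centroid": centroid}


def classify(features: Dict[str, object], min_area: int = 3, max_area: int = 6) -> str:
    """Interpretation: accept the part if its area lies within hypothetical limits."""
    area = features["area"]
    return "pass" if min_area <= area <= max_area else "reject"


# A 4x4 "acquired and digitized" image with a bright 2x2 object on a dark background.
image = [
    [10, 12, 11, 9],
    [10, 200, 210, 9],
    [11, 205, 220, 10],
    [9, 10, 12, 11],
]

mask = segment(image)
features = extract_features(mask)
label = classify(features)
print(features, label)
```

A single global threshold only works when lighting is well controlled, which is one reason the choice of lighting technique matters for the later processing steps.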