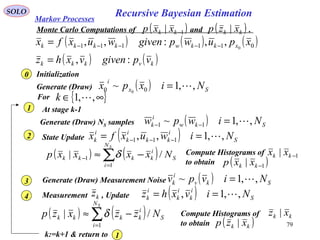

The document extensively reviews recursive Bayesian estimation, covering probability theory fundamentals, including independent events, expected values, and various estimation techniques like Kalman filters and particle filters. It explains concepts of conditional probability, Bayes' theorem, and the total probability theorem with mathematical details. Additionally, it includes discussions on statistical independence and variance related to probability density functions.

![12

SOLO

Statistical Independent Events

( ) ( ) ( ) ( )

( ) ( ) ( ) ( ) ( )∏∑∏∑∏∑

∑∑∑

=

−

≠≠

=

≠

=

=

=

−

≠≠

≠

==

−+−+−=

−+−+−=

n

i

i

n

n

kji

kji i

i

n

ji

ji i

i

n

i

i

tIndependen

lStatisticaA

n

i

i

n

n

kji

kji

kji

n

ji

ji

ji

n

i

i

n

i

i

AAAA

AAAAAAAA

i

1

1

3

,.

3

1

2

.

2

1

1

1

1

1

3

,.

2

.

1

11

Pr1PrPrPr

Pr1PrPrPrPr

From Theorem of Addition

Therefore

( )[ ]∏==

−=

−

n

i

i

tIndependen

lStatisticaA

n

i

i AA

i

11

Pr1Pr1 ( )[ ]∏==

−−=

n

i

i

tIndependen

lStatisticaA

n

i

i AA

i

11

Pr11Pr

Since OAASAA

n

i

i

n

i

i

n

i

i

n

i

i /=

=

====

1111

&

=

−

==

n

i

i

n

i

i AA

11

PrPr1

( )∏==

=

n

i

i

tIndependen

lStatisticaA

n

i

i AA

i

11

PrPr

If the n events Ai i = 1,2,…n are statistical independent

than are also statistical independentiA

( )∏=

=

n

i

iA

1

Pr

=

=

n

i

i

MorganDe

A

1

Pr ( )[ ]∏=

−=

n

i

i

tIndependen

lStatisticaA

A

i

1

Pr1

( ) nrAA

r

i

i

r

i

i ,,2PrPr

11

=∀=

∏==

Table of Content

Review of Probability](https://image.slidesharecdn.com/3-recursivebayesianestimation-150113075152-conversion-gate02/85/3-recursive-bayesian-estimation-12-320.jpg)

![13

SOLO Review of Probability

Expected Value or Mathematical Expectation

Given a Probability Density Function p (x) we define the Expected Value

For a Continuous Random Variable: ( ) ( )∫

+∞

∞−

= dxxpxxE X:

For a Discrete Random Variable: ( ) ( )∑=

k

kXk xpxxE :

For a general function g (x) of the

Random Variable x: ( )[ ] ( ) ( )∫

+∞

∞−

= dxxpxgxgE X:

( )xp

x

0 ∞+∞−

0.1

( )xE

( )

( )

( )∫

∫

∞+

∞−

+∞

∞−

=

dxxp

dxxpx

xE

X

X

:

The Expected Value is the center of

surface enclosed between the

Probability Density Function and x

axis.

Table of Content](https://image.slidesharecdn.com/3-recursivebayesianestimation-150113075152-conversion-gate02/85/3-recursive-bayesian-estimation-13-320.jpg)

![14

SOLO Review of Probability

Variance

Given a Probability Density Functions p (x) we define the Variance

( ) ( )[ ]{ } ( ) ( )[ ] ( ) ( )22222

2: xExExExExxExExExVar −=+−=−=

Central Moment

( ) { }k

k xEx =:'µ

Given a Probability Density Functions p (x) we define the Central Moment

of order k about the origin

( ) ( )[ ]{ } ( ) ( )∑=

−−

−

=−=

k

j

jk

j

jkk

k xE

j

k

xExEx

0

'1: µµ

Given a Probability Density Functions p (x) we define the Central Moment

of order k about the Mean E (x)

Table of Content](https://image.slidesharecdn.com/3-recursivebayesianestimation-150113075152-conversion-gate02/85/3-recursive-bayesian-estimation-14-320.jpg)

![15

SOLO Review of Probability

Moments

Normal Distribution ( ) ( ) ( )[ ]

σπ

σ

σ

2

2/exp

;

22

x

xpX

−

=

[ ] ( )

−⋅

=

oddnfor

evennforn

xE

n

n

0

131 σ

[ ]

( )

+=

=−⋅

= +

12!2

2

2131

12

knfork

knforn

xE kk

n

n

σ

π

σ

Proof:

Start from: and differentiate k time with respect to a( ) 0exp 2

>=−∫

∞

∞−

a

a

dxxa

π

Substitute a = 1/(2σ2

) to obtain E [xn

]

( ) ( ) 0

2

1231

exp 12

22

>

−⋅

=− +

∞

∞−

∫ a

a

k

dxxax kk

k π

[ ] ( ) ( )[ ] ( ) ( )[ ]

( ) ( ) 12

!

0

122/

0

222221212

!2

2

exp

2

22

2/exp

2

2

2/exp

2

1

2

+

∞+

=

∞∞

∞−

++

=−=

−=−=

∫

∫∫

kk

k

k

k

xy

kkk

kdyyy

xdxxxdxxxxE

σ

πσ

σ

π

σ

σπ

σ

σπ

σ

Now let compute:

[ ] [ ]( )2244

33 xExE == σ

Chi-square](https://image.slidesharecdn.com/3-recursivebayesianestimation-150113075152-conversion-gate02/85/3-recursive-bayesian-estimation-15-320.jpg)

![17

SOLO Review of Probability

Functions of one Random Variable (continue – 1)

Example 1

bxay += ( )

−

=

a

by

p

a

yp XY

1

Example 2

x

a

y = ( )

=

y

a

p

y

a

yp XY 2

Example 3

2

xay = ( ) ( )yU

a

y

p

a

y

p

ya

yp XXY

−+

=

2

1

Example 4

xy = ( ) ( ) ( )[ ] ( )yUypypyp XXY −+=

Table of Content](https://image.slidesharecdn.com/3-recursivebayesianestimation-150113075152-conversion-gate02/85/3-recursive-bayesian-estimation-17-320.jpg)

![18

SOLO Review of Probability

Characteristic Function and Moment-Generating Function

Given a Probability Density Functions pX (x) we define the Characteristic Function or

Moment Generating Function

( ) ( )[ ]

( ) ( ) ( ) ( )

( ) ( )

=

==Φ

∑

∫∫

+∞

∞−

+∞

∞−

x

X

XX

X

discretexxpxj

continuousxxPdxjdxxpxj

xjE

ω

ωω

ωω

exp

expexp

exp:

This is in fact the complex conjugate of the Fourier Transfer of the Probability Density

Function. This function is always defined since the sufficient condition of the existence of a

Fourier Transfer :

Given the Characteristic Function we can find the Probability Density

Functions pX (x) using the Inverse Fourier Transfer:

( )

( )

( ) ∞<== ∫∫

+∞

∞−

≥+∞

∞−

1

0

dxxpdxxp X

xp

X

( ) ( ) ( )∫

+∞

∞−

Φ−= ωωω

π

dxjxp XX exp

2

1

is always fulfilled.](https://image.slidesharecdn.com/3-recursivebayesianestimation-150113075152-conversion-gate02/85/3-recursive-bayesian-estimation-18-320.jpg)

![21

SOLO Review of Probability

Probability Distribution and Probability Density Functions (Examples)

(2) Poisson’s Distribution ( ) ( )0

0

exp

!

, k

k

k

nkp

k

−≈

(1) Binomial (Bernoulli) ( )

( )

( ) ( ) knkknk

pp

k

n

pp

knk

n

nkp

−−

−

=−

−

= 11

!!

!

,

0 1 2 3 4 5 6 7 8 9 10 11 12 13 14 k

( )nkP ,

(3) Normal (Gaussian)

( ) ( ) ( )[ ]

σπ

σµ

σµ

2

2/exp

,;

22

−−

=

x

xp

(4) Laplacian Distribution ( )

−

−=

b

x

b

bxp

µ

µ exp

2

1

,;](https://image.slidesharecdn.com/3-recursivebayesianestimation-150113075152-conversion-gate02/85/3-recursive-bayesian-estimation-21-320.jpg)

![23

SOLO Review of Probability

Probability Distribution and Probability Density Functions (Examples)

SOLO

(8) Exponential Distribution

( )

( )

<

≥−

=

00

0exp

;

x

xx

xp

λλ

λ

(9) Chi-square Distribution

( )

( )

( )

( )

<

≥−

Γ=

−

00

02/exp

2/

2/1

;

12/

2/

x

xxx

kkxp

k

k

Γ is the gamma function ( ) ( )∫

∞

−

−=Γ

0

1

exp dttta a

(10) Student’s t-Distribution

( ) ( )[ ]

( ) ( )( ) 2/12

/12/

2/1

; +

+Γ

+Γ

= ν

ννπν

ν

ν

x

xp](https://image.slidesharecdn.com/3-recursivebayesianestimation-150113075152-conversion-gate02/85/3-recursive-bayesian-estimation-23-320.jpg)

![26

SOLO Review of Probability

Normal (Gaussian) Distribution

Karl Friederich Gauss

1777-1855

( )

( )

( )σµ

σπ

σ

µ

σµ ,;:

2

2

exp

,;

2

2

x

x

xp N=

−

−

=

( ) ( )

∫

∞−

−

−=

x

du

u

xP 2

2

2

exp

2

1

,;

σ

µ

σπ

σµ

( ) µ=xE

( ) σ=xVar

( ) ( )[ ]

( ) ( )

−=

−

−=

=Φ

∫

∞+

∞−

2

exp

exp

2

exp

2

1

exp

22

2

2

σω

µω

ω

σ

µ

σπ

ωω

j

duuj

u

xjE

Probability Density Functions

Cumulative Distribution Function

Mean Value

Variance

Moment Generating Function](https://image.slidesharecdn.com/3-recursivebayesianestimation-150113075152-conversion-gate02/85/3-recursive-bayesian-estimation-26-320.jpg)

![27

SOLO Review of Probability

Moments

Normal Distribution ( ) ( ) ( )[ ] ( )σ

σπ

σ

σ ,0;:

2

2/exp

,0;

22

x

x

xpX N=

−

=

[ ] ( )

−⋅

=

oddnfor

evennforn

xE

n

n

0

131 σ

[ ]

( )

+=

=−⋅

= +

12!2

2

2131

12

knfork

knforn

xE kk

n

n

σ

π

σ

Proof:

Start from: and differentiate k time with respect to a( ) 0exp 2

>=−∫

∞

∞−

a

a

dxxa

π

Substitute a = 1/(2σ2

) to obtain E [xn

]

( ) ( ) 0

2

1231

exp 12

22

>

−⋅

=− +

∞

∞−

∫ a

a

k

dxxax kk

k π

[ ] ( ) ( )[ ] ( ) ( )[ ]

( ) ( ) 12

!

0

122/

0

222221212

!2

2

exp

2

22

2/exp

2

2

2/exp

2

1

2

+

∞+

=

∞∞

∞−

++

=−=

−=−=

∫

∫∫

kk

k

k

k

xy

kkk

kdyyy

xdxxxdxxxxE

σ

πσ

σ

π

σ

σπ

σ

σπ

σ

Now let compute:

[ ] [ ]( )2244

33 xExE == σ

Chi-square](https://image.slidesharecdn.com/3-recursivebayesianestimation-150113075152-conversion-gate02/85/3-recursive-bayesian-estimation-27-320.jpg)

![28

SOLO Review of Probability

Normal (Gaussian) Distribution (continue – 1)

Karl Friederich Gauss

1777-1855

( ) ( ) ( ) ( )PxxxxPxxPPxxp

T

,;:

2

1

exp2,; 12/1

N=

−−−= −−

π

A Vector – Valued Gaussian Random Variable has the

Probability Density Functions

where

{ }xEx

= Mean Value

( )( ){ }T

xxxxEP

−−= Covariance Matrix

If P is diagonal P = diag [σ1

2

σ2

2

… σk

2

] then the components of the random vector

are uncorrelated, and

x

( )

( ) ( ) ( ) ( )

∏=

−

−

−

−

=

−

−

−

−

−

−

=

−

−

−

−

−

−

−=

k

i i

i

ii

k

k

kk

kk

k

T

kk

xxxxxxxx

xx

xx

xx

xx

xx

xx

PPxxp

1

2

2

2

2

2

2

2

2

22

1

2

1

2

11

22

11

1

2

2

2

2

1

22

11

2/1

2

2

exp

2

2

exp

2

2

exp

2

2

exp

0

0

2

1

exp2,;

σπ

σ

σπ

σ

σπ

σ

σπ

σ

σ

σ

σ

π

therefore the

components of the

random vector are

also independent](https://image.slidesharecdn.com/3-recursivebayesianestimation-150113075152-conversion-gate02/85/3-recursive-bayesian-estimation-28-320.jpg)

![3131

SOLO Review of Probability

The Law of Large Numbers

Proof of the Weak Law of Large Numbers

( ) iXE i ∀= µ ( ) iXVar i ∀= 2

σ ( )( )[ ] jiXXE ji ≠∀=−− 0µµ

( ) ( ) ( )[ ] µµ ==++= nnnXEXEXE nn //1

( ) ( )[ ]{ } ( ) ( )

( )( )[ ] ( )[ ] ( )[ ]

nn

n

n

XEXE

n

XX

E

n

XX

EXEXEXVar

n

jiXXE

nn

nnn

ji 2

2

2

2

22

1

0

2

1

2

12

σσµµ

µµ

µ

µµ

==

−++−

=

−++−

=

−

++

=−=

≠∀=−−

Given

we have:

Using Chebyshev’s inequality on we obtain:nX ( ) 2

2

/

Pr

ε

σ

εµ

n

Xn ≤≥−

Using this equation we obtain:

( ) ( ) ( ) n

XXX nnn 2

2

1Pr1Pr1Pr

ε

σ

εµεµεµ −≥≥−−≥>−−=≤−

As n approaches infinity, the expression approaches 1.

Chebyshev’s

inequality

q.e.d.

Monte Carlo

Integration

Monte Carlo

Integration

Table of Content](https://image.slidesharecdn.com/3-recursivebayesianestimation-150113075152-conversion-gate02/85/3-recursive-bayesian-estimation-31-320.jpg)

![3333

SOLO Review of Probability

Central Limit Theorem (continue – 1)

Let X1, X2, …, Xm be a sequence of independent random variables with the same

probability distribution function pX (x). Define the statistical mean:

m

XXX

X m

m

+++

=

21

( ) ( ) ( ) ( ) µ=

+++

=

m

XEXEXE

XE m

m

21

( ) ( )[ ]{ } ( ) ( ) ( )

mm

m

m

XXX

EXEXEXVar m

mmmXm

2

2

22

21

22 σσµµµ

σ ==

−++−+−

=−==

Define also the new random variable

( ) ( ) ( ) ( )

m

XXXXEX

Y m

X

mm

m

σ

µµµ

σ

−++−+−

=

−

=

21

:

We have:

The probability distribution of Y tends to become gaussian (normal) as m

tends to infinity, regardless of the probability distribution of the random

variable, as long as the mean μ and the variance σ2

are finite.](https://image.slidesharecdn.com/3-recursivebayesianestimation-150113075152-conversion-gate02/85/3-recursive-bayesian-estimation-33-320.jpg)

![3434

SOLO Review of Probability

Central Limit Theorem (continue – 2)

( ) ( ) ( ) ( )

m

XXXXEX

Y m

X

mm

m

σ

µµµ

σ

−++−+−

=

−

=

21

:

Proof

The Characteristic Function

( ) ( )[ ] ( ) ( ) ( )

( ) ( )

( )

m

X

m

i

m

i

i

m

Y

m

X

m

j

E

m

X

jE

m

XXX

jEYjE

i

Φ=

−

=

−

=

−++−+−

==Φ

−

=

∏

ω

σ

µω

σ

µ

ω

σ

µµµ

ωωω

σ

µexpexp

expexp

1

21

( )

( ) ( ) ( ) ( ) ( ) ( )

0/lim

2

1

!3

/

!2

/

!1

/

1

2222

33

1

22

0

=

Ο/

Ο/+−=

+

−

+

−

+

−

+=

Φ

∞→

−

mmmm

X

E

mjX

E

mjX

E

mj

m

m

iii

Xi

ωωωω

σ

µω

σ

µω

σ

µωω

σ

µ

Develop in a Taylor series( )

Φ −

miX

ω

σ

µ](https://image.slidesharecdn.com/3-recursivebayesianestimation-150113075152-conversion-gate02/85/3-recursive-bayesian-estimation-34-320.jpg)

![36

SOLO Review of Probability

Central Limit Theorem (continue – 2)

( ) ( ) ( ) ( )

m

XXXXEX

Y m

X

mm

m

σ

µµµ

σ

−++−+−

=

−

=

21

:

Proof

The Characteristic Function

( ) ( )[ ] ( ) ( ) ( )

( ) ( )

( )

m

X

m

i

m

i

i

m

Y

m

X

m

j

E

m

X

jE

m

XXX

jEYjE

i

Φ=

−

=

−

=

−++−+−

==Φ

−

=

∏

ω

σ

µω

σ

µ

ω

σ

µµµ

ωωω

σ

µexpexp

expexp

1

21

( )

( ) ( ) ( ) ( ) ( ) ( )

0/lim

2

1

!3

/

!2

/

!1

/

1

2222

33

1

22

0

=

Ο/

Ο/+−=

+

−

+

−

+

−

+=

Φ

∞→

−

mmmm

X

E

mjX

E

mjX

E

mj

m

m

iii

Xi

ωωωω

σ

µω

σ

µω

σ

µωω

σ

µ

Develop in a Taylor series( )

Φ −

miX

ω

σ

µ](https://image.slidesharecdn.com/3-recursivebayesianestimation-150113075152-conversion-gate02/85/3-recursive-bayesian-estimation-36-320.jpg)

![42

SOLO Review of Probability

Estimation of the Mean and Variance of a Random Variable (Unknown Statistics)

{ } { } jimxExE ji ,∀==

Define

Estimation of the

Population mean

∑=

=

k

i

ik x

k

m

1

1

:ˆ

A random variable, x, may take on any values in the range - ∞ to + ∞.

Based on a sample of k values, xi, i = 1,2,…,k, we wish to compute the sample mean, ,

and sample variance, , as estimates of the population mean, m, and variance, σ2

.

2

ˆkσ

kmˆ

( )

{ }

( ) ( ) ( )[ ] ( ) ( )[ ]

2

1

2

1

222

2

22222

1 11

2

1

2

2

11

2

1

2

11

1

1

1

1

1

21

11

2

1

ˆˆ2

1

ˆ

1

σσ

σσσ

k

k

kk

mkmkk

k

mmk

k

m

k

xx

k

Ex

k

xExE

k

mxmxE

k

mx

k

E

k

i

k

i

k

i

k

l

l

k

j

j

k

j

jii

k

k

i

ik

k

i

i

k

i

ki

−

=

−=

++−+++−−+=

+

−=

+−=

−

∑

∑

∑ ∑∑∑

∑∑∑

=

=

= ===

===

{ } { } jimxExE ji ,2222

∀+== σ

{ } { } mxE

k

mE

k

i

ik == ∑=1

1

ˆ

{ } { } { } jimxExExxE ji

tindependenxx

ji

ji

,2

,

∀==

Compute

Biased

Unbiased

Monte Carlo simulations assume independent and identical distributed (i.i.d.) samples.](https://image.slidesharecdn.com/3-recursivebayesianestimation-150113075152-conversion-gate02/85/3-recursive-bayesian-estimation-42-320.jpg)

![46

SOLO Review of Probability

Estimation of the Mean and Variance of a Random Variable (continue - 4)

Let Compute:

( ){ } ( ) ( )

( ) ( ) ( ) ( )[ ]

( ) ( ) ( ) ( )

−−

−

+−

−

−

+−

−

=

−−+−−+−

−

=

−−+−

−

=

−−

−

=−=

∑∑

∑

∑∑

==

=

==

2

22

11

2

2

2

1

22

2

2

1

2

2

2

1

22222

ˆ

ˆ

11

ˆ2

1

1

ˆˆ2

1

1

ˆ

1

1

ˆ

1

1

ˆ:2

σ

σ

σσσσσσ

k

k

i

i

k

k

i

i

k

i

kkii

k

i

ki

k

i

kik

mm

k

k

mx

k

mm

mx

k

E

mmmmmxmx

k

E

mmmx

k

Emx

k

EE

k

( )

( ){ } ( ){ } ( ){ } ( ){ }

( )

( ){ } ( )

( ){ }

( ){ }

( )

( ){ } ( ){ }

( )

( ){ } ( )

( ){ }

( ){ }

( )

( ){ } ( ){ }

( )

( ){ }

( )

( ){ }

k

k

k

i

i

k

k

i

i

k

k

k

i

i

k

k

i

i

k

k

k

i

i

k

k

k

k

i

i

k

k

k

i

k

ij

j

ji

k

k

i

i

mmE

k

k

mxE

k

mmE

mxE

k

mmEk

mxE

k

mxE

k

mmEk

mxE

k

mmE

mmE

k

k

mxE

k

mmE

mxEmxEmxE

kk

/

2

2

1

0

2

0

1

0

2

3

1

2

2

1

2

2

/

2

1

3

2

0

44

2

2

1

2

2

/

2

1 1

22

1

4

2

2

ˆ

2

222

22

22

4

2

ˆ

1

2

1

ˆ4

1

ˆ4

1

2

1

ˆ2

1

ˆ4

ˆ

11

ˆ4

1

1

σ

σσσ

σσ

σσ

µ

σ

σσ

σ

σσ

−

−

−−

−

−

−−

−

−

+

−

−

−−

−

−

+−

−

−

+

+−

−

+−

−

−

+

−−+−

−

≈

∑∑

∑∑∑

∑∑ ∑∑

==

===

==

≠

==

Since (xi – m), (xj - m) and are all independent for i ≠ j:( )kmm ˆ−](https://image.slidesharecdn.com/3-recursivebayesianestimation-150113075152-conversion-gate02/85/3-recursive-bayesian-estimation-46-320.jpg)

![49

SOLO Review of Probability

Estimation of the Mean and Variance of a Random Variable (continue - 6)

[ ] ϕσσσ σσ =≤≤

2

ˆ

2

k

2

k

ˆ-0Prob n

For high values of k, according to the Central Limit Theorem the estimations of mean

and of variance are approximately Gaussian Random Variables.

kmˆ

2

ˆkσ

We want to find a region around that

will contain σ2

with a predefined probability

φ as function of the number of iterations k.

2

ˆkσ

Since are approximately Gaussian Random

Variables nσ is given by solving:

2

ˆkσ

ϕζζ

π

σ

σ

=

−∫

+

−

n

n

d2

2

1

exp

2

1

nσ φ

1.000 0.6827

1.645 0.9000

1.960 0.9500

2.576 0.9900

Cumulative Probability within nσ

Standard Deviation of the Mean for a

Gaussian Random Variable

22

k

22 1

ˆ-

1

σ

λ

σσσ

λ

σσ

k

n

k

n

−

≤≤

−

−

22

k

2

1

1

ˆ-1

1

σ

λ

σσ

λ

σσ

−

−

≤≤

+

−

−

k

n

k

n

( ) ( ) ( ) ( )( )42222

1,0;ˆ~ˆ&,0;ˆ~ˆ σλσσσσ −−− kkkk kmmmk NN](https://image.slidesharecdn.com/3-recursivebayesianestimation-150113075152-conversion-gate02/85/3-recursive-bayesian-estimation-49-320.jpg)

![50

SOLO Review of Probability

Estimation of the Mean and Variance of a Random Variable (continue - 7)

[ ] ϕσσσ σσ =≤≤

2

ˆ

2

k

2

k

ˆ-0Prob n

22

k

22 1

ˆ-

1

σ

λ

σσσ

λ

σσ

k

n

k

n

−

≤≤

−

−

22

k

2

1

1

ˆ-1

1

σ

λ

σσ

λ

σσ

−

−

≤≤

+

−

−

k

n

k

n

22

ˆ

1

2

k

σ

λ

σσ

k

−

=

22

k

2 1

1ˆ

1

1 σ

λ

σσ

λ

σσ

−

−≥≥

−

+

k

n

k

n

−

−

≥≥

−

+

k

n

k

n

1

1

ˆ

1

1

2

2

k

2

λ

σ

σ

λ

σ

σσ

k

n

k

n

1

1

:ˆ:

1

1

k

−

−

=≥≥=

−

+

λ

σ

σσσ

λ

σ

σσ](https://image.slidesharecdn.com/3-recursivebayesianestimation-150113075152-conversion-gate02/85/3-recursive-bayesian-estimation-50-320.jpg)

![53

SOLO Review of Probability

Estimation of the Mean and Variance of a Random Variable (continue - 10)

k

n

k

n

kk 1ˆ

1

:&

1ˆ

1

:

00

−

−

=

−

+

=

λ

σ

σ

λ

σ

σ

σσ

Monte-Carlo Procedure

Choose the Confidence Level φ and find the corresponding nσ

using the normal (Gaussian) distribution.

nσ φ

1.000 0.6827

1.645 0.9000

1.960 0.9500

2.576 0.9900

1

Run a few sample k0 > 20 and estimate λ according to2

( )

( )

2

1

2

0

1

4

0

0

0

0

0

0

ˆ

1

ˆ

1

:ˆ

−

−

=

∑

∑

=

=

k

i

ki

k

i

ki

k

mx

k

mx

k

λ∑=

=

0

0

10

1

:ˆ

k

i

ik x

k

m

3 Compute and as function of kσ σ

4 Find k for which

[ ] ϕσσσ σσ =≤≤

2

ˆ

2

k

2

k

ˆ-0Prob n

5 Run k-k0 simulations](https://image.slidesharecdn.com/3-recursivebayesianestimation-150113075152-conversion-gate02/85/3-recursive-bayesian-estimation-53-320.jpg)

![65

SOLO Review of Probability

Generating Discrete Random Variables

The Acceptance-Rejection Technique (continue – 2)

Example

Generate a truncated Gaussian using the

Accept-Reject method. Consider the case with

( ) [ ]

−∈

≈

−

otherwise

xe

xp

x

0

4,42/2/2

π

Consider the Uniform proposal function

( )

[ ]

−∈

≈

otherwise

x

xq

0

4,48/1

In Figure we can see the results of the

Accept-Reject method using N=10,000 samples.](https://image.slidesharecdn.com/3-recursivebayesianestimation-150113075152-conversion-gate02/85/3-recursive-bayesian-estimation-65-320.jpg)

![66

SOLO Review of Probability

Generating Continuous Random Variables

The Inverse Transform Algorithm

Let U be a uniform (0,1) random variable. For any continuous

distribution function F the random variable X defined by

( )UFX 1−

=

has distribution F. [ F-1

(u) is defined to be that value of x such that F (x) = u ]

Proof

Let Px(x) denote the Probability Distribution Function X=F-1

(U)

( ) { } ( ){ }xUFPxXPxPx ≤=≤= −1

Since F is a distribution function, it means that F (x) is a monotonic increasing

function of x and so the inequality “a ≤ b” is equivalent to the inequality

“F (a) ≤ F (b)”, therefore

( ) ( )[ ] ( ){ }

( )[ ]

( ){ } ( )

( )

( )xFxFUP

xFUFFPxP

uniformU

xF

UUFF

x

1,0

10

1

1

≤≤

=

−

=≤=

≤=

−](https://image.slidesharecdn.com/3-recursivebayesianestimation-150113075152-conversion-gate02/85/3-recursive-bayesian-estimation-66-320.jpg)

![67

SOLO Review of Probability

Importance Sampling

Let Y = (Y1,…,Ym) a vector of random variables having a joint probability density

function f (y1,…,ym), and suppose that we are interested in estimating

( )[ ] ( ) ( )∫== mmmmf dydyyyfyyhYYhE 1111 ,,,,,,θ

Suppose that a direct generation of the random vector Y so as to compute h (Y) is

inefficient possible because

(a) is difficult to generate the random vector Y, or

(b) the variance of h (Y) is large, or

(c) both of the above

Suppose that W=(W1,…,Wm) is another random vector, which takes values in the

same domain as Y, and has a joint density function g(w1,…,wm) that can be easily

generated. The estimation θ can be expressed as:

( )[ ] ( ) ( )

( )

( ) ( ) ( )

( )

=== ∫ Wg

WfWh

Edwdwwwg

wwg

wwfwwh

YYhE gmm

m

mm

mf

11

1

11

1 ,,

,,

,,,,

,,θ

Therefore, we can estimate θ by generating values of random vector W, and then

using as the estimator the resulting average of the values h (W) f (W)/ g (W).

Return to Particle Filters](https://image.slidesharecdn.com/3-recursivebayesianestimation-150113075152-conversion-gate02/85/3-recursive-bayesian-estimation-67-320.jpg)

![69

SOLO Review of Probability

Monte Carlo Integration

we draw NS samples from ( )xp( )S

i

Nix ,,1, =

( ) S

i

Nixpx ,,1~ =

( ) ( ) ( )∑∫ =

=≈⋅=

S

S

N

i

i

S

N xf

N

IxdxpxfI

1

1

If the samples are independent, then INS

is an unbiased estimate of I.

i

x

According to the Law of Large Numbers INS

will almost surely converge to I:

II

sa

N

N

S

S

..

∞→

→

( )[ ] ( ) ∞<−= ∫ xdxpIxff

22

:σIf the variance of is finite; i.e.:( )xf

then the Central Limit Theorem holds and the estimation error converges in

distribution to a Normal Distribution:

( ) ( )2

,0~lim fNS

N

IIN S

S

σN−

∞→

The error of the MC estimate, e = INS

– I, is of the order of O (NS

-1/2

), meaning

that the rate of convergence of the estimate is independent of the dimension of

the integrand.

Numerical Integration of

and ( )kk xzp |( )1| −kk xxp

Return to Particle Filters](https://image.slidesharecdn.com/3-recursivebayesianestimation-150113075152-conversion-gate02/85/3-recursive-bayesian-estimation-69-320.jpg)

![71

SOLO Review of Probability

Existence Theorems

Existence Theorem 3

Proof of Existence Theorem 3 (continue – 1)

Since is uniform distributed in the interval (-π,+π) and independent of ω,

its spectrum is

( ){ } ( ){ } ( ){ } ( ){ } ( ){ } 0sinsincoscos

00

,

=−=

ϑωϑω ϑωϑω

ϑω

EtEaEtEatxE

tindependen

ϑ

( ) { } ( )

ϖπ

ϖπ

ϖπϖπ

ϑ

π

ϖ

πϖπϖπ

π

ϑϖπ

π

ϑϖϑϖ

ϑϑ

sin

2

1

2

1

2

1

=

−

====

−+

−

+

−

∫ j

ee

j

e

deeES

jjj

jj

or { } ( ){ } ( ){ } ( )

ϖπ

ϖπ

ϑϖϑϖ ϑϑ

ϑϖ

ϑ

sin

sincos =+= EjEeE j

1=ϖ 1=ϖ

( ) ( ){ } ( ) ( )[ ]{ }

( ){ } ( )[ ]{ }

( ){ } ( )[ ]{ } ( ){ } ( )[ ]{ } ( ){ }

0

2

0

22,

22

2

2sin2sin

2

2cos2cos

2

cos

2

22cos

2

cos

2

coscos

ϑτωϑτωτω

ϑτωτω

ϑτωϑωτ

ϑωϑωω

ϑω

EtE

a

EtE

a

E

a

tE

a

E

a

ttEatxtxE

tindependen

+−++=

+++=

+++=+

2=ϖ 2=ϖ

Given a function S (ω)= S (-ω) or, equivalently, a positive-defined function R (τ),

(R (τ) = R (-τ), and R (0)=max R (τ), for all τ ), we can find a stochastic process x (t)

having S (ω) as its power spectrum or R (τ) as its autocorrelation.](https://image.slidesharecdn.com/3-recursivebayesianestimation-150113075152-conversion-gate02/85/3-recursive-bayesian-estimation-71-320.jpg)

![72

SOLO Review of Probability

Existence Theorems

Existence Theorem 3

Proof of Existence Theorem 3 (continue – 2)

( ){ } 0=txE

( ) ( ){ } ( ){ } ( ) ( ) ( )τωωτωτωτ ω xRdf

a

E

a

txtxE ===+ ∫

+∞

∞−

cos

2

cos

2

22

( ) ( )ϑω += tatx cos:We have

Because of those two properties x (t) is wide-sense stationary with a power spectrum

given by:

( ) ( ) ( ) ( )[ ]

( ) ( )

( ) ( )∫∫

+∞

∞−

−=+∞

∞−

=−= ττωτττωτωτω

ττ

dRdjRS x

RR

xx

xx

cossincos

( ) ( ) ( ) ( )[ ]

( ) ( )

( ) ( )∫∫

+∞

∞−

−=+∞

∞−

=+= ωτωω

π

ωτωτωω

π

τ

ωω

dSdjSR x

SS

xx

xx

cos

2

1

sincos

2

1

Therefore ( ) ( )ωπω faSx

2

=

q.e.d.

Fourier

Inverse

Fourier

( ) ( )∫

+∞

∞−

= ωωτω df

a

cos

2

2

f (ω) definition

( )ωS=

Given a function S (ω)= S (-ω) or, equivalently, a positive-defined function R (τ),

(R (τ) = R (-τ), and R (0)=max R (τ), for all τ ), we can find a stochastic process x (t)

having S (ω) as its power spectrum or R (τ) as its autocorrelation.](https://image.slidesharecdn.com/3-recursivebayesianestimation-150113075152-conversion-gate02/85/3-recursive-bayesian-estimation-72-320.jpg)

![77

Recursive Bayesian EstimationSOLO

( ) ( ) ( )

( ) ( )kvkkk

xkkwkkkk

vpgivenvxhz

xpuwpgivenwuxfx

:

,,:, 011111 0

+=

+= −−−−−

kx1−kx

kz1−kz

( ) 111, −−− + kkk wuxf

( ) kk vxh +

Markov Processes

( ) ( )[ ]111 ,| −−− −= kkkwkk uxfxpxxptherefore

( ) ( )[ ]kkvkk xhzpxzp −=|and

For additive noise

we have

( )

( )kkk

kkkk

xhzv

uxfxw

−=

−= −−− 111 ,

Analytic Computations of and (continue – 1)( )kk xzp |( )1| −kk xxp](https://image.slidesharecdn.com/3-recursivebayesianestimation-150113075152-conversion-gate02/85/3-recursive-bayesian-estimation-77-320.jpg)

![SOLO

Stochastic Processes deal with systems corrupted by noise. A description of those processes is

given in “Stochastic Processes” Presentation. Here we give only one aspect of those processes.

( ) ( ) ( ) [ ]fttttwddttxftxd ,, 0∈+=

A continuous dynamic system is described by:

Stochastic Processes

( )tx - n- dimensional state vector

( )twd - n- dimensional process noise vector

Assuming system measurements at discrete time tk given by:

( ) ( )( ) [ ]fkkkkk tttvttxhtz ,,, 0∈=

kv - m- dimensional measurement noise vector at tk

We are interested in the probability of the state at time t given the set of discrete

measurements until (included) time tk < t.

x

( )kZtxp |,

{ }kk zzzZ ,,, 21 = - set of all measurements up to and including time tk.

The time evolution of the probability density function is described by the

Fokker–Planck equation.](https://image.slidesharecdn.com/3-recursivebayesianestimation-150113075152-conversion-gate02/85/3-recursive-bayesian-estimation-80-320.jpg)

![A solution to the one-dimensional

Fokker–Planck equation, with both the

drift and the diffusion term. The initial

condition is a Dirac delta function in

x = 1, and the distribution drifts

towards x = 0.

The Fokker–Planck equation describes the time evolution of

the probability density function of the position of a particle, and

can be generalized to other observables as well. It is named after

Adriaan Fokker and Max Planck and is also known as the

Kolmogorov forward equation. The first use of the Fokker–

Planck equation was the statistical description of Brownian

motion of a particle in a fluid.

In one spatial dimension x, the Fokker–Planck equation for a

process with drift D1(x,t) and diffusion D2(x,t) is

More generally, the time-dependent probability distribution

may depend on a set of N macrovariables xi. The general

form of the Fokker–Planck equation is then

where D1

is the drift vector and D2

the diffusion tensor; the latter results from the presence of the

stochastic force.

Fokker – Planck Equation

Adriaan Fokker

1887 - 1972

Max Planck

1858 - 1947

SOLO

Adriaan Fokker

„Die mittlere Energie rotierender

elektrischer Dipole im Strahlungsfeld"

Annalen der Physik 43, (1914) 810-

820

Max Plank, „Ueber einen Satz der

statistichen Dynamik und eine

Erweiterung in der Quantumtheorie“,

Sitzungberichte der Preussischen

Akadademie der Wissenschaften

(1917) p. 324-341

Stochastic Processes

( ) ( ) ( )[ ] ( ) ( )[ ]txftxD

x

txftxD

x

txf

t

,,,,, 22

2

1

∂

∂

+

∂

∂

−=

∂

∂

( )[ ] ( )[ ]∑∑∑ = == ∂∂

∂

+

∂

∂

−=

∂

∂ N

i

N

j

Nji

ji

N

i

Ni

i

ftxxD

xx

ftxxD

x

f

t 1 1

1

2

2

1

1

1

,,,,,, ](https://image.slidesharecdn.com/3-recursivebayesianestimation-150113075152-conversion-gate02/85/3-recursive-bayesian-estimation-81-320.jpg)

![Fokker – Planck Equation (continue – 1)

The Fokker–Planck equation can be used for computing the probability densities of stochastic

differential equations.

where is the state and is a standard M-dimensional Wiener process. If the initial

probability distribution is , then the probability distribution of the state

is given by the Fokker – Planck Equation with the drift and diffusion terms:

Similarly, a Fokker–Planck equation can be derived for Stratonovich stochastic differential

equations. In this case, noise-induced drift terms appear if the noise strength is state-dependent.

SOLO

Consider the Itô stochastic differential equation:

( ) ( ) ( )[ ] ( ) ( )[ ]txftxD

x

txftxD

x

txf

t

,,,,, 22

2

1

∂

∂

+

∂

∂

−=

∂

∂](https://image.slidesharecdn.com/3-recursivebayesianestimation-150113075152-conversion-gate02/85/3-recursive-bayesian-estimation-82-320.jpg)

![Fokker – Planck Equation (continue – 2)

Derivation of the Fokker–Planck Equation

SOLO

Start with ( ) ( ) ( )11|1, 111

|, −−− −−−

= kxkkxxkkxx xpxxpxxp kkkkk

and ( ) ( ) ( ) ( )∫∫

+∞

∞−

−−−

+∞

∞−

−− −−−

== 111|11, 111

|, kkxkkxxkkkxxkx xdxpxxpxdxxpxp kkkkkk

define ( ) ( )ttxxtxxttttt kkkk ∆−==∆−== −− 11 ,,,

( ) ( )[ ] ( ) ( ) ( ) ( )[ ] ( ) ( )[ ] ( )∫

+∞

∞−

∆−∆− ∆−∆−∆−= ttxdttxpttxtxptxp ttxttxtxtx ||

Let use the Characteristic Function of

( ) ( ) ( ) ( ) ( )[ ]{ } ( ) ( ) ( ) ( )[ ] ( ) ( ) ( ) ( )ttxtxtxtxdttxtxpttxtxss ttxtxttxtx ∆−−=∆∆−∆−−−=Φ ∫

+∞

∞−

∆−∆−∆ |exp: ||

( ) ( ) ( ) ( )[ ]ttxtxp ttxtx ∆−∆− ||

The inverse transform is ( ) ( ) ( ) ( )[ ] ( ) ( )[ ]{ } ( ) ( ) ( )∫

∞+

∞−

∆−∆∆− Φ∆−−=∆−

j

j

ttxtxttxtx sdsttxtxs

j

ttxtxp || exp

2

1

|

π

Using Chapman-Kolmogorov Equation we obtain:

( ) ( )[ ] ( ) ( )[ ]{ } ( ) ( ) ( )

( ) ( ) ( ) ( )[ ]

( ) ( )[ ] ( )

( ) ( )[ ]{ } ( ) ( ) ( ) ( ) ( )[ ] ( )ttxdsdttxpsttxtxs

j

ttxdttxpsdsttxtxs

j

txp

j

j

ttxttxtx

ttx

ttxtxp

j

j

ttxtxtx

ttxtx

∆−∆−Φ∆−−=

∆−∆−Φ∆−−=

∫ ∫

∫ ∫

∞+

∞−

∞+

∞−

∆−∆−∆

+∞

∞−

∆−

∆−

∞+

∞−

∆−∆

∆−

|

|

|

exp

2

1

exp

2

1

|

π

π

Stochastic Processes](https://image.slidesharecdn.com/3-recursivebayesianestimation-150113075152-conversion-gate02/85/3-recursive-bayesian-estimation-83-320.jpg)

![Fokker – Planck Equation (continue – 3)

Derivation of the Fokker–Planck Equation (continue – 1)

SOLO

The Characteristic Function can be expressed in terms of the moments about x (t-Δt) as:

( ) ( )[ ] ( ) ( )[ ]{ } ( ) ( ) ( ) ( ) ( )[ ] ( )ttxdsdttxpsttxtxs

j

txp

j

j

ttxttxtxtx ∆−∆−Φ∆−−= ∫ ∫

+∞

∞−

∞+

∞−

∆−∆−∆ |exp

2

1

π

( ) ( ) ( ) ( )

( ) ( ) ( ) ( )[ ] ( ){ }∑

∞

=

∆−∆∆−∆ ∆−∆−−

−

+=Φ

1

|| |

!

1

i

i

ttxtx

i

ttxtx ttxttxtxE

i

s

s

Therefore

( ) ( )[ ] ( ) ( )[ ]{ } ( )

( ) ( ) ( ) ( )[ ] ( ){ } ( ) ( )[ ] ( )ttxdsdttxpttxttxtxE

i

s

ttxtxs

j

txp

j

j

ttx

i

i

ttxtx

i

tx ∆−∆−

∆−∆−−

−

+∆−−= ∫ ∫ ∑

+∞

∞−

∞+

∞−

∆−

∞

=

∆−

1

| |

!

1exp

2

1

π

Use the fact that ( ) ( ) ( )[ ]{ } ( ) ( ) ( )[ ]

( )[ ]

,2,1,01exp

2

1

=

∂

∆−−∂

−=∆−−−∫

∞+

∞−

i

tx

ttxtx

sdttxtxss

j i

i

i

j

j

i δ

π

( ) ( )[ ] ( ) ( )[ ]{ } ( ) ( )[ ] ( )

( ) ( ) ( )[ ]

( )[ ]

( ) ( )[ ] ( ){ } ( ) ( )[ ] ( )∫∑

∫ ∫

∞+

∞−

∞

=

∆−

+∞

∞−

∆−

∞+

∞−

∆−∆−∆−∆−−

∂

∆−−∂−

+

∆−∆−∆−−=

1

|

!

1

exp

2

1

i

ttx

i

i

ii

ttx

j

j

tx

ttxdttxpttxttxtxE

tx

ttxtx

i

ttxdttxpsdttxtxs

j

txp

δ

π

where δ [u] is the Dirac delta function:

[ ] { } ( ) [ ] ( ) ( ) ( ) ( ) ( )000..0exp

2

1

FFFtsuFFduuuFsdus

j

u

j

j

==∀== −+

+∞

∞−

∞+

∞−

∫∫ δ

π

δ

Stochastic Processes](https://image.slidesharecdn.com/3-recursivebayesianestimation-150113075152-conversion-gate02/85/3-recursive-bayesian-estimation-84-320.jpg)

![Fokker – Planck Equation (continue – 4)

Derivation of the Fokker–Planck Equation (continue – 2)

SOLO

[ ] ( ){ } ( ) [ ] ( ) ( ) ( ) ( ) ( )afafaftsufufduuaufsduas

j

ua au

j

j

==∀=−−=− −+=

+∞

∞−

∞+

∞−

∫∫ ..exp

2

1

δ

π

δ

[ ] ( ){ } ( ) ( ) { } ( ) ( ) { }∫∫∫

∞+

∞−

∞+

∞−

∞+

∞−

=→=−

−

=−

j

j

j

j

j

j

sdussFs

j

uf

du

d

sdussF

j

ufsduass

j

ua

ud

d

exp

2

1

exp

2

1

exp

2

1

πππ

δ

( ) [ ] ( ) ( ){ } ( ) ( ){ }

{ } ( ) { } { } ( ) ( )

au

j

j

j

j

j

j

j

j

ud

ufd

sdsFass

j

sdduusufass

j

sdduuasufs

j

dusduass

j

ufduua

ud

d

uf

=

∞+

∞−

∞+

∞−

∞+

∞−

∞+

∞−

+∞

∞−

+∞

∞−

∞+

∞−

+∞

∞−

−=

−

=−

−

=

−

−

=−

−

=−

∫∫ ∫

∫ ∫∫ ∫∫

exp

2

1

expexp

2

1

exp

2

1

exp

2

1

ππ

ππ

δ

[ ] ( ) ( ){ } ( ) ( ) { } ( ) ( ) { }∫∫∫

∞+

∞−

∞+

∞−

∞+

∞−

=→=−

−

=−

j

j

i

i

ij

j

j

j

i

i

i

i

sdussFs

j

uf

du

d

sdussF

j

ufsduass

j

ua

ud

d

exp

2

1

exp

2

1

exp

2

1

πππ

δ

( ) [ ] ( ) ( ) ( ){ } ( ) ( ) ( ){ }

( ) { } ( ) { } ( ) ( ) { } ( ) ( )

au

i

i

i

j

j

i

ij

j

i

i

j

j

i

ij

j

i

i

i

i

ud

ufd

sdassFs

j

sdduusufass

j

sdduuasufs

j

dusduass

j

ufduua

ud

d

uf

=

−=

−

=−

−

=

−

−

=−

−

=−

∫∫ ∫

∫ ∫∫ ∫∫

∞+

∞−

∞+

∞−

∞+

∞−

∞+

∞−

+∞

∞−

+∞

∞−

∞+

∞−

+∞

∞−

1exp

2

1

expexp

2

1

exp

2

1

exp

2

1

ππ

ππ

δ

Useful results related to integrals involving Delta (Dirac) function

Stochastic Processes](https://image.slidesharecdn.com/3-recursivebayesianestimation-150113075152-conversion-gate02/85/3-recursive-bayesian-estimation-85-320.jpg)

![Fokker – Planck Equation (continue – 5)

Derivation of the Fokker–Planck Equation (continue – 3)

SOLO

( ) ( )[ ]{ }

( ) ( )[ ]

( ) ( )[ ] ( ) ( ) ( )[ ] ( ) ( )[ ] ( ) ( ) ( )[ ]txpttxdttxpttxtxttxdttxpsdttxtxs

j

ttxttxttx

ttxtx

j

j

∆−

+∞

∞−

∆−

+∞

∞−

∆−

∆−−

∞+

∞−

=∆−∆−∆−−=∆−∆−∆−− ∫∫ ∫ δ

π

δ

exp

2

1

( ) ( ) ( )[ ]

( )[ ] ( ) ( ) ( ) ( )[ ] ( ){ } ( ) ( )[ ] ( )

( ) ( ) ( )[ ]

( )[ ] ( ) ( ) ( ) ( )[ ] ( ){ } ( ) ( )[ ] ( )

( ) ( ) ( ) ( ) ( )[ ] ( ){ } ( ) ( )[ ]( )

( )[ ]∑

∑ ∫

∫∑

∞

=

=∆

∆−∆−

∞

=

∞+

∞−

∆−∆−

+∞

∞−

∞

=

∆−∆−

∂

∆−∆−−∂−

=

∆−∆−∆−∆−−

∂

∆−−∂−

=

∆−∆−∆−∆−−

∂

∆−−∂−

1

0

|

1

|

1

|

|

!

1

|

!

1

|

!

1

i

t

i

ttx

i

ttxtx

ii

i

ttx

i

ttxtxi

ii

i

ttx

i

ttxtxi

ii

tx

txpttxttxtxE

i

ttxdttxpttxttxtxE

tx

ttxtx

i

ttxdttxpttxttxtxE

tx

ttxtx

i

δ

δ

( ) [ ] ( ) ( ) ( )

[ ]

[ ] ( )

auau

i

i

i

i

i

i

i

i

i

ud

ufd

duua

uad

d

uf

ud

ufd

duua

ud

d

uf

==

=−

−

→−=− ∫∫

+∞

∞−

+∞

∞−

δδ 1We found

( ) ( )[ ] ( ) ( )[ ] ( ) ( ) ( ) ( ) ( )[ ] ( ){ } ( ) ( )[ ]( )

( )[ ]∑

∞

=

=∆

∆−∆−

∆−

∂

∆−∆−−∂−

+=

1

0

| |

!

1

i

t

i

ttx

i

ttxtx

ii

ttxtx

tx

txpttxttxtxE

i

txptxp

( ) ( )[ ] ( ) ( )[ ] ( ) ( ) ( )[ ] ( ){ } ( ) ( )[ ]( )

( )[ ]∑

∞

=

∆−

→∆

∆−

→∆ ∂

∆−∆−−∂

∆

−

=

∆

−

1

00

|1

lim

!

1

lim

i

i

ttx

ii

t

i

ttxtx

t tx

txpttxttxtxE

tit

txptxp

Therefore

Rearranging, dividing by Δt, and tacking the limit Δt→0, we obtain:

Stochastic Processes](https://image.slidesharecdn.com/3-recursivebayesianestimation-150113075152-conversion-gate02/85/3-recursive-bayesian-estimation-86-320.jpg)

![Fokker – Planck Equation (continue – 6)

Derivation of the Fokker–Planck Equation (continue – 4)

SOLO

We found ( ) ( )[ ] ( ) ( )[ ] ( ) ( ) ( ) ( ) ( )[ ] ( ){ } ( ) ( )[ ]( )

( )[ ]∑

∞

=

∆−∆−

→∆

∆−

→∆ ∂

∆−∆−−∂

∆

−

=

∆

−

1

|

00

|1

lim

!

1

lim

i

i

ttx

i

ttxtx

i

t

i

ttxtx

t tx

txpttxttxtxE

tit

txptxp

Define: ( ) ( )[ ] ( ) ( ) ( ) ( )[ ] ( ){ }

t

ttxttxtxE

txtxm

i

ttxtx

t

i

∆

∆−∆−−

=−

∆−

→∆

−

|

lim:

|

0

Therefore ( ) ( )[ ] ( ) ( ) ( )[ ] ( ) ( )[ ]( )

( )[ ]∑

∞

=

−

∂

−∂−

=

∂

∂

1 !

1

i

i

tx

iii

tx

tx

txptxtxm

it

txp

( ) ( )ttxtx

t

∆−=

→∆

−

0

lim: and:

This equation is called the Stochastic Equation or Kinetic Equation.

It is a partial differential equation that we must solve, with the initial condition:

( ) ( )[ ] ( )[ ]000 0 txptxp tx ===

Stochastic Processes](https://image.slidesharecdn.com/3-recursivebayesianestimation-150113075152-conversion-gate02/85/3-recursive-bayesian-estimation-87-320.jpg)

![Fokker – Planck Equation (continue – 7)

Derivation of the Fokker–Planck Equation (continue – 5)

SOLO

We want to find px(t) [x(t)] where x(t) is the solution of

( ) ( ) ( ) [ ]fg ttttntxf

dt

txd

,, 0∈+=

( ){ } 0: == tnEn gg

( )tng

( ) ( )[ ] ( ) ( )[ ]{ } ( ) ( )τδττ −=−− ttQnntntnE gggg

ˆˆ

Wiener (Gauss) Process

( ) ( )[ ] ( ) ( )[ ] ( ){ } [ ] ( ){ } [ ]{ } ( )tQnEtxnE

t

ttxttxtxE

txtxm gg

t

===

∆

∆−∆−−

=−

→∆

−

22

2

2

0

2

|

|

lim:

( ) ( )[ ] ( ) ( )[ ] ( ){ } ( ) ( ) ( ) ( ) ( )txfnEtxftx

td

txd

E

t

ttxttxtxE

txtxm g

t

,,|

|

lim:

0

0

1

=+=

=

∆

∆−∆−−

=−

→∆

−

( ) ( )[ ] ( ) ( )[ ] ( ){ } 20

|

lim:

0

>=

∆

∆−∆−−

=−

→∆

− i

t

ttxttxtxE

txtxm

i

t

i

Therefore we obtain:

( ) ( )[ ] ( )[ ] ( ) ( )[ ]( )

( )

( ) ( ) ( )[ ]

( )[ ]2

2

2

1,

tx

txp

tQ

tx

txpttxf

t

txp txtxtx

∂

∂

+

∂

∂

−=

∂

∂

Stochastic Processes

Fokker–Planck Equation

Return to Daum](https://image.slidesharecdn.com/3-recursivebayesianestimation-150113075152-conversion-gate02/85/3-recursive-bayesian-estimation-88-320.jpg)

![99

Recursive Bayesian EstimationSOLO

Linear Gaussian Markov Systems (continue – 3)

111111 −−−−−− Γ++Φ= kkkkkkk wuGxx

Prediction phase (before zk measurement)

{ } { } { }

0

1:111111:1111:11| |||:ˆ −−−−−−−−−− Γ++Φ== kkkkkkkkkkkk ZwEuGZxEZxEx

or 111|111|

ˆˆ −−−−−− +Φ= kkkkkkk uGxx

The expectation is

{ }[ ] { }[ ]{ }

( )[ ] ( )[ ]{ }1:1111|111111|111

1:11|1|1|

|ˆˆ

|ˆˆ:

−−−−−−−−−−−−−

−−−−

Γ+−ΦΓ+−Φ=

−−=

k

T

kkkkkkkkkkkk

k

T

kkkkkkkk

ZwxxwxxE

ZxExxExEP

( ) ( ){ } ( ){ }

( ){ } { } T

k

Q

T

kkk

T

k

T

kkkkk

T

k

T

kkkkk

T

k

P

T

kkkkkkk

wwExxwE

wxxExxxxE

kk

11111

0

1|1111

1

0

11|11111|111|111

ˆ

ˆˆˆ

1|1

−−−−−−−−−−

−−−−−−−−−−−−−−

ΓΓ+Φ−Γ+

Γ−Φ+Φ−−Φ=

−−

T

kk

T

kkkkkk QPP 1111|111| −−−−−−− ΓΓ+ΦΦ=

{ } ( )1|1|1:1 ,ˆ;| −−− = kkkkkkk PxxZxP N

Since is a Linear Combination of Independent

Gaussian Random Variables:

111111 −−−−−− Γ++Φ= kkkkkkk wuGxx](https://image.slidesharecdn.com/3-recursivebayesianestimation-150113075152-conversion-gate02/85/3-recursive-bayesian-estimation-99-320.jpg)

![101

( ) ( )kkkv Rvvp ,0;N=

kkkk vxHz +=

Consider a Gaussian vector , where ,

measurement, , where the Gaussian noise

is independent of and .

v

kx ( ) [ ]1|1| ,; −−= kkkkkkx Pxxxp

N

kx

( ) ( ) ( ) ( )∫∫

+∞

∞−

+∞

∞−

== kkxkkxzkkkzxkz xdxpxzpxdzxpzp |, |,

is Gaussian with( )kz zp ( ) ( ) ( ) ( ) 1|

0

−=+=+= kkkkkkkkk xHvExEHvxHEzE

( ) ( )[ ] ( )[ ]{ } [ ][ ]{ }

( )[ ] ( )[ ]{ } [ ]{ }

[ ]{ } [ ]{ } { } k

T

kkkk

T

kk

T

k

T

kkkk

T

kkkkk

T

k

T

kkkkkkk

T

kkkkkkkkkk

T

kkkkkkkkkkkk

T

kkkkk

RHPHvvEHxxvEvxxEH

HxxxxEHvxxHvxxHE

xHvxHxHvxHEzEzzEzEz

+=+−−−−

−−=+−+−=

−+−+=−−=

−−−

−−−−

−−

1|

0

1|

0

1|

1|1|1|1|

1|1|cov

( )

( ) ( )

( )[ ] ( )[ ] ( )[ ]

−−+−−−−

+−

=

−

xHzRHPHxHz

RHPH

zp TT

Tpz

ˆˆ

2

1

exp

2

1 1

2/12/

π

( )

( )

( ) ( )

−−−= −

−

−−

−

−− 1|

1

1|1|2/1

1|

2/1:1|

2

1

exp

2

1

|1:1 kkkkk

T

kkk

kk

nkkZx xxPxx

P

Zxp kk

π

( ) ( )

( )

( ) ( )

−−−=−= −

kkk

T

kkkpkkkvkkxz xHzRxHz

R

xHzpxzp 1

2/12/|

2

1

exp

2

1

|

π

Recursive Bayesian EstimationSOLO

Linear Gaussian Markov Systems (continue – 5)

Correction Step (after zk measurement) 1st

Way (continue – 1)](https://image.slidesharecdn.com/3-recursivebayesianestimation-150113075152-conversion-gate02/85/3-recursive-bayesian-estimation-101-320.jpg)

![102

Recursive Bayesian EstimationSOLO

Linear Gaussian Markov Systems (continue – 6)

kkkk vxHz +=

( ) ( )Rvvpv ,0;N=

( )

( )

−= −

vRv

R

vp T

pv

1

2/12/

2

1

exp

2

1

π

Correction Step (after zk measurement) 1st

Way (continue – 2)

( )

( )

( ) ( )

−−−= −

−

−−

−

−− 1|

1

1|1|2/1

1|

2/1:1|

2

1

exp

2

1

|1:1 kkkkk

T

kkk

kk

nkkZx xxPxx

P

Zxp kk

π

( ) ( )

( )

( ) ( )

−−−=−= −

kkk

T

kkkpkkkvkkxz xHzRxHz

R

xHzpxzp 1

2/12/|

2

1

exp

2

1

|

π

( )

( )

[ ] [ ] [ ]

−+−−

+

= −

−

−−

−

1|

1

1|1|2/1

1|

2/

ˆˆ

2

1

exp

2

1

kkkk

T

kkkk

T

kkk

k

T

kkkk

p

kz xHzRHPHxHz

RHPH

zp

π

( ) ( ) ( )

( )

( )

( ) ( ) ( ) ( ) [ ] [ ] [ ]

−+−+−−−−−−⋅

+

==

−

−

−−−

−

−−

−

−

−−

−

1|

1

1|1|1|

1

1|1|

1

2/1

1|

2/12/1

1|2/1:1

1:1

:1

ˆˆ

2

1

2

1

2

1

exp

2

1

|

||

|

kkkkk

T

kkkk

T

kkkkkkkkk

T

kkkkkkk

T

kkk

k

T

kkkk

kkknkk

kkkk

kk

xHzRHPHxHzxxPxxxHzRxHz

RHPH

RPZzp

Zxpxzp

Zxp

π

from which](https://image.slidesharecdn.com/3-recursivebayesianestimation-150113075152-conversion-gate02/85/3-recursive-bayesian-estimation-102-320.jpg)

![103

( ) ( ) ( ) ( ) ( ) [ ] ( )1|

1

1|1|1|

1

1|1|

1

−

−

−−−

−

−−

−

−+−−−−+−− kkkk

T

kkkkk

T

kkkkkkkkk

T

kkkkkkk

T

kkk xHzHPHRxHzxxPxxxHzRxHz

( )[ ] ( )[ ] ( ) ( )

( ) [ ] ( ) ( ) [ ]{ }( )

( ) ( ) ( ) ( ) ( ) [ ]( )1|

11

1|1|1|

1

1|1|

1

1|

1|

1

1|

1

1|1|

1

1|1|

1|

1

1|1|1|1|

1

1|1|

−

−−

−−−

−

−−

−

−

−

−

−

−

−−

−

−−

−

−

−−−−

−

−−

−+−+−−−−−−

−+−−=−+−−

−−+−−−−−−=

kkkkk

T

kkk

T

kkkkkkkk

T

kkkkkkkkk

T

k

T

kkk

kkkk

T

kkkkkk

T

kkkkkkkk

T

kkkkk

T

kkkk

kkkkk

T

kkkkkkkkkkkk

T

kkkkkkkk

xxHRHPxxxxHRxHzxHzRHxx

xHzHPHRRxHzxHzHPHRxHz

xxPxxxxHxHzRxxHxHz

[ ] [ ] 1111

1|

1111

1|

1 −−−−

−

−−−−

−

−

++/−/=+− k

T

kkk

T

kkkkkkk

LemmaMatrixInverse

T

kkkkkk RHHRHPHRRRHPHRRwe have

Define:

[ ] [ ] 1

1|

1

1|

1

1|

1

1|

111

1|| :

−

−

−

−

−

−

−

−

−−−

− +−=+= kk

T

k

T

kkkkkkkkkk

LemmaMatrixInverse

kk

T

kkkkk PHHPHRHPPHRHPP

( )[ ] ( )[ ]1|

1

|1|

1

|1|

1

|1| −

−

−

−

−

−

− −+−−+−= kkkkk

T

kkkkkkkk

T

kkkkk

T

kkkkkk xHzRHPxxPxHzRHPxx

( )

( )

( )[ ] ( )[ ]

−+−−+−−⋅= −

−

−

−

−

−

− 1|

1

|1|

1

|1|

1

|1|2/1

|

2/:1|

2

1

exp

2

1

| kkkkk

T

kkkkkkkk

T

kkkkk

T

kkkkkk

kk

nkkzx xHzRHPxxPxHzRHPxx

P

Zxp

π

Recursive Bayesian EstimationSOLO

Linear Gaussian Markov Systems (continue – 7)

Correction Step (after zk measurement) 1st

Way (continue – 3)

then ( ) ( ) ( ) ( ) ( ) [ ] ( )1|

1

1|1|1|

1

1|1|

1

−

−

−−−

−

−−

−

−+−−−−+−− kkkkk

T

kkkk

T

kkkkkkkkk

T

kkkkkkk

T

kkk xHzRHPHxHzxxPxxxHzRxHz

( ) ( ) ( ) ( ) ( ) ( )

( ) ( )( ) ( ) ( )1|

1

|1|1|

1

||

1

1|

1|

1

|

1

|1|1|

1

|

1

||

1

1|

−

−

−−

−−

−

−

−−

−−

−−−

−

−−+−−−

−−−−−=

kkkkk

T

kkkkkkkkkkkk

T

kkkk

kkkkk

T

kkkkk

T

kkkkkkkk

T

kkkkkkkkk

T

kkkk

xxPxxxxPPHRxHz

xHzRHPPxxxHzRHPPPHRxHz

](https://image.slidesharecdn.com/3-recursivebayesianestimation-150113075152-conversion-gate02/85/3-recursive-bayesian-estimation-103-320.jpg)

![104

then

( )kkzx

x

Zxp

k

:1| |max

( )

{ }kk

kkkkk

T

kkkkkkkk

ZxE

xHzRHPxxx

:1

1|

1

|1|

*

|

|

ˆˆ:ˆ

=

−+== −

−

−

Recursive Bayesian EstimationSOLO

Linear Gaussian Markov Systems (continue – 8)

Correction Step (after zk measurement) 1st

Way (continue – 4)

( )

( )

( )[ ] ( )[ ]

−+−−+−−⋅= −

−

−

−

−

−

− 1|

1

1|

1

|1|

1

1|2/1

|

2/:1|

2

1

exp

2

1

| kkkkk

T

kkkkkk

T

kkkkk

T

kkkk

kk

nkkzx xHzRHxxPxHzRHxx

P

Zxp

π

where:[ ] ( )( ){ }k

T

kkkkkkkk

T

kkkkk ZxxxxEHRHPP :1||

111

1||

ˆˆ: −−=+=

−−−

−](https://image.slidesharecdn.com/3-recursivebayesianestimation-150113075152-conversion-gate02/85/3-recursive-bayesian-estimation-104-320.jpg)

![105

{ } ( ) ( )

ki

kkkkkkkkkkkkkkkkk zzKxxHzKxZxEx 1|1|1|1|:1| ˆ| −−−− −+=−+==

Recursive Bayesian EstimationSOLO

Linear Gaussian Markov Systems (continue – 9)

Summary 1st

Way – Kalman Filter

Initial Conditions:

[ ] 111

1|| :

−−−

− += kk

T

kkkkk HRHPP

Prediction phase (before zk measurement)

111|111|

ˆˆ −−−−−− +Φ= kkkkkkk uGxx

Correction Step (after zk measurement)

T

kk

T

kkkkkk QPP 1111|111| −−−−−−− ΓΓ+ΦΦ=

1

|:

−

= k

T

kkkk RHPK

{ }00|0

ˆ xEx = ( ) ( ){ }T

xxxxEP 0|000|000|0

ˆˆ: −−=

kkkk wxHz += { } { } { }

0

1:11|1:11:11| |ˆ||ˆ −−−−− +=+== kkkkkkkkkkkkk ZwExHZwxHEZzEz

1|1|

ˆˆ −− = kkkkk xHz](https://image.slidesharecdn.com/3-recursivebayesianestimation-150113075152-conversion-gate02/85/3-recursive-bayesian-estimation-105-320.jpg)

![106

Recursive Bayesian EstimationSOLO

Linear Gaussian Markov Systems (continue – 10)

kkkk vxHz +=

( ) ( )Rvvpv ,0;N= ( )

( )

−= −

vRv

R

vp T

pv

1

2/12/

2

1

exp

2

1

π

( )

( )

[ ] [ ] [ ]

−+−−

+

= −

−

−−

−

1|

1

1|1|2/1

1|

2/

ˆˆ

2

1

exp

2

1

kkkkk

T

kkkk

T

kkkk

k

T

kkkk

p

kz xHzRHPHxHz

RHPH

zp

π

from which { } 1|1:11|

ˆ|ˆ −−− == kkkkkkk xHZzEz

( ) ( ){ } kk

T

kkkkk

T

kkkkkk

zz

kk SRHPHZzzzzEP =+=−−= −−−−− :ˆˆ 1|1:11|1|1|

[ ][ ]{ }

[ ] ( )[ ]{ } T

kkkk

T

kkkkkkkk

k

T

kkkkkk

xz

kk

HPZvxxHxxE

ZzzxxEP

1|1:11|1|

1:11|1|1|

ˆˆ

ˆˆ

−−−−

−−−−

=+−−=

−−=

We also have

Correction Step (after zk measurement) 2nd

Way

Define the innovation: 1|1|

ˆˆ: −− −=−= kkkkkk xHzzzi](https://image.slidesharecdn.com/3-recursivebayesianestimation-150113075152-conversion-gate02/85/3-recursive-bayesian-estimation-106-320.jpg)

![107

Recursive Bayesian EstimationSOLO

Joint and Conditional Gaussian Random Variables

=

k

k

k

z

x

yDefine: assumed that they are Gaussian distributed

Prediction phase (before zk measurement) 2nd

way (continue -1)

{ }

=

=

−

−

−

−

−

1|

1|

1:1

1:1

1:1

ˆ

ˆ

|

|

|

kk

kk

kk

kk

kk

z

x

Zz

Zx

EZyE

=

−

−

−

−

=

−−

−−

−

−

−

−

−

− zz

kk

zx

kk

xz

kk

xx

kk

k

T

kkk

kkk

kkk

kkkyy

kk

PP

PP

Z

zz

xx

zz

xx

EP

1|1|

1|1|

1:1

1|

1|

1|

1|

1|

ˆ

ˆ

ˆ

ˆ

where: [ ][ ]{ } 1|1:11|1|1|

ˆˆ −−−−− =−−= kkk

T

kkkkkk

xx

kk PZxxxxEP

[ ][ ]{ } kk

T

kkkkk

T

kkkkkk

zz

kk SRHPHZzzzzEP =+=−−= −−−−− :ˆˆ 1|1:11|1|1|

[ ][ ]{ } T

kkkk

T

kkkkkk

xz

kk HPZzzxxEP 1|1:11|1|1| ˆˆ −−−−− =−−=

Linear Gaussian Markov Systems (continue – 11)](https://image.slidesharecdn.com/3-recursivebayesianestimation-150113075152-conversion-gate02/85/3-recursive-bayesian-estimation-107-320.jpg)

![111

Recursive Bayesian EstimationSOLO

Joint and Conditional Gaussian Random Variables

Prediction phase (before zk measurement) 2nd

way (continue – 5)

=

−−

−−

−

−−

−−

zz

kk

zx

kk

xz

kk

xx

kk

zz

kk

zx

kk

xz

kk

xx

kk

TT

TT

PP

PP

1|1|

1|1|

1

1|1|

1|1|

1

1|1|1|

1

1|

1|

1

1|1|1|

1

1|

1|

1

1|1|1|

1

1|

−

−−−

−

−

−

−

−−−

−

−

−

−

−−−

−

−

−=

−=

−=

zz

kk

xz

kk

xz

kk

xx

kk

xz

kk

xx

kk

zx

kk

zz

kk

zz

kk

kkzxkkzzkkxzkkxxkkxx

PPTT

TTTTP

PPPPT

( ) ( )k

xz

kk

xx

kkk

xx

kk

T

k

xz

kk

xx

kkk TTTTTq ςξςξ 1|

1

1|1|1|

1

1| −

−

−−−

−

− ++=

1|1| ˆ:&ˆ: −− −=−= kkkkkkkk zzxx ςξ

( )

( )[ ] ( )[ ]

−−−−−−−=

−=

−−−−−

−

−

−

−

1|1|1|1|1|2/1

1|

2/1

1|

2/1

1|

2/1

1|

|

ˆˆˆˆ

2

1

exp

2

2

2

1

exp

2

2

|

kkkkkkk

xx

kk

T

kkkkkkk

yy

kk

zz

kk

yy

kk

zz

kk

kkzx

zzKxxTzzKxx

P

P

q

P

P

zxp

π

π

π

π

( )1|

1

1|1|1|

1

1|1| ˆˆ −

−

−−−

−

−− −−−=+ kkk

K

zz

kk

xz

kkkkkk

xx

kk

xz

kkk zzPPxxTT

k

ςξ

Linear Gaussian Markov Systems (continue – 15)](https://image.slidesharecdn.com/3-recursivebayesianestimation-150113075152-conversion-gate02/85/3-recursive-bayesian-estimation-111-320.jpg)

![112

Recursive Bayesian EstimationSOLO

Joint and Conditional Gaussian Random Variables

Prediction phase (before zk measurement) 2nd

Way (continue – 6)

( ) ( )[ ] ( )[ ]

−−−−−−−= −

−

−−−−−

−

−−− 1|

1

1|1|1|1|1|

1

1|1|1|| ˆˆˆˆ

2

1

exp| kkk

xx

kk

xz

kkkkk

xx

kk

T

kkk

xx

kk

xz

kkkkkkkzx zzPPxxTzzPPxxczxp

From this we can see that

{ } ( )1|

1

1|1|1|| ˆˆˆ| −

−

−−− −+== kkk

K

zz

kk

xz

kkkkkkkk zzPPxxzxE

k

( )( ){ }

T

k

zz

kkk

xx

kk

zx

kk

zz

kk

xz

kk

xx

kk

xx

kkk

T

kkkkkk

xx

kk

KPKP

PPPPTZxxxxEP

1|1|

1|

1

1|1|1|

1

1|:1|||

ˆˆ

−−

−

−

−−−

−

−

−=

−==−−=

[ ][ ]{ } 1|1:11|1|1|

ˆˆ −−−−− =−−= kkk

T

kkkkkk

xx

kk PZxxxxEP

[ ][ ]{ } k

T

kkkkkk

T

kkkkkk

zz

kk SHPHRZzzzzEP =+=−−= −−−−− :ˆˆ 1|1:11|1|1|

[ ][ ]{ } T

kkkk

T

kkkkkk

xz

kk HPZzzxxEP 1|1:11|1|1| ˆˆ −−−−− =−−=

Linear Gaussian Markov Systems (continue – 16)](https://image.slidesharecdn.com/3-recursivebayesianestimation-150113075152-conversion-gate02/85/3-recursive-bayesian-estimation-112-320.jpg)

![113

Recursive Bayesian EstimationSOLO

Joint and Conditional Gaussian Random Variables

Prediction phase (before zk measurement) 2nd

Way (continue – 7)

From this we can see that

( ) [ ] 111

1|1|

1

1|1|1||

−−−

−−

−

−−− +=+−= kk

T

kkkkkk

T

kkkkk

T

kkkkkkk HRHPPHHPHRHPPP

( ) 1

1|

1

1|1|

1

1|1|

−

−

−

−−

−

−− =+== k

T

kkk

T

kkkkk

T

kkk

zz

kk

xz

kkk SHPHPHRHPPPK

Linear Gaussian Markov Systems (continue – 17)

kk

T

kkkkk KSKPP −= −1||

or

[ ][ ]{ } 1|1:11|1|1|

ˆˆ −−−−− =−−= kkk

T

kkkkkk

xx

kk PZxxxxEP

[ ][ ]{ } k

T

kkkkkk

T

kkkkkk

zz

kk SHPHRZzzzzEP =+=−−= −−−−− :ˆˆ 1|1:11|1|1|

[ ][ ]{ } T

kkkk

T

kkkkkk

xz

kk HPZzzxxEP 1|1:11|1|1| ˆˆ −−−−− =−−=](https://image.slidesharecdn.com/3-recursivebayesianestimation-150113075152-conversion-gate02/85/3-recursive-bayesian-estimation-113-320.jpg)

![114

We found that the optimal Kk is

[ ] 1

1|1|

−

−− +=

T

kkkkk

T

kkkk HPHRHPK

[ ] [ ] 1111

|1

11

&

1

|1 1

1|

1

−−−−

+

−−−

+ +−=+ −

−

− k

T

kkk

T

kkkkkk

LemmaMatrixInverse

existPR

T

kkkkk RHHRHPHRRHPHR

kkk

[ ] 1111

1|

1

1|

1

1|

−−−−

−

−

−

−

− +−= k

T

kkk

T

kkkkk

T

kkkk

T

kkkk RHHRHPHRHPRHPK

[ ]{ } [ ] 1111

|1

111

|1|1

−−−−

+

−−−

++ +−+= k

T

kkk

T

kkkkk

T

kkk

T

kkkkk RHHRHPHRHHRHPP

[ ] 1

|

1111

|1

−−−−−

+ =+= RHPRHHRHPK T

kkk

T

kkk

T

kkkk

If Rk

-1

and Pk|k-1

-1

exist:

Recursive Bayesian EstimationSOLO

Linear Gaussian Markov Systems (continue – 18)

Relation Between 1st

and 2nd

ways

2nd

Way

1st

Way = 2nd

Way](https://image.slidesharecdn.com/3-recursivebayesianestimation-150113075152-conversion-gate02/85/3-recursive-bayesian-estimation-114-320.jpg)

![115

1|1| ˆˆ: −− −=−= kkkkkkkk zzxHzi

Recursive Bayesian EstimationSOLO

Linear Gaussian Markov Systems (continue – 19)

Innovation

The innovation is the quantity:

We found that:

{ } ( ){ } { } 0ˆ||ˆ| 1|1:11:11|1:1 =−=−= −−−−− kkkkkkkkkk zZzEZzzEZiE

[ ][ ]{ } { } k

T

kkkkkk

T

kkk

T

kkkkkk SHPHRZiiEZzzzzE =+==−− −−−−− :ˆˆ 1|1:11:11|1|

Using the smoothing property of the expectation:

{ }{ } ( ) ( ) ( ) ( )

( )

( ) ( ) { }xEdxxpxdxdyyxpx

dxdyypyxpxdyypdxyxpxyxEE

x

X

x y

YX

x y

yxp

YYX

y

Y

x

YX

YX

==

=

=

=

∫∫ ∫

∫ ∫∫ ∫

∞+

−∞=

∞+

−∞=

∞+

−∞=

∞+

−∞=

∞+

−∞=

∞+

−∞=

∞+

−∞=

,

||

,

,

||

,

{ } { }{ }1:1 −= k

T

jk

T

jk ZiiEEiiEwe have:

Assuming, without loss of generality, that k-1 ≥ j, and innovation I (j) is

Independent on Z1:k-1, and it can be taken outside the inner expectation:

{ } { }{ } { } 0

0

1:11:1 =

== −−

T

jkkk

T

jk

T

jk iZiEEZiiEEiiE

](https://image.slidesharecdn.com/3-recursivebayesianestimation-150113075152-conversion-gate02/85/3-recursive-bayesian-estimation-115-320.jpg)

![122

Extended Kalman Filter

Sensor Data

Processing and

Measurement

Formation

Observation -

to - Track

Association

Input

Data Track Maintenance

( Initialization,

Confirmation

and Deletion)

Filtering and

Prediction

Gating

Computations

Samuel S. Blackman, " Multiple-Target Tracking with Radar Applications", Artech House,

1986

Samuel S. Blackman, Robert Popoli, " Design and Analysis of Modern Tracking Systems",

Artech House, 1999

SOLO

In the extended Kalman filter, (EKF) the state

transition and observation models need not be linear

functions of the state but may instead be (differentiable)

functions.

( ) ( ) ( )[ ] ( )kwkukxkfkx +=+ ,,1

( ) ( ) ( )[ ] ( )11,1,11 +++++=+ kkukxkhkz ν

State vector dynamics

Measurements

( ) ( ) ( ){ } ( ) ( ){ } ( )kPkekeEkxEkxke x

T

xxx =−= &:

( ) ( ) ( ){ } ( ) ( ){ } ( ) lk

T

www kQlekeEkwEkwke ,

0

&: δ=−=

( ) ( ){ } lklekeE

T

vw ,0 ∀=

=

≠

=

lk